
Question regarding the book Modern Approaches in Clinical Trials Using ...


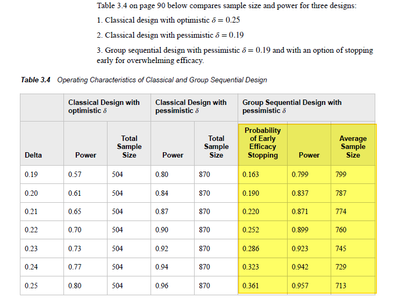

Hi all, I have recently been learning PROC SEQDESIGN from the book Modern Approaches in Clinical Trials Using SAS by Sandeep and Zink, but I found that I could not replicate the columns of one of its tables.

Here is the code I used to create the last three columns:

```sas
ods graphics on;

proc seqdesign altref=0.19
               errspend                  /* display cumulative error spending */
               pss(cref=0 0.5 1)         /* power and expected sample size at 0, 0.5 and 1 times altref */
               stopprob(cref=0 0.5 1)    /* stopping probabilities at the same references */
               plots=(asn power errspend)
               boundaryscale=stdZ;
   OneSidedErrorSpending: design nstages=2
                          method(alpha)=ERRFUNCGAMMA(GAMMA=-4)
                          alt=upper
                          stop=reject    /* stop early only to reject H0, i.e. for efficacy */
                          alpha=0.025
                          beta=0.2
                          info=cum(1 2); /* interim analysis at half of the maximum information */
   samplesize model=twosamplemean(stddev=1 weight=1);
run;

ods graphics off;
```

However, none of the results match the numbers in the screenshot from the book below.

For instance, the code above gives a sample size of 870, not the 799 shown in the book.

I also contacted the authors but have not received a reply.

Could anybody help me figure this out? Thank you in advance.

Accepted Solutions


@Jack2012 wrote:

(...) Why use different beta values? I still can't connect these values. Normally we specify a fixed power, i.e., 1 - beta, and then calculate the sample size and the corresponding "Early stop probability for efficacy at stage 1", but that logic doesn't seem to work here.

To me it looks like the authors tried to replicate the power values (0.80, 0.84, ..., 0.96 -- possibly, rounded to three decimals, these were really 0.799, 0.837, ..., 0.957?) from the "Classical Design with pessimistic d" using the "Group Sequential Design with pessimistic d" in order to obtain "Average Sample Size" values comparable to the (constant) "Total Sample Size" of the classical design.
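To make this hypothesis concrete, here is a minimal Python sketch (not SAS, and not the book's code) under these assumptions: a one-sided two-sample z-test (normal approximation) with σ = 1 and α = 0.025, a classical design sized once for 80% power at the pessimistic d = 0.19, then evaluated at d = 0.19, 0.20, ..., 0.25. `fixed_n_per_group` and `power_fixed` are hypothetical helpers. Under the hypothesis, the beta used for each `altref` in PROC SEQDESIGN would be 1 minus the classical design's power at that d. Whether this reproduces the book's figures exactly (t vs. z test, rounding) is not something I can confirm.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution
ALPHA = 0.025     # one-sided significance level, as in the posted code
SD = 1.0          # common standard deviation, as in stddev=1

def fixed_n_per_group(delta, beta=0.2, alpha=ALPHA, sd=SD):
    """Unrounded per-group n for a one-sided two-sample z-test (equal allocation)."""
    z_a = Z.inv_cdf(1 - alpha)
    z_b = Z.inv_cdf(1 - beta)
    return 2 * ((z_a + z_b) * sd / delta) ** 2

def power_fixed(delta, n_per_group, alpha=ALPHA, sd=SD):
    """Power of that z-test with n_per_group subjects per arm when the true difference is delta."""
    z_a = Z.inv_cdf(1 - alpha)
    se = sd * math.sqrt(2 / n_per_group)  # standard error of the difference in means
    return Z.cdf(delta / se - z_a)

# Hypothetical classical design: sized once, at the pessimistic d = 0.19, for 80% power
n = math.ceil(fixed_n_per_group(0.19))

for d in (0.19, 0.20, 0.21, 0.22, 0.23, 0.24, 0.25):
    pw = power_fixed(d, n)
    # Under the hypothesis, this beta would be passed to PROC SEQDESIGN with altref=d
    print(f"d={d:.2f}  total N={2 * n}  power={pw:.3f}  beta={1 - pw:.3f}")
```

The point is the direction of the logic: the classical sample size is fixed first and power then varies with d, which is the reverse of the usual "fix power, solve for N" workflow. Matching each group sequential design to that varying power is what forces a different beta per `altref`.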


I think you need to look closely at your output.

When I run your code, I see this as part of the output. I think you are confusing "Max Sample Size" in your output with "Average Sample Size" in the publication; the authors apparently relabeled what SAS calls "Expected Sample Size" as "Average Sample Size".
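The difference between the two quantities comes down to a short formula. In a two-stage design, the trial either stops at stage 1 or runs to the maximum, so the average (expected) sample size is a probability-weighted mix of the stage sizes and can never exceed the maximum. A minimal Python sketch, assuming sample size is proportional to information (as with `info=cum(1 2)` and a two-sample mean comparison); `expected_sample_size` is a hypothetical helper, and the numbers are illustrative, not taken from the book or from SAS output. Using stopping probabilities computed under the alternative would correspond to the "Alt Ref" column.

```python
def expected_sample_size(n_max, info_fractions, stop_probs):
    """Average (expected) sample size of a group sequential design.

    n_max          -- maximum total sample size (reached only if there is no early stop)
    info_fractions -- cumulative information fraction at each stage; the last must be 1
    stop_probs     -- probability of stopping at each stage; must sum to 1
    """
    if len(info_fractions) != len(stop_probs):
        raise ValueError("need one stopping probability per stage")
    if abs(info_fractions[-1] - 1) > 1e-9 or abs(sum(stop_probs) - 1) > 1e-9:
        raise ValueError("last information fraction and total probability must be 1")
    # Sample size at stage k is n_max * t_k when sample size is proportional to information
    return sum(n_max * t * p for t, p in zip(info_fractions, stop_probs))

# Illustrative two-stage design: interim at half the information (info=cum(1 2))
n_max = 1000
p_stop_1 = 0.30  # hypothetical probability of stopping for efficacy at stage 1
asn = expected_sample_size(n_max, [0.5, 1.0], [p_stop_1, 1 - p_stop_1])
print(f"max sample size = {n_max}, average sample size = {asn:.1f}")  # average = 850.0
```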


I still need further help. Thank you.


Hello @Jack2012,

I'm not an expert on PROC SEQDESIGN, so I'm not sure whether this helps:

On page 89 of the book (as seen in Google Books) it says: "Example Code 3.3 on page 89 shows how this can be done in SAS using method (alpha)=ERRFUNCOBF or using method (alpha)=ERRFUNCGAMMA(GAMMA-4) [sic! missing "=" sign before "-4"] in proc seqdesign [32]."

After replacing ERRFUNCGAMMA(GAMMA=-4) in your code with ERRFUNCOBF and using beta=1-Power (from the highlighted part of your screenshot), i.e., beta=0.201 for altref=0.19 (beta=0.163 for altref=0.20, etc.), I get Stopping probability (Stage_1) and Expected Sample Size (Alt Ref) (almost) equal to the values in your table and the same holds for the other altref values (0.20, 0.21, ..., 0.25). The rounding error in beta might explain the remaining small differences.
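One way to see why swapping `ERRFUNCGAMMA(GAMMA=-4)` for `ERRFUNCOBF` changes the stage-1 numbers is to compare how much of the one-sided α = 0.025 each function spends at the interim (information fraction t = 0.5). The sketch below uses the standard textbook forms, which I believe these options implement (Lan-DeMets O'Brien-Fleming-type and Hwang-Shih-DeCani gamma family); check the PROC SEQDESIGN documentation for the exact parameterization. The function names are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def obf_spending(t, alpha=0.025):
    """Lan-DeMets O'Brien-Fleming-type spending: cumulative alpha spent by information fraction t."""
    return 2 * (1 - Z.cdf(Z.inv_cdf(1 - alpha / 2) / math.sqrt(t)))

def gamma_spending(t, gamma, alpha=0.025):
    """Hwang-Shih-DeCani gamma-family spending (gamma != 0): cumulative alpha spent by t."""
    return alpha * (1 - math.exp(-gamma * t)) / (1 - math.exp(-gamma))

for t in (0.5, 1.0):
    print(f"t={t:.1f}  OBF-type={obf_spending(t):.5f}  gamma=-4: {gamma_spending(t, -4):.5f}")
```

At t = 0.5 the OBF-type function spends roughly half as much alpha as the gamma = -4 function (about 0.0015 vs. 0.0030), so, other things equal, its stage-1 efficacy boundary is higher and the stage-1 stopping probability lower; both spend the full 0.025 by t = 1.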


However, I still don't understand the logic behind this. Why use different beta values? I still can't connect these values. Normally we specify a fixed power, i.e., 1 - beta, and then calculate the sample size and the corresponding "Early stop probability for efficacy at stage 1", but that logic doesn't seem to work here.

