- Home

- /

- Programming

- /

- Programming

- /

- Using DS2 to parse JSON from a REST API

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

SAS 9.4 introduced PROC JSON, which allows you to create JSON output from a data set. But it wasn't until SAS 9.4 Maint 3 that we have a built-in method to parse JSON content. That method was added as a DS2 package: the JSON package.

Here's an example of the method working -- using an API that powers this very community! (That's "meta" for you.) It's my first real DS2 program, so I'm open to feedback. I already know of a couple of improvements I should make, but I want to share it now as I think it's good enough to help others who are looking to do something similar.

The program requires SAS 9.4 Maint 3. It also works fine in the most recent version of SAS University Edition (using SAS Studio 3.4). All of the code runs using just Base SAS procedures.

/* DS2 program that uses a REST-based API */

/* Uses http package for API calls */

/* and the JSON package (new in 9.4m3) */

/* to parse the result. */

proc ds2;

data messages (overwrite=yes);

/* Global package references */

dcl package json j();

/* Keeping these variables for output */

dcl double post_date having format datetime20.;

dcl int views;

dcl nvarchar(128) subject author board;

/* these are temp variables */

dcl varchar(65534) character set utf8 response;

dcl int rc;

drop response rc;

method parseMessages();

dcl int tokenType parseFlags;

dcl nvarchar(128) token;

rc=0;

* iterate over all message entries;

do while (rc=0);

j.getNextToken( rc, token, tokenType, parseFlags);

* subject line;

if (token eq 'subject') then

do;

j.getNextToken( rc, token, tokenType, parseFlags);

subject=token;

end;

* board URL, nested in an href label;

if (token eq 'board') then

do;

do while (token ne 'href');

j.getNextToken( rc, token, tokenType, parseFlags );

end;

j.getNextToken( rc, token, tokenType, parseFlags );

board=token;

end;

* number of views (int), nested in a count label ;

if (token eq 'views') then

do;

do while (token ne 'count');

j.getNextToken( rc, token, tokenType, parseFlags );

end;

j.getNextToken( rc, token, tokenType, parseFlags );

views=inputn(token,'5.');

end;

* date-time of message (input/convert to SAS date) ;

* format from API: 2015-09-28T10:16:01+00:00 ;

if (token eq 'post_time') then

do;

j.getNextToken( rc, token, tokenType, parseFlags );

post_date=inputn(token,'anydtdtm26.');

end;

* user name of author, nested in a login label;

if (token eq 'author') then

do;

do while (token ne 'login');

j.getNextToken( rc, token, tokenType, parseFlags );

end;

* get the author login (username) value;

j.getNextToken( rc, token, tokenType, parseFlags );

author=token;

output;

end;

end;

return;

end;

method init();

dcl package http webQuery();

dcl int rc tokenType parseFlags;

dcl nvarchar(128) token;

dcl integer i rc;

/* create a GET call to the API */

/* 'sas_programming' covers all SAS programming topics from communities */

webQuery.createGetMethod(

'http://communities.sas.com/kntur85557/' ||

'restapi/vc/categories/id/sas_programming/posts/recent' ||

'?restapi.response_format=json' ||

'&restapi.response_style=-types,-null&page_size=100');

/* execute the GET */

webQuery.executeMethod();

/* retrieve the response body as a string */

webQuery.getResponseBodyAsString(response, rc);

rc = j.createParser( response );

do while (rc = 0);

j.getNextToken( rc, token, tokenType, parseFlags);

if (token = 'message') then

parseMessages();

end;

end;

method term();

rc = j.destroyParser();

end;

enddata;

run;

quit;

/* Add some basic reporting */

proc freq data=messages noprint;

format post_date datetime11.;

table post_date / out=message_times;

run;

ods graphics / width=2000 height=600;

title '100 recent message contributions in SAS Programming';

title2 'Time in GMT';

proc sgplot data=message_times;

series x=post_date y=count;

xaxis minor label='Messages';

yaxis label='Time created' grid;

run;

title 'Board frequency for recent 100 messages';

proc freq data=messages order=freq;

table board;

run;

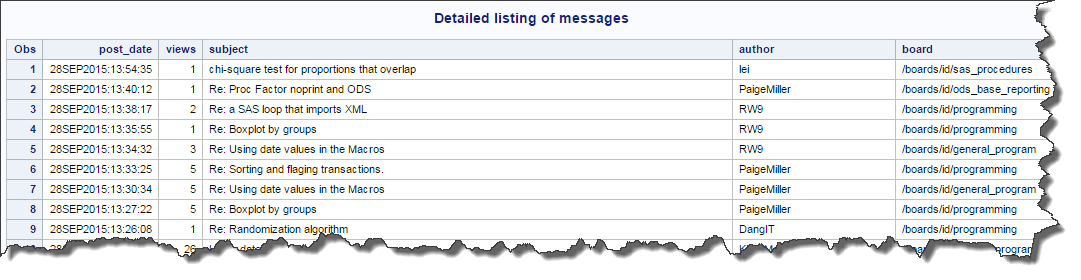

title 'Detailed listing of messages';

proc print data=messages;

run;

title;Here is some partial output:

Looking forward to your comments...

Chris

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Many thanks for this Chris - I was looking at the JSON parser recently for a job which requires parsing a lot of quite complex JSON so this will be very useful.

While I haven't had time to work through your example in detail yet I do have one question. Is it really necessary to explicitly call the destructor method for the parser in the term method? My understanding is that in DS2 when a package object leaves scope (which it will do when the program ends at the quit statement) the package instance destructor is automatically called in which case an explicit call in the term method isn't necessary. Is this correct or am I missing something?

Chris

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Let's call that a best practice. You're correct -- when the object goes out of scope (certainly when the PROC ends) the parser will be cleaned up.

If this were part of a larger program where parsing JSON was just a small part of the work, I might call destroyParser() at another point, when the parser was no longer needed but other work continued.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Chris

Thanks for the confirmation - I must admit I rarely use destructors except in the case of hashes when I'll delete the hash as soon as I've finished with it to conserve resources.

Chris

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for this Chris, this looks really exciting.

I'm just wondering if this could be adapted to accept a json message of unknown structure. So create a dataset, variable names, types, lengths dynamically based on the json we are receiving.

I am trying to build a data warehouse that uses a json message queue as its primary source of data and as the messages change over time, we want the data warehouse structure to be automatically updated.

I already have this working within SAS and loading a MS SQL database, I’m just not sure how to read in the json messages.

For instance if we get a set of messages from the queue, that may differ in structure because there has been a change within the message format, do you know of any way to create a SAS dataset with all the variables of all the messages.

Any ideas would be greatly appreciated.

Regards,

Bronwen

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I'm sure that something like that could be done -- with more work, of course. You could read the JSON labels and types in (JSON often includes type modifiers to indicate whether the value is a string, int, date, etc.) and then write out DATA step code to build the structure dynamically.

For example, a datetime field might appear as:

"post_time": {

"type": "date_time",

"$": "2015-09-29T11:50:21+00:00"

},

Your parsing code could interpret this and assign SAS attributes such as:

length post_time 8;

format datetime20.;

informat anydtdtm26.;It would be a challenging exercise, I think -- but possible.

Chris

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for that Chris,

Yes I am considering using a SAS datastep to pass the JSON messages into csv file and then do a proc import so SAS can allocate the lengths, datatypes ect. But I am almost certain SAS will bring out a solution for this just when I have completed my ‘challenge’.

Regards,

Bronwen

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

It would take multiple passes for optimal data types to be derived - but filesize is surely not too big.

Where a value is a structure it implies a subordinate table that implies the possible need for something like recursion........

Could be an interesting project

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I can imagine a future approach like the SAS XML Mapper. Like XML, JSON has this hierarchical structure that must somehow be mapped to the rectangular format of SAS data sets. A JSON map could be used to prevent repetitive (and possibly expensive) analysis of a JSON file. And I cannot imagine this to be succesful without user guidance to make the decision on what elements should go into the rows and columns.

Anyhow I can understand why writing JSON is so much easier than the other way around.

And thanks Chris for the code example. I collect JSON data from my car through a REST api every 5 minutes containing location, drive state, charge state etc. This works wonders in getting this data into SAS for further analysis and reporting.

Regards Jan

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Chris,

Thank you so much for this post. I am quite new to this which is a bad place to be in with little information on the internets. I upgraded to M3 from M2 and obviously am able to run your sample. I've attempted to use it as a template to pull in some JSONs I'm playing with. 2 immediate questions come to mind:

1) How would one edit your code if they wanted to consume a JSON they've already downloaded local? I've failed miserably at passing the JSON to the createParser without using your existing webQuery call. I ask this because the JSON files are large so I'm hitting a "HTTP response body truncated" which results in only partially loading the data into a dataset. I plan on downloading, importing using this method, and then cleaning up with an X command.

2) Is this ( https://www.modahealth.com/cms-data-index.json ) a "standard" JSON structure? This structure doesn't match up with your example or any of the JSONs I'm trying to import in my first question above. No matter how I try to tell the parser where the URLs are, I either get NULL values or just open brackets.

Thanks for all any help you can provide. This package seems incredibly powerful and I feel like I need to block off the next few days for only DS2 JSON fun.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

The DS2 language does not include syntax for consuming text files. Downloading the JSON to a text file first means that you will have to load it into a table using other means before processing it with DS2. If you are having trouble with the size of the JSON, try making the response variable larger size. For example change this:

dcl varchar(65534) character set utf8 response;

to this:

dcl varchar(1000000) character set utf8 response;

You won't be able to store all 1000000 charactes in your VARCHAR variable to a table, but you can manipulate it with DS2, so you can parse the JSON text using the JSON package as shown in the example.

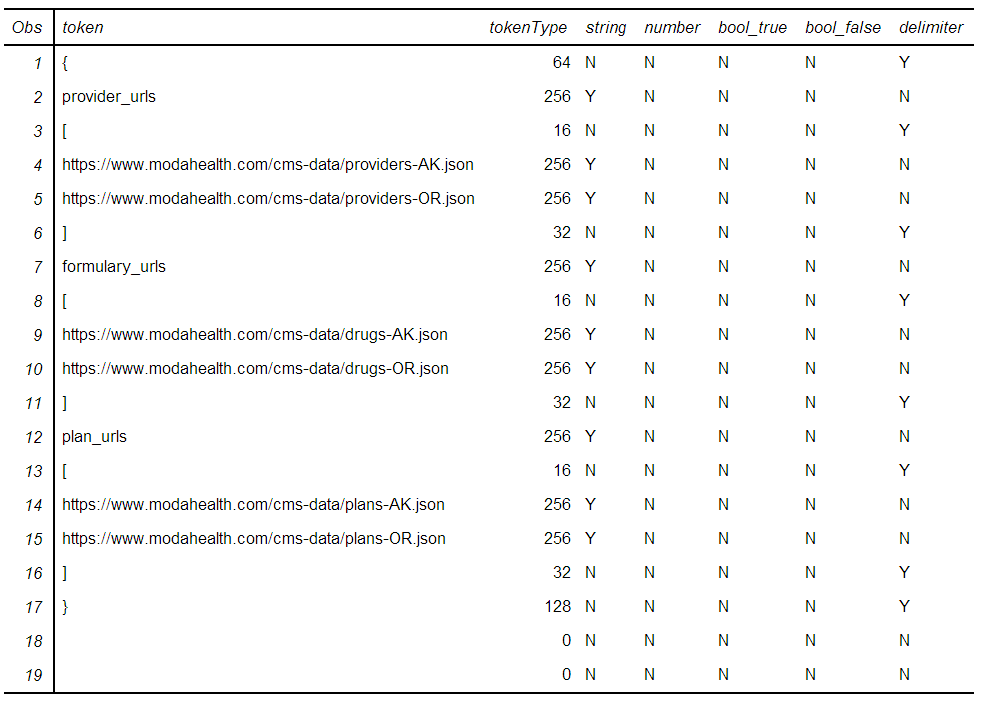

As for your second question, there really isn't a standard JSON structure - it's completely flexible. It is often useful to be able to prototype JSON data extraction routines to see how the tokens break out. Your could use code something like this:

/* Put some JSON in a macro variable for use in testing */

%let json= '{

"provider_urls": [

"https://www.modahealth.com/cms-data/providers-AK.json",

"https://www.modahealth.com/cms-data/providers-OR.json"

],

"formulary_urls": [

"https://www.modahealth.com/cms-data/drugs-AK.json",

"https://www.modahealth.com/cms-data/drugs-OR.json"

],

"plan_urls": [

"https://www.modahealth.com/cms-data/plans-AK.json",

"https://www.modahealth.com/cms-data/plans-OR.json"

]

}';

proc ds2;

data tokens/overwrite=yes;

dcl package json j();

dcl nchar(1024) token;

dcl int tokenType;

dcl char(1) string number bool_true bool_false delimiter;

method init();

dcl varchar(1000000) json;

dcl nchar(1024) thisVar;

dcl int rc parseFlags;

/* JSON for prototyping */

json=%superq(json);

rc = j.createParser();

if (rc) then do;

put 'Error' rc= ': Could not create JSON parser.';

stop;

end;

rc = j.setParserInput(json);

if (rc) then do;

put 'Error' rc= ': setParserInput failed.';

stop;

end;

/* Use the parser to parse the JSON */

/* RC of 0 means all went well. Otherwise, an error occurred */

do until (rc ne 0);

j.getNextToken( rc, token, tokenType, parseFlags );

string=if j.isstring(tokenType) then 'Y' else 'N';

number=if j.isnumeric(tokenType) then 'Y' else 'N';

bool_true=if j.isbooleantrue(tokenType) then 'Y' else 'N';

bool_false=if j.isbooleanfalse(tokenType) then 'Y' else 'N';

delimiter=if tokenType in (16,32,64,128) then 'Y' else 'N';

output;

end;

end;

enddata;

run;

quit;

proc print data=tokens; run;

and then look at how the JSON tokens parsed out:

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

/* Put some JSON in a macro variable for use in testing */

%let json= '{

"provider_urls": [

"https://www.modahealth.com/cms-data/providers-AK.json",

"https://www.modahealth.com/cms-data/providers-OR.json"

],

"formulary_urls": [

"https://www.modahealth.com/cms-data/drugs-AK.json",

"https://www.modahealth.com/cms-data/drugs-OR.json"

],

"plan_urls": [

"https://www.modahealth.com/cms-data/plans-AK.json",

"https://www.modahealth.com/cms-data/plans-OR.json"

]

}';

proc ds2;

data tokens/overwrite=yes;

dcl package json j();

dcl nchar(1024) token;

dcl int tokenType;

dcl char(1) string number bool_true bool_false delimiter;

method init();

dcl varchar(1000000) json;

dcl nchar(1024) thisVar;

dcl int rc parseFlags;

/* JSON for prototyping */

json=%superq(json);

rc = j.createParser();

if (rc) then do;

put 'Error' rc= ': Could not create JSON parser.';

stop;

end;

rc = j.setParserInput(json);

if (rc) then do;

put 'Error' rc= ': setParserInput failed.';

stop;

end;

/* Use the parser to parse the JSON */

/* RC of 0 means all went well. Otherwise, an error occurred */

do until (rc ne 0);

j.getNextToken( rc, token, tokenType, parseFlags );

string=if j.isstring(tokenType) then 'Y' else 'N';

number=if j.isnumeric(tokenType) then 'Y' else 'N';

bool_true=if j.isbooleantrue(tokenType) then 'Y' else 'N';

bool_false=if j.isbooleanfalse(tokenType) then 'Y' else 'N';

delimiter=if tokenType in (16,32,64,128) then 'Y' else 'N';

output;

end;

end;

enddata;

run;

quit;

proc print data=tokens; run;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, Chris - I'll remember to use Chrome in the future... 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your prompt responses. Your token metadata breakout definitely makes it easier to think of a path forward with that visual.

As far as loading the json into a dataset before DS2 can consume it, am I constrained by the json contents needing to be in one text variable or is it able to search across observations? For example, the JSON I am working with right now is just over 5m rows and I'm sure I'll have plenty that are larger than this. Do I have the ability to pass the package a dataset or was the plan to load the data from the dataset into a mac var string? Obviously the size of the JSONs makes the latter impossible. That being said, I'd need to be able to freely spool across observations as I traverse the tokens else the JSON structure will be broken.

I apologize for the basic questions. I think I've read everything out there related to the DS2 JSON package and I'm just trying to figure out if loading the data I have is feasible.

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.