- Home

- /

- Programming

- /

- Programming

- /

- Re: Problem with importing csv file

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Dear all

I have a problem with importing a specific csv file to SAS.

The file contains data published by the Bank for International Settlments and is avialable here: https://www.bis.org/statistics/full_bis_lbs_diss_csv.zip

I've following problems:

1. The data is in the wide form, with each period being reported in a subsequent column. As a result, the dataset has over 170 variables. For this reason I would like to avoid using infile statement, as this would require typing the names 170 variables. Also, more importantly, with this approach I would have to modify the code each time new data is added to the file (as new column will then appear in the dataset).

2. I thought of using proc import instead but at the begining of the file there is some unnecesary description - real data start in row 9. When I use proc import with a datrow option I can get the data into SAS but without variable names which are in row 8.

3. Finally, I need the data to be numeric, but even if I manage to get them into SAS they get converted to character.

Can anyone help me? I got stuck.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

First, I unzipped the file and copied it to my UNIX SAS server.

Then I did

tail -n +8 full_WEBSTATS_LBS_D_PUB_DATAFLOW_csv.csv>input.csv

which removes the first 7 lines of text.

Next I ran

options validvarname=v7;

proc import

dbms=csv

datafile='$HOME/sascommunity/input.csv'

replace

out=work.input

;

guessingrows=100;

run;Then I converted to numeric by reading the column names from dictionary.columns and using that with call execute:

proc sql;

create table columns as

select name

from dictionary.columns

where libname = 'WORK' and memname = 'INPUT' and substr(name,1,1) = '_'

;

quit;

data _null_;

set columns end=done;

if _N_ = 1

then do;

call execute('data want; set input;');

end;

call execute('if ' !! trim(name) !! ' not in ("Na","NaN") then n' !! trim(name) !! '=input(' !! trim(name) !! ',20.);');

call execute('drop ' !! trim(name) !! ';');

if done then call execute('run;');

run;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

First off, I am not downloading a zip file.

Second, what does the data specification document which details the file structure, formats etc. look like? Don't have one, welcome to the world of garbage data. Unsubscribed, badly structured data is one of the biggest sources of work and rework. As simple change to the modelling so that periods were rows rather than columns (as any right minded data modeller would do) would make your import extremely simple and robust.

On point 3, if you use a proc import function, this guesses your data. Leaving it to the computer to guess what you want will throw up issues. Hence why we always suggest infile and datastep to read in the data per the data specifications.

I would suggest to change the data import to be a sensible one, but I dount that will happen. You could use ranges:

input col1--col200 $;

To read 200 columns, you won't have names and such like and its really not a good idea.

End of the day garbage in=garbage out unless you put your time in to fix it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks for the answer.

1. I have only a link to the zip file. I've tried to attach the csv, but it has over 140 MB and it gets rejected.

2. I would also prefer the data in the long format, but this is what I have. The BIS won't change the format for me. Once I get the data into SAS, I'll transpose them in whatever way I want. But I need to have them in SAS in the first place.

3. I know the structure of the data - I can open the csv and check column names. But, still, with using infile I'll have to re-write the code every quarter to add new column get the new data. This is something I want to avoid. That's why I was hoping there is a workaround for this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

First, I unzipped the file and copied it to my UNIX SAS server.

Then I did

tail -n +8 full_WEBSTATS_LBS_D_PUB_DATAFLOW_csv.csv>input.csv

which removes the first 7 lines of text.

Next I ran

options validvarname=v7;

proc import

dbms=csv

datafile='$HOME/sascommunity/input.csv'

replace

out=work.input

;

guessingrows=100;

run;Then I converted to numeric by reading the column names from dictionary.columns and using that with call execute:

proc sql;

create table columns as

select name

from dictionary.columns

where libname = 'WORK' and memname = 'INPUT' and substr(name,1,1) = '_'

;

quit;

data _null_;

set columns end=done;

if _N_ = 1

then do;

call execute('data want; set input;');

end;

call execute('if ' !! trim(name) !! ' not in ("Na","NaN") then n' !! trim(name) !! '=input(' !! trim(name) !! ',20.);');

call execute('drop ' !! trim(name) !! ';');

if done then call execute('run;');

run;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@Kurt_Bremser Thanks a lot! One question rearding this part:

tail -n +8 full_WEBSTATS_LBS_D_PUB_DATAFLOW_csv.csv>input.csv

I uderstand this is the Unix command, not SAS command, am I right? Can I somehow do it using SAS? If possible I want to avoid using another software, other than SAS

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@chris2377 wrote:

@Kurt_Bremser Thanks a lot! One question rearding this part:

tail -n +8 full_WEBSTATS_LBS_D_PUB_DATAFLOW_csv.csv>input.csvI uderstand this is the Unix command, not SAS command, am I right? Can I somehow do it using SAS? If possible I want to avoid using another software, other than SAS

That's quite straightforward in SAS, it's just that I'm used to the UNIX tools when it comes to scripted text manipulation.

data _null_;

infile '$HOME/sascommunity/full_WEBSTATS_LBS_D_PUB_DATAFLOW_csv.csv' lrecl=32767;

file '$HOME/sascommunity/input.csv' lrecl=32767;

input;

if _n_ > 7;

put _infile_;

run;- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@Kurt_Bremser Many thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Since SAS supports the zip engine for files nowadays, you can use that in the first step:

filename in zip '$HOME/sascommunity/full_bis_lbs_diss_csv.zip' member='*' lrecl=32767;

filename out '$HOME/sascommunity/input.csv';

data _null_;

infile in;

file out;

input;

if _n_ > 7;

put _infile_;

run;- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Don't use PROC IMPORT to read a file that you already know the structure of.

First take a look at the file and figure out the structure.

filename test zip "&fname" member='*.csv' ;

data _null_;

infile test obs=10;

input;

list;

run;And you get something like this in the log.

RULE: ----+----1----+----2----+----3----+----4----+----5----+----6----+----7----+----8----+----9----+----0

1 Dataset,"Locational Banking Statistics - disseminated data" 59

2 Retrieved on,Wed Jan 17 13:24:50 GMT 2018 41

3 Subject,"BIS locational banking" 32

4 "Frequency","Quarterly" 23

5 "Decimals","Three" 18

6 "Unit of measure","US Dollar" 29

7 "Unit Multiplier","Millions" 28

8 "Frequency","Measure","Balance sheet position","Type of instruments","Currency denomination","Curren

101 cy type of reporting country","Parent country","Type of reporting institutions","Reporting country",

201 "Counterparty sector","Counterparty country","Position type","Time Period","1977-Q4","1978-Q1","1978

301 -Q2","1978-Q3","1978-Q4","1979-Q1","1979-Q2","1979-Q3","1979-Q4","1980-Q1","1980-Q2","1980-Q3","1980

401 -Q4","1981-Q1","1981-Q2","1981-Q3","1981-Q4","1982-Q1","1982-Q2","1982-Q3","1982-Q4","1983-Q1","1983

501 -Q2","1983-Q3","1983-Q4","1984-Q1","1984-Q2","1984-Q3","1984-Q4","1985-Q1","1985-Q2","1985-Q3","1985

601 -Q4","1986-Q1","1986-Q2","1986-Q3","1986-Q4","1987-Q1","1987-Q2","1987-Q3","1987-Q4","1988-Q1","1988

701 -Q2","1988-Q3","1988-Q4","1989-Q1","1989-Q2","1989-Q3","1989-Q4","1990-Q1","1990-Q2","1990-Q3","1990

801 -Q4","1991-Q1","1991-Q2","1991-Q3","1991-Q4","1992-Q1","1992-Q2","1992-Q3","1992-Q4","1993-Q1","1993

901 -Q2","1993-Q3","1993-Q4","1994-Q1","1994-Q2","1994-Q3","1994-Q4","1995-Q1","1995-Q2","1995-Q3","1995

1001 -Q4","1996-Q1","1996-Q2","1996-Q3","1996-Q4","1997-Q1","1997-Q2","1997-Q3","1997-Q4","1998-Q1","1998

1101 -Q2","1998-Q3","1998-Q4","1999-Q1","1999-Q2","1999-Q3","1999-Q4","2000-Q1","2000-Q2","2000-Q3","2000

1201 -Q4","2001-Q1","2001-Q2","2001-Q3","2001-Q4","2002-Q1","2002-Q2","2002-Q3","2002-Q4","2003-Q1","2003

1301 -Q2","2003-Q3","2003-Q4","2004-Q1","2004-Q2","2004-Q3","2004-Q4","2005-Q1","2005-Q2","2005-Q3","2005

1401 -Q4","2006-Q1","2006-Q2","2006-Q3","2006-Q4","2007-Q1","2007-Q2","2007-Q3","2007-Q4","2008-Q1","2008

1501 -Q2","2008-Q3","2008-Q4","2009-Q1","2009-Q2","2009-Q3","2009-Q4","2010-Q1","2010-Q2","2010-Q3","2010

1601 -Q4","2011-Q1","2011-Q2","2011-Q3","2011-Q4","2012-Q1","2012-Q2","2012-Q3","2012-Q4","2013-Q1","2013

1701 -Q2","2013-Q3","2013-Q4","2014-Q1","2014-Q2","2014-Q3","2014-Q4","2015-Q1","2015-Q2","2015-Q3","2015

1801 -Q4","2016-Q1","2016-Q2","2016-Q3","2016-Q4","2017-Q1","2017-Q2","2017-Q3" 1874

9 "Q:Quarterly","F:FX and break adjusted change (BIS calculated)","C:Total claims","A:All instruments"

101 ,"CHF:Swiss Franc","A:All currencies (=D+F+U)","5J:All countries","A:All reporting banks/institution

201 s (domestic, foreign, consortium and unclassified)","5A:All reporting countries","A:All sectors","1C

301 :International organisations","N:Cross-border","Q:F:C:A:CHF:A:5J:A:5A:A:1C:N","","-1.725","39.519","

401 -44.993","45.005","2.231","-17.743","-27.377","8.695","-4.375","6.783","4.788","8.111","-30.537","14

501 .969","10.438","14.695","-30.734","-6.218","16.973","22.431","-19.064","-30.120","22.405","-8.142","

RULE: ----+----1----+----2----+----3----+----4----+----5----+----6----+----7----+----8----+----9----+----0

601 24.217","18.917","-3.897","755.850","78.339","171.219","38.646","29.162","289.770","-214.243","-82.9

701 07","214.799","-104.703","100.841","-49.469","-49.041","-180.986","38.557","150.402","32.981","-64.9

801 98","-91.868","10.018","-94.532","-55.913","35.107","-329.152","-135.070","255.444","1659.513","-128

901 0.252","-118.691","-26.437","-70.279","-97.137","-84.126","-234.328","5.116","328.854","-190.098","3

1001 51.855","-125.701","-260.653","-56.611","104.118","-249.208","183.592","-99.985","116.911","0.209","

1101 -147.727","-104.242","600.696","-217.827","-216.030","0.785","90.879","358.907","-14.025","-219.202"

1201 ,"-148.775","-227.680","262.076","-387.512","170.535","-358.887","226.127","-299.725","-93.493","-66

1301 .373","-19.405","338.434","-145.508","-50.894","-81.216","-79.271","-122.249","103.190","-108.853","

1401 11.883","92.430","41.019","-131.573","15.082","221.843","531.957","-150.141","69.801","-449.815","-1

1501 24.533","254.692","13.576","51.317","22.855","-113.905","41.108","-6.552","-267.612","-68.968","319.

1601 392","472.236","103.636","134.620","-42.126","77.495","18.670","-31.429","48.486","-57.423","12.881"

1701 ,"32.620","-479.392","84.138","6.756","144.042","1710.610","-27.754","-283.713","-1086.346","607.883

1801 ","-248.590","-358.287","249.439","-132.366","412.105","-10.721","-207.372","-68.462","-106.775","24

1901 1.722","-167.873","-284.361","468.192","-352.632","-168.075" 1960

So you can see that the first 7 lines have information that applies to the whole dataset. Then you can see that the actual data lines have a series of fixed variables and then a repeating set of variables that from the column headings look to be a single measure taken quarterly.

With that information you can write one data step that will read ANY of these files.

So first use you mouse and copy and paste the labels from the header lines

8 "Frequency","Measure","Balance sheet position","Type of instruments","Currency denomination","Curren

101 cy type of reporting country","Parent country","Type of reporting institutions","Reporting country",

201 "Counterparty sector","Counterparty country","Position type","Time Period"

and use them to help you define some meaningful variable names for these fields. You can use the actual column headers as labels.

label

Dataset = "Dataset content"

Retrieved = "Date retrieved"

Subject = "Measure definition"

HFrequency = "Measure Frequency from header"

Decimals = "Decimals"

UMeasure = "Unit of measure"

UMultiplier = "Unit Multiplier"

Frequency = "Measure freqeuncy"

Measure = "Measure"

Balance = "Balance sheet position"

IType = "Type of instruments"

Denom = "Currency denomination"

RepCur = "Currency type of reporting country"

PCountry = "Parent country"

RType = "Type of reporting institutions"

RCountry = "Reporting country"

Sector = "Counterparty sector"

Country = "Counterparty country"

Ptype = "Position type"

Period = "Time Period"

;Now that you have variable names you need to define each of those variables. Or just use something that won't lead to truncation and you can adjust it later after you have seen the data. So add a LENGTH statement to define the variables. Make sure to put it FIRST, before you reference the variables in other ways.

Length

Dataset $50

Retrieved $50

Subject $50

HFrequency $50

Decimals $50

UMeasure $50

UMultiplier $50

Frequency $50

Measure $50

Balance $50

IType $50

Denom $50

RepCur $50

PCountry $50

RType $50

RCountry $50

Sector $50

Country $50

Ptype $50

Period $50

;What about these repeated measures? I would recommend reading the data directly into a "tall" format. This means that you will need to store the quarter definitions from line 7 into a temporary array that you can read back out when you write the individual records. So define a temporary array (it will automatically be retained) and file it when you read line 7.

How do you know how many repeats (quarters) are this years file? If it is not already defined in the first 6 lines then it is defined in the line 7 by how many header values there are. So just let SAS count them for you.

So you end up with a program like this.

%let path=C:\Downloads;

%let fname=full_WEBSTATS_LBS_D_PUB_DATAFLOW_csv.csv ;

data want (compress=yes);

Length

Time 8

Value 8

Frequency $50

Measure $50

Balance $50

IType $50

Denom $50

RepCur $50

PCountry $50

RType $50

RCountry $50

Sector $50

Country $50

Ptype $50

Period $50

Dataset $50

Retrieved $50

Subject $50

HFrequency $50

Decimals $50

UMeasure $50

UMultiplier $50

;

label

Dataset = "Dataset content"

Retrieved = "Date retrieved"

Subject = "Measure definition"

HFrequency = "Measure Frequency from header"

Decimals = "Decimals"

UMeasure = "Unit of measure"

UMultiplier = "Unit Multiplier"

Frequency = "Measure freqeuncy"

Measure = "Measure"

Balance = "Balance sheet position"

IType = "Type of instruments"

Denom = "Currency denomination"

RepCur = "Currency type of reporting country"

PCountry = "Parent country"

RType = "Type of reporting institutions"

RCountry = "Reporting country"

Sector = "Counterparty sector"

Country = "Counterparty country"

Ptype = "Position type"

Period = "Time Period"

Time = "Measure Time"

Value = "Measure Vlaue"

;

array times (200) _temporary_ ;

infile "&path\&fname" dsd truncover obs=1000;

length dummy $50 i 8 nvals 8;

retain nvals ;

drop dummy i nvals ;

if _n_=1 then do ;

* Read the top 7 lines and skip the fixed headers ;

input dummy Dataset / dummy Retrieved / dummy Subject / dummy HFrequency

/ dummy Decimals / dummy UMeasure / dummy UMultiplier

/ 13*dummy @

;

retain Dataset -- UMultiplier ;

* Read the quarter headers ;

* Might need to modify for files using periods other than quarters ;

do nvals=1 by 1 until(dummy=' ');

input dummy @ ;

times(nvals) = input(compress(dummy,'-'),??yyq6.);

format time yyq6. ;

end;

input;

nvals=nvals-1;

end;

* Read the actual data lines ;

* Modify the input buffer to convert the NaN strings to .N ;

input @ ;

_infile_=tranwrd(_infile_,'"NaN"','.N');

* Read in the fixed columns ;

input Frequency -- Period @;

* Remove the decodes from the header fields to save space ;

array headers Frequency -- Period ;

do i=1 to dim(headers)-1 ;

headers(i)=scan(headers(i),1,':');

end;

* Read in the multiple values ;

do i=1 to nvals ;

input value @ ;

time = times(i);

* only keep the non empty values ;

if value ne . then output;

end;

format time yyq6. ;

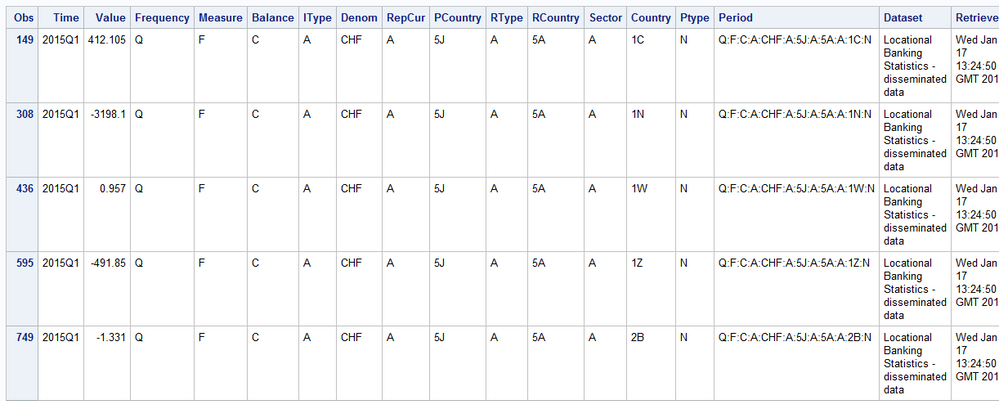

run;So if we look at a few values

proc print data=want(obs=10);

where time='01JAN2015'd ;

run;We see things like this:

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@Tom Thanks a lot. I'll have a look at the use of array to convert data from wide to long. I've used proc transpose (twice) to achieve this, but it's good to know that there's the other way:

proc transpose data=lbs out=lbs1;

by Frequency Measure Balance_sheet_position Type_of_instruments Currency_denomination Currency_type_of_reporting_coun Parent_country

Type_of_reporting_institutions Reporting_country Counterparty_sector Counterparty_country Position_type Time_Period;

var n_:;

run;

proc sort data=lbs1;

by Frequency Measure Type_of_instruments Currency_denomination Currency_type_of_reporting_coun Parent_country

Type_of_reporting_institutions Reporting_country Counterparty_sector Counterparty_country Position_type Time_Period _NAME_;

run;

proc transpose data=lbs1 out=lbs2;

by Frequency Measure Type_of_instruments Currency_denomination Currency_type_of_reporting_coun Parent_country

Type_of_reporting_institutions Reporting_country Counterparty_sector Counterparty_country Position_type Time_Period _NAME_;

id Balance_sheet_position;

var col1;

run;As regards your suggestion to look at the data first - I fully agree. I knew that first 7 lines were the "unnecesary" description of the dataset. I just din't know how to handle the problem of getting the variable names from the 8th line. I know I can always copy or type them by hand, but this would require modifying the code every quarter, as with every update, a new column (variable) appears. That's why I like using dictionary as sugeested by @Kurt_Bremser But, once again, thanks for help and very usefull material to study the secrets of sas

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.