- Home

- /

- Programming

- /

- Programming

- /

- Re: Is there any solution for this?

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi all

I´m trying to improve my time processing and so I am development a hash object for a faster sort but an error about the amount of memory ocurrs and I not sure if there is any option to avoid it.

Regards

data _null_;

if 0 then set a;

declare hash epi (dataset:"a", ordered:"y");

epi.definekey ("key1","key2");

epi.definedata (all:"yes");

epi.definedone ();

epi.output (dataset: "fastorder");

stop;

run;

ERROR: Hash object added 7864304 items when memory failure occurred.

FATAL: Insufficient memory to execute DATA step program. Aborted during the EXECUTION phase.

ERROR: The SAS System stopped processing this step because of insufficient memory.

NOTE: DATA statement used (Total process time):

real time 9.06 seconds

user cpu time 7.78 seconds

system cpu time 1.20 seconds

Memory 1137687k

OS Memory 1217248k

Timestamp 15/09/2014 15:48:46

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Sergio,

There is a lot to see with that info. It are not the answers to all of my questions.

It is definitely time for a upgrade SAS 9.1 Windows XP (32) is getting outdated.

The recordlength of 672 with 10 numerics (80byte) and 6 chars 40 (240+ 3 to 9) there must be more chars in that dataset.

In you sort processing 45m45 is the time you did need to wait before it was ready.

You SAS process did use 42s and the system used 2m:40. It is a signal there is a lot time waiting for something.

That "waiting for something" must be I/O (writing/reading from disk) trying to focus on minimizing that.

Your file size must by about 22Gb with these numbers. reading 22Gb and writing that back is 44Gb data-transfer, not counted the intermediate utility files.

As the hardware is fixed some tuning options. SAS(R) 9.4 Language Reference: Concepts, Third Edition

- compress=binary You are having many char variables it can gain for reading and writing the visible datasets

- Bufsize=64k bufno=8 SAS(R) 9.4 Companion for Windows, Third Edition as attempt to minimize IO.

The Utilities files are getting options in 9.4 these are out of scope with this.

Chris proposal to spilt the dataset in eg 10 parts (2Gb) sorting them each and merging can help as avoiding a lot of those intermediate big files.

The sort memory usage is saying just 131842k being used. That one is the SAS(R) 9.4 Companion for Windows, Third Edition sortsize was set with much smaller values in older releases (64Kb default 9.1). Please verify this one.

There are possible other approaches but the are requiring more knowledge on you data. Questions:

- (answered)

- What is needed for the selection and what data is propagated?

- Which orderings are present and which are important as result?

- Is the merge split as described your wanted process?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

To the above problem increasing the memsize will help.

to check the current or default memsize, please run the below code

PROC OPTIONS GROUP= MEMORY ;

RUN;

then increase the memsize by options memsize = X;

Thanks,

Jag

Jag

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

You also need sufficient physical RAM available.

It was interrupted at almost 8'' rows, how many more do you need?

Depending on the look-up logic (hit-rate, 1-M etc), hash-tables isn't necessary the most efficient solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks guys.

I increase the amount of memory without any result. I need more physical memory installed in my pc, only 4 gb is very short

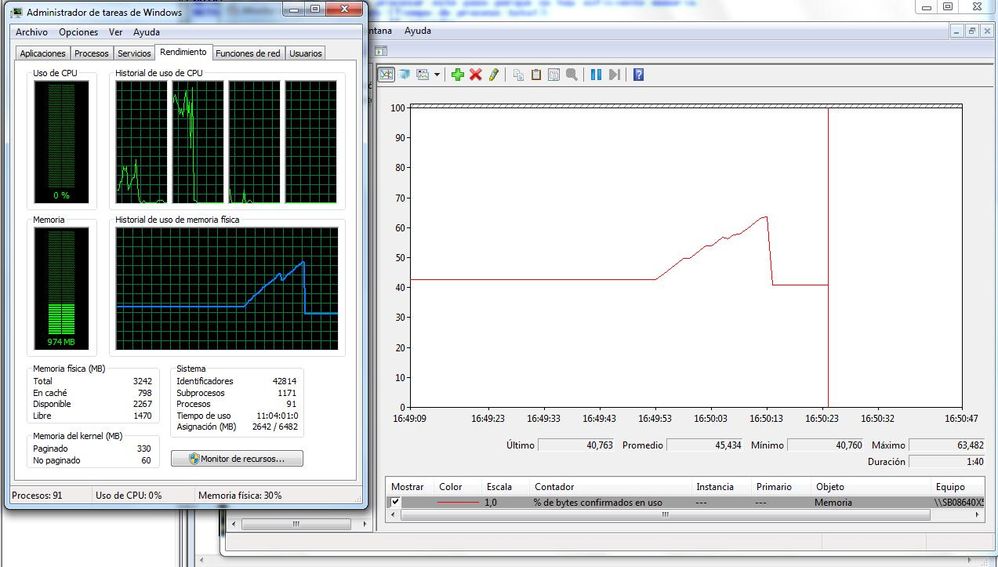

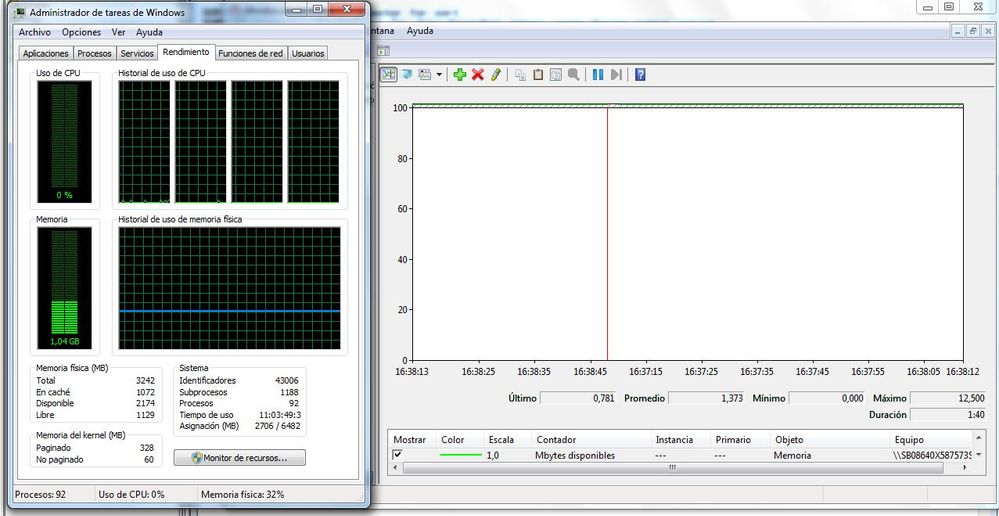

Only two images to show you the issue.

Before (1.jpg) and after (2.jpg)

Thanks for your help

P:S : A better way to sort a dataset of 40 millions rows instead of Proc report ?

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

This doesn't sound like a job for a client installation?

Are you asking for a better option or not?

If so, you must share of your whole program, the look-up logic.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

client installation?

Sorry Linush, I don't know what you mean with it.

One of the task of my job is to make sure that the data load from the source is the same that the data that is working with so we take the data set from the source, order it, take the data that is loaded in the system, order it and verify if the data are the same, wich data are in the source and not in the system and viceversa.

My code is something like this below

data a;

set b (where= vardate1<= date1 and vardate2<=date2 and vardate3<=date3 and vardate4<=date4);

run;

data x;

set y (where= vardate1<= date1 and vardate2<=date2 and vardate3<=date3 and vardate4<=date4);

run;

proc sort data = a;

order by key;

proc sort data = x (rename=(var_f = key));

order by key;

data h i j;

merge a (in=w) b(in=q);

if w then output h;

else if g then output i;

else output j;

run;

The problem is that the proc sort takes tooooooooo much time, so I´m trying to replace this piece of the code.

A good alternative was to use a hash object but muy PC hasn't enough memory for perform it with success.

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

How fast is PROC SORT on your dataset? Have you tried different sorting options?

The default SORTSIZE is sometimes very small, and gives people the feeling, that hash object is significantly better for sorting.

SAS(R) 9.2 Companion for Windows, Second Edition

try:

options sortsize=3G;

proc sort ...

But first increase your MEMSIZE to 3.5G. You can do this in a configuration file or startup option! (NOTwith options memsize=X; statement )

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Sergio replacing a proc sort is a bad idea as that one is one of the most optimal in processing (includes multithreading).

The memory sizing gives me the feeling you are running a 32-bit OS on a 4Gb system.

Only 3Gb is left for your processes and 1Gb has got lost. (yep it is there on your screenshots) And you are having 4 cpu's, could be used all in parallel.

As you are using Windows desktop the SAS installation will give you as much is available for your processing.

Please verify those assumptions. To be corrected when I am wrong. Gergely did already the on sortsize setting.

You are having 40M records en just 8M where able to fit into memory. I assume the recordsize is about 250bytes the dataset size is about 5Gb.

I see 8 numerics for dates (total 64 bytes) there must be some 180 being elsewhere. You have an unique key (key but the input is not ordered that way.

A question:

- Is the order of the key (or other indentifier) in both datasets ordered in the same way?

Than you do not need to do the sorting the merge by is accepting a non sorted input. SAS(R) 9.3 Statements: Reference

I ma not seeing the by statement so it could be a process with some defined ordering values. SAS(R) 9.3 Statements: Reference

When the source is a RDBMS you can retrieve the data ordered.

Your program will become like"

data h i j;

merge

b (in=w where=( vardate1<= date1 and vardate2<=date2 and vardate3<=date3 and vardate4<=date4) )

y (in=q where=( vardate1<= date1 and vardate2<=date2 and vardate3<=date3 and vardate4<=date4) ) ;

if w then output h;

else if g then output i;

else output j;

run;

There are possible other approaches but the are requiring more knowledge on you data. Questions:

- What is the sizing of your datasets (observations variables recordsize)?

- What I needed for the selection and what data is propagated?

- Which orderings are present and which are important as result?

- Is the merge split as described your wanted process?

An idea. Is one of the datasets just an indicator (the other key merging) in an other order of the same original dataset

just keep that key and the original order present in the dataset and drop all other variables.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I agree with Jaap, you're most unlikely to be able to improve on the speed of a Proc Sort by using a hash object.

One thing you can do is try using SAS MP Connect to run the two sorts in parallel and then do the merge. I've done similar things myself and it's usually much faster with large data sates, even on a Windows machine.

There's an excellent example of doing just that here SAS/CONNECT(R) 9.2 User's Guide

Chris

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I'm also wondering what your hash object actually looks like. That many records makes me think there may be a better way.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Good morning

I left here a proc sort, this proc sort is one of the bigger.

72 proc sort data = dataset_1= dataset_2;

73 by key;

74 run;

NOTA: Se han leído 32498768 observaciones del conj. datos WORK.EC_DIM_PER.

NOTA: El conj. datos WORK.PERSORDENADO tiene 32498768 observaciones y 31 variables.

NOTA: PROCEDIMIENTO SORT utilizado (Tiempo de proceso total):

tiempo real 45:25.58

tiempo de cpu del usuario 42.85 segundos

tiempo de cpu del sistema 2:40.40

Memoria 131842k

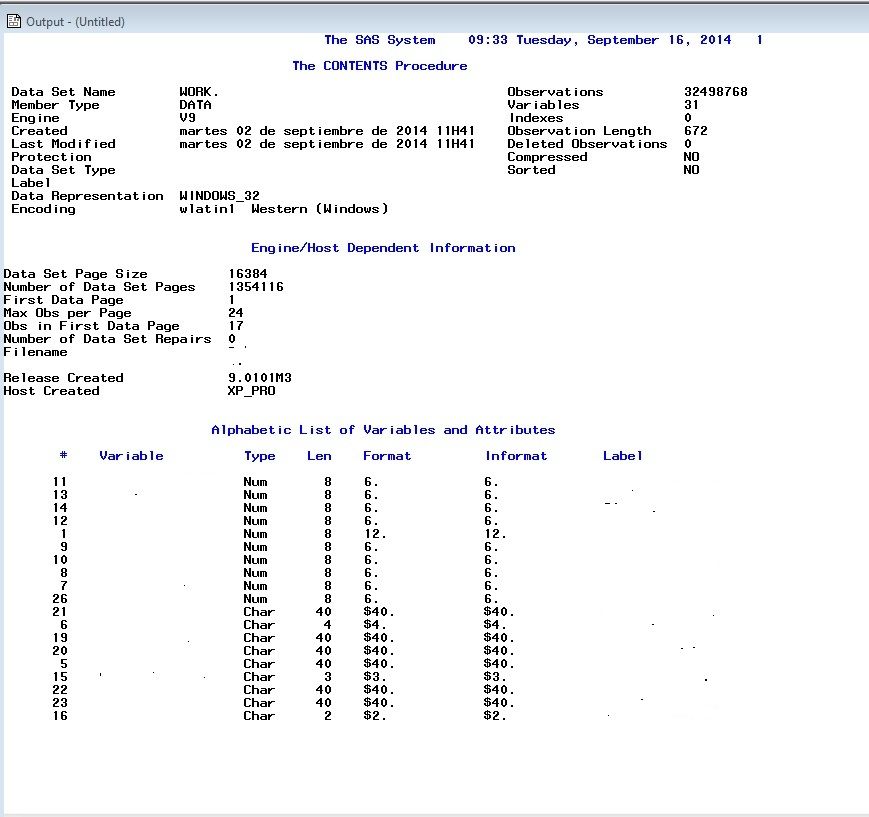

Jaap, I atttach you a proc sort screenshort, I hope it can resolved your questions.

Ballardw, Hash hasn`t enough memory for run in my PC. It couldn't load the entire table in memory. Please see my post above.

Thanks all for your help

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Sergio,

There is a lot to see with that info. It are not the answers to all of my questions.

It is definitely time for a upgrade SAS 9.1 Windows XP (32) is getting outdated.

The recordlength of 672 with 10 numerics (80byte) and 6 chars 40 (240+ 3 to 9) there must be more chars in that dataset.

In you sort processing 45m45 is the time you did need to wait before it was ready.

You SAS process did use 42s and the system used 2m:40. It is a signal there is a lot time waiting for something.

That "waiting for something" must be I/O (writing/reading from disk) trying to focus on minimizing that.

Your file size must by about 22Gb with these numbers. reading 22Gb and writing that back is 44Gb data-transfer, not counted the intermediate utility files.

As the hardware is fixed some tuning options. SAS(R) 9.4 Language Reference: Concepts, Third Edition

- compress=binary You are having many char variables it can gain for reading and writing the visible datasets

- Bufsize=64k bufno=8 SAS(R) 9.4 Companion for Windows, Third Edition as attempt to minimize IO.

The Utilities files are getting options in 9.4 these are out of scope with this.

Chris proposal to spilt the dataset in eg 10 parts (2Gb) sorting them each and merging can help as avoiding a lot of those intermediate big files.

The sort memory usage is saying just 131842k being used. That one is the SAS(R) 9.4 Companion for Windows, Third Edition sortsize was set with much smaller values in older releases (64Kb default 9.1). Please verify this one.

There are possible other approaches but the are requiring more knowledge on you data. Questions:

- (answered)

- What is needed for the selection and what data is propagated?

- Which orderings are present and which are important as result?

- Is the merge split as described your wanted process?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Jaap for this "free of charge lessons" :smileylaugh:.

I`d try to increase the amount of memory in the buffer and total too.

As for your questions, I coul say

- The most of the datases I need the hole set, in case I only need subset it´s already implemented in the code

- Is some dataset there is no order at all, in other the order is not the one I need

- In fact it is not what I was thinking, but it works is doesn´t matter.

What about multiprocessing in local, I'd try to explain myselft. Is posible to run the two short in parallel?. I read about this but I know sure if it could be posible running a local sesion and "emulate" a server on local machine.

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I don't think using Hash Table to sort would be faster than using proc sort . If the table is too big . Here is a proposal .

data F M;

set sashelp.class;

if sex='F' then output F;

else if sex='M' then output M;

run;

proc append base=want data=F force;run;

proc append base=want data=M force;run;

Or simply make an index for it ,and you don't sort it again every time you need to BY statement .

Xia Keshan

Message was edited by: xia keshan

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

HI Sergio,

As stated, hash is not the answer here. I don't think MP will work either unless you have CONNECT on your installation, you can check that by running proc setinit. If you don't have CONNECT, you still may be able to marginally increase the speed of your sort by defragmenting your disk and/or breaking up the data into smaller chunks and sorting those. Your sort needs about 3 times the size of the data to execute, and if you have a fragmented disk then it has to get the space from multiple spots. If you split the data up into 40 different datasets of 1 million observations each, sort each of them by your keys, then set them together using the by variables, you will have a single sorted data set, and it may run faster than your sort of a single big dataset.

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and save with the early bird rate—just $795!

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.