- Home

- /

- Programming

- /

- Programming

- /

- Re: Ignoring/removing first observations

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Dear all,

I have a macro (%foreign_macro) that needs to process a pretty big sas data set containing time series data ("very_large"). Unfortunately, with each execution the macro can only consider one specific day in the input data set (here: &input_data.=very_large). The macro is pretty fast for dates at the beginning of the data set, but the longer it has to search through the data, the longer it takes to execute. After the 80th iteration (and >300 to go), I had to stop SAS because it already took the macro several minutes to execute.

In the example below, the macro %my_macro executes the aforementioned macro %foreign_macro for each of the dates given in the macro variable &dates. Uncommenting the code reveals the idea that I had for lowering the execution time. First, a duplicate of "very_large" called "_very_large" is made for use in further steps (disk space is not a restriction... yet). Second, before executing %foreign_macro, data that is no longer needed gets removed from the (duplicate) data set. This way, the macro doesn't have to go over this data time and time again with each execution.

Unfortunately, when using modify & remove, it seems that data isn't actually (physically) removed. The execution time still increased with each iteration, and while the program was running "_very_large" never decreased in file size (it remained the exact same size as "very_large").

My first question would be if there's another way to remove the first observations of a data set (other than using modify & remove)? The second question is if there's a better solution for my problem (other than to rewrite %foreign_macro)?

Thank you very much for your time. Any help is appreciated!

Data very_large;

Attrib Date Length=4 Informat=date11. Format=date11.;

Attrib Vars Length=3;

Input Date Vars;

Datalines;

09-JAN-2013 10

09-JAN-2013 20

09-JAN-2013 10

10-JAN-2013 20

11-JAN-2013 10

11-JAN-2013 20

Run;

%Macro foreign_macro(

input_data=,

output_data=,

date=

);

Data &output_data.;

Set &input_data.;

If Date>&date. Then Stop;

If Date=&date. Then Do;

Vars=Vars/2;

Output;

End;

Run;

%MEND;

%Macro my_macro(input_data=,dates=);

%Let dates_num=%eval(%sysfunc(count(%str(&dates.),%str(,)))+1);

/*

* FIRST: work with a duplicate of very_large ;

Data _&input_data.; Set &input_data.; Run;

%Let input_data=_&input_data.;

*/

%DO i=1 %TO &dates_num.;

/*

* SECOND: remove data from the duplicate that is no longer needed ;

Data &input_data.;

Modify &input_data.;

If Date>=%Scan(&dates,&i.,%Quote(,)) Then Stop;

Remove;

Run;

*/

%foreign_macro(

input_data=&input_data.,

output_data=output&i.,

date=%Scan(&dates.,&i.,%Quote(,))

);

%End;

%Mend;

%my_macro(

input_data=very_large,

dates=%str("09-JAN-2013"d,"11-JAN-2013"d)

);

Nachricht wurde geändert durch: Jörg Honnacker

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

How about using FIRSTOBS to select your starting observation?

This PROC step prints the data set STUDY beginning with observation 20:

proc print data=study(firstobs=20); run;

This SET statement uses both FIRSTOBS= and OBS= to read-only observations 5 through 10 from the data set STUDY. Data set NEW contains six observations.

data new; set study(firstobs=5 obs=10); run;

Also, a technique I used when dealing with large data sets is to process and cumulate the results record by record or group by group as needed. Here is an example:

%MACRO SMALLSPACE(Parameters) ;

data one_observation ;

set big_data ;

if or where data equals parameters ;

get records and do all needed processing with selections

output a single record to one_observation with results

data final_results ;

set final_results

one_observation ;

%END ;

This saves work space since each loop extracts and saves only needed data but increases processing time because you look at the large data set repeatedly. You can change the macro parameters and call as needed:

%SMALLSPACE (CAR SHOP, 1990) ;

%SMALLSPACE (FOOD STORE, 2000) ;

The final data will have all the results and needed information, any new or changed parameter need only be called in the MACRO statement, instead of hard coding something.

Just an idea depending on your situation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Is the large data set sorted by date? Can it be indexed by date?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Most of the time when splitting up source data sets with iteration through sub-sets, something with the design is wrong. Most of the time a design which uses by group processing is the way to go.

But o.k., let's assume your case is one of these rare exceptions. Starting with your code the change with the lowest impact which could eventually make a big difference, is to define an index as done below.

Data very_large;

Attrib Date Informat=date11. Format=date11.;

do date= "09-JAN-2013"d to "11-JAN-2016"d;

do vars=1 to 1000;

output;

end;

end;

run;

proc datasets library=work;

modify very_large;

index create Date;

run;

quit;

%Macro foreign_macro(

input_data=,

output_data=,

date=

);

Data &output_data.;

Set &input_data.

(where=(date=&date.))

;

If Date>&date. Then Stop;

If Date=&date. Then Do;

Vars=Vars/2;

Output;

End;

Run;

%MEND;

%Macro my_macro(input_data=,dates=);

%Let dates_num=%eval(%sysfunc(count(%str(&dates.),%str(,)))+1);

%DO i=1 %TO &dates_num.;

%foreign_macro(

input_data=&input_data.,

output_data=output&i.,

date=%Scan(&dates.,&i.,%Quote(,))

);

%End;

%Mend;

%my_macro(

input_data=very_large,

dates=%str("09-JAN-2013"d,"11-JAN-2016"d)

);

You've posted in your code the following syntax:

Attrib Date Length=4 Informat=date11. Format=date11.;

Attrib Vars Length=3;

That you can define a length for numeric variable is a left over from long times ago when one had to fight for every single byte.

In today's time: Please NEVER EVER define a length for numeric SAS variables. It's not worth it but it can lead to unexpected results.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Patrick, what is the reasoning behind saying SAS numeric variables should never have a length defined in todays environment?

The situations I have seen it used are similar to its use here. To save data space in large data sets some numerics are defined with less than 8 bytes. For example, if SEX_N can only be 0,1 or 2 by rule does it make sense to keep all the the unneeded bytes? In this case I think 2 bytes would suffice so 6 bytes are "wasted".

With data sets of hundreds of millions of records this is a lot of space to hold in data storage.

I have frequently been given specs that called for reduced byte sizes. Under what circumstances should I argue that these specs be changed to the default size of 8?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

"never ever" was eventually a bit too strong a term but I'd still say that you need very good reason for a length other than 8.

I've seen too many people missunderstanding what this length statement means.

Your example with SEX_N is of course a case where one could define a length of 3 with very low risk that this ever goes wrong - but then I'd ask why not define a character variable with a length of 1 instead (yes, I know, some stats procedures require numeric indicator variables).

I'm normally less concerned about storage (where you could use compress=binary or may-be your storage facility uses anyway hardware compression) than performance - and there a smaller record size will have a positive impact.

With data sets of hundreds of millions of records this is a lot of space to hold in data storage.

These are then volumes where I normally try to argue that SAS isn't a data base and that such volumes belong into a database - and there you've got many more data types you can use.

So o.k. there might be cases where using a length other than 8 is justified - but I'd first verify that there aren't "better" options before going for it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

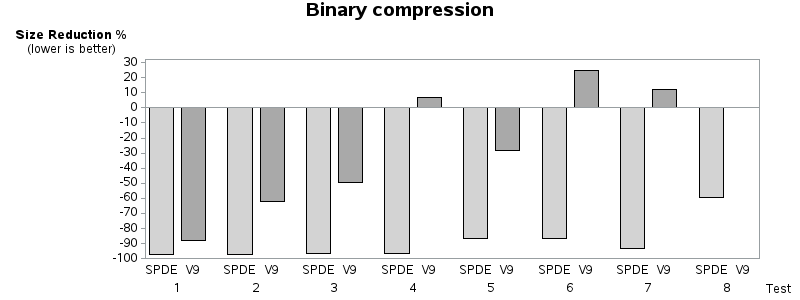

@Kow For such high volumes, I would very seriously consider storing the data using the SPDE engine and binary compression, rather than the V9 engine.

Here is a graph taken from

https://www.amazon.com/High-Performance-SAS-Coding-Christian-Graffeuille/dp/1512397490

that shows how powerful the SPDE binary compression is.

Test case #1 uses all variables with the exact same values. That's the only case where the V9 engine approaches the efficiency of the SPDE engine, but it is a totally unrealistic case. For every other data pattern, the SPDE copmpression stays at around 90% compression rate, which is incredibly good. The last case (#8) is for a table with only one numeric variable and all observations are different, and SPDE still manages a compression ratio greater than 50%.

Your processing times should drop too.

To go back to the question about numeric lengths, note that tests #2 and #7 are the same except that #2 uses numeric variables with length=8 while #7 uses length=3.

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.