- Re: HASH tables in SAS Viya with CAS processing.


Hello,

I am trying to repurpose SAS code for use in SAS Viya with CAS.

Can hash tables be integrated with CAS, so that processing is multithreaded and intermediate results are stored in CAS memory?

When I run SAS 9.4 hash table code with a CAS library referenced in the SET statement, hash_table.output(dataset:'out'); creates a CAS table, casuser.out. However, this table contains junk data. Character variables look something like this:

|

�����a�@��

|

���S�@�����

|

All dates are set to 1960 and numeric values are zeros.

When debugging, I can see that the CAS table is read correctly.

Does CAS even support hash tables? I could not find anything in the documentation.

Thank you.

Accepted Solutions


I can replicate this behavior except I get 100009 rows (usually):

NOTE: Running DATA step in Cloud Analytic Services.

NOTE: The DATA step will run in multiple threads.

NOTE: There were 200000 observations read from the table TEST in caslib CASUSER.

NOTE: The table out in caslib CASUSER has 100009 observations and 7 variables.

I think what is happening here is a timing issue. I updated the hash definition to include the threadid and hostname both when a row is added to the hash and when it is replaced:

data _null_;

length hostname_replace hostname_add $50;

if _n_=1 then do;

dcl hash h(ordered:"A");

h.defineKey("id");

h.defineData("id","var","var_c", "threadid_add", "threadid_replace", "hostname_add", "hostname_replace");

h.defineDone();

call missing(threadid_replace, threadid_add, hostname_replace, hostname_add);

end;

set casuser.test end=lr;

*by id;

if h.find()=0 then do;

var=sum(var,var_n);

threadid_replace = _threadid_;

hostname_replace = _hostname_;

h.replace();

end;

else do;

var=var_n;

threadid_add = _threadid_;

hostname_add = _hostname_;

h.add();

end;

if lr then h.output(dataset:'out');

run;

data dup_id_rows;

set casuser.out;

by id;

if not (first.id and last.id);

run;

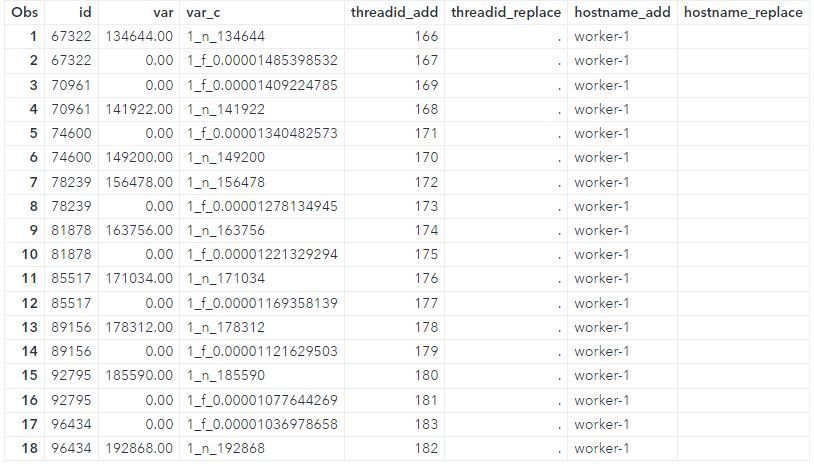

The dup_id_rows dataset contains the 18 rows associated with the 9 "extra" ids. In each case, the id was added to the hash twice by a separate thread and never replaced.

There is no locking of the hash table going on behind the scenes, so I think these are instances where the second thread checked the hash before the first thread's add was completed.
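To see how the duplicated ids spread across threads and hosts, a frequency table over the diagnostic columns can help. This is only a minimal sketch: it assumes the dup_id_rows dataset created by the step above and the column names used in defineData, and it is not part of the original test.

```sas
/* Sketch: count duplicated-id rows per adding host and thread.   */
/* Assumes WORK.DUP_ID_ROWS was created by the DATA step above.   */
proc freq data=dup_id_rows;
   tables hostname_add*threadid_add / list missing;
run;
```

If the duplicates come from different threads handling the same id, each duplicated id should show up under more than one hostname/thread combination.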

The issue is not as pronounced as it could be, in this particular case, because the casuser.test table is not distributed across all the worker nodes. When it was initially created, the log said:

NOTE: Running DATA step in Cloud Analytic Services.

NOTE: The DATA step has no input data set and will run in a single thread.

It can be distributed by running table.partition:

proc cas;

table.partition / casout={caslib="casuser", name="test", replace=true} table={caslib="casuser", name="test"} ;

table.tabledetails / caslib="casuser" name="test" level="node";

table.tableinfo / table="test";

quit;

My system has 4 workers, so the output of table.tabledetails now shows 50k rows on each node instead of 200k on one node. When I run the hash DATA step there are now 100088 rows due to more threads at work:

NOTE: Running DATA step in Cloud Analytic Services.

NOTE: The DATA step will run in multiple threads.

NOTE: There were 200000 observations read from the table TEST in caslib CASUSER.

NOTE: The table out in caslib CASUSER has 100088 observations and 7 variables.

To avoid this and still run multi-threaded, we can make all the rows associated with a given ID be processed by the same thread. This can be done by adding BY ID; to the DATA step. I have it in the above code but commented out. When I uncomment and run, there are 100000 rows:

NOTE: Running DATA step in Cloud Analytic Services.

NOTE: The DATA step will run in multiple threads.

NOTE: There were 200000 observations read from the table TEST in caslib CASUSER.

NOTE: The table out in caslib CASUSER has 100000 observations and 7 variables.
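A quick way to confirm that each id appears only once in the result is to compare the row count with the number of distinct ids. This is a minimal sketch, assuming the output table is casuser.out as above; PROC SQL reads the table through the CAS libref here, which is reasonable for a table of this size.

```sas
/* Sketch: compare total rows with distinct ids in the hash output. */
/* Assumes the CASUSER libref and the OUT table from the step above. */
proc sql;
   select count(*)           as n_rows,
          count(distinct id) as n_ids
   from casuser.out;
quit;
```

If the two numbers match, no id is duplicated.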


The documentation of the DECLARE statement shows it is also of type CAS.

Please post the complete log of the step creating the dataset.


In the documentation, the DECLARE example reads from the WORK library, not from a CAS library.

*create table with duplicate IDs, numeric and character variables;

data test;

do id=1 to 100000 ;

var_n=id*2;

var_c=cats(_N_,"_n_",var_n);

output;

var_n=1/id;

var_c=cats(_N_,"_f_",var_n);

output;

end;

run;

*use Hash for aggregation by ID;

data _null_;

if _n_=1 then do;

dcl hash h(ordered:"A");

h.defineKey("id");

h.defineData("id","var","var_c");

h.defineDone();

end;

set test end=lr;

if h.find()=0 then do;

var=sum(var,var_n);

h.replace();

end;

else do;

var=var_n;

h.add();

end;

if lr then h.output(dataset:'out');

run;

When the above program runs on a work server, the log reports 100000 rows, which is exactly what I expect, because the first DATA step writes two rows for each id.

libname casuser cas;

data casuser.test;

do id=1 to 100000 ;

var_n=id*2;

var_c=cats(_N_,"_n_",var_n);

output;

var_n=1/id;

var_c=cats(_N_,"_f_",var_n);

output;

end;

run;

data _null_;

if _n_=1 then do;

dcl hash h(ordered:"A");

h.defineKey("id");

h.defineData("id","var","var_c");

h.defineDone();

end;

set casuser.test end=lr;

if h.find()=0 then do;

var=sum(var,var_n);

h.replace();

end;

else do;

var=var_n;

h.add();

end;

if lr then h.output(dataset:'out');

run;

Running the above against casuser.test, a table in a CAS library, produces 5 extra entries.

The above is a very basic sample; I am using iterators and multiple hashes. The code works in SAS processing on a single workstation. Only when I start using CAS are the row totals way off and the data meaningless.


Thank you for getting to the solution!
