
- Re: Using Cut Off Node and Interpreting Predicted Probabilities.


Hi All,

This continues my earlier post on whether the original priors (2:98 for Y and N, not 50:50) have to be used when I model on a balanced dataset in SAS EM. Thanks for your responses. I now understand that the original priors have to be used to remove the "bias" introduced by oversampling the minority instances. Afterwards, I can apply the results to the same dataset to check how many instances originally tagged as "Y" have been correctly classified.
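To make the prior correction concrete, here is a minimal Python sketch of the usual Bayes rescaling of a posterior from an oversampled training set back to the population priors. The function name and arguments are my own for illustration, and I am not claiming this is exactly what SAS EM does internally; it only shows the arithmetic behind "using the original priors".

```python
def adjust_posterior(p_sample, prior_event, sample_event_rate):
    """Rescale a posterior estimated on an oversampled set to the population priors.

    p_sample          -- P(Y | x) as estimated on the balanced (oversampled) data
    prior_event       -- event proportion in the population (e.g. 0.02)
    sample_event_rate -- event proportion in the training sample (e.g. 0.5)
    """
    # Ratio of population prior to sample proportion, per class
    w_event = prior_event / sample_event_rate
    w_non_event = (1.0 - prior_event) / (1.0 - sample_event_rate)

    numerator = p_sample * w_event
    return numerator / (numerator + (1.0 - p_sample) * w_non_event)


# 50:50 training sample, 2:98 population priors (hypothetical posteriors)
for p in (0.5, 0.9):
    print(p, round(adjust_posterior(p, 0.02, 0.5), 4))
```

A posterior of 0.5 on the balanced sample maps back to the 2% base rate, which is why a 0.5 threshold applied to prior-adjusted probabilities flags very few instances as "Y".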

The points I am not able to understand in this analysis are:

1) There is another strategy for handling class imbalance, cost-sensitive learning, which uses the profit/loss matrix in the decision processing option for the input dataset. I specify the profit/loss/weights for each outcome (TP, TN, FP, FN) there. No oversampling is used. Decision trees are supposed to choose the decision that yields the maximum profit, taking into account the profit/weights as well as the proportions of Y and N at each node. But it seems that SAS EM has again used 0.5 as the decision threshold, so only 75 of the 1840 minority-class instances are counted as TP.
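As an illustration of the max-profit rule described in 1), the sketch below picks the decision with the highest expected profit for a given event probability and derives the break-even probability implied by a profit matrix. The profit values are hypothetical (they are not the ones from my project), and the function names are mine.

```python
def best_decision(p_event, profit):
    """Return the decision with the highest expected profit, plus all expectations.

    profit[decision][actual] is the profit of taking `decision` when the
    true class is `actual`; both keys are "Y" or "N".
    """
    expected = {
        decision: p_event * row["Y"] + (1.0 - p_event) * row["N"]
        for decision, row in profit.items()
    }
    return max(expected, key=expected.get), expected


def break_even_threshold(profit):
    """Event probability above which deciding "Y" beats deciding "N"."""
    gain_tp = profit["Y"]["Y"] - profit["N"]["Y"]  # benefit of catching an event
    cost_fp = profit["N"]["N"] - profit["Y"]["N"]  # cost of a false alarm
    return cost_fp / (cost_fp + gain_tp)


# Hypothetical profit matrix: TP = 9, FP = -1, FN = 0, TN = 0
profit = {
    "Y": {"Y": 9.0, "N": -1.0},
    "N": {"Y": 0.0, "N": 0.0},
}

print(break_even_threshold(profit))
print(best_decision(0.14, profit)[0])
print(best_decision(0.05, profit)[0])
```

With such a matrix the implied threshold is far below 0.5, so a profit-based decision can be "Y" for a node whose predicted probability is well under 0.5, while a classification based purely on the larger posterior stays "N".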

2) In the exported dataset of the above decision tree model, I compared two pairs of flags, (From_Target_Flag, Into_Target_Flag) and (Actual Target Flag, Decision_Target_Flag), and found the following:

a) The TP count of 75 mentioned above comes from only 2 nodes of the decision tree, where From_Target_Flag = Y and Into_Target_Flag = Y. Both nodes have a predicted probability > 0.5 (one has 0.53 and the other 0.9).

b) A TP count of 1271 (possibly a proxy) comes from around 7 nodes of the decision tree, where Actual Target Flag = Y and Decision_Target_Flag = Y. These nodes have predicted probabilities ranging from 0.14 to 0.9.
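The two counts in a) and b) can be reproduced with a simple cross-tabulation. The sketch below uses a handful of made-up rows and shortened, hypothetical column names (`actual`, `into`, `decision`) standing in for the flags in the exported dataset; it only shows why the two definitions of TP can disagree.

```python
# Made-up scored rows; "into" is the class with the larger posterior,
# "decision" is the profit-based decision.
rows = [
    {"actual": "Y", "into": "Y", "decision": "Y", "p_event": 0.90},
    {"actual": "Y", "into": "N", "decision": "Y", "p_event": 0.14},
    {"actual": "Y", "into": "N", "decision": "N", "p_event": 0.05},
    {"actual": "N", "into": "N", "decision": "Y", "p_event": 0.20},
    {"actual": "N", "into": "N", "decision": "N", "p_event": 0.01},
]


def count_tp(rows, predicted_key):
    """Count rows whose actual class and chosen prediction column are both "Y"."""
    return sum(
        1 for r in rows if r["actual"] == "Y" and r[predicted_key] == "Y"
    )


tp_by_classification = count_tp(rows, "into")      # posterior-based, as in a)
tp_by_decision = count_tp(rows, "decision")        # profit-based, as in b)

print(tp_by_classification, tp_by_decision)
```

The second row is the interesting case: it has a predicted probability of 0.14, so it is classified "N", yet the profit-based decision is "Y", and it counts as a TP only under definition b).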

So, to summarize: which is the correct approach for counting TP in question 2), and, if necessary, how do I use the Cutoff node to select a smaller decision threshold for question 1)?

Also attached is the table from the Cutoff node, run after the decision tree with the default cutoff value of 0.5.

Thanks and Regards,

Aditya.

| Cut Off | Cumulative Expected Profit | Count of TP | Count of FP | Count of TN | Count of FN | Count of Predicted Positives | Count of Predicted Negatives | Count of FP and FN | Count of TP and TN | Overall Classification Rate | Change Count TP | Change Count FP | TP Rate | TN Rate | FP Rate | Event Precision Rate | Non Event Precision Rate | Overall Precision Rate | DataRole |

| 0.99 | -4.92954348 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.99 | -7.896983998 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.98 | -9.859086961 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.98 | -15.793968 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.97 | -14.78863044 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.97 | -23.69095199 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.96 | -19.71817392 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.96 | -31.58793599 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.95 | -24.6477174 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.95 | -39.48491999 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.94 | -29.57726088 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.94 | -47.38190399 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.93 | -34.50680436 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.93 | -55.27888799 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.92 | -39.43634784 | 0 | 0 | 63042 | 1286 | 0 | 64328 | 1286 | 63042 | 98.000871 | 0 | 0 | 0 | 100 | 0 | NaN | 98.00087054 | NaN | TRAIN |

| 0.92 | -63.17587199 | 0 | 0 | 27020 | 552 | 0 | 27572 | 552 | 27020 | 97.997969 | 0 | 0 | 0 | 100 | 0 | NaN | 97.99796895 | NaN | VALIDATE |

| 0.91 | -44.36589132 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 10 | 2 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.91 | -71.07285598 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 5 | 2 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.9 | -49.2954348 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.9 | -78.96983998 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.89 | -54.22497828 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.89 | -86.86682398 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.88 | -59.15452176 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.88 | -94.76380798 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.87 | -64.08406524 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.87 | -102.660792 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.86 | -69.01360873 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.86 | -110.557776 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.85 | -73.94315221 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.85 | -118.45476 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.84 | -78.87269569 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.84 | -126.351744 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.83 | -83.80223917 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.83 | -134.248728 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.82 | -88.73178265 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.82 | -142.145712 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.81 | -93.66132613 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.81 | -150.042696 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.8 | -98.59086961 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.8 | -157.93968 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.79 | -103.5204131 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.79 | -165.836664 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.78 | -108.4499566 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.78 | -173.733648 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.77 | -113.3795 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.77 | -181.630632 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.76 | -118.3090435 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.76 | -189.527616 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.75 | -123.238587 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.75 | -197.4246 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.74 | -128.1681305 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.74 | -205.321584 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.73 | -133.097674 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.73 | -213.218568 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.72 | -138.0272175 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.72 | -221.115552 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.71 | -142.9567609 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.71 | -229.0125359 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.7 | -147.8863044 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.7 | -236.9095199 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.69 | -152.8158479 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.69 | -244.8065039 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.68 | -157.7453914 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.68 | -252.7034879 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.67 | -162.6749349 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.67 | -260.6004719 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.66 | -167.6044783 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.66 | -268.4974559 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.65 | -172.5340218 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.65 | -276.3944399 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.64 | -177.4635653 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.64 | -284.2914239 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.63 | -182.3931088 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.63 | -292.1884079 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.62 | -187.3226523 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.62 | -300.0853919 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.61 | -192.2521957 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.61 | -307.9823759 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.6 | -197.1817392 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.6 | -315.8793599 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.59 | -202.1112827 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.59 | -323.7763439 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.58 | -207.0408262 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.58 | -331.6733279 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.57 | -211.9703697 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.57 | -339.5703119 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.56 | -216.8999131 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.56 | -347.4672959 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.55 | -221.8294566 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.55 | -355.3642799 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.54 | -226.7590001 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.54 | -363.2612639 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.53 | -231.6885436 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.53 | -371.1582479 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.52 | -236.6180871 | 10 | 2 | 63040 | 1276 | 12 | 64316 | 1278 | 63050 | 98.013307 | 0 | 0 | 0.777605 | 99.996828 | 0.00317249 | 83.33333333 | 98.01604577 | 90.67468955 | TRAIN |

| 0.52 | -379.0552319 | 5 | 2 | 27018 | 547 | 7 | 27565 | 549 | 27023 | 98.00885 | 0 | 0 | 0.9057971 | 99.992598 | 0.00740192 | 71.42857143 | 98.01559949 | 84.72208546 | VALIDATE |

| 0.51 | -241.5476305 | 55 | 89 | 62953 | 1231 | 144 | 64184 | 1320 | 63008 | 97.948016 | 45 | 87 | 4.2768274 | 99.858824 | 0.14117572 | 38.19444444 | 98.08207653 | 68.13826049 | TRAIN |

| 0.51 | -386.9522159 | 20 | 46 | 26974 | 532 | 66 | 27506 | 578 | 26994 | 97.90367 | 15 | 44 | 3.6231884 | 99.829756 | 0.17024426 | 30.3030303 | 98.06587654 | 64.18445342 | VALIDATE |

| 0.5 | -246.477174 | 55 | 89 | 62953 | 1231 | 144 | 64184 | 1320 | 63008 | 97.948016 | 0 | 0 | 4.2768274 | 99.858824 | 0.14117572 | 38.19444444 | 98.08207653 | 68.13826049 | TRAIN |

| 0.5 | -394.8491999 | 20 | 46 | 26974 | 532 | 66 | 27506 | 578 | 26994 | 97.90367 | 0 | 0 | 3.6231884 | 99.829756 | 0.17024426 | 30.3030303 | 98.06587654 | 64.18445342 | VALIDATE |

| 0.49 | -251.4067175 | 55 | 89 | 62953 | 1231 | 144 | 64184 | 1320 | 63008 | 97.948016 | 0 | 0 | 4.2768274 | 99.858824 | 0.14117572 | 38.19444444 | 98.08207653 | 68.13826049 | TRAIN |

| 0.49 | -402.7461839 | 20 | 46 | 26974 | 532 | 66 | 27506 | 578 | 26994 | 97.90367 | 0 | 0 | 3.6231884 | 99.829756 | 0.17024426 | 30.3030303 | 98.06587654 | 64.18445342 | VALIDATE |

| 0.48 | -256.336261 | 55 | 89 | 62953 | 1231 | 144 | 64184 | 1320 | 63008 | 97.948016 | 0 | 0 | 4.2768274 | 99.858824 | 0.14117572 | 38.19444444 | 98.08207653 | 68.13826049 | TRAIN |

| 0.48 | -410.6431679 | 20 | 46 | 26974 | 532 | 66 | 27506 | 578 | 26994 | 97.90367 | 0 | 0 | 3.6231884 | 99.829756 | 0.17024426 | 30.3030303 | 98.06587654 | 64.18445342 | VALIDATE |

| 0.47 | -261.2658045 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 460 | 1021 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.47 | -418.5401519 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 199 | 418 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.46 | -266.1953479 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.46 | -426.4371359 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.45 | -271.1248914 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.45 | -434.3341199 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.44 | -276.0544349 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.44 | -442.2311039 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.43 | -280.9839784 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.43 | -450.1280879 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.42 | -285.9135219 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.42 | -458.0250719 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.41 | -290.8430653 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.41 | -465.9220559 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.4 | -295.7726088 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.4 | -473.8190399 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.39 | -300.7021523 | 515 | 1110 | 61932 | 771 | 1625 | 62703 | 1881 | 62447 | 97.075923 | 0 | 0 | 40.046656 | 98.239269 | 1.76073094 | 31.69230769 | 98.77039376 | 65.23135073 | TRAIN |

| 0.39 | -481.7160239 | 219 | 464 | 26556 | 333 | 683 | 26889 | 797 | 26775 | 97.109386 | 0 | 0 | 39.673913 | 98.282754 | 1.71724648 | 32.06442167 | 98.76157537 | 65.41299852 | VALIDATE |

| 0.38 | -305.6316958 | 534 | 1172 | 61870 | 752 | 1706 | 62622 | 1924 | 62404 | 97.009078 | 19 | 62 | 41.524106 | 98.140922 | 1.85907807 | 31.30128957 | 98.79914407 | 65.05021682 | TRAIN |

| 0.38 | -489.6130079 | 228 | 479 | 26541 | 324 | 707 | 26865 | 803 | 26769 | 97.087625 | 9 | 15 | 41.304348 | 98.227239 | 1.77276092 | 32.24893918 | 98.79396985 | 65.52145451 | VALIDATE |

| 0.37 | -310.5612393 | 534 | 1172 | 61870 | 752 | 1706 | 62622 | 1924 | 62404 | 97.009078 | 0 | 0 | 41.524106 | 98.140922 | 1.85907807 | 31.30128957 | 98.79914407 | 65.05021682 | TRAIN |

| 0.37 | -497.5099919 | 228 | 479 | 26541 | 324 | 707 | 26865 | 803 | 26769 | 97.087625 | 0 | 0 | 41.304348 | 98.227239 | 1.77276092 | 32.24893918 | 98.79396985 | 65.52145451 | VALIDATE |

| 0.36 | -315.4907827 | 534 | 1172 | 61870 | 752 | 1706 | 62622 | 1924 | 62404 | 97.009078 | 0 | 0 | 41.524106 | 98.140922 | 1.85907807 | 31.30128957 | 98.79914407 | 65.05021682 | TRAIN |

| 0.36 | -505.4069759 | 228 | 479 | 26541 | 324 | 707 | 26865 | 803 | 26769 | 97.087625 | 0 | 0 | 41.304348 | 98.227239 | 1.77276092 | 32.24893918 | 98.79396985 | 65.52145451 | VALIDATE |

| 0.35 | -320.4203262 | 534 | 1172 | 61870 | 752 | 1706 | 62622 | 1924 | 62404 | 97.009078 | 0 | 0 | 41.524106 | 98.140922 | 1.85907807 | 31.30128957 | 98.79914407 | 65.05021682 | TRAIN |

| 0.35 | -513.3039599 | 228 | 479 | 26541 | 324 | 707 | 26865 | 803 | 26769 | 97.087625 | 0 | 0 | 41.304348 | 98.227239 | 1.77276092 | 32.24893918 | 98.79396985 | 65.52145451 | VALIDATE |

| 0.34 | -325.3498697 | 534 | 1172 | 61870 | 752 | 1706 | 62622 | 1924 | 62404 | 97.009078 | 0 | 0 | 41.524106 | 98.140922 | 1.85907807 | 31.30128957 | 98.79914407 | 65.05021682 | TRAIN |

| 0.34 | -521.2009439 | 228 | 479 | 26541 | 324 | 707 | 26865 | 803 | 26769 | 97.087625 | 0 | 0 | 41.304348 | 98.227239 | 1.77276092 | 32.24893918 | 98.79396985 | 65.52145451 | VALIDATE |

| 0.33 | -330.2794132 | 546 | 1220 | 61822 | 740 | 1766 | 62562 | 1960 | 62368 | 96.953115 | 12 | 48 | 42.457232 | 98.064782 | 1.93521779 | 30.91732729 | 98.81717336 | 64.86725033 | TRAIN |

| 0.33 | -529.0979279 | 233 | 509 | 26511 | 319 | 742 | 26830 | 828 | 26744 | 96.996953 | 5 | 30 | 42.210145 | 98.11621 | 1.88378979 | 31.40161725 | 98.81103243 | 65.10632484 | VALIDATE |

| 0.32 | -335.2089567 | 595 | 1424 | 61618 | 691 | 2019 | 62309 | 2115 | 62213 | 96.712163 | 49 | 204 | 46.267496 | 97.741188 | 2.25881159 | 29.47003467 | 98.89101093 | 64.1805228 | TRAIN |

| 0.32 | -536.9949119 | 255 | 621 | 26399 | 297 | 876 | 26696 | 918 | 26654 | 96.670535 | 22 | 112 | 46.195652 | 97.701702 | 2.29829756 | 29.10958904 | 98.88747378 | 63.99853141 | VALIDATE |

| 0.31 | -340.1385001 | 595 | 1424 | 61618 | 691 | 2019 | 62309 | 2115 | 62213 | 96.712163 | 0 | 0 | 46.267496 | 97.741188 | 2.25881159 | 29.47003467 | 98.89101093 | 64.1805228 | TRAIN |

| 0.31 | -544.8918959 | 255 | 621 | 26399 | 297 | 876 | 26696 | 918 | 26654 | 96.670535 | 0 | 0 | 46.195652 | 97.701702 | 2.29829756 | 29.10958904 | 98.88747378 | 63.99853141 | VALIDATE |

| 0.3 | -345.0680436 | 595 | 1424 | 61618 | 691 | 2019 | 62309 | 2115 | 62213 | 96.712163 | 0 | 0 | 46.267496 | 97.741188 | 2.25881159 | 29.47003467 | 98.89101093 | 64.1805228 | TRAIN |

| 0.3 | -552.7888799 | 255 | 621 | 26399 | 297 | 876 | 26696 | 918 | 26654 | 96.670535 | 0 | 0 | 46.195652 | 97.701702 | 2.29829756 | 29.10958904 | 98.88747378 | 63.99853141 | VALIDATE |

| 0.29 | -349.9975871 | 595 | 1424 | 61618 | 691 | 2019 | 62309 | 2115 | 62213 | 96.712163 | 0 | 0 | 46.267496 | 97.741188 | 2.25881159 | 29.47003467 | 98.89101093 | 64.1805228 | TRAIN |

| 0.29 | -560.6858639 | 255 | 621 | 26399 | 297 | 876 | 26696 | 918 | 26654 | 96.670535 | 0 | 0 | 46.195652 | 97.701702 | 2.29829756 | 29.10958904 | 98.88747378 | 63.99853141 | VALIDATE |

| 0.28 | -354.9271306 | 595 | 1424 | 61618 | 691 | 2019 | 62309 | 2115 | 62213 | 96.712163 | 0 | 0 | 46.267496 | 97.741188 | 2.25881159 | 29.47003467 | 98.89101093 | 64.1805228 | TRAIN |

| 0.28 | -568.5828479 | 255 | 621 | 26399 | 297 | 876 | 26696 | 918 | 26654 | 96.670535 | 0 | 0 | 46.195652 | 97.701702 | 2.29829756 | 29.10958904 | 98.88747378 | 63.99853141 | VALIDATE |

| 0.27 | -359.8566741 | 595 | 1424 | 61618 | 691 | 2019 | 62309 | 2115 | 62213 | 96.712163 | 0 | 0 | 46.267496 | 97.741188 | 2.25881159 | 29.47003467 | 98.89101093 | 64.1805228 | TRAIN |

| 0.27 | -576.4798319 | 255 | 621 | 26399 | 297 | 876 | 26696 | 918 | 26654 | 96.670535 | 0 | 0 | 46.195652 | 97.701702 | 2.29829756 | 29.10958904 | 98.88747378 | 63.99853141 | VALIDATE |

| 0.26 | -364.7862175 | 625 | 1597 | 61445 | 661 | 2222 | 62106 | 2258 | 62070 | 96.489864 | 30 | 173 | 48.600311 | 97.466768 | 2.53323181 | 28.12781278 | 98.93569059 | 63.53175169 | TRAIN |

| 0.26 | -584.3768159 | 265 | 695 | 26325 | 287 | 960 | 26612 | 982 | 26590 | 96.438416 | 10 | 74 | 48.007246 | 97.427831 | 2.57216876 | 27.60416667 | 98.92153916 | 63.26285291 | VALIDATE |

| 0.25 | -369.715761 | 683 | 1937 | 61105 | 603 | 2620 | 61708 | 2540 | 61788 | 96.051486 | 58 | 340 | 53.11042 | 96.927445 | 3.0725548 | 26.06870229 | 99.02281714 | 62.54575971 | TRAIN |

| 0.25 | -592.2737999 | 289 | 829 | 26191 | 263 | 1118 | 26454 | 1092 | 26480 | 96.03946 | 24 | 134 | 52.355072 | 96.931902 | 3.06809771 | 25.84973166 | 99.00582143 | 62.42777654 | VALIDATE |

| 0.24 | -374.6453045 | 683 | 1937 | 61105 | 603 | 2620 | 61708 | 2540 | 61788 | 96.051486 | 0 | 0 | 53.11042 | 96.927445 | 3.0725548 | 26.06870229 | 99.02281714 | 62.54575971 | TRAIN |

| 0.24 | -600.1707839 | 289 | 829 | 26191 | 263 | 1118 | 26454 | 1092 | 26480 | 96.03946 | 0 | 0 | 52.355072 | 96.931902 | 3.06809771 | 25.84973166 | 99.00582143 | 62.42777654 | VALIDATE |

| 0.23 | -379.574848 | 683 | 1937 | 61105 | 603 | 2620 | 61708 | 2540 | 61788 | 96.051486 | 0 | 0 | 53.11042 | 96.927445 | 3.0725548 | 26.06870229 | 99.02281714 | 62.54575971 | TRAIN |

| 0.23 | -608.0677679 | 289 | 829 | 26191 | 263 | 1118 | 26454 | 1092 | 26480 | 96.03946 | 0 | 0 | 52.355072 | 96.931902 | 3.06809771 | 25.84973166 | 99.00582143 | 62.42777654 | VALIDATE |

| 0.22 | -384.5043915 | 683 | 1937 | 61105 | 603 | 2620 | 61708 | 2540 | 61788 | 96.051486 | 0 | 0 | 53.11042 | 96.927445 | 3.0725548 | 26.06870229 | 99.02281714 | 62.54575971 | TRAIN |

| 0.22 | -615.9647519 | 289 | 829 | 26191 | 263 | 1118 | 26454 | 1092 | 26480 | 96.03946 | 0 | 0 | 52.355072 | 96.931902 | 3.06809771 | 25.84973166 | 99.00582143 | 62.42777654 | VALIDATE |

| 0.21 | -389.4339349 | 685 | 1952 | 61090 | 601 | 2637 | 61691 | 2553 | 61775 | 96.031277 | 2 | 15 | 53.265941 | 96.903652 | 3.09634847 | 25.97648843 | 99.02578982 | 62.50113913 | TRAIN |

| 0.21 | -623.8617359 | 290 | 835 | 26185 | 262 | 1125 | 26447 | 1097 | 26475 | 96.021326 | 1 | 6 | 52.536232 | 96.909697 | 3.09030348 | 25.77777778 | 99.00933943 | 62.39355861 | VALIDATE |

| 0.2 | -394.3634784 | 685 | 1952 | 61090 | 601 | 2637 | 61691 | 2553 | 61775 | 96.031277 | 0 | 0 | 53.265941 | 96.903652 | 3.09634847 | 25.97648843 | 99.02578982 | 62.50113913 | TRAIN |

| 0.2 | -631.7587199 | 290 | 835 | 26185 | 262 | 1125 | 26447 | 1097 | 26475 | 96.021326 | 0 | 0 | 52.536232 | 96.909697 | 3.09030348 | 25.77777778 | 99.00933943 | 62.39355861 | VALIDATE |

| 0.19 | -399.2930219 | 693 | 2020 | 61022 | 593 | 2713 | 61615 | 2613 | 61715 | 95.938005 | 8 | 68 | 53.888025 | 96.795787 | 3.20421306 | 25.54367858 | 99.03757202 | 62.2906253 | TRAIN |

| 0.19 | -639.6557039 | 293 | 865 | 26155 | 259 | 1158 | 26414 | 1124 | 26448 | 95.923401 | 3 | 30 | 53.07971 | 96.798668 | 3.20133235 | 25.30224525 | 99.01945938 | 62.16085231 | VALIDATE |

| 0.18 | -404.2225654 | 817 | 3140 | 59902 | 469 | 3957 | 60371 | 3609 | 60719 | 94.38969 | 124 | 1120 | 63.530327 | 95.019194 | 4.98080645 | 20.64695476 | 99.22313694 | 59.93504585 | TRAIN |

| 0.18 | -647.5526879 | 346 | 1311 | 25709 | 206 | 1657 | 25915 | 1517 | 26055 | 94.498041 | 53 | 446 | 62.681159 | 95.148038 | 4.85196151 | 20.88111044 | 99.20509358 | 60.04310201 | VALIDATE |

| 0.17 | -409.1521089 | 817 | 3140 | 59902 | 469 | 3957 | 60371 | 3609 | 60719 | 94.38969 | 0 | 0 | 63.530327 | 95.019194 | 4.98080645 | 20.64695476 | 99.22313694 | 59.93504585 | TRAIN |

| 0.17 | -655.4496719 | 346 | 1311 | 25709 | 206 | 1657 | 25915 | 1517 | 26055 | 94.498041 | 0 | 0 | 62.681159 | 95.148038 | 4.85196151 | 20.88111044 | 99.20509358 | 60.04310201 | VALIDATE |

| 0.16 | -414.0816524 | 817 | 3140 | 59902 | 469 | 3957 | 60371 | 3609 | 60719 | 94.38969 | 0 | 0 | 63.530327 | 95.019194 | 4.98080645 | 20.64695476 | 99.22313694 | 59.93504585 | TRAIN |

| 0.16 | -663.3466559 | 346 | 1311 | 25709 | 206 | 1657 | 25915 | 1517 | 26055 | 94.498041 | 0 | 0 | 62.681159 | 95.148038 | 4.85196151 | 20.88111044 | 99.20509358 | 60.04310201 | VALIDATE |

| 0.15 | -419.0111958 | 821 | 3183 | 59859 | 465 | 4004 | 60324 | 3648 | 60680 | 94.329064 | 4 | 43 | 63.841369 | 94.950985 | 5.04901494 | 20.5044955 | 99.22916252 | 59.86682901 | TRAIN |

| 0.15 | -671.2436398 | 347 | 1325 | 25695 | 205 | 1672 | 25900 | 1530 | 26042 | 94.450892 | 1 | 14 | 62.862319 | 95.096225 | 4.90377498 | 20.75358852 | 99.20849421 | 59.98104136 | VALIDATE |

| 0.14 | -423.9407393 | 872 | 3820 | 59222 | 414 | 4692 | 59636 | 4234 | 60094 | 93.418107 | 51 | 637 | 67.807154 | 93.940548 | 6.05945243 | 18.58482523 | 99.30578845 | 58.94530684 | TRAIN |

| 0.14 | -679.1406238 | 375 | 1597 | 25423 | 177 | 1972 | 25600 | 1774 | 25798 | 93.565936 | 28 | 272 | 67.934783 | 94.089563 | 5.91043671 | 19.01622718 | 99.30859375 | 59.16241047 | VALIDATE |

| 0.13 | -428.8702828 | 872 | 3820 | 59222 | 414 | 4692 | 59636 | 4234 | 60094 | 93.418107 | 0 | 0 | 67.807154 | 93.940548 | 6.05945243 | 18.58482523 | 99.30578845 | 58.94530684 | TRAIN |

| 0.13 | -687.0376078 | 375 | 1597 | 25423 | 177 | 1972 | 25600 | 1774 | 25798 | 93.565936 | 0 | 0 | 67.934783 | 94.089563 | 5.91043671 | 19.01622718 | 99.30859375 | 59.16241047 | VALIDATE |

| 0.12 | -433.7998263 | 872 | 3820 | 59222 | 414 | 4692 | 59636 | 4234 | 60094 | 93.418107 | 0 | 0 | 67.807154 | 93.940548 | 6.05945243 | 18.58482523 | 99.30578845 | 58.94530684 | TRAIN |

| 0.12 | -694.9345918 | 375 | 1597 | 25423 | 177 | 1972 | 25600 | 1774 | 25798 | 93.565936 | 0 | 0 | 67.934783 | 94.089563 | 5.91043671 | 19.01622718 | 99.30859375 | 59.16241047 | VALIDATE |

| 0.11 | -438.7293698 | 888 | 4074 | 58968 | 398 | 4962 | 59366 | 4472 | 59856 | 93.048128 | 16 | 254 | 69.051322 | 93.537642 | 6.46235843 | 17.89600967 | 99.32958259 | 58.61279613 | TRAIN |

| 0.11 | -702.8315758 | 383 | 1720 | 25300 | 169 | 2103 | 25469 | 1889 | 25683 | 93.148847 | 8 | 123 | 69.384058 | 93.634345 | 6.36565507 | 18.21207798 | 99.33644823 | 58.77426311 | VALIDATE |

| 0.1 | -443.6589132 | 888 | 4074 | 58968 | 398 | 4962 | 59366 | 4472 | 59856 | 93.048128 | 0 | 0 | 69.051322 | 93.537642 | 6.46235843 | 17.89600967 | 99.32958259 | 58.61279613 | TRAIN |

| 0.1 | -710.7285598 | 383 | 1720 | 25300 | 169 | 2103 | 25469 | 1889 | 25683 | 93.148847 | 0 | 0 | 69.384058 | 93.634345 | 6.36565507 | 18.21207798 | 99.33644823 | 58.77426311 | VALIDATE |

| 0.09 | -448.5884567 | 888 | 4074 | 58968 | 398 | 4962 | 59366 | 4472 | 59856 | 93.048128 | 0 | 0 | 69.051322 | 93.537642 | 6.46235843 | 17.89600967 | 99.32958259 | 58.61279613 | TRAIN |

| 0.09 | -718.6255438 | 383 | 1720 | 25300 | 169 | 2103 | 25469 | 1889 | 25683 | 93.148847 | 0 | 0 | 69.384058 | 93.634345 | 6.36565507 | 18.21207798 | 99.33644823 | 58.77426311 | VALIDATE |

| 0.08 | -453.5180002 | 897 | 4280 | 58762 | 389 | 5177 | 59151 | 4669 | 59659 | 92.741885 | 9 | 206 | 69.751166 | 93.210875 | 6.78912471 | 17.32663705 | 99.34236108 | 58.33449906 | TRAIN |

| 0.08 | -726.5225278 | 386 | 1799 | 25221 | 166 | 2185 | 25387 | 1965 | 25607 | 92.873205 | 3 | 79 | 69.927536 | 93.341969 | 6.65803109 | 17.66590389 | 99.34612203 | 58.50601296 | VALIDATE |

| 0.07 | -458.4475437 | 897 | 4280 | 58762 | 389 | 5177 | 59151 | 4669 | 59659 | 92.741885 | 0 | 0 | 69.751166 | 93.210875 | 6.78912471 | 17.32663705 | 99.34236108 | 58.33449906 | TRAIN |

| 0.07 | -734.4195118 | 386 | 1799 | 25221 | 166 | 2185 | 25387 | 1965 | 25607 | 92.873205 | 0 | 0 | 69.927536 | 93.341969 | 6.65803109 | 17.66590389 | 99.34612203 | 58.50601296 | VALIDATE |

| 0.06 | -463.3770872 | 925 | 5113 | 57929 | 361 | 6038 | 58290 | 5474 | 58854 | 91.490486 | 28 | 833 | 71.92846 | 91.889534 | 8.11046604 | 15.31964227 | 99.38068279 | 57.35016253 | TRAIN |

| 0.06 | -742.3164958 | 397 | 2163 | 24857 | 155 | 2560 | 25012 | 2318 | 25254 | 91.59292 | 11 | 364 | 71.92029 | 91.994819 | 8.00518135 | 15.5078125 | 99.38029746 | 57.44405498 | VALIDATE |

| 0.05 | -468.3066306 | 1102 | 11308 | 51734 | 184 | 12410 | 51918 | 11492 | 52836 | 82.135307 | 177 | 6195 | 85.692068 | 82.062752 | 17.9372482 | 8.879935536 | 99.64559498 | 54.26276526 | TRAIN |

| 0.05 | -750.2134798 | 467 | 4841 | 22179 | 85 | 5308 | 22264 | 4926 | 22646 | 82.134049 | 70 | 2678 | 84.601449 | 82.083642 | 17.9163583 | 8.798040693 | 99.61821775 | 54.20812922 | VALIDATE |

| 0.04 | -473.2361741 | 1104 | 11394 | 51648 | 182 | 12498 | 51830 | 11576 | 52752 | 82.004726 | 2 | 86 | 85.847589 | 81.926335 | 18.0736652 | 8.833413346 | 99.64885202 | 54.24113268 | TRAIN |

| 0.04 | -758.1104638 | 467 | 4882 | 22138 | 85 | 5349 | 22223 | 4967 | 22605 | 81.985347 | 0 | 41 | 84.601449 | 81.931902 | 18.0680977 | 8.730603851 | 99.61751339 | 54.17405862 | VALIDATE |

| 0.03 | -478.1657176 | 1156 | 14391 | 48651 | 130 | 15547 | 48781 | 14521 | 49807 | 77.426626 | 52 | 2997 | 89.891135 | 77.172361 | 22.8276387 | 7.435518106 | 99.7335028 | 53.58451045 | TRAIN |

| 0.03 | -766.0074478 | 490 | 6135 | 20885 | 62 | 6625 | 20947 | 6197 | 21375 | 77.5243 | 23 | 1253 | 88.768116 | 77.294597 | 22.7054034 | 7.396226415 | 99.70401489 | 53.55012065 | VALIDATE |

| 0.02 | -483.0952611 | 1194 | 17693 | 45349 | 92 | 18887 | 45441 | 17785 | 46543 | 72.35263 | 38 | 3302 | 92.846034 | 71.934583 | 28.0654167 | 6.321808651 | 99.79753967 | 53.05967416 | TRAIN |

| 0.02 | -773.9044318 | 511 | 7539 | 19481 | 41 | 8050 | 19522 | 7580 | 19992 | 72.508342 | 21 | 1404 | 92.572464 | 72.098446 | 27.9015544 | 6.347826087 | 99.78998053 | 53.06890331 | VALIDATE |

| 0.01 | -488.0248046 | 1204 | 18975 | 44067 | 82 | 20179 | 44149 | 19057 | 45271 | 70.375264 | 10 | 1282 | 93.623639 | 69.901018 | 30.0989816 | 5.966598939 | 99.81426533 | 52.89043213 | TRAIN |

| 0.01 | -781.8014158 | 514 | 8061 | 18959 | 38 | 8575 | 18997 | 8099 | 19473 | 70.625997 | 3 | 522 | 93.115942 | 70.166543 | 29.8334567 | 5.994169096 | 99.79996842 | 52.89706876 | VALIDATE |

| 0 | -492.954348 | 1286 | 63042 | 0 | 0 | 64328 | 0 | 63042 | 1286 | 1.9991295 | 82 | 44067 | 100 | 0 | 100 | 1.999129462 | NaN | NaN | TRAIN |

| 0 | -789.6983998 | 552 | 27020 | 0 | 0 | 27572 | 0 | 27020 | 552 | 2.002031 | 38 | 18959 | 100 | 0 | 100 | 2.002031046 | NaN | NaN | VALIDATE |

Accepted Solutions

Hi Aditya,

Thanks for including your results so clearly. It sure helps!

Comments to your questions

If I read your post right, you are asking whether cost-sensitive learning is available in SAS Enterprise Miner. It is, through the profit matrix in the Decision Weights options.

It is correct that if a profit matrix is defined, the Decision Tree node will select the tree that has the largest average profit (or the smallest average loss, if a loss matrix is defined).

When you say that this pruned tree has a "0.5 decision threshold", do you mean that the predicted probability cutoff is 0.5? That default cutoff is expected for all models unless you change it in the Cutoff node. Using a profit matrix in your decision weights to find a pruned tree does not override the 0.5 cutoff applied to your predicted probabilities.

For 2), you are checking the number of true positives, correct? If so, here is one way to do it.

Connect a SAS Code node to your diagram, open the editor, paste the code below, and run it. You will get tables of the TP, FP, TN and FN counts for your training and validation data. It will work as long as you have defined a target in your metadata and you have both training and validation data.

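```sas
/* Confusion matrix for the training data.                      */
/* F_<target> is the actual ("from") level and I_<target> is    */
/* the predicted ("into") level of the target.                  */
title1 "Training data TP FP TN FN";
proc tabulate data=&EM_IMPORT_DATA;
  class f_%EM_TARGET i_%EM_TARGET;
  table f_%EM_TARGET, i_%EM_TARGET*n;
run;
title1;

/* Same cross-tabulation for the validation data. */
title1 "Validation data TP FP TN FN";
proc tabulate data=&EM_IMPORT_VALIDATE;
  class f_%EM_TARGET i_%EM_TARGET;
  table f_%EM_TARGET, i_%EM_TARGET*n;
run;
title1;
```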

Additional comments

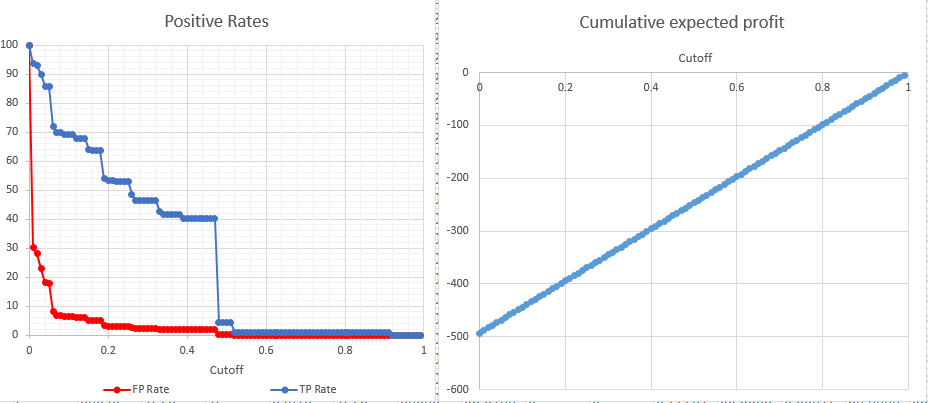

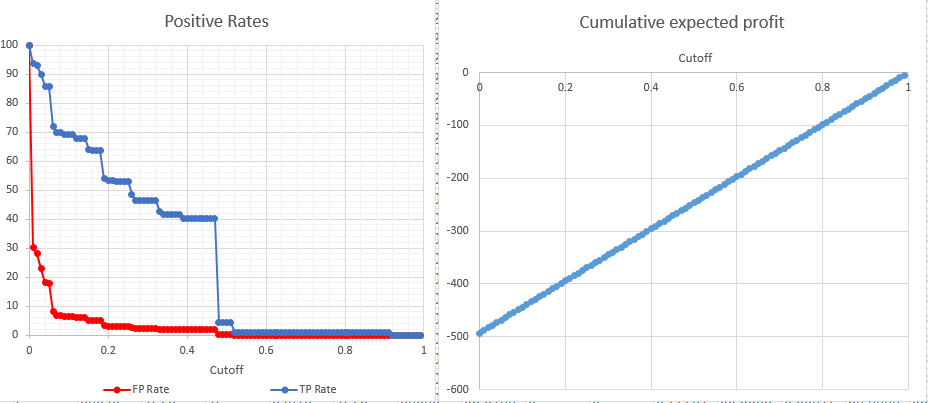

It seems to me that your decision tree is not helping you very much. I plotted your positive rates and your cumulative expected profit. Your positive rate curves do not look too good, and your cumulative expected profit is basically telling you "accept everybody so that you don't lose money and break even". I would suggest trying to get a better model before paying too much attention to the cumulative expected profits.

Further reading

For an example of what "good" positive rates look like, see the positive rates of the example in this tip ().

Since you are dealing with a rare target, the only other documentation I can think of is "Detecting Rare Classes" in the SAS Enterprise Miner reference help. It is in the Predictive Modeling chapter.

I hope it helps. Good luck!

Miguel

Hi Miguel,

I would like to reiterate a few aspects of this analysis (which you have also pointed out in your reply above):

1) Using a profit/cost matrix is one of the strategies for addressing the class imbalance problem (in my case Y:N = 2%:98%).

2) Even if we use the profit/cost matrix, it does not override the default probability cutoff used to form the decisions (predictions) Y or N. Hence I have used a Cutoff node after the model comparison (trees vs. logistic regression).

3) I used the following weights (with the maximize option) in the decision properties: 5, -100, -10, 1 for TP, FN, FP and TN respectively. The issue I see here is that FN has been given 20 times the weight of TP. Is there a specific logic by which these weights can be assigned correctly?

I should specify that this analysis is about identifying fraudulent persons, who are far fewer than the non-fraudulent ones. Hence I gave the highest penalty (negative profit) to people who commit fraud but are tagged as non-fraudulent by the model. Please suggest an alternative scheme for correctly specifying the profit/loss matrix in SAS Enterprise Miner, if there is one.

Thanks a lot for your comments in this regard.

Aditya.
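
One way to see what the weights quoted above (5, -100, -10, 1 for TP, FN, FP, TN) imply is to compare the expected profit of the two decisions for a single case. This is a sketch of the standard expected-profit argument, not something Enterprise Miner reports, and it assumes the predicted fraud probability $p$ is reasonably well calibrated.

$$
\begin{aligned}
E[\text{profit} \mid \text{decide Y}] &= 5p - 10(1 - p) = 15p - 10,\\
E[\text{profit} \mid \text{decide N}] &= -100p + 1\,(1 - p) = 1 - 101p.
\end{aligned}
$$

Deciding Y is the more profitable choice when $15p - 10 > 1 - 101p$, that is, when

$$
p > \frac{11}{116} \approx 0.095 .
$$

Under these weights the implied cutoff is therefore roughly 0.095 rather than 0.5. Only the two differences matter: $5 - (-100) = 105$ (gain from catching an event) and $1 - (-10) = 11$ (gain from leaving a non-event alone), so any set of weights with the same ratio of differences gives the same cutoff.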

Hi Miguel,

As a follow-up, I forgot to mention one method for choosing the optimal cutoff (which could be below 0.5):

1) First calculate the total profit at each cutoff value (from 0.01 to 0.99) as: weight for TP * TP count + weight for FN * FN count + weight for FP * FP count + weight for TN * TN count (note that in my case the weights for FN and FP are negative and must be treated as such when deriving the total profit).

2) Then calculate the average profit at each cutoff value as: Total Profit / Predicted Positives (where predicted positives are the sum of TP and FP).

3) Pick the cutoff value that gives the highest average profit in the validation dataset and whose average profit in the training dataset is nearly the same.

Do let me know if you can help me assign proper weights to each outcome, and whether the above formula for average profit needs to be modified. I think in general for fraud detection we need high TP, low FP and low FN, isn't that right?

Thanks,

Aditya.
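
The three steps above can be sketched in a few lines of Python. This is only an illustration, not an Enterprise Miner feature. The counts are copied from a handful of VALIDATE rows of the table earlier in the thread, on the assumption (supported by the row arithmetic) that the third to sixth columns are TP, FP, TN and FN. The weights are the 5, -100, -10, 1 quoted above, and all function and variable names are made up for the example. It reports the average per predicted positive, as proposed in step 2, next to the average per scored case as a possible alternative denominator.

```python
# Weights from the post: profit for each outcome (maximize).
WEIGHTS = {"tp": 5, "fn": -100, "fp": -10, "tn": 1}

# (cutoff, TP, FP, TN, FN) copied from VALIDATE rows of the table above.
# Column meaning is inferred from the row arithmetic, not from a header.
VALIDATE_ROWS = [
    (0.24, 289, 829, 26191, 263),
    (0.18, 346, 1311, 25709, 206),
    (0.14, 375, 1597, 25423, 177),
    (0.11, 383, 1720, 25300, 169),
    (0.05, 467, 4841, 22179, 85),
]


def profit_summary(row, weights=WEIGHTS):
    """Return total and average profit for one cutoff row."""
    cutoff, tp, fp, tn, fn = row
    total = (weights["tp"] * tp + weights["fn"] * fn
             + weights["fp"] * fp + weights["tn"] * tn)
    return {
        "cutoff": cutoff,
        "total": total,
        # Step 2 as written in the post: divide by predicted positives.
        "avg_per_predicted_positive": total / (tp + fp),
        # Alternative denominator: every scored case.
        "avg_per_case": total / (tp + fp + tn + fn),
    }


summaries = [profit_summary(r) for r in VALIDATE_ROWS]
best_by_total = max(summaries, key=lambda s: s["total"])
best_by_step2 = max(summaries, key=lambda s: s["avg_per_predicted_positive"])

for s in summaries:
    print(f"{s['cutoff']:.2f}  total={s['total']:>7}  "
          f"per_pred_pos={s['avg_per_predicted_positive']:.3f}  "
          f"per_case={s['avg_per_case']:.4f}")
print("highest total profit among these rows:", best_by_total["cutoff"])
print("highest profit per predicted positive:", best_by_step2["cutoff"])
```

Because the number of scored cases is the same at every cutoff, ranking by total profit and by profit per case always picks the same cutoff. Dividing by predicted positives changes the denominator from row to row, so it can rank the cutoffs differently, which is worth keeping in mind before settling on the step 2 formula.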

Hi All,

Could someone please give me pointers on how to construct the misclassification matrix and how to choose an optimal cutoff probability other than 0.5 in SAS Enterprise Miner, for the case explained in detail in my earlier reply?

Hi Aditya,

Sorry, I haven't had time to look at all your questions in detail. See my crosspost in the discussion .

Also, check out this paper. The authors use the Cutoff node and custom coding to do something similar to what I think you are trying to do. Link to the paper: http://support.sas.com/resources/papers/proceedings12/127-2012.pdf

I hope it helps,

Miguel

ajosh,

Modeling rare events (which is actually quite common) is often challenging for several reasons:

* The null model is highly accurate (2% response rate means any model assigning all to the nonevent is 98% accurate)

* Failing to put any additional weight on correctly predicting the rare event can lead to a null model (for the reasons above)

* Increasing the weight on correctly predicting the rare event results in picking far more observations having the event than actually do
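
To make the first bullet concrete, here is a small sketch comparing the all-N null model with the tree at cutoff 0.24. The counts are the VALIDATE values from the table earlier in the thread, with column meanings inferred from the row arithmetic; the function name is illustrative.

```python
def accuracy_and_sensitivity(tp, fp, tn, fn):
    """Return (accuracy, sensitivity) for a 2x2 confusion matrix."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)  # share of actual events that are caught
    return accuracy, sensitivity


# VALIDATE counts at cutoff 0.24, as read from the table: TP, FP, TN, FN.
tree = accuracy_and_sensitivity(tp=289, fp=829, tn=26191, fn=263)

# Null model: predict "N" for everyone, so every event is missed.
events, non_events = 289 + 263, 829 + 26191
null = accuracy_and_sensitivity(tp=0, fp=0, tn=non_events, fn=events)

print(f"tree: accuracy={tree[0]:.4f}, sensitivity={tree[1]:.4f}")
print(f"null: accuracy={null[0]:.4f}, sensitivity={null[1]:.4f}")
```

The null model comes out ahead on accuracy while catching no events at all, which is why accuracy alone is a poor yardstick for a rare target.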

It might be helpful to separate the tasks of modeling an outcome and taking action on the outcome. When modeling a rare event, you must often either oversample the rare event, add weight to correctly predicting the rare event, choose a model selection criterion that is not based on the classification, or some combination of these. For the reasons stated above, misclassification is typically not a good selection criterion for modeling. SAS Enterprise Miner always provides a classification based on which outcome is most likely. When a target profile is created and decision weights are employed, SAS Enterprise Miner will also create variables containing the most profitable outcome based on the target profile you created. The meaningfulness of that prediction is directly related to the applicability of the target profile weights.
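
As a rough illustration of the difference between the most likely outcome and the most profitable one, the sketch below picks both for a single case. It is not Enterprise Miner's code: the matrix layout and function names are assumptions, and the weights are the ones quoted earlier in the thread (5, -100, -10, 1 for TP, FN, FP, TN).

```python
# Profit matrix laid out as PROFIT[actual][decision].
PROFIT = {
    "Y": {"Y": 5, "N": -100},   # actual event: TP, FN
    "N": {"Y": -10, "N": 1},    # actual non-event: FP, TN
}


def expected_profit(p_event, decision, profit=PROFIT):
    """Expected profit of a decision when P(actual = Y) is p_event."""
    return (p_event * profit["Y"][decision]
            + (1 - p_event) * profit["N"][decision])


def most_likely(p_event):
    """Classification by the most likely outcome (0.5 cutoff)."""
    return "Y" if p_event >= 0.5 else "N"


def most_profitable(p_event, profit=PROFIT):
    """Decision with the larger expected profit under the matrix."""
    return max(("Y", "N"), key=lambda d: expected_profit(p_event, d, profit))


for p in (0.05, 0.10, 0.50):
    print(f"p={p:.2f}  most likely={most_likely(p)}  "
          f"most profitable={most_profitable(p)}")
```

With these weights a case scored at 0.10 is classified N by likelihood but Y by expected profit, so the two sets of predictions can disagree for a large share of low-probability cases.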

In general, modeling itself is more clear cut in that each analyst can pick and choose their criteria for building the 'best' model and then build the model. The resulting probabilities can then be used to order the resulting observations. Unfortunately for decision tree models, all of the observations in a single node are given the same score which is why some people run additional models within each terminal node to further separate the observations. The choice of what to do with the ordered observations typically involves business decisioning. The choice to investigate fraud can be costly, particularly if the person investigated is an honest loyal customer who just had an unusual situation. The amount of money at stake, the customer's longevity/profitability with the business, and the future expected value of the customer are just a few things that might be considered. This business decisioning usually creates far more complex criteria than can be simplified to a misclassification matrix which does not take the amount of money at risk into account.
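
Because every observation in a leaf shares one score, the confusion matrix only changes when the cutoff crosses a leaf's probability, which would explain long runs of identical rows in a cutoff table. The sketch below shows the effect on a made-up tree; the leaf probabilities and counts are hypothetical, and flagging leaves with probability greater than or equal to the cutoff is an assumption about the convention.

```python
# Hypothetical leaves: (predicted event probability, events, non-events).
LEAVES = [
    (0.90, 40, 5),
    (0.53, 35, 30),
    (0.21, 20, 75),
    (0.02, 55, 2700),
]


def confusion_at(cutoff, leaves=LEAVES):
    """TP, FP, TN, FN when every leaf with probability >= cutoff is flagged."""
    tp = sum(e for p, e, n in leaves if p >= cutoff)
    fp = sum(n for p, e, n in leaves if p >= cutoff)
    tn = sum(n for p, e, n in leaves if p < cutoff)
    fn = sum(e for p, e, n in leaves if p < cutoff)
    return tp, fp, tn, fn


# Cutoffs between two leaf probabilities give identical counts.
for cutoff in (0.50, 0.40, 0.30, 0.20):
    print(cutoff, confusion_at(cutoff))
```

With only four leaves there are at most five distinct confusion matrices, no matter how finely the cutoff grid is spaced.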

Simply put, whether you take the default decision based on the most likely outcome (typically inappropriate in a rare event), use the decision-weighted predicted outcome (assuming the decision profile accurately represents the business decisioning), or use some other strategy for selecting cases to investigate (based on available resources, amount at risk, likelihood of fraud, etc.), the TP and FP come from the strategy you employ. I clearly advocate business decisioning in determining how to proceed because the simple classification rate itself is not meaningful enough in rare events. Even looking at the expected value of money at risk (e.g. the product of the probability of fraud and the amount at risk) will yield a different ordering of observations. So there isn't a great answer to the question of which cutoff to use without fully understanding the business objectives and priorities. I tend to use some oversampling (but not to 50/50, because it under-represents the non-event) and decision weights with priors to allow variable selection and to get reasonable probabilities, but then combine those probabilities with other information to determine the final prioritization/action for observations based on some more complex rules.
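
To illustrate how ranking by expected money at risk can differ from ranking by probability alone, here is a small sketch. The cases, probabilities and amounts are invented for the example, and the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    case_id: str
    p_fraud: float         # model's predicted probability of fraud
    amount_at_risk: float  # money at stake if the case is fraudulent

    @property
    def expected_loss(self) -> float:
        # Expected value of money at risk: probability times amount.
        return self.p_fraud * self.amount_at_risk


# Hypothetical cases, not taken from the thread.
cases = [
    Case("A", p_fraud=0.60, amount_at_risk=200.0),
    Case("B", p_fraud=0.15, amount_at_risk=5000.0),
    Case("C", p_fraud=0.30, amount_at_risk=1000.0),
]

by_probability = [
    c.case_id for c in sorted(cases, key=lambda c: c.p_fraud, reverse=True)
]
by_expected_loss = [
    c.case_id for c in sorted(cases, key=lambda c: c.expected_loss, reverse=True)
]

print("ranked by probability:  ", by_probability)
print("ranked by expected loss:", by_expected_loss)
```

Here the case with the lowest fraud probability moves to the top of the list once the amount at risk is taken into account, which is the kind of reordering described above.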
