- Re: How to calculate cross validation error using the Start and End Gr...

Hi

I am trying to use the model comparison node.

Basically, I am running 5 models on the same dataset, and for each of them I have three data pre-processing branches: one in which I standardize the interval variables, another in which I log-transform them, and a last one in which I do not modify them. My target is interval-scaled. So I have 5*3 model outputs. When I connect these outputs to a Model Comparison node, the statistics usually do not display all of them: only the models using the log-transformed data are displayed. I am wondering why. Does the data transformation affect this node to the extent that nothing else is shown? If I disconnect the 5 outputs from the log branch, the same thing happens: the Model Comparison node only displays the results of the models using the standardized variables. It is weird. Any ideas? I'd appreciate some help here. Thanks. Nicolas

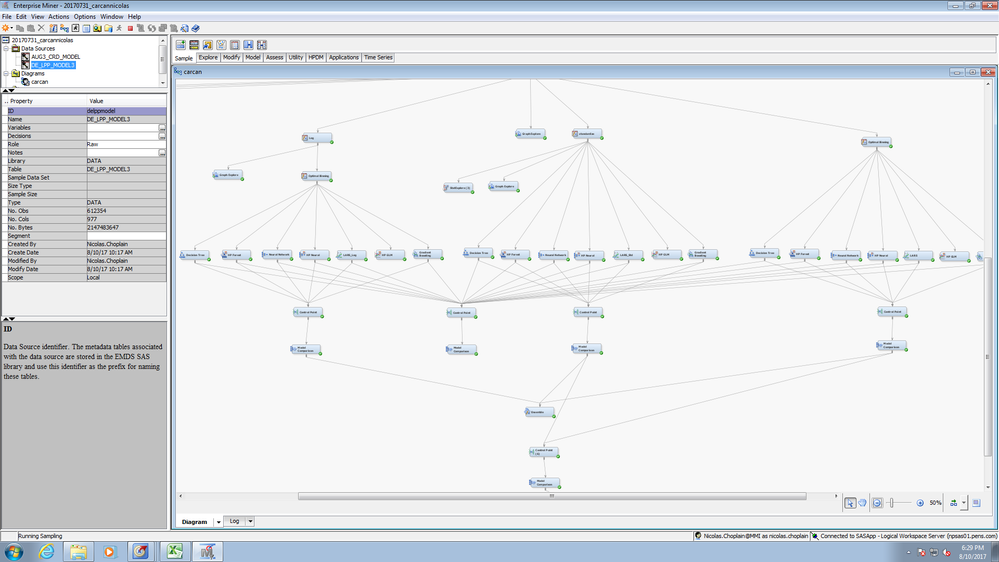

Can you provide a screenshot of your diagram?

I did not reconnect all the branches to take the screenshot, but I think you'll get the idea of what I am trying to do.

The four main model comparisons (same level) are connected to the Ensemble, and also to the last model comparison. Many thanks

My only initial thought is to try renaming the nodes so they don't have the same name, e.g. if you have 2 Decision Tree nodes, rename one of them to be "Decision Tree 2". Let me know if that doesn't help.

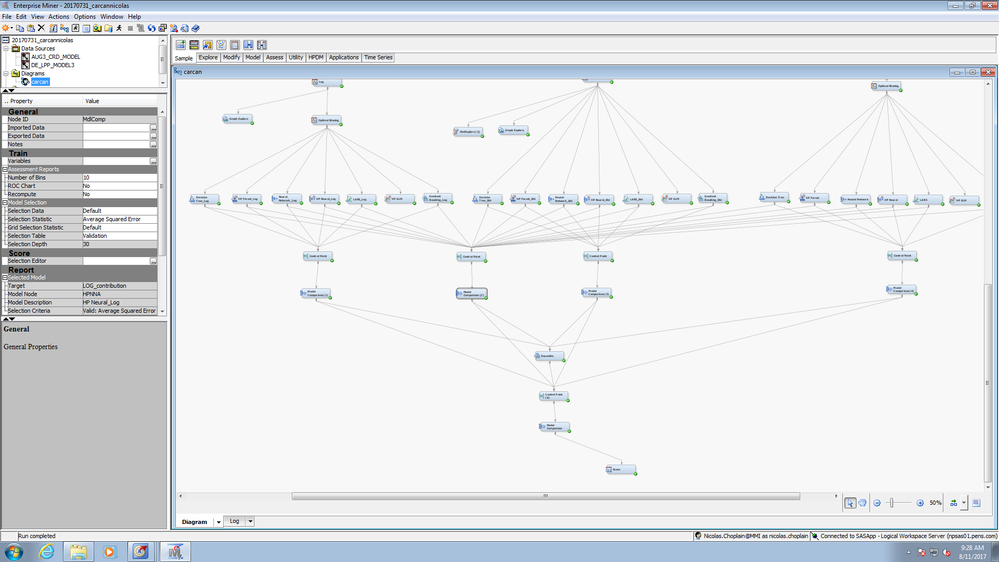

Thanks for your suggestion. I renamed all of the methods according to the preceding data processing, as well as the model decision nodes. Model decision nodes (1), (3) and (4) work perfectly, but (2) only displays results from the _log models. Basically, it gives the same results as the model comparison (1) node.

The final model comparison node displays only one model as the best one (in this example Decision Tree_Std, which was the model selected by model comparison (3)), although its average squared error is larger than that of the model selected by model comparison (1) (HP Neural_Log here). I really have no idea where this comes from. Do you have more thoughts?

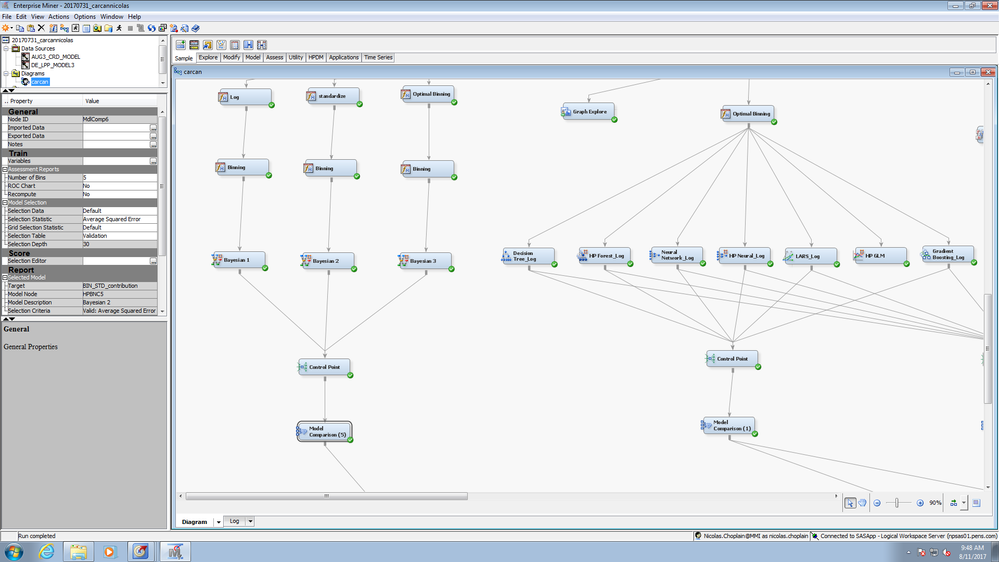

Following up on my previous reply, I also want to run a model comparison (5) on 3 Bayesian models, all of them with the same transformations of the interval-scaled inputs as before, plus a binning of the interval-scaled target so that the Bayesian models can run. Model comparison (5) displays just 1 result.

I also wonder whether the variable transformation is taken into account by the Model Comparison node. If the selection criterion is the average squared error, for instance, we are likely to get a lower error for standardized variables (inputs and targets) than for non-standardized ones. So the model comparison will, I assume, choose the model with the lower error, but this does not mean that it is the one performing better. Any thoughts? I am new to SAS Miner, coming from Python and R, and I am very curious about how these nodes work. Many thanks
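
The scale concern above can be illustrated outside SAS. What follows is a minimal Python sketch, not a description of what the Model Comparison node does internally: it computes the average squared error for the same predictions once on the original target scale and once after standardizing the target. The data values and the helper names (`average_squared_error`, `standardize`) are made up for illustration.

```python
import statistics

def average_squared_error(actual, predicted):
    """Mean of the squared residuals."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical target values and predictions on the original scale.
y = [120.0, 150.0, 90.0, 200.0, 170.0]
y_hat = [110.0, 160.0, 100.0, 190.0, 175.0]

# Standardize with the mean and (population) standard deviation of the target.
mean_y = statistics.fmean(y)
sd_y = statistics.pstdev(y)

def standardize(values):
    return [(v - mean_y) / sd_y for v in values]

ase_raw = average_squared_error(y, y_hat)
ase_std = average_squared_error(standardize(y), standardize(y_hat))

# Same predictions, but the error on the standardized scale is smaller by
# a factor of sd_y ** 2. Rescaling puts both on a common footing.
ase_back = ase_std * sd_y ** 2

print(ase_raw, ase_std, ase_back)
```

The average squared error is expressed in squared units of the target, so a change of target scale changes the number without changing the quality of the fit. Transforming only the inputs does not rescale the error in this way. Under these assumptions, errors from branches with differently scaled targets are only comparable after converting them back to a common scale.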

Thank you for sharing the screenshot. Due to the complexity and size of your diagram, we would really need a model package to look into this further. If you are able to post it here, please do so. Otherwise, I would encourage you to open a track with SAS Technical Support.

One question -- I'm not sure I understand why you have included 3 separate pre-processing paths for your variables. It is possible to create multiple transformations in the same flow using multiple Transform Variables nodes. In your example, you would need two Transform Variables nodes -- the first node creating a Log transform for a variable and the second creating the standardized variables from the original variables -- and then you can consider using a Variable Selection node (for models like Neural Networks or Regression models) and sending all the variables directly to the Tree-based nodes to determine which combination of transformations will provide you the best utility in modeling the target.

In order to retain the original variable as an Input variable, be sure you set the appropriate options in the Score section of the Transform Variables node properties. Specifically, be sure to set the following properties:

* Set the Hide property to No -- this will make sure the original variables are visible in subsequent nodes

* Set the Reject property to No -- this will make sure the original variables are still considered Input variables in subsequent nodes.

This would greatly simplify your flow and does not cause any problems since subsequent variable selection is being done. This approach has the benefit of allowing you to consider different transformations (or no transformation) for different variables.
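
For readers coming from Python, the single-flow idea described above can be sketched roughly as follows. This is only an analogy to chaining two Transform Variables nodes with Hide and Reject set to No, not SAS code: each original column is kept and log and standardized versions are added next to it, so a later selection step can choose among them. The column names, the values, and the `LOG_` / `STD_` prefixes are assumptions made for this example.

```python
import math
import statistics

# Hypothetical interval inputs; names and values are made up for illustration.
data = {
    "income": [20.0, 35.0, 50.0, 80.0, 120.0],
    "age": [23.0, 31.0, 45.0, 52.0, 60.0],
}

def add_transforms(columns):
    """Return a new dict with every original column plus LOG_ and STD_ versions."""
    out = dict(columns)  # originals stay available as candidate inputs
    for name, values in columns.items():
        mean = statistics.fmean(values)
        sd = statistics.stdev(values)
        # The log transform assumes strictly positive values.
        out[f"LOG_{name}"] = [math.log(v) for v in values]
        out[f"STD_{name}"] = [(v - mean) / sd for v in values]
    return out

candidates = add_transforms(data)
print(sorted(candidates))
```

Every variable now has three candidate forms in one table, and a downstream variable selection step (not shown here) can pick a different form for each variable.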

I might also offer that unless you have variables that are very poorly conditioned, you might not see much (if any) benefit from simply standardizing your variables. You might consider using an Optimal Binning transform in addition to (or instead of) the standardization of your interval variables. I like to use this type of transformation and then consider both the transformed variable and the non-transformed variable in further variable selection since the binned variable will provide information on any non-linear relationship in the variable when fitting less flexible models like a Regression. The Neural Network will handle the interval variables without needing to be transformed because it is so flexible, and the tree-based methods do their own binning.
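
The point about binned variables carrying non-linear information can be shown with a small Python sketch. It uses fixed, hand-picked bin edges purely as a stand-in; it is not the Optimal Binning algorithm, which chooses bins using the target. The data and the helper names (`pearson`, `bin_index`) are hypothetical.

```python
def pearson(xs, ys):
    """Pearson correlation coefficient, computed from its definition."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

# Hypothetical U-shaped relationship: the target depends on x squared.
x = [float(v) for v in range(-6, 7)]
y = [v ** 2 for v in x]

def bin_index(value, edges):
    """Index of the first edge the value falls below; last bin otherwise."""
    for i, edge in enumerate(edges):
        if value < edge:
            return i
    return len(edges)

edges = [-2.5, 2.5]  # hand-picked cut points, for illustration only

# Collect the target values per bin and average them.
groups = {}
for xi, yi in zip(x, y):
    groups.setdefault(bin_index(xi, edges), []).append(yi)
bin_means = {b: sum(vals) / len(vals) for b, vals in groups.items()}

r = pearson(x, y)
print(r, bin_means)
```

In this made-up data the linear correlation between `x` and `y` is zero, so a straight-line term in a regression would find nothing, while the target mean differs sharply between the middle bin and the two outer bins. That is the kind of signal a binned version of the variable can pass on to a less flexible model.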

I hope this helps!

Doug

Thanks for the tip on using optimal binning before the Variable Selection (keeping both binned and non-binned variables). I had never thought of that; I have always used it after Variable Clustering and/or Variable Selection. Will give it a go. Nicolas
