Credit Scorecard Model issues

Hi, guys.

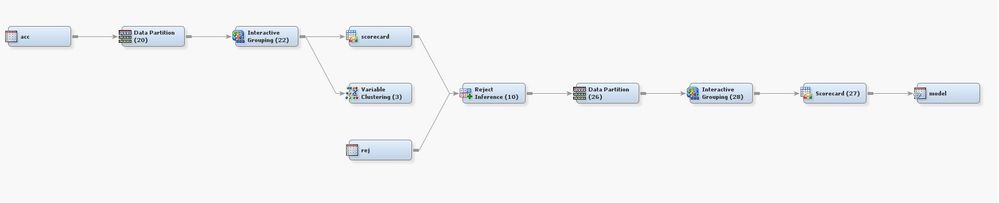

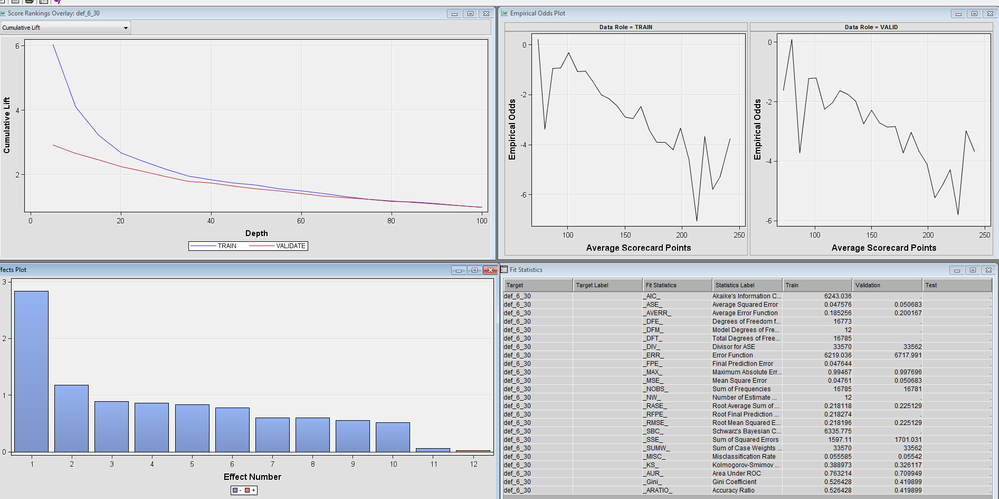

I am building a scorecard model in Enterprise Miner:

I have run into several problems:

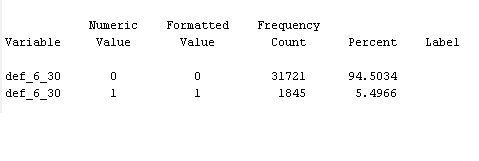

1) very few target events:

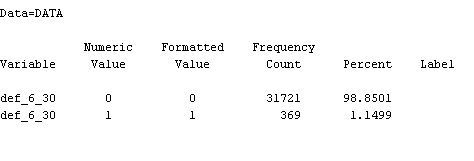

To overcome this limitation I added a frequency variable, but I suspect it biases the model:

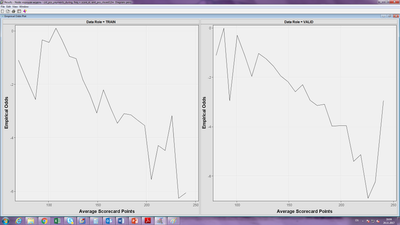

2) cannot get stable model

3) train / validation Gini varies drastically: before reject inference, with a 50/50 stratified data partition, train Gini = 0.52 and validation Gini = 0.49
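
For reference, the Gini figures above are normally derived from the area under the ROC curve as Gini = 2 * AUC - 1. Below is a minimal Python sketch of that calculation, not Enterprise Miner output. The function name `gini` is hypothetical, and it assumes a higher score means a higher chance of the target event (flip the sign of the score if your scorecard points run the other way). With very few events, each event/non-event pair carries a lot of weight, which is one reason the train and validation values can move around.

```python
def gini(scores, targets):
    """Gini = 2 * AUC - 1, with AUC computed by pairwise comparison.

    scores  : model outputs, higher = more likely to be an event (assumption)
    targets : 1 for an event (bad), 0 for a non-event (good)
    """
    events = [s for s, t in zip(scores, targets) if t == 1]
    non_events = [s for s, t in zip(scores, targets) if t == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")

    concordant = 0.0
    for e in events:
        for n in non_events:
            if e > n:
                concordant += 1.0   # event ranked above non-event
            elif e == n:
                concordant += 0.5   # ties count as half
    auc = concordant / (len(events) * len(non_events))
    return 2.0 * auc - 1.0
```

Running it separately on the train and validation partitions gives the two numbers being compared.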

And some questions:

1) How can I estimate the bad rate and approval rate of a scorecard model?

All I need is to improve the old scorecard model. To achieve this I tried excluding predictors (starting from the lowest information value) and adding new ones (with high IV). Honestly speaking, I have no other ideas for doing that.
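
To make the IV ranking concrete, here is a minimal Python sketch of the usual information value formula, IV = sum over bins of (dist_good - dist_bad) * ln(dist_good / dist_bad). The function name and the 0.5 smoothing constant are assumptions for illustration (the smoothing only avoids log(0) when a bin has no goods or no bads); this is not necessarily how Enterprise Miner computes it.

```python
import math

def information_value(bins):
    """Information value of one predictor.

    bins : list of (n_good, n_bad) counts, one tuple per attribute bin.
    """
    total_good = sum(good for good, _ in bins)
    total_bad = sum(bad for _, bad in bins)
    k = len(bins)

    iv = 0.0
    for good, bad in bins:
        # Assumed smoothing: add 0.5 to every cell so empty cells do not
        # produce log(0) or division by zero.
        dist_good = (good + 0.5) / (total_good + 0.5 * k)
        dist_bad = (bad + 0.5) / (total_bad + 0.5 * k)
        woe = math.log(dist_good / dist_bad)   # weight of evidence of the bin
        iv += (dist_good - dist_bad) * woe
    return iv
```

With very few bads, the per-bin bad counts are tiny, so the IV of a predictor can change a lot from one sample to the next; a high IV on such data is weaker evidence than it looks.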

P.S. I used Naeem Siddiqi's book and this:

Unfortunately, there is only so much stability you can get out of the small number of observations you are working with. I also would be concerned that there would be great variability in the model metrics for Train/Validate for slight changes in the model (again, a stability issue). Inflating the frequency improves the calculated percentage of events but it doesn't really represent more information to the model.

You have few enough observations that I would consider not splitting at all and then doing some type of bootstrap modeling to try to arrive at a small set of predictors for fitting a model. It also might be useful to consider a one-split Tree model to see if there are any nodes that almost never have the event. You could then attempt to do your analysis on the remaining nodes, which would by definition have a higher percentage of the event of interest. In this way, you could pre-filter the data in a second flow where you model only the observations having a higher chance of the target event. This could provide a better solution than fitting against the whole data set given how rare your event is. You would have to deploy the score code conditionally, depending on whether a given observation was pre-filtered by the Tree model or passed on to be scored by the second model.
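
One way to picture the bootstrap idea is the rough Python sketch below. It is a simplification under stated assumptions: instead of refitting a full model on each resample, it ranks predictors by univariate Gini and counts how often each one lands in the top `top_k`. All names here are hypothetical, and this is not an Enterprise Miner node.

```python
import random

def univariate_gini(values, targets):
    """Absolute Gini (|2*AUC - 1|) of a single numeric predictor."""
    events = [v for v, t in zip(values, targets) if t == 1]
    non_events = [v for v, t in zip(values, targets) if t == 0]
    if not events or not non_events:
        return 0.0  # a resample can end up with no events when they are rare
    wins = sum((e > n) + 0.5 * (e == n) for e in events for n in non_events)
    return abs(2.0 * wins / (len(events) * len(non_events)) - 1.0)

def bootstrap_selection_counts(rows, targets, top_k=2, n_boot=200, seed=0):
    """Count how often each predictor ranks in the top_k across resamples.

    rows    : list of dicts {predictor_name: numeric value}
    targets : list of 0/1 flags aligned with rows
    """
    rng = random.Random(seed)
    names = sorted(rows[0])
    counts = {name: 0 for name in names}
    n = len(rows)
    for _ in range(n_boot):
        # Resample row indices with replacement (one bootstrap sample).
        idx = [rng.randrange(n) for _ in range(n)]
        sample_targets = [targets[i] for i in idx]
        ranked = sorted(
            names,
            key=lambda name: univariate_gini(
                [rows[i][name] for i in idx], sample_targets
            ),
            reverse=True,
        )
        for name in ranked[:top_k]:
            counts[name] += 1
    return counts
```

Predictors that are selected in nearly every resample are the ones worth keeping; predictors that drift in and out are the source of the instability.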

Again, this doesn't solve your problem with having so few events to begin with but you might find it easier to model the remaining observations after removing those with almost no chance of having the target event. SAS Enterprise Miner was designed for data mining data sets which are often extremely large, so this would require a bit more work but should be easy to implement.
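
As a rough illustration of the pre-filter and the conditional scoring, here is a Python sketch. It is a simplification, not a real Tree node: it scans one numeric variable for the cut `value <= threshold` with the lowest event rate, assumes the low-event side is the lower side, and then routes each observation either to that node's event rate or to a second-stage model. The function names, `min_leaf`, and the second-stage model are all hypothetical.

```python
def find_low_event_split(values, targets, min_leaf):
    """Return (threshold, event_rate) for the side 'value <= threshold'
    with the lowest event rate, keeping at least min_leaf rows per side.
    Returns None if no valid split exists."""
    pairs = sorted(zip(values, targets))
    best = None
    events_so_far = 0
    for i, (value, target) in enumerate(pairs, start=1):
        events_so_far += target
        # Only cut between distinct values of the variable.
        if i < len(pairs) and pairs[i][0] == value:
            continue
        if i < min_leaf or len(pairs) - i < min_leaf:
            continue
        rate = events_so_far / i
        # '<=' prefers the larger low-event node when rates tie.
        if best is None or rate <= best[1]:
            best = (value, rate)
    return best

def make_conditional_scorer(split_var, threshold, low_rate, second_stage_model):
    """Build a scoring function that applies the pre-filter first."""
    def score(row):
        if row[split_var] <= threshold:
            return low_rate                 # pre-filtered: almost never an event
        return second_stage_model(row)      # modeled on the remaining rows
    return score
```

The second-stage model would be fitted only on the rows that fall outside the low-event node, so its event rate is higher than in the full data set.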

Hope this helps!

Doug
