- Home

- /

- Solutions

- /

- Data Management

- /

- Re: SAS/Access Hadoop String Columns - Field Length Options

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Greetings! First time caller, long time listener. Was hoping you all could help me with some field length issues we're having and, as a follow on, help determine why certain table properties won't pass on implcit SQL but do on explicit SQL.

My company uses Cloudera/Impala (ODBC version 2.5.29, ImpalaD 2.1.3-cdh5.3) to access Hadoop via SAS/ACCESS. We're currently running SAS 9.4 and have the STAT 13.2 and ETS 13.2 analytical packs. Consider a Hadoop View (VIEW_A) that has a field (FIELD_Z) defined as VARCHAR(10).

I run the following code:

LIBNAME IMP SASIOIMP SERVER="server" PORT=21050

USER=&HAD_USER PASSWORD=&HAD_PW DATABASE=DEV_SL;

68 PROC SQL;

69 CREATE TABLE ORDER_HDR_IMP_1 AS

70 SELECT

71 FIELD_Z

72 FROM VIEW_A;

73 QUIT;

NOTE: Compressing data set WORK.ORDER_HDR_IMP_1 decreased size by 99.78 percent.

Compressed is 47 pages; un-compressed would require 21144 pages.

NOTE: Table WORK.ORDER_HDR_IMP_1 created, with 148002 rows and 1 columns.

89 QUIT;

NOTE: PROCEDURE SQL used (Total process time):

real time 11.09 seconds

cpu time 8.48 seconds

When I note the field length of FIELD_Z, it is $32767...even though the view is defined as VARCHAR(10).

Now, I try the explicit SQL method:

90 PROC SQL;

91 CONNECT TO SASIOIMP (DATABASE=DEV_SL USER=&HAD_USER PASSWORD="&HAD_PW"

91 ! SERVER="server" SQL_FUNCTIONS=ALL);

92 CREATE TABLE ORDER_HDR_EXP_2 AS

93 SELECT * FROM CONNECTION TO SASIOIMP

94 (SELECT FIELD_Z

95 FROM VIEW_A);

96 QUIT;

NOTE: Compression was disabled for data set WORK.ORDER_HDR_EXP_2 because compression overhead would

increase the size of the data set.

IMPALA_8: Executed: on connection 2

Prepared statement IMPALA_7

NOTE: Table WORK.ORDER_HDR_EXP_2 created, with 148002 rows and 1 columns.

114 QUIT;

NOTE: PROCEDURE SQL used (Total process time):

real time 1.29 seconds

cpu time 0.24 seconds

The above results in a proper length of FIELD_Z of $10. Strange.

I can note that the query returned the exact same records, but ran in 90% less time and took up 99.99% less space (before compression). We are currently not using the SASFMT metadata option as our IT department is a little.....hesitant.....to add this metadata tag, and I have not be able to find much on the web regarding its usage. Our Hadoop install is pretty new (traditionally a DB2 shop, Teradata now as well), so we're still working out the kinks. Right now, we've got a handful of tables with the largest having about 17M records and 25 columns, relatively small compared to our DB2/Teradata tables. We use ETL to load the Hadoop tables and SAS to perform analytics. However, pulling data from these tables is extremely inefficient due to these field length issues. So a few questions:

1. Why the differences in dataset field length between Implicit SQL and Explicit SQL?

2. Anybody currently using the SASFMT metadata option? If so, any concerns/issues? Is it meeting your requirements?

3. For those not using SASFMT, how do you get around the $32767 default length for STRING fields?

Thanks in advance, appreciate the help! Feel free to ask for any additional information and I'm happy to provide whatever I can.

Matt-

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Matt

The option is DBMAX_TEXT=, but that won't help you. It appears that there is a defect lurking here. I tried this code:

/* This does not work!!! */

libname myimp impala server=domo7 user=myuser pw=mypasswd dbmax_text=255;

proc sql;

connect using myimp;

execute (create table jefftest(x string)) by myimp;

execute (insert into jefftest values('a')) by myimp;

quit;

data work.jefftest;

set myimp.jefftest;

run;

Unfortunately, the above code does not work. Frustrating, but not catastrophic. I am going to enter a defect on this.

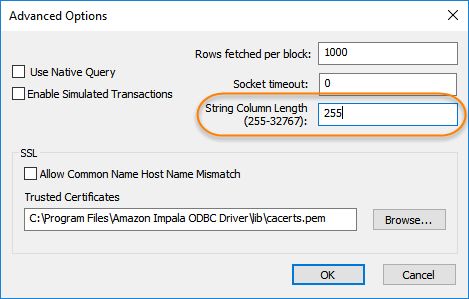

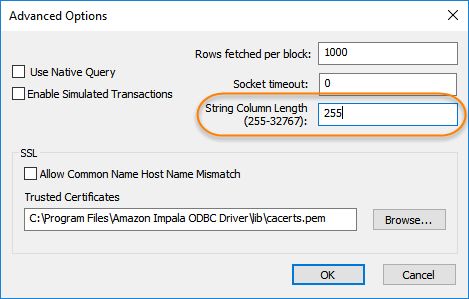

You can tell the ODBC driver to limit the length of the strings. Here is a SAS note that discusses it:

http://support.sas.com/kb/53/835.html

This code does work:

/* notice I am using a DSN */

/* I limited the string length using it */

libname myimp impala dsn=domo7dsn user=myuser pw=mypasswd ;

proc sql;

connect using myimp;

execute (create table jefftest(x string)) by myimp;

execute (insert into jefftest values('a')) by myimp;

quit;

data work.jefftest;

set myimp.jefftest;

run;

Here is what I did in the driver setup (Advanced Options tab):

If you are using a recent version of Impala you can use the VARCHAR data type instead of string. It doesn't have the 32K string problem.

This may help others who stumble upon this thread - take a look at the course materials from the SGF SAS and Hadoop workshop. They are not specific to Impala, it is explained there:

https://github.com/Jeff-Bailey/SGF2016_SAS3880_Insiders_Guide_Hadoop_HOW

Althought the DBMAX_TEXT= discussion turns-out to be a huge lie, this SGF 2016 paper may help with Impala:

https://sasglobalforum2016.lanyonevents.com/connect/sessionDetail.ww?SESSION_ID=3960

The workshop materials explain the 32k String Thing (trademark pending 😉 in great detail. It is for SAS/ACCESS Interface to Hadoop but the concepts are the same. It does not include the DBMAX_TEXT= (which appears to not work) option.

Update: I am being told that this option is documented to only work with blob-type data, and Impala doesn't technically support any blob/clob, so it doesn't apply for Impala.

I am not buying into this, but it may be legit. In the meantime, changing the configuration of the ODBC DSN does work. So that is a possiblity.

Here are the options that will work (In order from best to worst - in my opinion):

- Use VARCHAR data type instead of STRING

- Cast STRING columns to something else (this is a lot of work and could be seen as the worst solution)

- Limit the length of strings via the ODBC driver (see above)

Adding extended table attributes to the table may help. I will have to test this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Extended table attributes (SASFMT) are only applied to implicit pass-thru. If the table is defined with VARCHAR() SAS should (depending on the version you are running) honor it. There are some Hive weirdnesses with views. Plus, SAS has optimization issues with them. I would try creating a HIVE EXTERNAL table pointing to the underlying file and see if that helps.

There are other strategies for avoiding the "32k string thing" (my catchy name for the "problem"). The DBMAX_TEXT= LIBNAME statement option is one way to do this. It limits all string lengths to the value specified. It applies to all strings encountered in the SAS library. It is not a great solution, but it does help, a lot.

Check out the slides I created for an SGF 2015 workshop, "An Insider's Guide to SAS/ACCESS to Hadoop." They are available on the SAS Communities site. Click here to get the slides.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the info! Given the software we use, I'd say we went straight to the source for good advice! I've passed along the SASFMT details to our IT department and we're working on getting some test data set up so we can play around a bit.

In the interim, I've tried to use the DBMAX_TEXT syntax in my libname reference as well as my explicit pass-thru but didn't have any luck. Here's the syntax I used:

LIBNAME IMP SASIOIMP SERVER="server" PORT=21050

USER=&HAD_USER PASSWORD=&HAD_PW DATABASE=DEV_SL DBMAX_TEXT=200;

PROC SQL;

CONNECT TO SASIOIMP (DATABASE=DEV_SL USER=&HAD_USER PASSWORD="&HAD_PW" SERVER="server" SQL_FUNCTIONS=ALL

DBMAX_TEXT=200);

CREATE TABLE ORDER_HDR_EXP_2 AS

SELECT * FROM CONNECTION TO SASIOIMP

(SELECT FIELD_Z

FROM VIEW_A);

QUIT;

You had mentioned SASFMT only works on implicit pass-thru queries and not with explicit pass-thru?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I assumed (Whoops) that you were using ACCESS to Hadoop. You can use IMPALA for the database name.

You had mentioned SASFMT only works on implicit pass-thru queries and not with explicit pass-thru?

This is correct. Extended table attributes only work for implict pass-thru. This is only for ACCESS to Hadoop, not Impala. They are different.

Here is a SAS Note for SAS 9.4M2. It applies to SAS 9.4M3, too.

DBMAX_TEXT= will work with Implicit Passthru (when you use a LIBNAME statement). ACCESS to Impala is an ODBC-based SAS/ACCESS engine. This means that you can (I stole this from the SAS Note mentioned above)...

To limit string length under Windows, set the drivers option String Column Length in the Advanced Options of the ODBC data source.

On a UNIX system, set the DSN (data source name) property StringColumnLength.

Do you think it would help if I wrote a small note on this and added it to the communities site as a note/paper/whatever it is called?

Let me know if you need more help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Jeff,

It appears that HIVE EXTERNAL table is one of the best way to handle 32767 length issue however the problem I am running into is data truncation since the source base (hadoop) table has data stored as a string and new external table that I created has varchar, char and int.

Did you face this issue or have any suggestions/recommendations?

Thanks

Matt

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Maybe this thread will help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Can you please share the sample code with DBMS_TXT= option in libname and dataset name? I tried but length comes as 32767 even with DBMS_TXT= option.

Thank You!

Mathur

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Matt

The option is DBMAX_TEXT=, but that won't help you. It appears that there is a defect lurking here. I tried this code:

/* This does not work!!! */

libname myimp impala server=domo7 user=myuser pw=mypasswd dbmax_text=255;

proc sql;

connect using myimp;

execute (create table jefftest(x string)) by myimp;

execute (insert into jefftest values('a')) by myimp;

quit;

data work.jefftest;

set myimp.jefftest;

run;

Unfortunately, the above code does not work. Frustrating, but not catastrophic. I am going to enter a defect on this.

You can tell the ODBC driver to limit the length of the strings. Here is a SAS note that discusses it:

http://support.sas.com/kb/53/835.html

This code does work:

/* notice I am using a DSN */

/* I limited the string length using it */

libname myimp impala dsn=domo7dsn user=myuser pw=mypasswd ;

proc sql;

connect using myimp;

execute (create table jefftest(x string)) by myimp;

execute (insert into jefftest values('a')) by myimp;

quit;

data work.jefftest;

set myimp.jefftest;

run;

Here is what I did in the driver setup (Advanced Options tab):

If you are using a recent version of Impala you can use the VARCHAR data type instead of string. It doesn't have the 32K string problem.

This may help others who stumble upon this thread - take a look at the course materials from the SGF SAS and Hadoop workshop. They are not specific to Impala, it is explained there:

https://github.com/Jeff-Bailey/SGF2016_SAS3880_Insiders_Guide_Hadoop_HOW

Althought the DBMAX_TEXT= discussion turns-out to be a huge lie, this SGF 2016 paper may help with Impala:

https://sasglobalforum2016.lanyonevents.com/connect/sessionDetail.ww?SESSION_ID=3960

The workshop materials explain the 32k String Thing (trademark pending 😉 in great detail. It is for SAS/ACCESS Interface to Hadoop but the concepts are the same. It does not include the DBMAX_TEXT= (which appears to not work) option.

Update: I am being told that this option is documented to only work with blob-type data, and Impala doesn't technically support any blob/clob, so it doesn't apply for Impala.

I am not buying into this, but it may be legit. In the meantime, changing the configuration of the ODBC DSN does work. So that is a possiblity.

Here are the options that will work (In order from best to worst - in my opinion):

- Use VARCHAR data type instead of STRING

- Cast STRING columns to something else (this is a lot of work and could be seen as the worst solution)

- Limit the length of strings via the ODBC driver (see above)

Adding extended table attributes to the table may help. I will have to test this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thank You JBailey for the suggestions and sample code. I have issues connecting to IMPALA with server name as well it works fine with DSN.

Based on what I found from http://support.sas.com/documentation/cdl/en/acreldb/69580/HTML/default/viewer.htm#n0usrxwbz3hw7qn1nq... page, it seems like with varchar the default SAS format would be $65355 so it actually going to take more space and time to process than string data type. Please correct me if I mis interpreted.

Data Conversion from Impala to SAS

CHAR | character | $255. |

STRING | $32767. | |

VARCHAR | $65355 | |

BOOLEAN | numeric | 1. |

BIGINT | 20. | |

DOUBLE | none | |

FLOAT | none | |

INT | 11. | |

SMALLINT | 6. | |

TINYINT | 4. |

I was informed about CAST function from SAS TS but as you said it's a very time consuming specially when there are multiple tables and 100's of columns in each table that needs to be modified.

I looked at the SAS notes which talks about limit the length of the strings in ODBC driver but the issue is it is going to limit the length to one that user mention in StringColumnLength= within odbc.ini for each variable regardless the actual value of particular variable. Let's say I define length in StringColumnLength=250 in odbc.ini then all string fields will have 250 length and the fields which has data more than 250 character bytes will get truncated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Matt

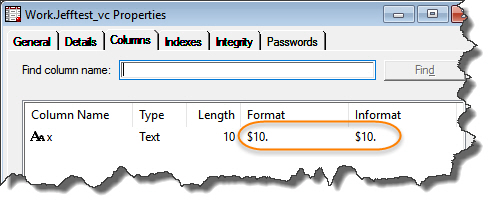

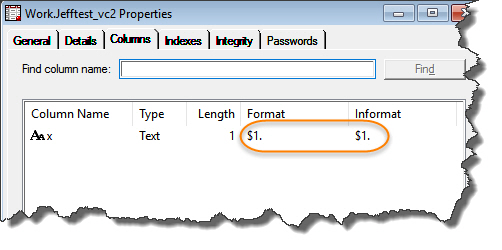

CHAR and VARCHAR supported were added in SAS 9.4M3. I used SAS 9.4M4 for the following test. I don't see a problem with VARCHAR. It works great!

Here is all the code.

libname myimp impala server=impserv user=myuser pw=mypasswd;

proc sql;

connect using myimp;

execute (create table jefftest(x string)) by myimp;

execute (insert into jefftest values('a')) by myimp;

quit;

data work.jefftest;

set myimp.jefftest;

run;

/* varchar(10) */

proc sql;

connect using myimp;

execute (create table jefftest_vc(x varchar(10))) by myimp;

execute (insert into jefftest_vc values('1234567890')) by myimp;

quit;

data work.jefftest_vc;

set myimp.jefftest_vc;

run;

/* varchar with no length specified */

/* Results in a length of 1 */

proc sql;

connect using myimp;

execute (create table jefftest_vc2(x varchar)) by myimp;

execute (insert into jefftest_vc2 values('1234567890')) by myimp;

quit;

data work.jefftest_vc2;

set myimp.jefftest_vc2;

run;

varchar(10)

varchar - with no length specified (I was seeing if it would produce the 65k length string).

Best wishes,

Jeff

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thank you Jeff for your prompt response with details. We are on M2 and planning to upgrade to M4 soon. Your code will help me once we are on M4.

Do you buy any chance know the root cause of the issues I am facing while trying to connect Impala with server= instead of DSN?

Here is the libname and error message. Our impala cluster is kerberized and I do have valid ticket.I am getting same error with or without user name and password.

libname myimp impala server='fqdn' user=test password='xxxxx' schema=default;

data myimp.test;

set sashelp.class;

run;

/*libname myimp impala server='fqdn' user=test password=XXXXXXXXXXXXXXXXXX schema=default;

ERROR: CLI error trying to establish connection: [unixODBC][Cloudera][ImpalaODBC] (100) Error from the Impala Thrift API: No more data to read.

ERROR: Error in the LIBNAME statement.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Matt

You are quite welcome. I am more than happy to help solve your problem.

I think your DSN= vs SERVER= issue has to do with some configuration that is present in the odbc.ini file. I bet it is related to the Kerberos setup. There is something being handled for you in your DSN. If you want to resolve this you may want to give SAS Tech Support a call.

Best wishes,

Jeff

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thank you Jeff. I was able to get hold of Peter at SAS TS. It looks like I don't have write access to Impala. I was under wrong impression it seems. I am going to reach out to our hadoop admins and take it from there.

Thank you for your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Jeff,

I am back to bother you. It's getting more complicated now it seems.

I am trying to write my output SAS dataset to Impala and it appears all character fields are being saved as string so while pulling data back to SAS all character fields come with 32767 length.

DBMAX_TEXT and defining a length StringColumnLength= in odbc.ini is not going to work for me, due to the nature of the data.

This leaves me with only one option Alter Table property with SASFMT. Let me know if you know other better ways to handle the length issue while writing output to Impala.

Also, I read about SASFMT metadata option for Implicit sql. Can you please share some information how it can be used and where it can be used?

Thank you so much for all your help.

Matt

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and save with the early bird rate—just $795!

Need to connect to databases in SAS Viya? SAS’ David Ghan shows you two methods – via SAS/ACCESS LIBNAME and SAS Data Connector SASLIBS – in this video.

Find more tutorials on the SAS Users YouTube channel.