- Re: SAS/ACCESS to Hadoop libname read returns java errors

We are using SAS 9.4 TS1M2 with a Hortonworks 2.5 Hadoop cluster. Our LIBNAME statement works:

options set=SAS_HADOOP_JAR_PATH ="/sas/sashome/SAS_HADOOP_JAR_PATH";

libname hdp hadoop server='hwnode-05.hdp.ateb.com' port=10000
  schema=patient user=hive password=XXXX SUBPROTOCOL=hive2;

NOTE: Libref HDP was successfully assigned as follows:

Engine: HADOOP

Physical Name: jdbc:hive2://hwnode-05.hdp.ateb.com:10000/patient

But Proc SQL is not reading from Hadoop:

proc sql;

select * from hdp.atebpatient where clientid=4 and
  atebpatientid=xxxxxxxxxxx;

One user gets these errors:

ERROR: javax.xml.parsers.FactoryConfigurationError: Provider org.apache.xerces.jaxp.DocumentBuilderFactoryImpl not found

ERROR: at javax.xml.parsers.DocumentBuilderFactory.newInstance(DocumentBuilderFactory.java:127)

ERROR: at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2555)

ERROR: at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2532)

ERROR: at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2424)

ERROR: at org.apache.hadoop.conf.Configuration.set(Configuration.java:1149)

ERROR: at org.apache.hadoop.conf.Configuration.set(Configuration.java:1121)

ERROR: at org.apache.hadoop.conf.Configuration.setBoolean(Configuration.java:1457)

ERROR: at com.dataflux.hadoop.DFConfiguration.<init>(DFConfiguration.java:95)

ERROR: at com.dataflux.hadoop.DFConfiguration.<init>(DFConfiguration.java:72)

ERROR: Caused by: java.lang.ClassNotFoundException: org.apache.xerces.jaxp.DocumentBuilderFactoryImpl

while another user receives:

ERROR: java.lang.NoClassDefFoundError: org/w3c/dom/ElementTraversal

ERROR: at java.lang.ClassLoader.defineClass1(Native Method)

ERROR: at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

ERROR: at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

ERROR: at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

ERROR: at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

ERROR: at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

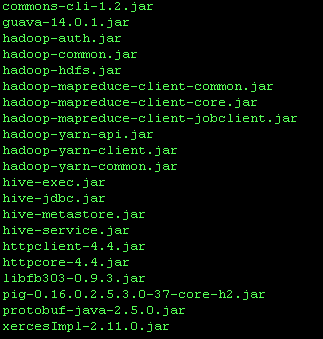

We suspect we are missing some .jar files or have incorrect ones. The list of .jar files we are using is attached.
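Since the two users see different stack traces, it may help to reduce each log to just the class names the JVM could not load. The following is a minimal sketch in Python, not part of SAS; the function name `missing_classes` and the regular expression are illustrative assumptions, and it only recognizes the two error forms shown in the logs above.

```python
import re

# Matches the two failure forms seen in the logs above:
#   ClassNotFoundException: org.apache.xerces.jaxp.DocumentBuilderFactoryImpl
#   NoClassDefFoundError: org/w3c/dom/ElementTraversal
_MISSING = re.compile(
    r"(?:ClassNotFoundException|NoClassDefFoundError):\s*([\w.$/]+)"
)


def missing_classes(log_text):
    """Return the distinct missing class names in dotted form, in order seen."""
    found = []
    for match in _MISSING.finditer(log_text):
        # NoClassDefFoundError reports names with slashes; normalize to dots.
        name = match.group(1).replace("/", ".")
        if name not in found:
            found.append(name)
    return found


if __name__ == "__main__":
    sample = (
        "ERROR: Caused by: java.lang.ClassNotFoundException: "
        "org.apache.xerces.jaxp.DocumentBuilderFactoryImpl\n"
        "ERROR: java.lang.NoClassDefFoundError: org/w3c/dom/ElementTraversal\n"
    )
    for name in missing_classes(sample):
        print(name)
```

Run against the two logs in this post, this should list `org.apache.xerces.jaxp.DocumentBuilderFactoryImpl` and `org.w3c.dom.ElementTraversal`, which are the classes to look for in the JAR directory.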

Accepted Solutions

Hi @MALIGHT,

READ_METHOD=jdbc bypasses HDFS processing. That your query works with READ_METHOD=jdbc but fails without it is an indication that you are missing some of the JARs that HDFS processing needs.

You may want to try setting the SAS_HADOOP_RESTFUL=1 environment variable. I think this option first appeared in SAS 9.4M3, but I could be wrong. This option enables HDFS processing to use the REST interface, which also reduces the need for certain JARs. If you set it and don't see a change in behavior, it is probably because it wasn't implemented at M2.

I think your best bet is to go through the process of getting the correct set of JARs.
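As a quick sanity check before and after refreshing the JARs, one could scan the JAR directory for the classes named in the stack traces. This is a rough sketch in Python, not a SAS tool; `jars_containing` is a hypothetical helper, and it assumes the JAR path is a single directory that is also visible to the script (the `SAS_HADOOP_JAR_PATH` lookup only works if that variable is set in the operating system environment, not just inside the SAS session).

```python
import os
import zipfile


def jars_containing(jar_dir, class_name):
    """Return sorted names of .jar files in jar_dir that contain class_name."""
    # A class a.b.C is stored inside a JAR as the entry a/b/C.class.
    entry = class_name.replace(".", "/") + ".class"
    hits = []
    for file_name in sorted(os.listdir(jar_dir)):
        if not file_name.endswith(".jar"):
            continue
        path = os.path.join(jar_dir, file_name)
        try:
            with zipfile.ZipFile(path) as jar:
                if entry in jar.namelist():
                    hits.append(file_name)
        except (zipfile.BadZipFile, OSError):
            # Skip unreadable or corrupt archives rather than aborting the scan.
            continue
    return hits


if __name__ == "__main__":
    # Assumption: a single directory; falls back to the current directory.
    jar_dir = os.environ.get("SAS_HADOOP_JAR_PATH", ".")
    if not os.path.isdir(jar_dir):
        print("not a directory:", jar_dir)
    else:
        for cls in (
            "org.apache.xerces.jaxp.DocumentBuilderFactoryImpl",
            "org.w3c.dom.ElementTraversal",
        ):
            hits = jars_containing(jar_dir, cls)
            print(cls, "->", ", ".join(hits) if hits else "not found")
```

A class reported as "not found" is consistent with the missing-JAR diagnosis, but a class that is found does not prove the full set is correct or version-compatible.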

Hi @MALIGHT

Was the hadooptracer program used to pull the JAR files? I don't think there are nearly enough JARs listed in the PNG file to connect to the Hadoop cluster.

Is Apache Knox being used in this HDP cluster?

Best wishes,

Jeff

Hi Jeff Bailey (from many moons ago testing SAS/ACCESS)!

I'm tracking down how we got hold of the JAR files we point to, since I wasn't involved in this end of the process. Knox was not installed as part of our HDP cluster.

After an adjustment on the Hive server node by our IT department this morning, my original PROC SQL step still failed. I decided to add the read_method=jdbc option to the LIBNAME statement, and the PROC SQL step now works. According to the SAS/ACCESS doc, there is no default for the read_method= option, but if I remove it completely or assign read_method=hdfs, it fails with the errors listed in the original post.

Does read_method=jdbc bypass the need to use JAR files?

