- Home

- /

- Solutions

- /

- Data Management

- /

- Performance, joining two tables 14m rows

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi SAS Performance Experts,

I am doing a join of two SAS datasets.

SASDWHB.DMPERG ERG about 160 Gb and 110 m rows

SASDWHB.DMPSTA STA about 17 Gb and 6m rows.

Doing and inner join for a partricular year results in about 14m rows.

Our virutal sas server has 6vCPUs and 64 GB vRAM running SUSE Linux v11 64 bit

and yet the below query runs since 3 hours but I expect something like

3 minuts maximum.

Where do we start to analyse why the performance is so bad?

we do use SASBASE library.

proc sql;

select year, office, country, office_name,car,car_name,residue,residuename,

count(distinct STA.sta_id), count (distinct ERG.erg_id), count(distinct STA.cartypeID)

FROM SASDWHB.DMPSTA STA

INNER JOIN SASDWHB.DMPERG ERG

ON STA.STA_ID = ERG.STA_ID

where year='2015'

;

QUIT;

I am the only person using the server right now.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

You do a join and use 3 count distincts.

This is one of the usual cases where SQL performance in SAS goes from bad to incredibly slow (on the order of watching paint dry and grass growing)

(Four different sorts need to be done, and remerging all counts back into the dataset)

All this is done in one utility file that includes everything (kitchen sink!) and causes massive load on the WORK disk(s)

My suggestion:

Sort each data set separately by sta_id (if not yet sorted that way)

When sorting the dataset that contains year, use a keep= and where= dataset option to subset by year and reduce observation size, and create a new intermediate dataset. (keep= on both datasets, if unneeded columns are present)

Merge in a datastep. While doing that, you can already create a secondary dataset with the count of sta_id.

Did you omit a group by deliberately, or is that a mistake?

And one final consideration: you expect 3 minutes (180 seconds) for a process that requires at least 300 GB to be moved physically. Is your I/O subsystem capable of > 2GB/sec throughput when doing random read/writes?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the fast reply.

Yes, the GROUP BY was cut off while transfering the statement. I have no idea what aour I/O subsystem in capable of. I come from the Oracle world and Oracle would use the filter in the WHERE clause first and work with the 14m rows which are then handled - at least in my experience much faster than what I see here in SAS but then...as you suggest. Using proc sql might not be the best way to go forward here. I should use the sas base syntax. I give it a try.

The dataset is sorted by year and I would start working only with the 2015 data before sorting by IDs. Does that make sense?

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@PhilipH wrote:

Hi,

Thanks for the fast reply.

Yes, the GROUP BY was cut off while transfering the statement. I have no idea what aour I/O subsystem in capable of. I come from the Oracle world and Oracle would use the filter in the WHERE clause first and work with the 14m rows which are then handled - at least in my experience much faster than what I see here in SAS but then...as you suggest. Using proc sql might not be the best way to go forward here. I should use the sas base syntax. I give it a try.

The dataset is sorted by year and I would start working only with the 2015 data before sorting by IDs. Does that make sense?

Yes. Reducing data as early as possible in the analytic chain is the first key to performance tuning. Both vertically (where conditions or subsetting if) and horizontally (keep/drop).

Depending on your data structure, using the compress=yes dataset option will at least reduce the disk space needed for storage; utilty files (sort, SQL) will always be uncompressed.

While RDBMS systems tend to have the whole DB in memory (or in a structure that is close to that), with lots of defined indexes, SAS keeps tables in individual files on disk. Indexes in SAS won't help if they can't be used to extract a rather tiny slice out of the dataset.

My first step would be

proc sort

data=table_a (

keep=year stat_id /* other variables */

where=(year = 2015)

)

out=int_a

;

by stat_id;

run;and then proceed from there

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

If YEAR is in ERG I would strongly suggest moving to SPDE as test at least. This since SPDE has a more powerful index technology than base SAS (make sure YEAR is indexed). Also, SPDE has better I/O threading possibilities (given that your current constraint isn't I/O).

PROC SQL _method;

will give you the chosen SQL plan.

OPTIONS FULLSTIMER;

will give somewhat detail information about resource consumption.

Be sure to set MEMSIZE and SORTSIZE to appropriate values (utilizing your physical memory as much as possible - join means sorting, and is memory intense).

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

There may be issues with your data structure. Could you provide the two proc contents and the join key.

A 17gb dataset with only 6 million rows means that the record length is almost 3,000 bytes.

It is a commmon practice to use codes for long text and even 8 byte bymerics to reduce the width of records.

Both datasets seem very fat?

How many rows and what is the width of the resultant dataset.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@LinusHThanks for your reply. No, YEAR is not in ERG 🙂

@rogerjdeangelisindeed there are about 40 variables (some with lots of texts) in those

datasets BUT for my particular query I only use year, office, country, office_name,car,car_name,residue,residuename

and they are not patriculary long or complex variables.

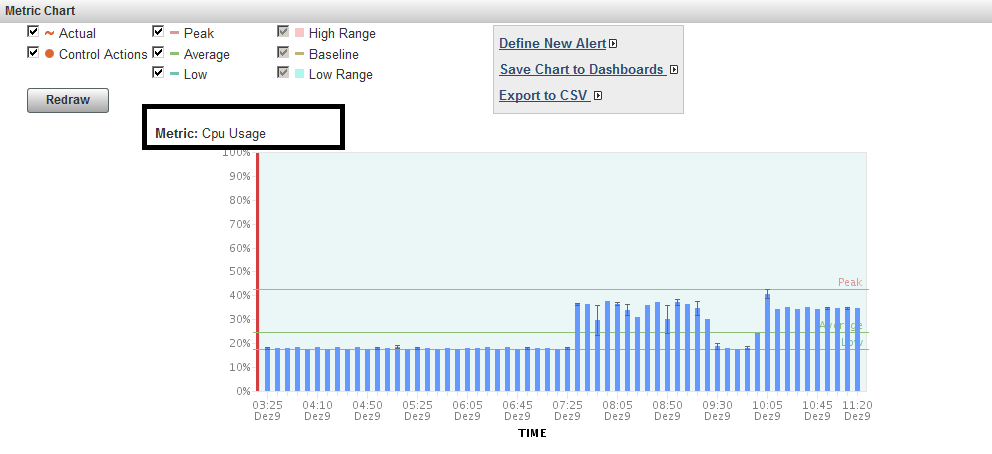

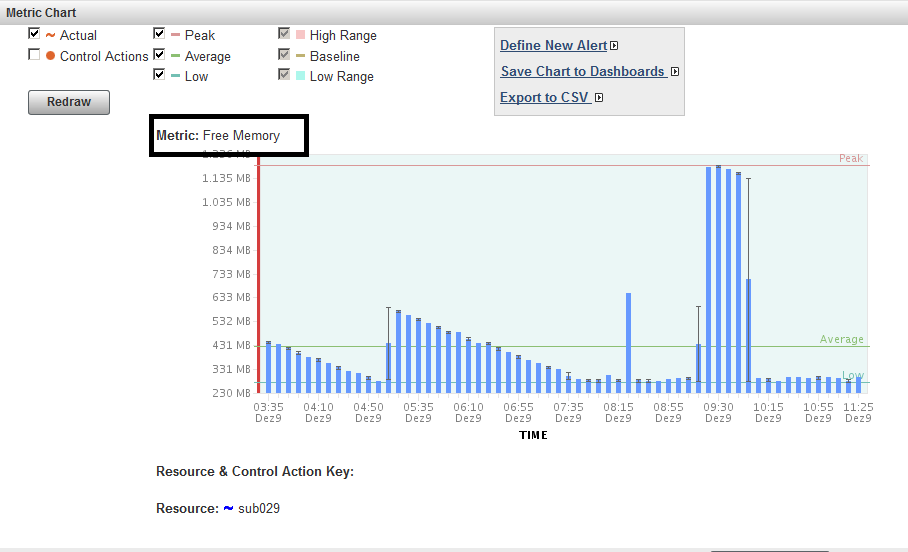

Size statistics are attached. I canceled the Proc SQL so cant really tell you how much it would have

taken. There result set would have hadto count 14m rows and resulted in maybe 400 grouped by

variables like year, office, country, office_name,car,car_name,residue,residuename.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I have to admit after my Windows client just crashed (see last proc sql) I am in

a bit of a faith crisis here. The whole exercise is just a test and does not

even closely represent to the actual work load of the server once 20 developers

starting working with the data and the system goes down left right and centre.

Why are we using SAS again?

I have been developing 8 years with Oracle and SQL Server and never had any of those issues.

I feel worried that I have to do all this pre-sorting and merging. Future

developers and end-users will shoot me if I have to tell them that they have to do that 😄

I try to run the statements on Monday again.

proc sort /*1 minute*/

data=DMPSTAM (

keep=sta_id year office country office_name car car_name cartypeID

where=(year = '2015')

)

out=DMPSTA_SORTED

;

by sta_id;

run;

proc sort /*7 minutes*/

data=DMPERG (

keep=sta_id erg_id residue residuename

)

out=DMPERG_SORTED

;

by sta_id;

run;

/*client EG crashed after trying to load over 6gb of data into memory- the code executed on the server*/

OPTIONS MSGLEVEL=I;

proc sql _METHOD;

select year, office, country, office_name,car,car_name,residue,residuename,

count(distinct STAMM.sta_id), count (distinct erg_id), count(distinct cartypeid)

FROM DMPSTA_SORTED STAMM

INNER JOIN DMPERG_SORTED ERG

ON STAMM.STA_ID = ERG.STA_ID

where year ='2015'

group by year, office, country, office_name,residue,residuename,car,car_name

;

QUIT;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

client EG crashed after trying to load over 6gb of data into memory

Always have a create table in your SQL as else you're creating a report which gets transferred in full to the client side. If you create a Work table in SAS then data remains on the server and if you open the table in EG then only small slices of data get transfered to the client side.

In EG under Options/Results I also always untick "Automatically open data or results when generated". If you open the data then only a sub-set gets transferred to the client side (as it would when you run code using SQL Developer or the like).

If coming from using Oracle then yes, the SAS SQL compiler isn't as powerfull as Oracle's and with the data volumes you're dealing with my ideal setup would be to have the data in a database like Oracle accessed via SAS and then use the best from both worlds.

As with any other environment/application: You want to make sure that it's configured to optimally support what you're doing. With SAS what's important when dealing with high data volumes is to have WORK and UTILLOC pointed to a disk with I/O as fast as possible. Ideally WORK and UTILLOC are also on different disks.

If you join tables then there is always a sort involved (and it's not different with a DBMS). Proc SQL will save the temporary files in the UTILLOC area and the sort is multi-threaded (I believe).

When joining a big table with one or several small tables then one technique in SAS is to use a data step with a hash lookup to the small table. This removes the need to sort the big table and that's the reason why it's often very fast.

If the look-up is only a key/value pair then creating and using a SAS Format is another very efficient way of doing things.

You might be more used to SQL than data step syntax; it's not that hard though to code a SAS hash table lookup as part of a SAS data step once you've got used to the syntax.

Of course creating and using indexes can also speed up things dramatically. Unlike Oracle SAS can't make partial use of an index or use multiple indexes at the same time for the same join condition, so the index must be created over exactly the variables you're using in your join (there can be more variables in the join condition and SAS will then just have table scans on the remaining sub-set after using the index).

And yes, I'd also expect that a WHERE clause gets applied before the join and sub-sets the data sources. On a side note: I'd always use the alias together with variable names as it makes code just so much more readable.

It's no more proper SQL but alternative syntax to sub-set data when using SAS files is:

Proc SQL;

create table ... as

from

libref.mytable(where=(year='2015'))

.....

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I think it is best for Hash Table.

Can you post an example better be data step code, and don't forget post the output.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

This does not solve your problem but I

am having trouble coming with example data.

I was able to join a 160,000,000 11 variable table

with a 6,000,000 2 variable table in under 2 minutes.

I have a very slow old 2008 computer with DDR2 ram.

Newer computers should cut the time in half.

I was unable to create a 14m result?

Do not see a need to parallelize the code(yet)

/* 60k result is a far cry from 14m but I don't see how to get 14m

My resulting table

Result of join of 160,000,000 and 6,000,000

NOTE: Table SD1.RES created, with 60000 rows and 10 columns.

261 ! quit;

NOTE: PROCEDURE SQL used (Total process time):

real time 2:03.27

cpu time 3:19.24

*/

Maybe you only need one variable in the potential 1:1 relationships?

Here are my assumed cardinalities

3 Years

4 Countries

10,000 erg_ids ( algorithm is relatively insensitive to this variable)

1,000 different offices (assume office_name is 1:1 with office)

1,000 different office names (assume office_name is 1:1 with office)

100 different cars 1:1 car names

100 different car names

400 redidues 1:1 with residue name

400 residue name

INPUTS

Up to 40 obs from spde.dmperg160m total obs=160,000,000

OFFICE_

STA_ID OFFICE COUNTRY NAME CAR CAR_NAME RESIDUE RESIDUENAME ERG_ID YEAR

1 A049; C01 D049; E049 F049 G04C H04C 2 2005

1 A0324 C02 D0324 E032 F03F G03< H03< 61 2004

1 A0348 C02 D0348 E034 F03> G03> H03> 81 2003

1 A0;32 C04 D0;32 E0;3 F0;3 G0;= H0;= 49 2004

1 A0252 C01 D0252 E025 F025 G025 H025 80 2005

1 A035: C02 D035: E035 F03S G03? H03? 21 2003

1 A053: C02 D053: E053 F05= G05= H05= 83 2005

1 A069: C04 D069: E069 F069 G0@9 H0@9 54 2003

.....

Up to 40 obs from spde.dmpsta17m total obs=6,000,000

CARTYPE_

Obs STA_ID ID

1 1 33

2 2 44

3 3 55

4 4 66

5 5 77

6 6 88

7 7 99

8 8 ::

9 9 ;;

10 10 <<

WANT ( Cannot get 14m which may imply exact duplicate records)

Up to 40 obs SD1.RES total obs=60,000

OFFICE_ CARTYPE_

Obs OFFICE COUNTRY NAME CAR CAR_NAME RESIDUE RESIDUENAME STA_IDS ERG_IDS IDS

1 A0222 C01 D0222 E022 F022 G022 H022 280 2 43

2 A0222 C01 D0222 E022 F022 G02< H02< 282 2 42

3 A0222 C01 D0222 E022 F022 G0<2 H0<2 152 1 38

4 A0222 C01 D0222 E022 F022 G0<< H0<< 170 1 37

5 A0222 C01 D0222 E022 F02< G022 H022 318 2 42

6 A0222 C01 D0222 E022 F02< G02< H02< 306 2 46

7 A0222 C01 D0222 E022 F02< G0<2 H0<2 159 1 39

8 A0222 C01 D0222 E022 F02< G0<< H0<< 140 1 31

9 A0222 C01 D0222 E022 F02F G022 H022 275 2 45

10 A0222 C01 D0222 E022 F02F G02< H02< 274 2 42

11 A0222 C01 D0222 E022 F02F G0<2 H0<2 156 1 35

12 A0222 C01 D0222 E022 F02F G0<< H0<< 145 1 35

13 A0222 C01 D0222 E022 F02P G022 H022 303 2 43

14 A0222 C01 D0222 E022 F02P G02< H02< 301 2 46

15 A0222 C01 D0222 E022 F02P G0<2 H0<2 146 1 36

16 A0222 C01 D0222 E022 F02P G0<< H0<< 173 1 40

WORKING CODE

============

group

by r.office

,r.country

,r.office_name

,r.car

,r.car_name

,r.residue

,r.residuename

FULL SOLUTION

=============

libname spde spde

('c:\wrk\spde_c','d:\wrk\spde_d','e:\wrk\spde_e','g:\wrk\spde_g','h:\wrk\spde_h')

metapath =('c:\wrk\spde_c\metadata')

indexpath=(

'c:\wrk\spde_c'

,'d:\wrk\spde_d'

,'e:\wrk\spde_e'

,'g:\wrk\spde_g'

,'h:\wrk\spde_h')

datapath =(

'c:\wrk\spde_c'

,'d:\wrk\spde_d'

,'e:\wrk\spde_e'

,'g:\wrk\spde_g'

,'h:\wrk\spde_h')

partsize=500m

;

proc datasets lib=spde kill;

run;quit;

* CREATE INPUT;

data spde.dmperg160m (drop=ran: rec sortedby=country index=(country));

retain sta_id 0;

length

office $5

country $3

office_name $5

car $4

car_name $4

residue $4

residuename $4

erg_id $4;

do rec=1 to 40000000;

do country='C01','C02','C02','C04';

if mod(rec,10) = 0 then sta_id=sta_id+1;

ran=int(100*uniform(5731));

ran1=int(100*uniform(5731));

ran2=int(100*uniform(5731));

year=put(2003+mod(ran,3),4.);

office = cats('A0',byte(mod(ran,10)+50),byte(mod(ran1,10)+50),byte(mod(ran2,10)+50));

office_name = cats('D0',byte(mod(ran,10)+50),byte(mod(ran1,10)+50),byte(mod(ran2,10)+50));

car = cats('E0',byte(mod(ran,10)+50),byte(mod(ran1,10)+50));

car_name = cats('F0',byte(mod(ran,10)+50),byte(mod(ran1,50)+50));

residue = cats('G0',byte(mod(ran,20)+50),byte(mod(ran1,20)+50));

residuename = cats('H0',byte(mod(ran,20)+50),byte(mod(ran1,20)+50));

erg_id = put(mod(ran,9999),4.);

output;

end;

end;

run;quit;

/*

NOTE: The data set SPDE.DMPERG160M has 160000000 observations and 11 variables.

NOTE: DATA statement used (Total process time):

real time 4:47.34

user cpu time 7:01.88

system cpu time 4:11.58

memory 63209.31k

OS Memory 89692.00k

Timestamp 12/09/2016 10:24:40 AM

Step Count 145 Switch Count 9766

*/

data spde.dmpsta17m(index=(sta_id));

retain sta_id 0;

length cartype_id $2;

do sta_id=1 to 6000000;

cartype_id = cats(byte(mod(sta_id,50)+50),byte(mod(sta_id,50)+50));

output;

end;

run;quit;

/*

NOTE: The data set SPDE.DMPSTA17M has 6000000 observations and 2 variables.

NOTE: DATA statement used (Total process time):

real time 3.54 seconds

cpu time 5.67 seconds

1280! quit;

*/

proc sql;

create

table sd1.res as

select

r.office

,r.country

,r.office_name

,r.car

,r.car_name

,r.residue

,r.residuename

,count(distinct l.sta_id) as sta_ids

,count (distinct r.erg_id) as erg_ids

,count(distinct l.cartype_ID) as cartype_IDs

from

spde.dmpsta17m as l

,spde.dmperg160m as r

where

r.year='2005'

and l.sta_id = r.sta_id

group

by r.office

,r.country

,r.office_name

,r.car

,r.car_name

,r.residue

,r.residuename

;quit;

NOTE: Table SD1.RES created, with 60000 rows and 10 columns.

261 ! quit;

NOTE: PROCEDURE SQL used (Total process time):

real time 2:03.27

cpu time 3:19.24

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

car = cats('E0',byte(mod(ran,10)+50),byte(mod(ran1,10)+50));

car_name = cats('F0',byte(mod(ran,10)+50),byte(mod(ran1,50)+50));

should be

car = cats('E0',byte(mod(ran,10)+50),byte(mod(ran1,10)+50));

car_name = cats('F0',byte(mod(ran,10)+50),byte(mod(ran1,10)+50));

Car and vcar_name are not 1:1

Need to connect to databases in SAS Viya? SAS’ David Ghan shows you two methods – via SAS/ACCESS LIBNAME and SAS Data Connector SASLIBS – in this video.

Find more tutorials on the SAS Users YouTube channel.