- Re: DIS: Parallel Processing running sequentially - can't figure out w...

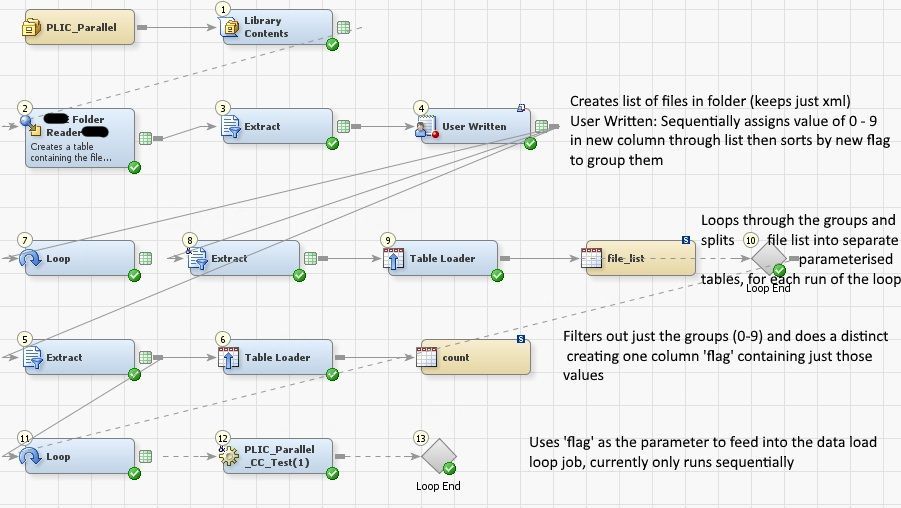

Hi, I'm trying to optimise a process by using parallel processing via a loop. I've done this in the past (mostly) without issue and have copied the loop/parallel part almost verbatim, but I'm having no joy. It works, but it runs sequentially rather than concurrently. I presumed it was waiting on something that was locked, but I've checked and everything is either as parameterised as it can be or in the work library.

Basically, I'm trying to load in X number of XML files, and SAS is incredibly slow at it. For testing I'm using 200 files, which I break down into 10 lists of 20 files, and I would then like it to process these 10 batches in parallel. I've checked in batch and interactively in DI and get the same result.
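The batching described above (200 files split into 10 lists of 20) can be sketched as follows. This is a language-neutral illustration in Python, not the DI Studio job itself; the function name `split_into_batches` and the file names are hypothetical.

```python
def split_into_batches(files, n_batches):
    """Split files into n_batches contiguous lists whose sizes differ by at most one."""
    base, extra = divmod(len(files), n_batches)
    batches, start = [], 0
    for i in range(n_batches):
        # The first `extra` batches take one additional file each.
        size = base + (1 if i < extra else 0)
        batches.append(files[start:start + size])
        start += size
    return batches


# Hypothetical file names standing in for the 200 test XML files.
files = [f"file_{i:03d}.xml" for i in range(200)]
batches = split_into_batches(files, 10)
print([len(b) for b in batches])  # ten batches of 20 files each
```

Each resulting list would then correspond to one row of the parameter table that drives the loop.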

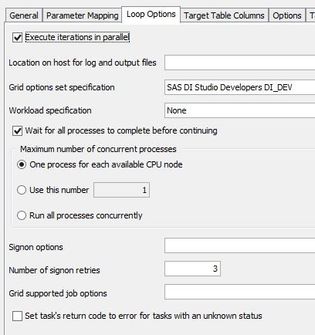

Settings on the loop [step 11] (these work on my previous job). Unticking 'wait for all processes to complete before continuing' makes no difference. I've also tried running the parameter list off the extract (step 5) and off the 'count' table; both did the same:

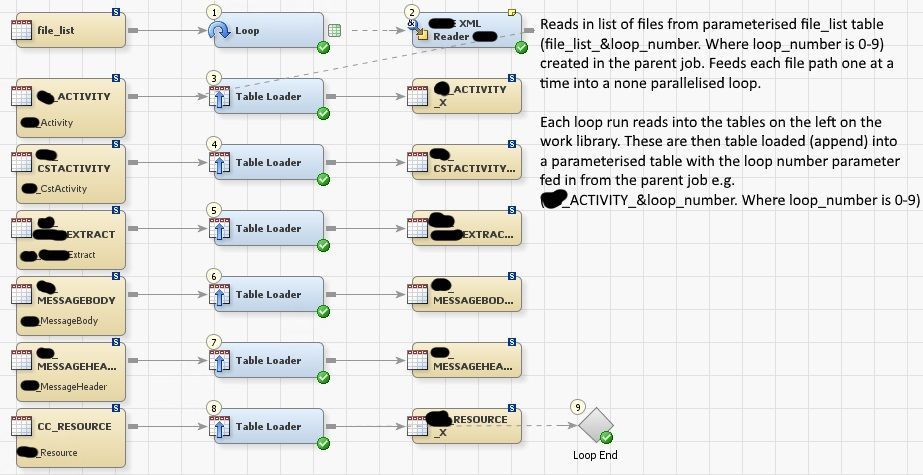

Inside the sub job:

Each loop writes to work or to its own parameterised table that is used only by that loop, so I'm baffled as to why it's running sequentially and not concurrently. I've checked everything I can think of, so hopefully you can spot something. There is nothing at all in the log to indicate that it isn't running concurrently. I get the same in batch and in DI, and I can see in the output tables and grid sessions that it is doing it one at a time.

Thanks.

Anyone got any ideas?

Thanks.

It's really hard - at least for me - to come up with some ideas just by looking at your screenshots and reading your description.

If this is about reading a large set of XML files using parallel processing, then I would expect to see a single loop transformation. The outer job populates a control table with the list of all the XML files. The inner job then uses this control table to populate parameters (two variables: path and file name) and reads a single XML file per iteration into SAS tables (and there, of course, you can select parallel processing so that multiple inner jobs execute at once).

The outer job then collects the results of the inner jobs into consolidated tables. Alternatively, you can implement this directly within the inner job (just make sure to use FILELOCKWAIT and a quick process like Proc Append without maintenance of indexes to avoid file locking issues), or you can keep the resulting tables separate, follow a naming convention, and simply create a view over these tables in the outer job after the loop.
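As an analogy only, the single-loop design (a control table with one row per file, and an inner job that handles exactly one file per iteration) can be sketched in Python with a worker pool. This is not how DI Studio implements its loop transformation; `read_one`, `run_loop`, and the result shape (file name plus a count of child elements) are hypothetical names chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
import xml.etree.ElementTree as ET


def read_one(row):
    """Inner job: parse the single XML file named by one control-table row."""
    directory, file_name = row
    root = ET.parse(Path(directory) / file_name).getroot()
    # Hypothetical result: the file name and the number of top-level child elements.
    return file_name, len(root)


def run_loop(control_table, max_parallel=10):
    """Outer job: run the inner job once per row, at most max_parallel at a time."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # map() returns results in the same order as the control table rows.
        return list(pool.map(read_one, control_table))
```

Here `control_table` is a list of `(path, file name)` pairs, matching the two parameters mentioned above, and `max_parallel` plays the role of the number of concurrent processes allowed by the loop settings or the grid queue.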

I don't understand why you've ended up with that many loops, and especially why you've also got a loop in the inner job.

The other bit is the environment: how many CPUs do you actually have? And if it's a SAS Grid, how many jobs does the queue actually allow you to process in parallel?

I'm essentially doing what you outlined, except that I split the list into 10 in the outer job, run those 10 lists in parallel, and read the XML files within each list sequentially. I then have a final job at the end to put the 10 lists together. It's not very efficient to read each file in as its own parallel loop iteration, as it takes 15-45 seconds to connect to the grid each time and it would have to do that for every file, rather than just for my ten batches.

So say I have 100 files (in reality I have a lot more): they are broken into 10 batches of 10, these 10 batches are done in parallel, and each batch sequentially reads in 10 XML files.
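A rough back-of-the-envelope comparison of the two designs for this 100-file example, sketched in Python. The 30-second connection time is taken as the midpoint of the 15-45 seconds quoted above; the 5-second read time per file is purely an assumption for illustration, as is the simplification that all slots start together and proceed in lockstep.

```python
import math


def wall_time_per_file(n_files, slots, connect_s, read_s):
    """Every file is its own parallel iteration and pays the connection cost."""
    rounds = math.ceil(n_files / slots)
    return rounds * (connect_s + read_s)


def wall_time_batched(n_files, slots, connect_s, read_s):
    """One session per batch: connect once, then read the batch's files in sequence."""
    files_per_batch = math.ceil(n_files / slots)
    return connect_s + files_per_batch * read_s


# 100 files, 10 slots, 30 s to connect (from the thread), 5 s per read (assumed).
print(wall_time_per_file(100, 10, 30, 5))  # 350
print(wall_time_batched(100, 10, 30, 5))   # 80
```

Under these assumptions the connection overhead dominates the one-file-per-iteration design, which is the motivation for batching; with a much shorter connection time the two designs would converge.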

Our grid setup has 30 cores, 120 slots and I'm restricted to using 10 (hence 10 batches).

Thanks.

I may be missing something, but as I understand it, when you run in parallel each parallel process uses rsubmit and creates its own session with its own connection to the grid, so there isn't really an option to "cheat the queue" by having 10*10 jobs run in parallel. If you really need this, then talk to your SAS admin about getting a different queue that allows that many processes in parallel.

I'm not a big fan myself of running loop processes in parallel, as I find that dealing with multiple parallel child processes adds a lot of complexity to debugging when something goes wrong. I certainly wouldn't nest loops the way you've done it, with another loop in the inner job.

I'm very much in favour of performance-optimised jobs, but I care at least as much about keeping things simple and maintainable. For this reason I'd go for a simple design with a single loop job, and if connecting to the grid takes too long then I'd possibly choose to run 15 jobs in parallel so that I circumvent the wait time at least partially. Other problems, such as jobs queuing up too much, shouldn't happen, and if they do then they are something for the SAS admin to resolve.

With a simple design, investigating what goes wrong and why the inner jobs aren't executing in parallel (if that still happens) should also become much simpler.
