SAS ESP Failover Setup

As the focus on the Internet of Things intensifies, SAS Event Stream Processing (ESP) momentum continues to build. A public SAS web page highlights this growing area of streaming analytics by explaining a relatively new offering named SAS Analytics for IoT and its capabilities, of which SAS ESP is the centerpiece. One can expect that additional offerings will follow as other solutions explore and identify ways to integrate SAS ESP that provide value and a competitive edge.

Driven by an internet that never sleeps, corporations are becoming more global in nature, resulting in increased demand for continuous accessibility. Customers implementing these offerings and solutions will integrate them with live streaming sources and will likely expect key portions of related offerings to be highly available. Given this expectation, it may be beneficial to familiarize yourself with the concepts and components of SAS ESP failover. In this blog we will identify the components, their prerequisites and setup, and step through a brief test of failover created within a SAS internal environment. This blog assumes familiarity with basic SAS ESP and RabbitMQ architecture and components.

ESP Failover Basics

Most people are familiar with some of the basic concepts of highly available systems and failover. These terms are not synonymous, but are related. Failover is one mechanism implemented to improve availability. The idea is to ensure uninterrupted processing of data in the event of a failure within a business critical environment.

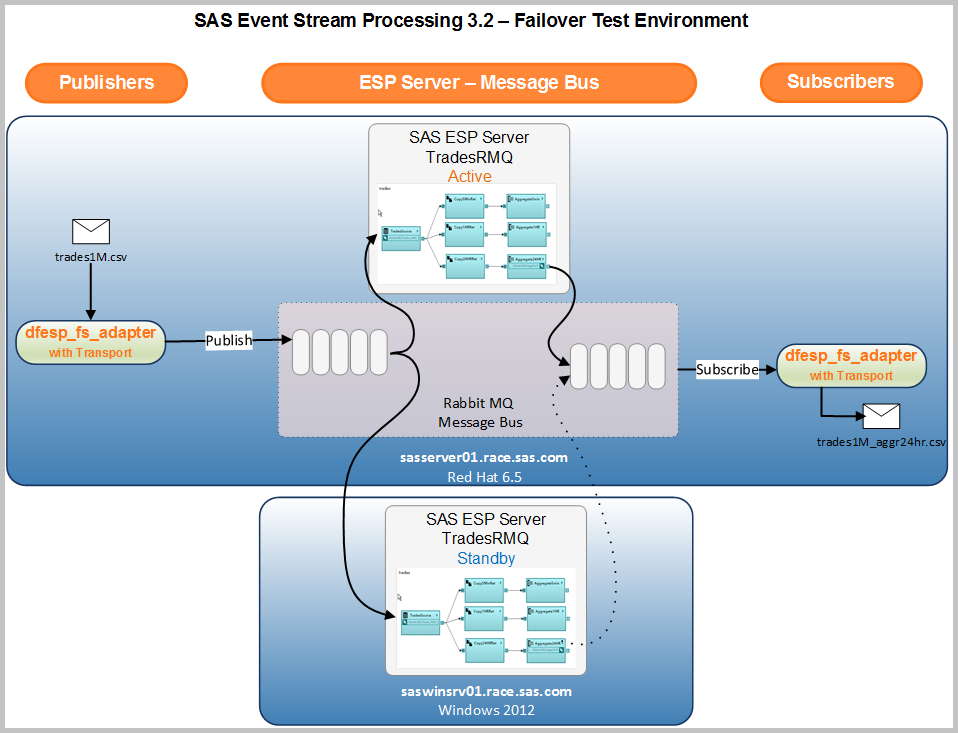

While Hadoop duplicates data blocks and then shifts processing to the nodes with the duplicate blocks in the event of a node failure to enhance availability, SAS ESP employs the concept of active/standby to improve availability. In an active/standby environment, one host acts as the active node and one or more hosts provide standby capability waiting to take over processing in the event of a failure. The following diagram depicts the basic components of SAS ESP failover setup.

The following list highlights the key components and steps for establishing a failover environment.

- SAS ESP failover setup requires a message bus (a message queueing system). There are five supported flavors, of which one must be selected:

  - RabbitMQ (Open source software)

  - Kafka (Open source software)

  - Solace (Appliance)

  - Tervela (Appliance)

  - Solace VMR (Software)

- The message bus is architected as a primary and secondary pair for messaging appliance failover. In the diagram above the two message buses may be on the same appliance, but a second appliance would be required for availability.

- SAS ESP uses 1+N cluster failover. A 1+N cluster is defined as one active ESP server and one or more standby servers, where active/standby identifies the state of the event stream processors.

- Each active and standby ESP instance processes event blocks retrieved from the message bus, but only the active ESP instance sends blocks to the subscriber message bus.

- In the event of a failure on the active ESP instance, the message bus detects that the ESP server is no longer present and one of the standby instances becomes the active instance.

- RabbitMQ, Tervela and Solace connectors within an ESP engine communicate with the message bus. These connectors subscribe from and publish to a highly-available message bus.
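To make the active/standby behavior in the list above more concrete, here is a minimal Python sketch. It is a toy model and not ESP code: the Engine and Cluster classes, and the rule that the first live engine in the list is treated as the active one, are assumptions made purely for illustration. In a real deployment the message bus decides which standby takes over.

```python
from dataclasses import dataclass, field


@dataclass
class Engine:
    """Hypothetical stand-in for one ESP engine instance."""
    name: str
    alive: bool = True
    processed: int = 0


@dataclass
class Cluster:
    """Toy 1+N cluster: every live engine processes, only the active one publishes."""
    engines: list
    published: list = field(default_factory=list)

    def active(self):
        # Assumption: the first live engine in the list plays the active role.
        for engine in self.engines:
            if engine.alive:
                return engine
        return None

    def deliver(self, block):
        active = self.active()
        # Active and standby engines all process the incoming event block.
        for engine in self.engines:
            if engine.alive:
                engine.processed += 1
        # Only the active engine sends its result to the subscriber side.
        if active is not None:
            self.published.append((active.name, block))


cluster = Cluster([Engine("redhat"), Engine("windows")])
for block in range(3):
    cluster.deliver(block)
cluster.engines[0].alive = False  # simulate a failure of the active engine
for block in range(3, 5):
    cluster.deliver(block)
print([name for name, _ in cluster.published])
```

The standby engine has processed every block all along, which is why it can take over publishing without replaying the stream from the beginning.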

This section only briefly touched on the basics of ESP failover. For a more complete description and understanding please review Chapter 21, "Implementing 1+N-Way Failover" in the SAS® Event Stream Processing 3.2 - User’s Guide.

Failover Prerequisites and Setup using RabbitMQ

Since message bus appliances are not readily available within our environment, testing a failover scenario required using the RabbitMQ message bus. As noted earlier, RabbitMQ is readily available as open source software. Therefore these prerequisites were completed for a RabbitMQ environment. Greater detail can be found in the SAS ESP User's Guide.

- RabbitMQ server - Download, install and configure the software. Requires Erlang. Instructions can be found at the following link.

  https://www.rabbitmq.com/install-rpm.html

- RabbitMQ client (ESP requires V0.5.2) - Download the client and compile the client libraries. Requires the CMake and openssl-devel packages.

  https://github.com/alanxz/rabbitmq-c

  http://support.sas.com/kb/56/208.html

- LD_LIBRARY_PATH - Add the path of the client libraries to the LD_LIBRARY_PATH environment variable.

- RabbitMQ presence-exchange plug-in - Required for hot failover operation. After downloading, copy the plug-in to the directory containing the RabbitMQ libraries. Enable it via the rabbitmq-plugins command.

  https://github.com/tonyg/presence-exchange

- $DFESP_HOME/etc/connectors.excluded - Remove rmq from this file to load the RabbitMQ plugin so that the RabbitMQ connector is available

- rabbitmq.cfg - RabbitMQ client applications require a configuration file containing connection parameters. This file must be in the current directory of the client
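Two of the prerequisites above are easy to get wrong, so a small check can help. The following Python sketch is only an illustration: the function names are hypothetical, the example library directory is made up, and it assumes that connectors.excluded lists one connector name per line.

```python
import os


def remove_excluded(text: str, name: str = "rmq") -> str:
    """Return the file text without any line that names the given connector.

    Assumption: connectors.excluded holds one connector name per line.
    """
    kept = [line for line in text.splitlines() if line.strip() != name]
    return "\n".join(kept) + ("\n" if kept else "")


def on_library_path(directory: str, ld_library_path: str) -> bool:
    """Check whether a directory is one of the colon-separated path entries."""
    entries = [os.path.normpath(p) for p in ld_library_path.split(":") if p]
    return os.path.normpath(directory) in entries


# Hypothetical file contents and paths, for illustration only.
print(remove_excluded("mq\nrmq\nsol\n"))
print(on_library_path("/opt/rabbitmq-c/lib", "/usr/lib:/opt/rabbitmq-c/lib/"))
```

In practice the text would be read from $DFESP_HOME/etc/connectors.excluded and the path string from the LD_LIBRARY_PATH environment variable.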

ESP Model Setup

Once RabbitMQ has been installed and the various related components configured, the next step is to create a model with appropriate connectors and adapters in a failover environment. Of course we will need at least two machines. An environment meeting this requirement was created for an internal course. This collection contains two machines, one Red Hat and one Windows. A model, based on the VWAP model (tradesDemo) available in the ESP examples, was created on the Red Hat machine and tested in SAS ESP Studio. The following screen shot from SAS ESP Studio shows the windows defined in the model. This model was initially tested using the file and socket connectors.

The model was then modified to receive data from a dynamic queue bound to a specific routing key by adding a connector to the source window. When the file and socket adapter publishes data using the transport option, it writes to a dynamic RabbitMQ queue that is subsequently consumed by the connector defined in the source window. In order to publish to a RabbitMQ exchange, an exchange was created manually. An exchange serves the role of a message router. In this test the same exchange was also used for subscribing.
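The Trades exchange used in this test was defined with a type of Topic. As a rough illustration of how a topic exchange uses routing keys to decide which bound queues receive a message, here is a small Python sketch of the standard RabbitMQ matching rules, where `*` stands for exactly one dot-separated word and `#` for zero or more words. The binding patterns and routing keys shown are hypothetical and are not the keys that the ESP connectors actually generate.

```python
def topic_matches(pattern: str, routing_key: str) -> bool:
    """Return True if a topic binding pattern matches a routing key."""
    p = pattern.split(".")
    k = routing_key.split(".")

    def match(i: int, j: int) -> bool:
        if i == len(p):
            # Pattern exhausted: match only if the key is exhausted too.
            return j == len(k)
        if p[i] == "#":
            # '#' may absorb zero or more words of the routing key.
            return any(match(i + 1, jj) for jj in range(j, len(k) + 1))
        if j == len(k):
            return False
        if p[i] == "*" or p[i] == k[j]:
            # '*' matches exactly one word; otherwise words must be equal.
            return match(i + 1, j + 1)
        return False

    return match(0, 0)


# Hypothetical bindings and keys, for illustration only.
print(topic_matches("trades.*", "trades.source"))
print(topic_matches("trades.*", "trades.source.extra"))
print(topic_matches("trades.#", "trades"))
```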

In a similar manner, a RabbitMQ connector was defined in one of the aggregate windows that will push events to a second RabbitMQ queue via the exchange. A second file and socket adapter was then executed to subscribe to events by creating a dynamic queue for an exchange and routing key and write them to a file. The model was tested on the Red Hat machine using the trades1M.csv sample file to ensure events successfully flow through the model and are written to a CSV file.

To summarize, the following steps were taken to set up the failover environment:

- The original ESP Studio project was copied to the second machine (in this case the Windows server)

- The ESP Studio JSON file was modified to use an XML server on the Windows machine

- XML servers were started on both machines

- A RabbitMQ exchange (Trades) was defined with type of Topic and durability as Transient

- RabbitMQ connectors were defined for a source window (TradesSource) and an aggregate window (Aggregate24HR)

- A file and socket adapter was used to publish events to a source window (TradesSource) using the transport option

- A file and socket adapter was used to subscribe from an aggregate window (Aggregate24HR) using the transport option

The following diagram provides an overview of the failover environment that was created. TradesRMQ is the engine instance and trades is the project name, not to be confused with the exchange name. The RabbitMQ message bus, SAS ESP adapters and one instance of the ESP engine ran on the Red Hat machine, sasserver01.race.sas.com. The CSV "event" files, published and subscribed, were also located on the Red Hat machine. The only thing that runs on the Windows machine is the second instance of the TradesRMQ engine.

Testing parallel models

Now that RabbitMQ has been installed and configured and connectors in the models have been modified to use RabbitMQ queues, it is time to test. As you might imagine, at least two tests are necessary: one test without a failed ESP server and one test with a failed ESP server.

Earlier in our model setup the XML servers were started. The next step was to load the modified model in Test mode in SAS ESP Studio on each host and start it. At this point the adapters have not been started, so there are no events flowing.

The web-based RabbitMQ management tool can be used to monitor and watch queues. It was noted earlier that the Trades exchange was defined. Queues are bound to this exchange and routing keys are used to direct events within RabbitMQ. After starting the models in Test mode, the list of exchanges can be viewed using the management tool and it appeared as follows.

We discussed that the Trades exchange was manually created. However, once the model was started the Trades_failoverpresence exchange was automatically created by the subscriber connectors. The subscriber connector is configured with the “hotfailover” option which triggers the creation of the Trades_failoverpresence exchange. Notice the exchange Type is x-presence, which is the plug-in that was added after RabbitMQ was installed. This is the mechanism that detects which engine is active and binds to a Standby engine if the Active engine fails.
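The same exchange information can also be read programmatically. The sketch below assumes that the RabbitMQ management plug-in is enabled and listening on its usual HTTP port of 15672 (older RabbitMQ releases used a different port), and that the default virtual host "/" is in use. The function names are hypothetical and the credentials would need to be supplied by you.

```python
import base64
import json
import urllib.parse
import urllib.request


def exchanges_url(host: str, port: int = 15672, vhost: str = "/") -> str:
    """Build the management API URL that lists exchanges in one virtual host."""
    encoded_vhost = urllib.parse.quote(vhost, safe="")
    return f"http://{host}:{port}/api/exchanges/{encoded_vhost}"


def fetch_exchanges(host, user, password, port=15672, vhost="/"):
    """Call the management API with basic authentication and parse the JSON."""
    request = urllib.request.Request(exchanges_url(host, port, vhost))
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def exchange_types(exchanges):
    """Map exchange name to type, skipping the unnamed default exchange."""
    return {e["name"]: e["type"] for e in exchanges if e["name"]}


# Hand-written sample shaped like an API response, for illustration only.
sample = [
    {"name": "", "type": "direct"},
    {"name": "Trades", "type": "topic"},
    {"name": "Trades_failoverpresence", "type": "x-presence"},
]
print(exchange_types(sample))
```

Against a live broker, `exchange_types(fetch_exchanges(host, user, password))` should include the Trades exchange and, once the models are running, the Trades_failoverpresence exchange.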

Even though no events have been published to the model, queues have been created and appear in the following screen shot of the queues status. Although it is beyond the scope of this blog, there are queues created for connectors, subscribers, failover and metadata. This metadata includes information about messages. Additional information about each queue can be viewed by selecting the queue name.

Now that the model is running in test mode, the next step is to start the file and socket adapter to subscribe to the Aggregate24HR window within the model using the transport option. The command is shown below.

Notice the last parameter. The -l parameter specifies the transport type. When rabbitmq is specified, the adapter looks for the rabbitmq.cfg configuration file in the current directory for RabbitMQ connection information. This was configured in an earlier step. The subscriber is started before the publisher to ensure all events are captured from the model.

The subscriber adapter creates a new associated queue that is specified as the “Output” queue, as indicated by the last character in the queue name shown below. Events processed by the Aggregate24HR window are placed in this queue where the adapter retrieves them and writes them to the trades1M_aggr24hr.csv file.

Now that the model is running in test mode and a subscriber adapter has been started, a file and socket adapter is started to publish events into the model. Again, the -l option is specified to select the transport option, with rabbitmq as the message bus. The command to accomplish this is shown below.

Typically the block parameter is specified to reduce overhead, but in this scenario blocking was not specified resulting in a blocking factor of one. This was done to intentionally slow the throughput.
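To see why the blocking factor matters, consider how many event blocks have to travel through the message bus. The following Python sketch is a simplified illustration and not the adapter's actual implementation: the to_blocks function is hypothetical, and the event list is just a range of integers.

```python
def to_blocks(events, block_size=1):
    """Group events into consecutive blocks of at most block_size events."""
    if block_size < 1:
        raise ValueError("block_size must be at least 1")
    return [events[i:i + block_size] for i in range(0, len(events), block_size)]


events = list(range(10))
print(len(to_blocks(events)))        # blocking factor of one
print(len(to_blocks(events, 4)))     # larger blocks, fewer messages
```

With a blocking factor of one, every event becomes its own block, so the per-message overhead is paid once per event. That is what slowed the throughput in this test.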

As events start flowing through the model, it is possible to review the number of event blocks incoming to the queue and delivered to the connector.

After all events have been published, an HTTP request to the HTTP admin port on each XML server can be used to confirm that both engine instances processed the same number of records. It is also possible to acquire this information using the UNIX curl command and a REST call.
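Once the per-window counts have been collected from both XML servers, comparing them is straightforward. The sketch below does not show how the counts are fetched, and the numbers are hypothetical placeholders typed in by hand. Only the window names TradesSource and Aggregate24HR come from the model described earlier.

```python
def count_mismatches(first: dict, second: dict) -> dict:
    """Return windows whose event counts differ between two engine instances."""
    mismatches = {}
    for window in sorted(set(first) | set(second)):
        a = first.get(window)
        b = second.get(window)
        if a != b:
            mismatches[window] = (a, b)
    return mismatches


# Hypothetical counts, for illustration only.
redhat = {"TradesSource": 1000, "Aggregate24HR": 250}
windows = {"TradesSource": 1000, "Aggregate24HR": 250}
print(count_mismatches(redhat, windows))
```

An empty result means every window reported the same count on both engines.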

Each model processed the same number of records and all window counts match. This is what we expected. One last thing to check is to review the file containing the events from the Aggregate24HR window using the subscribe adapter.

-rw-r--r--. 1 sasinst sas 98345849 Apr 15 10:41 trades1M_aggr24hr.csv

Failover Testing

Of course we want to ensure that processing continues if one of the XML servers fails. Therefore the previous test was repeated and the active XML server was terminated in the middle of processing events. In this case the active XML server, running on the Red Hat machine, was terminated by using the Ctrl-C interrupt. As expected, ESP Studio issued a message that it could no longer communicate with the XML server.

Notice that several queues associated with the terminated XML server have also been removed, but the subscriber output queue is still delivering messages to the adapter.

A quick way to confirm that the Windows XML server transitioned to the active server is to review the network traffic associated with the Windows machine. The screen shot below shows the host traffic received when events started flowing through the Windows engine. The received traffic, ~20MB/sec, represents the events retrieved from the publish queue (i.e. input to the model). After the failover occurs, you can see that sent traffic spikes to ~18MB/sec, which represents the traffic sent from the connector on Windows to the queue on Linux.

A check of the subscriber file created by the file and socket adapter shows that it has been replaced. The size is the same as that of the file created in the earlier test, when no failover occurred.

-rw-r--r--. 1 sasinst sas 98345849 Apr 15 10:49 trades1M_aggr24hr.csv

Although not shown here, the first output file was renamed and the Linux diff command was used to compare it to the one created in the failover scenario. The results indicated the files are identical. As a result, we have a successful failover.
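If diff is not available, for example on the Windows host, the same check can be sketched in Python with the standard filecmp module. This is an alternative to the diff command used in the test and not what was actually run. The file names below are hypothetical.

```python
import filecmp


def same_content(first_path: str, second_path: str) -> bool:
    """Compare two files byte by byte rather than by size and timestamp."""
    return filecmp.cmp(first_path, second_path, shallow=False)


# Hypothetical usage with the renamed baseline file and the failover output:
# same_content("trades1M_aggr24hr_baseline.csv", "trades1M_aggr24hr.csv")
```

Passing shallow=False matters here, because the two output files have the same size and a metadata-only comparison would not be a sufficient check.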

The same test was repeated and an active Windows XML server was terminated. This scenario produced the same result. The model on the Red Hat engine continued to run and the adapter on the Red Hat machine continued to write events to the CSV file.

Final Thoughts

In this blog we introduced the basics of SAS ESP failover using RabbitMQ as the message bus and performed a simple failover test. Hopefully it has successfully introduced the prerequisites for installing and configuring RabbitMQ as well as establishing a failover SAS ESP environment on multiple hosts. In most if not all cases the ESP hosts will reside on the same operating system. By testing in our homegrown virtual environment, we showed that failover also works in a heterogeneous environment.

Please note that this testing was performed with SAS ESP 3.2, but the same holds true for subsequent ESP versions. Also note that SAS ESP 4.1 and 4.2 are not supported on Windows. Support for Windows was reintroduced in SAS ESP 4.3.

Hi @MarkThomas ,

Great article, it has helped us a lot. We have one question where we are stuck, if you can help.

How do we install and compile the RabbitMQ client? The other dependent packages are already in place. I went to the RabbitMQ website and found multiple client libraries developed with different technologies.

Do we need to use any of them as well?

Thanks,

Kaushal Solanki

Hi Kaushal,

Thanks for reading.

Would you provide a little more detail on your comment "there are multiple client libraries in place developed with different technology"?

Depending on which version of ESP you are using you may have to compile older client run-time libraries. For ESP 6.1 you can use the rabbitmq-c V0.9.0. Once compiled use the LD_LIBRARY_PATH to reference them. Check the "Connectors and Adapters" section of the doc for more info.

https://go.documentation.sas.com/?cdcId=espcdc&cdcVersion=6.1&docsetId=espca&docsetTarget=p1k84sxazg...

Hope this helps.

Thanks,

Mark

Hi @MarkThomas,

Thanks for the response. We resolved this with rabbitmq-c V0.5.0, as we are on ESP 3.2.

Thanks to you, we successfully tested both scenarios: failover testing and parallel models.

Thanks,

Kaushal Solanki

Great explanatory article.

I have some questions:

1) If the db_adapter is running on both servers with the '-l rabbitmq' parameter, will data be stored in the DB from only the active server?

2) If the RabbitMQ subscriber connector is used for output, is the 'hotfailover' parameter sufficient to ensure that only the active server sends output to the RabbitMQ queue?

Thanks in advance.
