Reinforcement Learning with Custom Environments on SAS Viya Visual Data Mining and Machine Learning

Reinforcement learning, a branch of machine learning, can be used to solve a great multitude of problems. Applications span from stock market trading bots to automated medical diagnosis. In this post, we will be exploring the reinforcement learning tools provided with SAS Viya Visual Data Mining and Machine Learning.

This is the first post in my series on reinforcement learning with custom environments on SAS Viya VDMML:

- Reinforcement Learning with Custom Environments on SAS Viya Visual Data Mining and Machine Learning (this post)

- Deep Q-Learning with Custom Reinforcement Learning Environments on SAS Viya Visual Data Mining and M...

What is Reinforcement Learning?

Adapted from the SAS Reinforcement Learning Programming Guide:

Reinforcement learning (RL) is a branch of machine learning that focuses on performing a series of actions in a given environment. While other branches of machine learning focus on labeling data (supervised learning) or finding patterns in data (unsupervised learning), reinforcement learning relies on reward signals for feedback. These reward signals can be positive or negative and are assigned automatically based on the actions the model takes.

RL algorithms aim to train a model to make the best possible decisions. To do so, the model tries to maximize the total reward accumulated over a series of actions. When a model is trained in an environment, it learns to associate each pairing of an observation and an action with a reward. If an action consistently returns a positive reward, the model learns to prioritize that action. Notably, models can learn to make short-term sacrifices for the sake of a long-term reward, allowing them to make complex, human-like decisions.
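To make "total reward accumulated over a series of actions" concrete, here is a minimal Python sketch of the discounted return that RL methods commonly maximize, G = r0 + γ·r1 + γ²·r2 + …, where the discount factor γ weights later rewards less (presumably the role of the `gamma` parameter that appears later in this post). The two reward sequences are made-up numbers chosen to show how a short-term sacrifice can still come out ahead.

```python
def discounted_return(rewards, gamma):
    """Return the discounted sum r0 + gamma*r1 + gamma^2*r2 + ... of a reward sequence."""
    total = 0.0
    # Walk backwards so each step computes total = r_t + gamma * total
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical reward sequences for two strategies
greedy = [1.0, 0.0, 0.0]     # take a small reward immediately
patient = [-1.0, 0.0, 5.0]   # accept a penalty now for a larger reward later

gamma = 0.9
print(discounted_return(greedy, gamma))   # 1.0
print(discounted_return(patient, gamma))  # approximately 3.05
```

Even after discounting, the "patient" sequence is worth more, which is exactly the kind of trade-off an RL model can learn to make.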

Reinforcement learning can be compared to the classic science experiment in which a lab rat is taught to navigate a maze. When the rat is first exposed to the maze (its environment), it may take a while to reach the end, but when it does, it receives a positive reward: a piece of cheese. With repeated exposure to the maze, the rat learns to reach the cheese faster. Inside its brain, the rat is developing a model over time, encouraged to do so by a positive reward.

Reinforcement Learning on SAS Viya Visual Data Mining and Machine Learning

SAS Viya Visual Data Mining and Machine Learning (VDMML) features an RL action set named reinforcementLearn. This action set contains a series of actions that let you train an RL model in SAS Viya VDMML using one of several algorithms. As of LTS 2022.1, the action set provides five algorithms: Deep Q-Network, Fitted Q-Network, Actor-Critic, REINFORCE, and REINFORCE-RTG, all of which can be applied to an environment or historical data to generate a model. In this post, we focus on the Deep Q-Network (DQN) algorithm. For more information on all five algorithms, see the SAS Reinforcement Learning Programming Guide.

Alongside training actions, the reinforcementLearn action set also provides actions for scoring and exporting trained models. Scoring allows us to expose a fully trained model to an environment, which can be used to determine the effectiveness of the model.

The RL action set also provides a built-in environment that you can train the algorithms against. This environment runs entirely inside SAS Viya without any external tools. In addition, SAS provides an open-source Python package named sasrl-env for running RL environment servers, which lets you use SAS's RL action set with custom environments that you create yourself.

Using Built-In Environments

SAS Viya’s reinforcementLearn action set contains a built-in RL environment from OpenAI Gym, an open-source project that sets the standard interface for RL environments. This built-in environment, CartPole, is very useful for trying out the reinforcement learning actions.

CartPole is an environment composed of a pole balanced atop a moving cart. At every time step, an RL algorithm must push the cart either left or right; there is no option to leave the cart alone. The goal is to keep the pole upright for as long as possible.


Because the goal is for the pole to remain upright, the environment gives a reward of +1 for every time step that the pole stays upright. A CartPole-v0 episode can end in one of three ways: the cart moves too far left or right, the pole tilts more than 12 degrees from vertical, or the episode reaches 200 time steps. (CartPole-v0 is considered solved when the average total reward over 100 consecutive episodes is at least 195.)
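The ending conditions can be expressed as a short Python check. The thresholds below are the ones OpenAI Gym uses for CartPole-v0: cart position beyond ±2.4, pole angle beyond ±12 degrees, and a 200-step time limit (195 is the average reward at which the task counts as solved, not an episode cutoff). The function name `episode_done` is hypothetical and is not part of Gym or the SAS action set.

```python
import math

# Thresholds used by OpenAI Gym's CartPole-v0
X_THRESHOLD = 2.4                          # cart position limit in either direction
THETA_THRESHOLD = 12 * 2 * math.pi / 360   # 12 degrees, expressed in radians
MAX_STEPS = 200                            # time limit for CartPole-v0


def episode_done(x, theta, step_count):
    """Return True if a CartPole-v0 episode should end (hypothetical helper).

    x          -- cart position
    theta      -- pole angle from vertical, in radians
    step_count -- number of steps taken so far in the episode
    """
    out_of_bounds = abs(x) > X_THRESHOLD
    pole_fell = abs(theta) > THETA_THRESHOLD
    time_limit = step_count >= MAX_STEPS
    return out_of_bounds or pole_fell or time_limit


# Every step earns +1, so an episode's total reward equals its number of steps
print(episode_done(0.0, 0.0, 10))               # False
print(episode_done(2.5, 0.0, 10))               # True: cart left the track
print(episode_done(0.0, math.radians(13), 10))  # True: pole past 12 degrees
print(episode_done(0.0, 0.0, 200))              # True: time limit reached
```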

Run the code below to train a DQN with the CartPole environment:

Train a DQN with the Built-In CartPole Environment

```python
import swat

# Not shown: creating a SWAT connection (sess = swat.CAS(...))

# Load the RL action set
sess.loadactionset('reinforcementLearn')

# Train our RL model using a Deep Q-Network
sess.reinforcementLearn.rlTrainDqn(
    # Choose our environment: built-in CartPole-v0
    environment=dict(type='builtin', name='CartPole-v0'),
    # The neural network used to estimate the Q-values:
    # two fully connected hidden layers of 32 neurons each
    QModel=[32, 32],
    # The number of "runthroughs" (episodes) the algorithm trains on
    numEpisodes=151,
    # The optimizer used with the deep learning model
    optimizer=dict(method='ADAM', miniBatchSize=128),
    # The minimum and maximum number of records kept in the replay memory
    minReplayMemory=1000,
    maxReplayMemory=100000,
    # The exploration strategy: linear decay of epsilon from 1.0 to 0.05
    exploration=dict(type="linear", initialEpsilon=1.0, minEpsilon=0.05),
    # How often to update the target Q-model parameters
    targetUpdateInterval=100,
    # How often to test the DQN
    testInterval=50,
    # How many "runthroughs" are used each time the DQN is tested
    numTestEpisodes=10,
    # The table the DQN structure/weights are saved to once training is done
    modelOut=dict(name='dqnWeights', replace=True),
)
```
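The replay-memory and target-network parameters above correspond to standard DQN ideas. The sketch below is a simplified, framework-free illustration, not SAS's implementation: transitions go into a bounded buffer, learning waits until at least `minReplayMemory` transitions are stored, the oldest entries are evicted beyond `maxReplayMemory`, and each sampled transition is regressed toward the target r + γ·max Q_target(s′, a′), where Q_target is a copy of the network refreshed every `targetUpdateInterval` steps. The class and function names are hypothetical.

```python
import random
from collections import deque


class ReplayMemory:
    """Bounded store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, min_size, max_size):
        self.min_size = min_size
        # A deque with maxlen silently drops the oldest transition when full
        self.buffer = deque(maxlen=max_size)

    def add(self, transition):
        self.buffer.append(transition)

    def ready(self):
        # Training only begins once enough experience has been collected
        return len(self.buffer) >= self.min_size

    def sample(self, batch_size):
        # Uniform random minibatch, which breaks correlation between consecutive steps
        return random.sample(list(self.buffer), batch_size)


def dqn_target(reward, next_q_values, done, gamma):
    """TD target for one transition; next_q_values come from the target network."""
    if done:
        # No future reward after a terminal state
        return reward
    return reward + gamma * max(next_q_values)


memory = ReplayMemory(min_size=1000, max_size=100000)
print(memory.ready())                           # False: the buffer is empty
print(dqn_target(1.0, [0.5, 2.0], False, 0.9))  # approximately 2.8 (1.0 + 0.9 * 2.0)
```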

You can now score the newly trained model using the code below:

Score a DQN with the Built-In CartPole Environment

```python
sess.reinforcementLearn.rlScore(
    # Use 1 CPU thread
    nThreads=1,
    # Choose our environment: built-in CartPole-v0
    environment=dict(type='builtin', name='CartPole-v0'),
    # Use the model we just created
    model='dqnWeights',
    # Run 5 "runthroughs" (episodes)
    numEpisodes=5,
    # Write the Q-values at each observation to our table
    writeQValues=True,
    # Create our table with the scoring data
    casout=dict(name='scoreTable', replace=True),
)

# Load the table we just created and print some relevant columns
print(sess.CASTable('scoreTable').loc[:, ['_Episode_', '_QVal_0_', '_QVal_1_', '_Action_']].to_string())
```

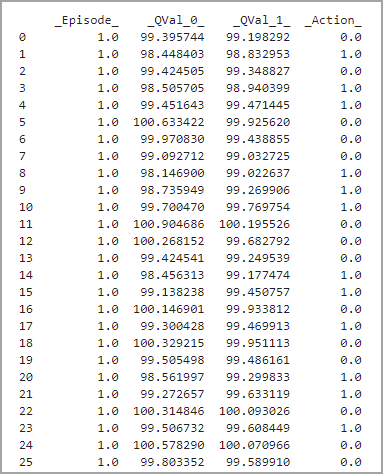

Examining the output table shows how the model makes decisions. At every time step, the model's Q-network produces two Q-values (`_QVal_0_` and `_QVal_1_`), one for each of the two possible actions. These Q-values are computed at each time step from the current observation, using the weights learned during training. The model always executes the action with the highest Q-value, which is recorded in the `_Action_` column. Because the observations change from one time step to the next, so do the Q-values. When a model has been given ample training, these Q-values are tuned so that the action the model chooses maximizes the total reward.
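The greedy rule described above takes only a few lines of Python. The rows below are made-up values shaped like the scoring table's `_QVal_0_`, `_QVal_1_`, and `_Action_` columns; to check real output you would first pull the rows to the client with SWAT (for example via `CASTable.to_frame()`), which this sketch does not do.

```python
def greedy_action(q_values):
    """Index of the largest Q-value (ties go to the lowest index)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Hypothetical scoring rows: (_QVal_0_, _QVal_1_, _Action_)
rows = [
    (12.4, 13.1, 1),
    (14.0, 13.2, 0),
    (11.7, 11.9, 1),
]

for q0, q1, action in rows:
    chosen = greedy_action([q0, q1])
    # A greedy scorer should always agree with the recorded action
    print(chosen, action, chosen == action)
```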

CartPole is great for testing and learning the RL action set. In real-life customer scenarios, though, environments often have to be built from scratch. Moving forward, we will work with a custom environment server, which lets us use custom-made environments. The custom environment server also lets us watch an RL model interact with the environment in real time via rendering, which is not an option when using built-in environments.

Setting up sasrl-env

SAS offers an open-source Python package called sasrl-env for running RL environment servers. This allows the SAS reinforcement learning action set to be used with custom RL environments.

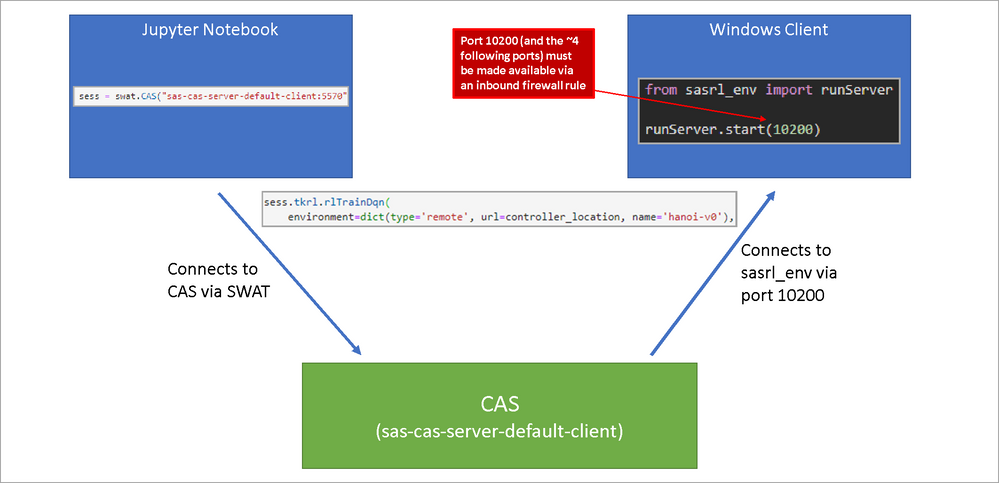

When using sasrl-env with the SAS RL action set, three components work together to train the RL model:

- sasrl-env Client

- CAS Server

- CAS Client

In my implementation, I used a Jupyter Notebook on JupyterHub as the CAS client, a CAS server available on my network, and my Windows machine as the sasrl-env client. My solution is not the only one: there are many options for the CAS client and the sasrl-env client. For instance, you could use SAS Studio as the CAS client, or use JupyterHub as the sasrl-env client. I chose this setup because Jupyter Notebook is easy to use, and running the sasrl-env server on my Windows machine lets me easily view the rendered environment.

Communication between the sasrl-env server and the CAS server must be enabled. If you are running the sasrl-env server on a Windows client, check your firewall rules to ensure that inbound traffic is allowed on the ports sasrl-env uses. Before altering your firewall, though, be sure to talk with your system administrator.

Running sasrl-env

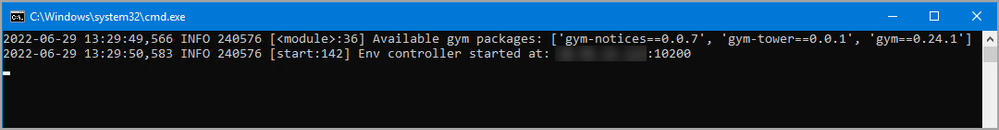

In my implementation, I created a batch file named startEnvironment.bat to automate all of the tasks necessary to create a remote environment server. Running startEnvironment.bat starts the sasrl-env server and displays the following message:

When running a sasrl-env server, make sure to take note of the controller location (the blurred ip address and port seen after “Env controller started at:”), as you will need this later.
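Before training against a remote environment, it can help to confirm that the controller location is well formed and reachable. The sketch below uses only Python's standard library: it splits a `host:port` string and attempts a TCP connection. The function names are hypothetical, and a successful check from your own machine does not prove that the CAS server can reach the controller, since its network path and firewall rules may differ.

```python
import socket


def parse_controller_location(location):
    """Split a 'host:port' string such as '10.0.0.5:10200' into (host, port)."""
    host, _, port = location.rpartition(":")
    if not host or not port.isdigit():
        raise ValueError(f"expected 'host:port', got {location!r}")
    return host, int(port)


def can_reach(location, timeout=3.0):
    """Return True if a TCP connection to the controller location succeeds."""
    host, port = parse_controller_location(location)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable, or blocked by a firewall
        return False


# Replace with the location printed after "Env controller started at:"
controller_location = "0.0.0.0:10200"
print(parse_controller_location(controller_location))  # ('0.0.0.0', 10200)
```

Calling `can_reach(controller_location)` after starting the server gives a quick yes/no answer; a `False` usually points to a wrong address, a stopped server, or a firewall rule.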

Training/Scoring a Built-In Environment

By default, sasrl-env servers are preloaded with OpenAI Gym environments. Alongside CartPole, which we’ve already explored, nearly 50 other environments come with OpenAI Gym. To view the full list, install the gym package and run the following code:

Explore the OpenAI Gym Environments

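```shell
pip install gym
```

```python
# List the IDs of all registered open-source Gym environments
from gym import envs

# Newer gym releases expose the registry as a dict; older releases may need envs.registry.all()
envids = [spec.id for spec in envs.registry.values()]
for envid in sorted(envids):
    print(envid)
```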

With the sasrl-env server set up, you can train and score on the CartPole environment again, this time with rendering enabled. Run the code below to train and then score CartPole using your remote environment. Make sure to change 0.0.0.0:10200 to the environment controller location reported by startEnvironment.bat.

Train and Score a DQN with CartPole using sasrl-env

```python
# Replace with the controller location reported by your sasrl-env server
controller_location = "0.0.0.0:10200"

# First, train the model
sess.reinforcementLearn.rlTrainDqn(
    # Choose our environment: remote at controller_location, CartPole-v0.
    # We could render during training, but we choose not to, because rendering
    # slows training down significantly. render=False is the default; it is
    # shown here only for emphasis.
    environment=dict(type='remote', url=controller_location, name='CartPole-v0', render=False),
    # The remaining parameters are the same as earlier:
    # a Q-network with two fully connected hidden layers of 32 neurons each
    QModel=[32, 32],
    # The number of "runthroughs" (episodes) the algorithm trains on
    numEpisodes=151,
    # The optimizer used with the deep learning model
    optimizer=dict(method='ADAM', miniBatchSize=128),
    # The minimum and maximum number of records kept in the replay memory
    minReplayMemory=1000,
    maxReplayMemory=100000,
    # The exploration strategy: linear decay of epsilon from 1.0 to 0.05
    exploration=dict(type="linear", initialEpsilon=1.0, minEpsilon=0.05),
    # How often to update the target Q-model parameters
    targetUpdateInterval=100,
    # How often to test the DQN
    testInterval=50,
    # How many "runthroughs" are used each time the DQN is tested
    numTestEpisodes=10,
    # The table the DQN structure/weights are saved to once training is done
    modelOut=dict(name='dqn-CartPole-remote', replace=True),
)

# Then, score it
sess.reinforcementLearn.rlScore(
    # Use 1 CPU thread
    nThreads=1,
    # Render during scoring so we can watch the model interact with the environment
    environment=dict(type='remote', url=controller_location, name='CartPole-v0', render=True),
    # Use the model we just created
    model='dqn-CartPole-remote',
    # Run 5 "runthroughs" (episodes)
    numEpisodes=5,
    # Write the Q-values at each observation to our table
    writeQValues=True,
    # Create our table with the scoring data
    casout=dict(name='scoreTable', replace=True),
)
```

Custom Environments: The Tower of Hanoi

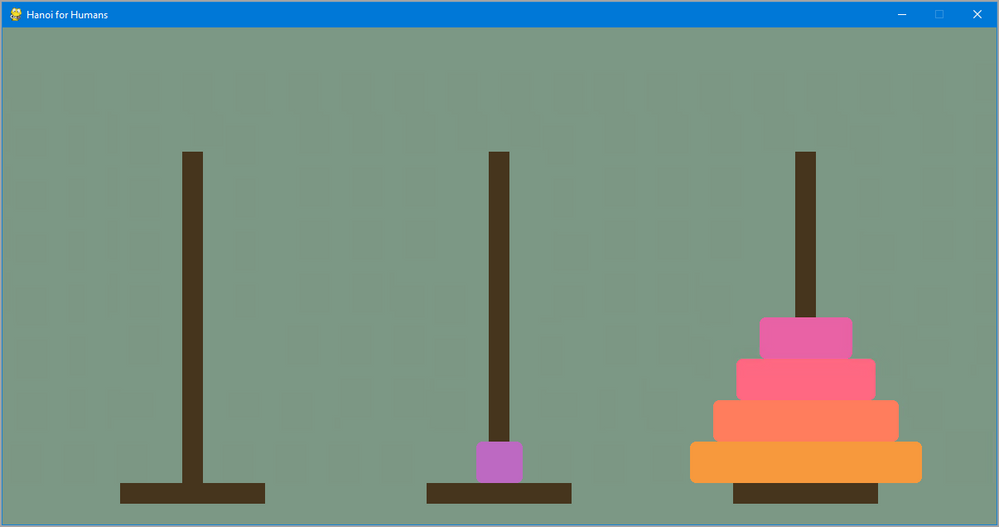

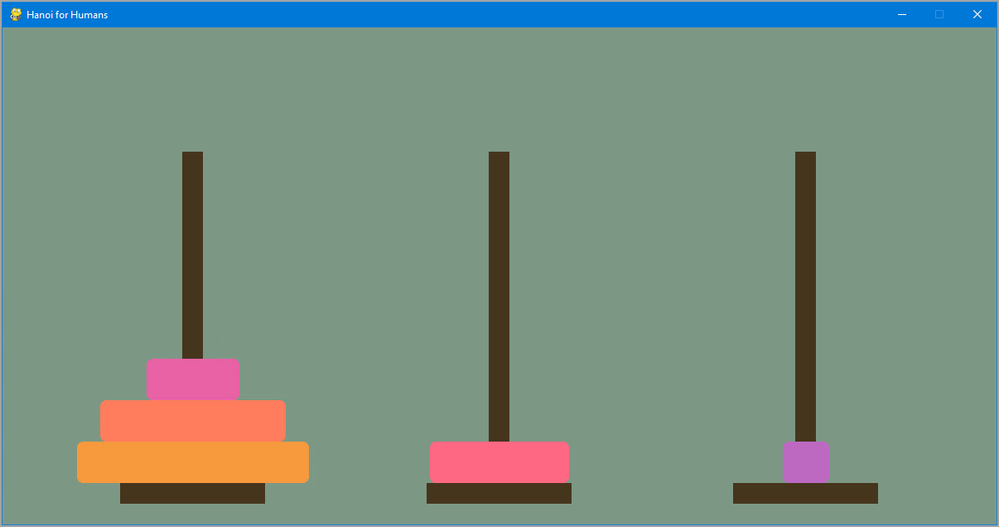

I have created a custom environment called The Tower of Hanoi, based on the classic mathematical puzzle of the same name. In this puzzle, there are three pegs and a number of rings of different sizes. The top ring on a peg can be moved to another peg as long as it is never placed on top of a smaller ring. Traditionally, the puzzle starts with all of the rings stacked on the leftmost peg, and the goal is to move the entire tower onto the rightmost peg. In my custom environment, though, the starting state is completely randomized, and the goal is to stack all the rings onto any one peg.
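Because the rings on any peg must decrease in size from the bottom up, every assignment of rings to pegs corresponds to exactly one legal state. A simple way to produce a randomized start, sketched below under that observation, is to pick a peg for each ring and stack the rings largest-first. The representation (each peg a list ordered bottom to top, larger integers meaning larger rings) and the function names are hypothetical, not necessarily what the author's environment uses.

```python
import random


def random_start(num_rings, num_pegs=3, rng=random):
    """Return a random legal Tower of Hanoi state.

    Each peg is a list ordered bottom to top; ring sizes are 1..num_rings,
    where a larger number means a larger ring.
    """
    pegs = [[] for _ in range(num_pegs)]
    # Place rings from largest to smallest so every peg stays sorted
    for size in range(num_rings, 0, -1):
        pegs[rng.randrange(num_pegs)].append(size)
    return pegs


def is_legal(pegs):
    """True if no ring sits on top of a smaller ring."""
    return all(peg[i] > peg[i + 1] for peg in pegs for i in range(len(peg) - 1))


state = random_start(num_rings=5, rng=random.Random(0))
print(state, is_legal(state))
```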

Exploring The Tower of Hanoi

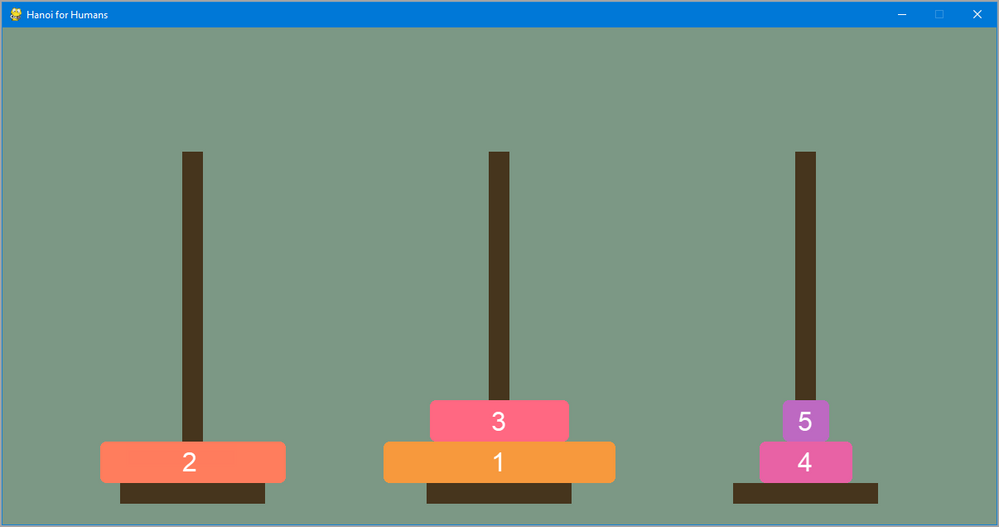

My custom environment is designed to comply with the OpenAI Gym standard, which allows reinforcement learning algorithms to interact with it predictably. Just as CartPole has two discrete actions, Hanoi has six discrete actions:

| Action | Movement |
| --- | --- |
| 0 | Move the top ring from peg 0 to peg 1 |
| 1 | Move the top ring from peg 0 to peg 2 |
| 2 | Move the top ring from peg 1 to peg 0 |
| 3 | Move the top ring from peg 1 to peg 2 |
| 4 | Move the top ring from peg 2 to peg 0 |
| 5 | Move the top ring from peg 2 to peg 1 |
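The action table maps naturally onto (source peg, destination peg) pairs. The sketch below encodes it together with the puzzle's legality rule: a move is valid only if the source peg is non-empty and its top ring is smaller than the destination's top ring (or the destination is empty). It assumes a hypothetical representation in which each peg is a list ordered bottom to top and larger integers mean larger rings; the function names are illustrative only.

```python
# Action number -> (source peg, destination peg), as in the table above
ACTIONS = {
    0: (0, 1),
    1: (0, 2),
    2: (1, 0),
    3: (1, 2),
    4: (2, 0),
    5: (2, 1),
}


def is_valid(pegs, action):
    """Valid if the source has a top ring smaller than the destination's top ring."""
    src, dst = ACTIONS[action]
    if not pegs[src]:
        return False
    return not pegs[dst] or pegs[src][-1] < pegs[dst][-1]


def apply_action(pegs, action):
    """Return (new_state, valid). Invalid moves leave the state unchanged."""
    if not is_valid(pegs, action):
        return pegs, False
    src, dst = ACTIONS[action]
    new_pegs = [list(peg) for peg in pegs]
    new_pegs[dst].append(new_pegs[src].pop())
    return new_pegs, True


# Pegs are lists ordered bottom to top; larger numbers are larger rings
state = [[3, 2], [], [1]]
print(is_valid(state, 1))      # False: the size-2 ring cannot go on the size-1 ring
print(apply_action(state, 0))  # ([[3], [2], [1]], True)
```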

Because there are six possible actions for the model to execute, the scoring table will contain six Q-value columns.

The reward system for The Tower of Hanoi is a bit more complex than CartPole's. CartPole's reward system is designed to maximize the time the pole remains upright, while the goal for Hanoi is to minimize the number of moves the algorithm needs to build a complete tower. To encourage this with rewards, there are two reward mechanisms, the invalid move checker and the reward gradient, both of which I describe below.

Although six actions are available at every time step, not all of them are valid in every state. For instance, in the state seen below, action 1 (move the top ring from peg 0 to peg 2) is not possible, because the top ring on peg 0 is larger than the top ring on peg 2. Whenever the model executes an invalid action, it receives a reward of -1. The model is initially unaware that invalid actions exist, but this negative reward trains it to avoid them.

There are also positive rewards that encourage building the tower. While building the tower, the agent's incremental goal is always to stack the next-largest ring atop the partially completed tower. For instance, in the state seen below, the incremental goal is to stack ring 2 on top of ring 1. Only after this goal is reached can you attempt to stack ring 3 on top of ring 2. To encourage this process, I designed a reward system in which the reward increases as these incremental goals are achieved.

This reward system takes the form of a reward gradient, where the reward increases as the model gets closer to achieving the goal. When the second-largest ring is stacked on the largest ring, a reward of +0.2 is given. When the three largest rings are stacked on top of each other, this doubles to +0.4. If the four largest rings are stacked on top of each other, it doubles again to +0.8. The generalized formula for the positive reward at a given state is $R = 0.2 \cdot 2^{n-1}$, where $n$ is the number of next-largest rings stacked on top of the largest ring, for $n \geq 1$.

There is also a final reward of +200 for successfully completing the tower on any peg. This value may seem large, but it ensures that the model does not exploit the recurring +0.8 reward it can collect at every time step from an almost-completed tower.
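Putting these rules together, here is a hypothetical Python version of the reward scheme. It assumes several details the post does not spell out: each peg is a list ordered bottom to top with larger integers meaning larger rings, an invalid move earns exactly -1 with no gradient reward, and the +200 completion reward replaces (rather than adds to) the gradient reward on the final step. The author's environment may differ on any of these points, and both function names are illustrative.

```python
def count_stacked(pegs, num_rings):
    """Number of next-largest rings stacked in order on the largest ring (n in the formula)."""
    for peg in pegs:
        # The largest ring can only ever sit at the bottom of a peg
        if peg and peg[0] == num_rings:
            n = 0
            for height in range(1, len(peg)):
                if peg[height] != num_rings - height:
                    break
                n += 1
            return n
    return 0


def hanoi_reward(pegs, num_rings, valid_move):
    """Hypothetical reward for the state reached after one move."""
    if not valid_move:
        return -1.0
    n = count_stacked(pegs, num_rings)
    if n == num_rings - 1:
        # Every ring is stacked in order on one peg: the puzzle is solved
        return 200.0
    if n >= 1:
        # Gradient reward R = 0.2 * 2^(n - 1)
        return 0.2 * 2 ** (n - 1)
    return 0.0


print(hanoi_reward([[5, 4], [1], [3, 2]], 5, True))      # 0.2
print(hanoi_reward([[5, 4, 3, 2], [1], []], 5, True))    # 0.8
print(hanoi_reward([[5, 4, 3, 2, 1], [], []], 5, True))  # 200.0
```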

Training/Scoring a Custom Environment

With the code below, you can train a DQN on the Hanoi environment, making sure to replace 0.0.0.0:10200 with the IP and port that the environment controller was started on.

Train a DQN with Hanoi using sasrl-env

```python
# Replace with the controller location reported by your sasrl-env server
controller_location = "0.0.0.0:10200"

sess.reinforcementLearn.rlTrainDqn(
    # Choose our environment: remote at controller_location, hanoi-v0
    environment=dict(type='remote', url=controller_location, name='hanoi-v0'),
    # The neural network used to estimate the Q-values:
    # two fully connected hidden layers of 32 neurons each
    QModel=[32, 32],
    # The number of "runthroughs" (episodes) the algorithm trains on
    numEpisodes=150,
    # The optimizer used with the deep learning model
    optimizer=dict(method='ADAM', miniBatchSize=128),
    # The minimum and maximum number of records kept in the replay memory
    minReplayMemory=1000,
    maxReplayMemory=100000,
    # The exploration strategy: linear decay of epsilon from 1.0 to 0.05
    exploration=dict(type="linear", initialEpsilon=1.0, minEpsilon=0.05),
    # How often to update the target Q-model parameters
    targetUpdateInterval=100,
    # How often to test the DQN
    testInterval=50,
    # How many "runthroughs" are used each time the DQN is tested
    numTestEpisodes=10,
    # The table the DQN structure/weights are saved to once training is done
    modelOut=dict(name='dqn-hanoi', replace=True),
)
```

Notice how this code uses a small number of episodes. We should score this model to see if it is trained enough to solve the puzzle efficiently. Using the code below, you will be able to score the model with rendering on, allowing you to see the model interact with the environment directly.

Score a DQN with Hanoi using sasrl-env

```python
# Replace with the controller location reported by your sasrl-env server
controller_location = "0.0.0.0:10200"

sess.reinforcementLearn.rlScore(
    # Use 1 CPU thread
    nThreads=1,
    # Use the same environment we trained on; note that render=True is set
    environment=dict(type='remote', url=controller_location, name='hanoi-v0', render=True),
    # Use the model we just created
    model='dqn-hanoi',
    # Run 5 "runthroughs" (episodes)
    numEpisodes=5,
    # Write the Q-values at each observation to our table
    writeQValues=True,
    # Create our table with the scoring data
    casout=dict(name='scoreTable', replace=True),
)
```

Based on the results returned by rlScore, you should be able to tell that your model has trouble solving the puzzle efficiently. Often, the environment appears to freeze, meaning that the model is repeatedly attempting the same illegal move. The model's ineffectiveness is caused by the small number of training episodes.
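The "freeze" has a simple mechanical explanation: an invalid move leaves the state unchanged, and a greedy model that sees the same state picks the same action again, so the episode loops. One way to spot this in the scoring output is to look for long runs of the same action within an episode (short repeats can be legitimate). The sketch below works on plain (episode, action) pairs shaped like the `_Episode_` and `_Action_` columns, in time-step order; the data and the function name are hypothetical.

```python
from itertools import groupby


def longest_action_run(rows):
    """Map each episode to the length of its longest run of identical consecutive actions.

    rows -- iterable of (episode, action) pairs in time-step order
    """
    longest = {}
    for episode, steps in groupby(rows, key=lambda row: row[0]):
        actions = [action for _, action in steps]
        longest[episode] = max(len(list(run)) for _, run in groupby(actions))
    return longest


# Hypothetical scoring output: episode 2 repeats action 1 over and over
rows = [(1, 0), (1, 3), (1, 4), (1, 3), (2, 5), (2, 1), (2, 1), (2, 1), (2, 1)]
print(longest_action_run(rows))  # {1: 1, 2: 4}
```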

I have created a model (dqn-hanoi-fully-trained) that was trained for 32,500 episodes. This many episodes increased the training time (to a little under an hour) but made the model very efficient. With both the model file and the model attributes file (dqn-hanoi-fully-trained.sashdat and dqn-hanoi-fully-trained-attr.sashdat) in the same folder as your CAS client (i.e., your Jupyter notebook), you can run the code below to load this model into CAS for scoring.

Load a Pretrained DQN for Hanoi into CAS

```python
# Upload the model table and its attributes table from the client to CAS
sess.upload_file("dqn-hanoi-fully-trained.sashdat", casout=dict(name='dqn-hanoi-fully-trained', replace=True))
sess.upload_file("dqn-hanoi-fully-trained-attr.sashdat", casout=dict(name='dqn-hanoi-fully-trained-attr', replace=True))

# Attach the stored attributes to the model table
sess.table.attribute(task='ADD', name='dqn-hanoi-fully-trained', attrtable='dqn-hanoi-fully-trained-attr')
```

Run the code below to score the model you just loaded.

Score an External DQN with Hanoi using sasrl-env

```python
sess.reinforcementLearn.rlScore(
    # Use 1 CPU thread
    nThreads=1,
    # Use the same environment we trained on, with rendering on
    environment=dict(type='remote', url=controller_location, name='hanoi-v0', render=True),
    # Use the pretrained model we just loaded
    model='dqn-hanoi-fully-trained',
    # Run 5 "runthroughs" (episodes)
    numEpisodes=5,
    # Write the Q-values at each observation to our table
    writeQValues=True,
    # Create our table with the scoring data
    casout=dict(name='scoreTable-fully-trained', replace=True),
)
```

When you score this model, you can see how efficiently it solves the puzzle. This shows how dramatically the number of training episodes (along with the other hyperparameters shown below) can change a model's effectiveness.
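One way to quantify "efficient" is the number of moves per episode, which can be counted from the `_Episode_` column of each scoring table, assuming one row per time step (as the "Q-values at each observation" option suggests). The sketch below works on plain Python lists with made-up values; fetching the real columns to the client (for example with SWAT's `CASTable.to_frame()`) is left out, and the function name is hypothetical.

```python
from collections import Counter
from statistics import mean


def moves_per_episode(episode_ids):
    """Count rows (assumed to be one per time step) for each episode ID."""
    return dict(Counter(episode_ids))


# Hypothetical _Episode_ columns from two scoring tables
undertrained = [1] * 90 + [2] * 120 + [3] * 75
fully_trained = [1] * 9 + [2] * 14 + [3] * 11

for name, column in [("undertrained", undertrained), ("fully trained", fully_trained)]:
    counts = moves_per_episode(column)
    print(name, counts, "mean:", round(mean(counts.values()), 1))
```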

In case you’d like to run or analyze the training parameters used to create the dqn-hanoi-fully-trained model, the code below was used to create the model. Feel free to run it yourself, but recognize it will take about an hour.

Train an Effective DQN with Hanoi using sasrl-env

```python
# Train our model with the tuned hyperparameters
sess.reinforcementLearn.rlTrainDqn(
    environment=dict(type='remote', url=controller_location, name='hanoi-v0'),
    optimizer=dict(method='ADAM', miniBatchSize=32),
    # The explorationFraction parameter makes epsilon drop from initialEpsilon to minEpsilon
    # over a fraction of the episodes. Here, epsilon drops from 0.9 to 0.05 over the first
    # 60% of episodes, then remains at 0.05 for the remaining 40% of episodes.
    exploration=dict(type="linear", initialEpsilon=0.9, minEpsilon=0.05, explorationFraction=0.6),
    numEpisodes=32500,
    # Discount factor applied to future rewards
    gamma=0.9,
    testInterval=10000,
    numTestEpisodes=50,
    targetUpdateInterval=128,
    minReplayMemory=1000,
    maxReplayMemory=100000,
    # promote=True makes the model table available to other CAS sessions
    modelOut=dict(name='dqn-hanoi-fully-trained-self-ran', promote=True),
    finalTargetCopy=True,
    # A larger network than before: two hidden layers of 64 neurons each
    QModel=[64, 64],
)
```
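The comments above describe a linear exploration schedule. The minimal sketch below mirrors that described behavior, assuming epsilon is updated per episode (SAS may decay it per time step instead; the post does not say): epsilon falls from `initialEpsilon` to `minEpsilon` over the first `explorationFraction` of the episodes and then stays at `minEpsilon`. It also shows the standard epsilon-greedy choice that epsilon controls. Both functions are hypothetical illustrations, not SAS internals.

```python
import random


def linear_epsilon(episode, num_episodes, initial=0.9, minimum=0.05, fraction=0.6):
    """Epsilon for a given episode under a linear decay schedule."""
    decay_episodes = fraction * num_episodes
    # Progress through the decay phase, capped at 1.0 once the phase is over
    progress = min(episode / decay_episodes, 1.0)
    return initial - (initial - minimum) * progress


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon explore (random action); otherwise exploit (best Q-value)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


for ep in (0, 9750, 19500, 30000):
    print(ep, round(linear_epsilon(ep, 32500), 3))
# 0 0.9
# 9750 0.475
# 19500 0.05
# 30000 0.05
```

Early on, nearly every action is random, which lets the model discover which moves are invalid; by the last 40% of training, the model mostly exploits what it has learned.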

```python
# Score immediately after training
sess.reinforcementLearn.rlScore(
    # Use 1 CPU thread
    nThreads=1,
    # Use the same environment we trained on, with rendering on
    environment=dict(type='remote', url=controller_location, name='hanoi-v0', render=True),
    # Use the model we just trained
    model='dqn-hanoi-fully-trained-self-ran',
    # Run 20 "runthroughs" (episodes)
    numEpisodes=20,
    # Write the Q-values at each observation to our table
    writeQValues=True,
    # Create our table with the scoring data
    casout=dict(name='scoreTable-fully-trained-self-ran', replace=True),
)
```

Conclusion

Reinforcement learning is an exciting field with many opportunities. Given ample training time, an algorithm can train a model to solve a unique problem. Just as we applied a Deep Q-Network to the Tower of Hanoi, the same kind of algorithm could be trained to automate robotic machinery or to generate personalized healthcare treatments. The beauty of reinforcement learning lies in its broad range of applications and its great potential, making it a valuable addition to the SAS Viya VDMML catalog.

Additional Resources

The Tower of Hanoi

Custom RL Environments

SAS Viya VDMML reinforcementLearn Action Set

Special Thanks

While creating this post, I was assisted by many colleagues, all of whom shared their incredible breadth of knowledge with me. These posts truly would not exist were it not for the following people:

- Jordan Bakerman

- Christa Cody

About the Author

Hello! I'm Michael Erickson, the author of this blog and the Summer 2022 Intern with the Global Enablement and Learning (GEL) team. I'm a rising sophomore at NC State University studying Computer Science.
