
Deep Q-Learning with Custom Reinforcement Learning Environments on SAS Viya VDMML


In my previous post, I covered some of the highlights of the reinforcementLearn action set provided with SAS Viya Visual Data Mining and Machine Learning. In this post, I will be doing a deep dive into working with custom environments using Deep Q-Learning. I will address the various hyperparameters used for rlTrainDQN, as well as some of the intricacies of custom RL environment creation. This deep dive will explore many of the external and open source tools necessary for working with custom RL environments, including sasrl-env and OpenAI Gym.

In this post, I address:

- Custom Environment Directory Design

- Hyperparameter Tuning with rlTrainDQN

- Escaping Infinite Episodes with Custom Environments

- Creating Reward Functions with Custom Environments

- Creating Observation and Action Spaces when Using sasrl-env

This is the second post in my series on Reinforcement Learning with Custom Environments on SAS Viya VDMML:

- Reinforcement Learning with Custom Environments on SAS Viya Visual Data Mining and Machine Learning

- Deep Q-Learning with Custom Reinforcement Learning Environments on SAS Viya Visual Data Mining and Machine Learning (this post)

Custom Environment Directory Design

For this post and exercise, I created a custom environment called The Tower of Hanoi. To explore this further, view my previous post on using custom environments. OpenAI Gym sets the standard for creating reinforcement learning environments by providing the gym.Env class. All custom RL environments should be a subclass of gym.Env and then made into a local Python package, which requires a very specific directory design.

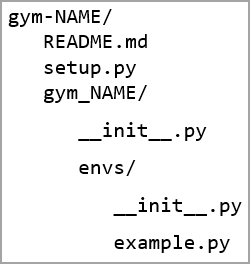

The entire Python package should be encapsulated inside an overarching folder called gym-NAME. Inside this folder, there should be a README.md file and a setup.py file, as well as a subfolder named gym_NAME. Inside gym_NAME, there should be an __init__.py file (two underscores on each side of “init”) and a subfolder named envs. Inside envs, there should be another __init__.py file and your custom environment file, given a unique name (example.py in the snippets below).
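The layout above can also be generated with a short script. This is a minimal sketch using only the Python standard library; scaffold_gym_package is a hypothetical helper, and the files it writes simply contain the placeholder snippets from this post, with an empty class body left for your environment code:

```python
from pathlib import Path


def scaffold_gym_package(root: Path, name: str, env_module: str, env_class: str) -> Path:
    """Create the gym-NAME / gym_NAME / envs layout (hypothetical helper).

    name, env_module, and env_class are placeholders chosen by the caller.
    """
    pkg_dir = root / f"gym-{name}"
    module_dir = pkg_dir / f"gym_{name}"
    envs_dir = module_dir / "envs"
    envs_dir.mkdir(parents=True, exist_ok=True)

    # Top level: README.md (may stay empty) and setup.py.
    (pkg_dir / "README.md").write_text("")
    (pkg_dir / "setup.py").write_text(
        "from setuptools import setup\n\n"
        f"setup(name='gym_{name}', version='0.0.1', install_requires=['gym'])\n"
    )
    # gym_NAME/__init__.py registers the environment with Gym.
    (module_dir / "__init__.py").write_text(
        "from gym.envs.registration import register\n\n"
        f"register(id='{env_module}-v0', entry_point='gym_{name}.envs:{env_class}')\n"
    )
    # gym_NAME/envs/__init__.py exposes the environment class.
    (envs_dir / "__init__.py").write_text(
        f"from gym_{name}.envs.{env_module} import {env_class}\n"
    )
    # gym_NAME/envs/<env_module>.py holds the environment itself.
    (envs_dir / f"{env_module}.py").write_text(
        "import gym\n\n\n"
        f"class {env_class}(gym.Env):\n"
        "    pass  # environment logic goes here\n"
    )
    return pkg_dir
```

For example, scaffold_gym_package(Path("."), "hanoi", "hanoi", "HanoiEnv") would create gym-hanoi/ in the current directory (the names are illustrative). The package can then be installed locally, for instance with pip install -e gym-hanoi.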


Inside gym-NAME, the README.md file can remain empty, but setup.py should contain the following code:

```python
from setuptools import setup

setup(name='gym_NAME', version='0.0.1', install_requires=['gym'])
```

The install_requires parameter should list any other packages required by example.py. For instance, my project uses both numpy and pygame, so I set:

```python
install_requires=['gym', 'numpy', 'pygame']
```

Inside gym-NAME/gym_NAME, __init__.py should include the following code:

```python
from gym.envs.registration import register

register(id='example-v0', entry_point='gym_NAME.envs:ExampleEnv')
```

The id parameter should be set to the same unique name as your custom environment, followed by “-v0” to denote its version. The entry_point parameter should be set to gym_NAME.envs:ExampleEnv, where ExampleEnv is the name of the environment class that you will create inside example.py.

Inside gym-NAME/gym_NAME/envs, __init__.py should include the following code:

```python
from gym_NAME.envs.example import ExampleEnv
```

Here, example is replaced by the unique name of your example.py file (without the .py extension), and ExampleEnv is the name of the environment class that you will create in example.py.

Inside example.py you should write the code for your custom environment. There are specific standards for creating a custom environment that can interact with Reinforcement Learning tools, including SAS's reinforcementLearn action set. The instructions for following the OpenAI Gym standards are described on their website.
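To make the required interface concrete, here is a minimal sketch of the reset()/step() contract that example.py implements, using a hypothetical toy task (move right along a line until reaching the goal). To keep it dependency-free it does not import gym; in a real package, ExampleEnv would subclass gym.Env and define observation_space and action_space. It follows the classic Gym API, where step() returns (observation, reward, done, info). Newer Gym releases (0.26 and later) and Gymnasium return (observation, info) from reset() and split done into terminated and truncated, so check which API your sasrl-env version expects.

```python
import random
from typing import Any, Dict, List, Optional, Tuple


class ExampleEnv:
    """Hypothetical toy environment illustrating the Gym-style interface.

    The agent starts at a random cell on a line 0..size-1 and must reach the
    right end. Action 0 moves left, action 1 moves right.
    """

    def __init__(self, size: int = 5, seed: Optional[int] = None):
        self.size = size
        self.rng = random.Random(seed)
        self.position = 0

    def reset(self) -> List[int]:
        # Start anywhere except the goal cell and return the first observation.
        self.position = self.rng.randrange(self.size - 1)
        return [self.position]

    def step(self, action: int) -> Tuple[List[int], float, bool, Dict[str, Any]]:
        # Apply the action, clamped to the ends of the line.
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.size - 1)
        done = self.position == self.size - 1  # terminal state: goal reached
        reward = 1.0 if done else 0.0
        return [self.position], reward, done, {}
```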

Hyperparameter Tuning

When using rlTrainDQN, there are many different hyperparameters that you can alter. Many of these relate to the complex mathematics behind RL, and it can be hard to predict how changing them will affect your model. I’ve broken a few of them down:

numEpisodes

numEpisodes determines the number of training episodes (“run-throughs”). Increasing the number of episodes gives the model more opportunity to learn, but also increases the time spent training.

testInterval and numTestEpisodes

testInterval determines how often the model is tested, while numTestEpisodes determines the number of episodes used in each test. During testing, the partially trained model is scored, allowing you to view the effectiveness of the model after a certain number of episodes. The model is scored m times every n training episodes, where n is the testInterval and m is the numTestEpisodes. Decreasing the testInterval results in more test output in exchange for more training time. Increasing the numTestEpisodes increases the accuracy of the test output, but also increases the training time.
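The relationship between these settings is simple arithmetic. This sketch assumes a test run happens after every testInterval-th training episode; the exact timing inside rlTrainDQN may differ. scoring_schedule is a hypothetical helper:

```python
def scoring_schedule(num_episodes: int, test_interval: int, num_test_episodes: int):
    """Return (training episodes after which a test runs, total scoring episodes).

    Assumes testing after every test_interval-th training episode.
    """
    checkpoints = list(range(test_interval, num_episodes + 1, test_interval))
    total_scored = len(checkpoints) * num_test_episodes
    return checkpoints, total_scored
```

For example, scoring_schedule(1000, 100, 5) gives 10 test runs and 50 scoring episodes; halving testInterval to 50 doubles that to 100 scoring episodes.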

gamma

Gamma (the discount factor) controls how future rewards are weighted relative to immediate rewards. It ranges from 0 to 1: values closer to 1 emphasize future reward, while values closer to 0 emphasize immediate reward. By default, this value is set to 0.99, meaning future reward is valued highly. This value can be decreased to fix a model that is sacrificing the present (in favor of the future) too much.
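Gamma enters through the discounted return, $G_t = \sum_{k \ge 0} \gamma^k r_{t+k}$. The sketch below (a hypothetical helper, not part of the reinforcementLearn action set) computes it for a short reward sequence, which shows how quickly a delayed reward fades as gamma drops:

```python
def discounted_return(rewards, gamma):
    """Sum of rewards where the reward k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Work backwards using G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total
```

With a single reward of 10 arriving three steps in the future, discounted_return([0, 0, 0, 10], 0.99) keeps about 9.70 of it, while gamma = 0.5 keeps only 1.25.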

exploration/minEpsilon/maxEpsilon

As the model goes through training, it uses an epsilon-greedy algorithm to determine whether to explore or exploit at any given timestep. When choosing an action during training, the algorithm chooses between a random action (exploration) and the action with the highest estimated Q-value (exploitation). Epsilon is the probability that exploration is chosen over exploitation.

The exploration parameter determines how epsilon changes over the course of training: either linearly or exponentially. When exploration is linear, you can choose the initialEpsilon and minEpsilon values. You can also choose the explorationFraction, the fraction of training episodes over which epsilon decreases from initialEpsilon to minEpsilon. By default, epsilon decreases from 0.99 (initialEpsilon) to 0.05 (minEpsilon) over all of the training episodes (explorationFraction=1.0).

In my implementation, I used linear exploration and noticed that many of my models would get stuck during scoring. To train my models to avoid getting stuck, I decreased my explorationFraction to 0.6. As a result, the model trained with a low (5%) chance of exploration for the last 40% of its training episodes, giving it the opportunity to teach itself how to get unstuck and dramatically improving the resulting model.
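A minimal sketch of linear epsilon decay and epsilon-greedy selection, following the description above. The parameter names mirror initialEpsilon, minEpsilon, and explorationFraction, but these helpers are illustrative, and measuring progress in episodes (rather than time steps) is an assumption about how rlTrainDQN counts:

```python
import random


def linear_epsilon(episode, num_episodes, initial_epsilon=0.99, min_epsilon=0.05,
                   exploration_fraction=1.0):
    """Epsilon for a training episode under linear decay.

    Epsilon falls linearly from initial_epsilon to min_epsilon over the first
    exploration_fraction * num_episodes episodes, then stays at min_epsilon.
    """
    decay_episodes = max(1, round(exploration_fraction * num_episodes))
    progress = min(episode / decay_episodes, 1.0)
    return initial_epsilon + progress * (min_epsilon - initial_epsilon)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit
```

With exploration_fraction=0.6 and 1,000 episodes, epsilon reaches 0.05 at episode 600 and stays there for the remaining 400 episodes, which matches the 0.6 setting described above.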

qModel

When training a DQN, a neural network is used to approximate the Q-values that a Q-table would otherwise store. The neural network’s input and output layers are fixed: the input layer depends on the environment’s observation space, and the output layer has one Q-value per possible action. The shape of the hidden layers, though, is fully customizable with the qModel hyperparameter.

When creating the QModel, all that is required is the number of neurons in each hidden layer. For example, a valid QModel can be created with the parameter [32, 32], which specifies two hidden layers of 32 neurons apiece. By default, both layers are fully connected, meaning each neuron in one layer has a connection to every neuron in the next. A layer can also be convolutional, where each neuron is connected to only a small, local group of neurons in the previous layer. Implementing a convolutional layer can be much more difficult, and it often needs to be tailored to your environment.

Seen below is an example neural network with two fully connected hidden layers, each composed of four neurons. This can be accomplished by setting the qModel hyperparameter to [4, 4]. In practice, layers that small are likely to be ineffective.

When creating a QModel, it is often best to use the default option and create fully connected layers, due to their adaptability. Be aware, though, that they are often not very efficient. As well, increasing the number of neurons per layer (as well as the number of layers) can potentially increase the effectiveness of the model, but often in exchange for a longer training time. Oftentimes, layers of sizes 16, 32, or 64 are used, depending on the difficulty of the problem being solved.
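Because the input and output layers are fixed by the environment, the hidden-layer sizes in qModel are what drive the network's size. This sketch counts weights and biases for a fully connected network, assuming every layer has a bias term; the example sizes (5 inputs for a five-ring Tower of Hanoi observation, 6 outputs for every ordered pair of distinct pegs) are illustrative assumptions:

```python
def dense_param_count(n_inputs, hidden_layers, n_outputs):
    """Number of weights plus biases in a fully connected network."""
    sizes = [n_inputs, *hidden_layers, n_outputs]
    # Each layer has fan_in * fan_out weights and fan_out biases.
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))
```

Under those assumptions, [4, 4] gives 74 parameters, [32, 32] gives 1,446, and [64, 64] gives 4,934, which is one reason larger layers take longer to train.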

minReplayMemory/maxReplayMemory/miniBatchSize

Deep Q-Networks use the concept of experience replay to train. While the model is training, it records its experiences in a replay memory. Once the replay memory holds a certain number of experiences, they are sampled in minibatches and used to train the model. Sampling experiences at random breaks up the correlations between consecutive observations, which would otherwise make training unstable.

When creating a replay memory, there are lower and upper bounds to how many memories can be stored. Training using experience replay only starts after minReplayMemory experiences are stored in the replay memory. As well, the replay memory will begin pushing out old memories once there are maxReplayMemory experiences stored in the replay memory.

The optimizer is responsible for updating the underlying neural network for the DQN. SAS offers two optimizers: ADAM and SGD. The optimizer field has many parameters, but these are best left to their defaults most of the time. The only hyperparameter contained inside the optimizer field that should be considered is the miniBatchSize. When using experience replay, minibatches are the small samples of experiences that are used for training the model at each time step. The miniBatchSize determines how many experiences are sampled for each minibatch.

The minReplayMemory and maxReplayMemory should be tuned to the number of training episodes and observations per episode for your environment. If you are training with a small number of episodes, reducing your minReplayMemory to ensure there is ample training time is important. Increasing the miniBatchSize can increase the amount of training data the optimizer is exposed to, while also increasing the training time.
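A minimal replay memory illustrating minReplayMemory, maxReplayMemory, and miniBatchSize. This is an illustrative sketch, not SAS's implementation; ReplayMemory and its methods are hypothetical names, and uniform sampling is assumed:

```python
import random
from collections import deque


class ReplayMemory:
    """Minimal experience replay buffer (illustrative only).

    Training may start once min_size experiences are stored; once max_size
    is reached, each new experience pushes out the oldest one.
    """

    def __init__(self, min_size, max_size, seed=None):
        self.min_size = min_size
        self.buffer = deque(maxlen=max_size)  # deque drops the oldest item when full
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def ready(self):
        return len(self.buffer) >= self.min_size

    def sample(self, mini_batch_size):
        # Uniform sampling without replacement across the whole memory.
        return self.rng.sample(list(self.buffer), mini_batch_size)
```

A training loop would push every transition and only start sampling minibatches once ready() returns True.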

targetUpdateInterval

When using experience replay, the DQN actually uses two neural networks: one that is actively being trained and a second copy deemed the target network. The training network is updated after every minibatch, so if it were also used to compute its own training targets, those targets would keep shifting and training could become unstable. Instead, the target network computes the target Q-values used in the loss function for each sampled experience, and the training network is updated to reduce that loss. Because the target network is held fixed between updates, the two networks drift apart, and they cannot be allowed to stray too far from each other. The target network is therefore updated to match the training network every few training iterations, as determined by the targetUpdateInterval.

This process is the most complicated concept related to DQN that I will address in this post. Understanding what is going on in the background is not necessary to develop a strong DQN model, but it is important to recognize how altering the targetUpdateInterval can impact your model. By increasing this value, the training time can be improved, but model quality can be harmed.
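The role of the target network can be sketched in a few lines: it supplies the bootstrapped part of each training target, $y = r + \gamma \max_{a'} Q_{\text{target}}(s', a')$ (just $r$ at a terminal state), and is refreshed from the training network every targetUpdateInterval steps. td_targets and maybe_sync are hypothetical helpers, and the weights are plain Python objects standing in for real network parameters:

```python
import copy


def td_targets(batch, target_q, gamma):
    """Q-learning targets computed with the (frozen) target network.

    batch: list of (state, action, reward, next_state, done) tuples.
    target_q: function mapping a state to a list of Q-values, one per action.
    """
    targets = []
    for _state, _action, reward, next_state, done in batch:
        # No bootstrapping past a terminal state.
        future = 0.0 if done else gamma * max(target_q(next_state))
        targets.append(reward + future)
    return targets


def maybe_sync(step, target_update_interval, online_weights, target_weights):
    """Return a copy of the online weights every target_update_interval steps,
    otherwise keep the current target weights."""
    if step % target_update_interval == 0:
        return copy.deepcopy(online_weights)
    return target_weights
```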

Escaping Infinite Episodes

In my custom environment, the goal was to minimize the amount of time it takes to complete a certain task. At first, there was no upper bound for the length of time for one episode to complete. Having no upper time bound meant there was the opportunity for a given episode to continue infinitely without ever reaching the terminal state.

Using epsilon-greedy exploration during training does ensure that, given enough time, the model should eventually reach the terminal state via random actions. For more complicated problems, though, relying on random actions to reach the terminal state can be incredibly inefficient. Just as the infinite monkey theorem states that a monkey randomly hitting keys on a typewriter will eventually type Hamlet, an under-trained RL model is bound to eventually reach the terminal state. That does not mean it will get there in a reasonable amount of time.

To ensure that training is efficient and infinite episodes are not possible, escapes can be implemented in a custom environment to limit the length of an episode. The maximum number of steps in an episode (where one step is one observation plus one action) can be limited with the max_episode_steps parameter. Gym's registration also accepts a reward_threshold parameter, which records the reward at which the task is considered solved; note that Gym does not end an episode when that threshold is reached, so max_episode_steps is the parameter that actually caps episode length.

For my custom environment, I implemented the max_episode_steps parameter, which allowed me to limit my training time while still ensuring the model has ample time to explore. When choosing the max_episode_steps, it is important to keep in mind the minimum number of steps that are needed in order to reach the terminal state in the worst-case scenario. For my environment, a perfectly trained model needs, at maximum, 15 steps to reach the terminal state. As a result, I set my max_episode_steps value to 100 steps, which gives the model plenty of time to explore.

Of all the parameters and hyperparameters used in RL, max_episode_steps has the most significant impact on training time. In my experience, doubling max_episode_steps doubles the training time. Be wary when setting this value, as it is very possible to experience diminishing returns when increasing it.
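Under the hood, a step limit is just a counter around the environment. This plain-Python sketch is a stand-in for the wrapper Gym applies when max_episode_steps is registered (StepLimit and the truncated info key are hypothetical names), using the classic four-value step() API:

```python
class StepLimit:
    """End an episode after max_episode_steps steps (illustrative stand-in)."""

    def __init__(self, env, max_episode_steps):
        self.env = env
        self.max_episode_steps = max_episode_steps
        self.elapsed = 0

    def reset(self):
        self.elapsed = 0  # restart the step counter for each episode
        return self.env.reset()

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        self.elapsed += 1
        if self.elapsed >= self.max_episode_steps and not done:
            # Flag the cut-off so it can be told apart from a real terminal state.
            info = dict(info, truncated=True)
            done = True
        return obs, reward, done, info
```

Flagging the cut-off separately is useful because an episode that ran out of steps did not actually reach a terminal state.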

To implement max_episode_steps (or reward_threshold), navigate to your custom environment directory and edit gym-NAME/gym_NAME/__init__.py. Inside the register() statement for your custom environment, add max_episode_steps and/or reward_threshold as follows:
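A sketch of the edited register() call, reusing the placeholder names from earlier in this post and the 100-step limit chosen above; the reward_threshold line is left commented out because its value depends on your environment:

```python
from gym.envs.registration import register

register(
    id='example-v0',
    entry_point='gym_NAME.envs:ExampleEnv',
    max_episode_steps=100,  # end each episode after at most 100 steps
    # reward_threshold=...,  # optional; choose a value suited to your environment
)
```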

After reinstalling your local custom environment package, your new escape parameters should take effect.

Choosing a Reward System

Choosing rewards is an integral part of custom environment creation. You want to encourage the RL model to head towards the solution while punishing actions that have a negative effect. As well, it is very common to implement a “grand prize” reward to be rewarded when a positive terminal state is reached.

It can be beneficial to implement a gradient of reward, where more reward is given the closer you are to a desired terminal state. This ensures that the model earns an instantaneous reward for moving towards the solution, as well as the “grand prize” for reaching the solution.

As showcased in my previous post, I created a custom environment called The Tower of Hanoi, based on the classic mathematical puzzle of the same name. In this puzzle, there are three pegs and several rings of varying sizes. The top ring on a given peg can be moved to another peg as long as it is not placed on top of a smaller ring. Traditionally, the puzzle starts with all of the rings stacked on the leftmost peg, and the goal is to move the fully stacked tower onto the rightmost peg. In my custom environment, though, the starting state is completely randomized, and the goal is to stack all the rings onto any one peg.
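The puzzle's rules can be sketched in plain Python. This sketch assumes a few things the post does not specify: the state is stored as one peg index per ring (ring 0 is the largest), an action is one of the six ordered (from_peg, to_peg) pairs, and an illegal move is simply reported by returning None. ACTIONS, top_ring, apply_move, and random_start are hypothetical names:

```python
import random
from itertools import permutations

# Hypothetical action encoding: every ordered (from_peg, to_peg) pair of 3 pegs.
ACTIONS = list(permutations(range(3), 2))  # (0, 1), (0, 2), (1, 0), ...: 6 moves


def top_ring(ring_pegs, peg):
    """Index of the smallest ring on `peg`, or None if the peg is empty.

    ring_pegs[i] is the peg holding ring i; ring 0 is the largest ring,
    so a higher index means a smaller ring.
    """
    on_peg = [ring for ring, p in enumerate(ring_pegs) if p == peg]
    return max(on_peg) if on_peg else None


def apply_move(ring_pegs, action):
    """Return the state after `action`, or None if the move is illegal."""
    src, dst = ACTIONS[action]
    moving = top_ring(ring_pegs, src)
    if moving is None:
        return None  # nothing to move from an empty peg
    target = top_ring(ring_pegs, dst)
    if target is not None and target > moving:
        return None  # the top ring on dst is smaller than the moving ring
    new_state = list(ring_pegs)
    new_state[moving] = dst
    return new_state


def random_start(n_rings, rng=random):
    """Randomized start: any peg assignment corresponds to exactly one legal
    state, since the rings on each peg are always stacked largest-first."""
    return [rng.randrange(3) for _ in range(n_rings)]
```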

When creating this custom environment, I implemented three types of reward: one negative, one gradient, and one “grand prize”.

When building the tower, the incremental goal the actor is always acting towards is stacking the ring with the next-largest size atop the partially completed tower. For instance, in the state seen below, the incremental goal is to stack ring 2 on top of ring 1. Only after this goal is reached can you attempt to stack ring 3 on top of ring 2. My positive reward gradient increases as these incremental goals are achieved.

When the second-largest ring is stacked on the largest ring, a reward of +0.2 is given out. When the three largest rings are stacked on top of each other, this is doubled to +0.4. When the four largest rings are stacked on top of each other, this is doubled again to +0.8. The generalized formula for the positive reward at a given state is $r(n) = 0.2 \cdot 2^{n-1}$, where $n$ is the number of next-largest rings stacked on top of the largest ring, for $n > 0$.

My “grand prize” reward of +200 is given for the successful completion of the tower on any given peg. This value may seem large, but this is put in place to ensure that the model does not exploit the continuous reward of +0.8 each timestep for an almost-completed tower.
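The positive rewards above can be sketched as a function of a hypothetical state encoding (one peg index per ring, ring 0 the largest). Two further assumptions: the negative reward is left out because its details are not given here, and the +200 grand prize replaces the gradient reward rather than adding to it. stacked_count and positive_reward are hypothetical names:

```python
def stacked_count(ring_pegs):
    """n: how many next-largest rings sit on the largest ring's peg, in order.

    ring_pegs[i] is the peg holding ring i, with ring 0 the largest. Rings on
    a peg are always sorted, so if rings 1..k all share ring 0's peg, they
    form an unbroken stack on top of ring 0.
    """
    base = ring_pegs[0]
    n = 0
    for peg in ring_pegs[1:]:
        if peg != base:
            break
        n += 1
    return n


def positive_reward(ring_pegs, grand_prize=200.0):
    """Gradient reward 0.2 * 2**(n - 1) for n > 0, or the grand prize when complete."""
    n = stacked_count(ring_pegs)
    if n == len(ring_pegs) - 1:
        return grand_prize  # the full tower is on one peg
    return 0.2 * 2 ** (n - 1) if n > 0 else 0.0
```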

Creating your Observation and Action Spaces for sasrl-env

When creating a custom environment, you must create an observation space and an action space. OpenAI Gym provides the Space superclass to make this simple, with six predefined space types. If you are creating a custom environment server using sasrl-env, though, only four spaces are available (as of sasrl-env version 1.1.0): Box, Discrete, Dict, and Tuple. The two unavailable spaces, MultiDiscrete and MultiBinary, can be mimicked using the Box space type.

The MultiDiscrete space type can be used for a space with multiple elements that are best represented as discrete integer values rather than continuous ranges. For my Tower of Hanoi custom environment, my observation space was best described as a MultiDiscrete space: each ring must be on one of three pegs. With five rings, for instance, the state can be represented as a MultiDiscrete space of size five where each value is 0, 1, or 2.
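A minimal sketch of that MultiDiscrete-style encoding, assuming the pegs are stored as three lists of ring indices (0 is the largest ring, listed bottom first); encode and decode are hypothetical helper names:

```python
def encode(pegs, n_rings):
    """Turn three stacks of ring indices into one peg index per ring.

    pegs: list of 3 lists of ring indices (0 = largest ring).
    Returns a list of length n_rings with values in {0, 1, 2}.
    """
    obs = [None] * n_rings
    for peg_index, stack in enumerate(pegs):
        for ring in stack:
            obs[ring] = peg_index
    if None in obs:
        raise ValueError("every ring must be on exactly one peg")
    return obs


def decode(obs, n_pegs=3):
    """Inverse of encode: rebuild each peg's stack, largest ring at the bottom."""
    pegs = [[] for _ in range(n_pegs)]
    for ring, peg in enumerate(obs):  # ring 0 first, so larger rings go in first
        pegs[peg].append(ring)
    return pegs
```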

A MultiBinary space is very similar to MultiDiscrete, but the discrete value for each element must be either 0 or 1.

To mimic a MultiDiscrete or MultiBinary space, a Box space can be used. To restrict the values to integers, set the dtype parameter to int, and then use the low and high parameters to bound your discrete values:

```python
Box(low=a, high=b, dtype=int, shape=(SIZE, ))
```

This implementation of the Box type creates a one-dimensional space containing SIZE elements, where each element can be any integer from a to b (in Gym's Box, both low and high are inclusive bounds). For the five-ring Tower of Hanoi observation, for instance, this would be Box(low=0, high=2, dtype=int, shape=(5, )). To mimic the MultiBinary space, then, implement the Box type like so:

```python
Box(low=0, high=1, dtype=int, shape=(SIZE, ))
```

Additional Resources

OpenAI Gym

- OpenAI Gym Documentation

- OpenAI Gym | Environment Creation

- OpenAI Gym | Spaces

- Getting Started With OpenAI Gym

Custom RL Environments

- SAS Tutorial | Reinforcement Learning with Christa

- Reinforcement Learning Tips and Tricks — Stable Baselines3

- pygame

Deep Q-Learning

- Playing Atari with Deep Reinforcement Learning

- SAS VDMML: Reinforcement Learning Programming Guide | Deep Q-Learning

- Replay Memory Explained

SAS Viya VDMML reinforcementLearn Action Set

Special Thanks

While creating my posts, I was assisted by many colleagues, all of whom provided me with their incredible breadth of knowledge. These posts truly would not exist if it were not for the following people:

- Jordan Bakerman

- Christa Cody

- Reza Nazari

- Alex Phelps

About the Author

Hello! I'm Michael Erickson, the author of this blog and the Summer 2022 Intern with the Global Enablement and Learning (GEL) team. I'm a rising sophomore at NC State University studying Computer Science.
