Kubernetes Primer for SAS Viya: Storage for CAS


Running SAS Viya in Kubernetes requires a major shift in how we approach software deployment planning. Storage, whether local disk, network file systems, or other media, is available for use in k8s, but we must decide which to use and how to configure SAS Viya to find it. In this post, we'll look at the storage that SAS Cloud Analytic Services (CAS) expects to find in k8s, and how it is configured.

Directories CAS expects to use

Let's consider an MPP deployment of SAS Cloud Analytic Services with one controller and multiple workers. In many cases, the same set of directories is defined on every CAS node, but how each directory is actually used varies with the node's role. Further, some of those directories are meant to be local to the host machine (or k8s node), whereas others should be shared across multiple hosts. Which is which? The documentation is your friend here, because the directory names themselves provide little help in understanding their architectural requirements.

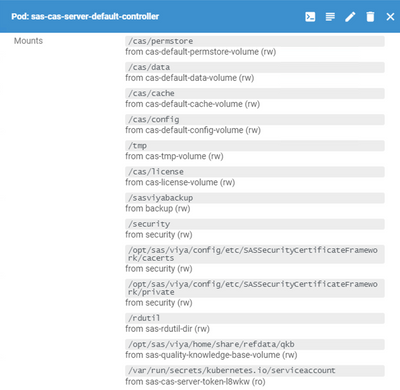

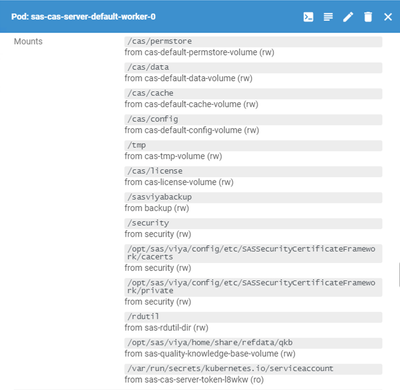

Let's fire up the Lens Kubernetes IDE and take a quick look at the mounts defined for CAS in a Viya 2020.1.2 deployment:


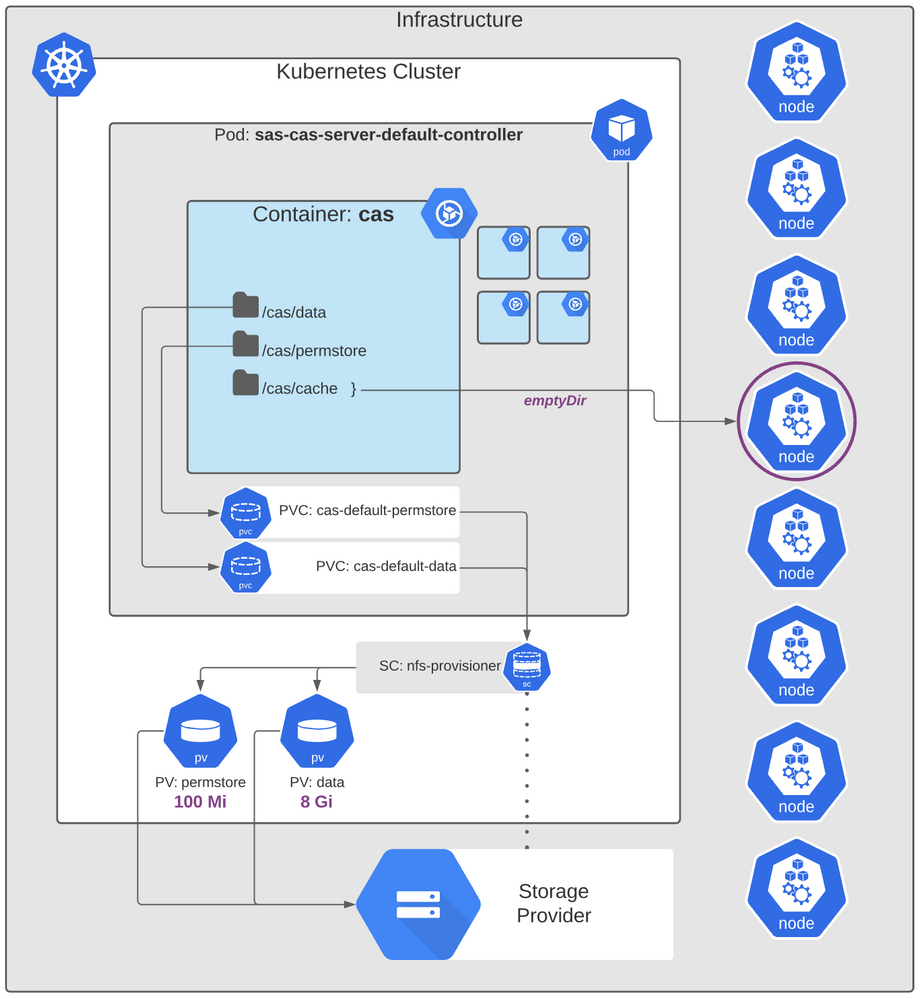

Basically, the CAS controller and CAS workers define the same directory paths… but they don't use them the same way.

Now it's critical to understand that these mount locations are inside the CAS containers. You won't see them directly on any k8s node. Even so, those mount locations ultimately resolve to something real and physical… so let's track 'em down.

For this article, we will focus on the first 3 in the list:

- /cas/permstore: permissions and other metadata about caslibs and tables
- /cas/data: the default location for new caslibs
- /cas/cache: backing store for in-memory data and replicated blocks

site.yaml

I prefer to use Lens when I can, but for some things, it's helpful to go to the source: your SAS Viya site.yaml. You'll recall that the site.yaml file is the manifest which describes how the SAS Viya components are deployed into k8s. And it's not trivial. In my deployment, my site.yaml has over 1,700 definitions in it. And with the indentation-heavy syntax, it can be tricky to figure out exactly which piece is where.

Looking at the mounts listed in Lens above, you can see that each directory is also referenced by a name listed under "from". So let's start by seeing where /cas/permstore, referred to as "cas-default-permstore-volume", takes us. The answer is in the "default" CAS server's CASDeployment custom resource (an instance of the CASDeployment custom resource definition, or CRD). I can't show the entire 400+ line definition here, but here's the header:

```yaml
---
apiVersion: viya.sas.com/v1alpha1
kind: CASDeployment
metadata:
  annotations:
    sas.com/component-name: sas-cas-operator
    sas.com/component-version: 2.6.13-20201113.1605280128827
    sas.com/config-init-mode: initcontainer
    sas.com/sas-access-config: "true"
    sas.com/version: 2.6.13
  labels:
    app: sas-cas-operator
    app.kubernetes.io/instance: default
    app.kubernetes.io/managed-by: sas-cas-operator
    app.kubernetes.io/name: cas
    sas.com/admin: namespace
    sas.com/backup-role: provider
    sas.com/cas-server-default: "true"
    sas.com/deployment: sas-viya
    workload.sas.com/class: cas
  name: default
  namespace: gelenv
```

... and so on.

Further down in this CASDeployment definition, we find the information we're looking for: the directory paths that CAS references inside its containers.

VolumeMounts

We need to find where each mount directory and its name are associated in site.yaml. A quick search of the file shows two hits for "cas-default-permstore-volume". The first hit is in the "default" CAS server's CASDeployment definition, in the CAS container's entry at path spec.controllerTemplate.spec.containers[].volumeMounts. Here's a snippet:

```yaml
volumeMounts:
- mountPath: /cas/permstore
  name: cas-default-permstore-volume
- mountPath: /cas/data
  name: cas-default-data-volume
- mountPath: /cas/cache
  name: cas-default-cache-volume
```

... and so on.

Great, so we've found what Lens showed us above. Let's follow the breadcrumbs by mapping the names of these volume mounts in the container to the k8s objects.

Volumes

Remember I said above that there were two hits on "cas-default-permstore-volume" in the site.yaml file? Well, the second one tells us which PVC provides storage to that mounted volume in the CAS container. That PVC reference is also in the "default" CAS server's CASDeployment definition, at path spec.controllerTemplate.spec.volumes. Here's that snippet:

```yaml
volumes:
- name: cas-default-permstore-volume
  persistentVolumeClaim:
    claimName: cas-default-permstore
- name: cas-default-data-volume
  persistentVolumeClaim:
    claimName: cas-default-data
- emptyDir: {}
  name: cas-default-cache-volume
```

... and so on.

Now, this is where things start to splinter a bit. In this post, we're just following three of the many volumes which CAS relies on. And here you can see that two of the volumes rely on PersistentVolumeClaims to satisfy their storage, but the "cas-default-cache-volume" refers to an emptyDir.
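The name-based join we just walked through by hand (mountPath to volume name, volume name to PVC or emptyDir) can be sketched in a few lines of Python. This is a minimal illustration, assuming the relevant parts of site.yaml have already been parsed into plain dictionaries (the parsing step is not shown); `resolve_mounts` is a hypothetical helper, not part of any SAS or Kubernetes tooling.

```python
# Sketch only: the fragments below are hand-copied from the site.yaml snippets
# above and written as Python dicts, as if the manifest had already been parsed.
volume_mounts = [
    {"mountPath": "/cas/permstore", "name": "cas-default-permstore-volume"},
    {"mountPath": "/cas/data", "name": "cas-default-data-volume"},
    {"mountPath": "/cas/cache", "name": "cas-default-cache-volume"},
]

volumes = [
    {"name": "cas-default-permstore-volume",
     "persistentVolumeClaim": {"claimName": "cas-default-permstore"}},
    {"name": "cas-default-data-volume",
     "persistentVolumeClaim": {"claimName": "cas-default-data"}},
    {"name": "cas-default-cache-volume", "emptyDir": {}},
]


def resolve_mounts(volume_mounts, volumes):
    """Map each container mountPath to a short description of its backing storage."""
    volumes_by_name = {v["name"]: v for v in volumes}  # the join key is the volume name
    resolved = {}
    for mount in volume_mounts:
        volume = volumes_by_name.get(mount["name"])
        if volume is None:
            backing = "undefined volume"
        elif "persistentVolumeClaim" in volume:
            backing = "PVC " + volume["persistentVolumeClaim"]["claimName"]
        elif "emptyDir" in volume:
            backing = "emptyDir"
        else:
            backing = "other volume type"  # e.g. configMap, secret, hostPath
        resolved[mount["mountPath"]] = backing
    return resolved


for path, backing in resolve_mounts(volume_mounts, volumes).items():
    print(f"{path:<16} -> {backing}")
```

Run as-is, it prints one line per mount: /cas/permstore and /cas/data backed by their PVCs, and /cas/cache backed by an emptyDir.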

Not surprisingly, different volumes (directories) are used for different things. And the storage for each can be optimized to meet those different needs. From this point, we will first run down the PVC and then the emptyDir to discover what they mean.

PersistentVolumeClaim

A PersistentVolumeClaim (or simply PVC) is a k8s abstraction intended to simplify a pod's request for storage. The claim specifies a few simple attributes, like size and access mode, and then k8s figures out which storage solution is the right match (and whether one is available).

Sticking with the "cas-default-permstore-volume", we see that it's mapped to the "cas-default-permstore" PVC. Where does that PVC go? PVCs are defined elsewhere in the site.yaml (not in the CASDeployment definition). And a quick search finds:

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    sas.com/component-name: sas-cas-operator
    sas.com/component-version: 2.6.13-20201113.1605280128827
    sas.com/version: 2.6.13
  labels:
    app.kubernetes.io/part-of: cas
    sas.com/admin: cluster-local
    sas.com/backup-role: provider
    sas.com/cas-instance: default
    sas.com/cas-pvc: permstore
    sas.com/deployment: sas-viya
  name: cas-default-permstore
  namespace: gelenv
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
```

Notice how this PVC requests 100 Mi (that is, mebibytes) of space with a ReadWriteMany access mode (RWX). The CAS permissions store is used by the CAS controller(s), and of course it needs to both read from and write to that space. Furthermore, if you're running a Secondary CAS Controller to improve availability, then we need a volume that can be mounted read-write by pods on more than one node (i.e. many) at a time.
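Kubernetes storage requests use binary suffixes (Ki, Mi, Gi, Ti are powers of 1024) as well as decimal ones (k, M, G, T are powers of 1000), so 100Mi is 104,857,600 bytes rather than 100,000,000. The hypothetical `quantity_to_bytes` helper below is a minimal sketch of that conversion; it deliberately handles only integer values with those suffixes, not the full Kubernetes quantity grammar (decimals, exponents, milli units).

```python
import re

# Binary (power-of-two) and decimal suffixes used in Kubernetes storage quantities.
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "": 1,
}


def quantity_to_bytes(quantity: str) -> int:
    """Convert a simple storage quantity such as '100Mi' or '8Gi' to bytes.

    Only an integer followed by one of the suffixes above (or no suffix) is accepted.
    """
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * _SUFFIXES[suffix or ""]


print(quantity_to_bytes("100Mi"))  # 104857600 (the permstore PVC)
print(quantity_to_bytes("8Gi"))    # 8589934592 (the data PVC)
```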

A similar PVC is defined for "cas-default-data", requesting 8 Gi with RWX access. Notably, the CAS data directory is actively used by the CAS workers, too. This is about as far as site.yaml can take us. From here, k8s will create a PersistentVolume, usually with a generated name. To see what's happening live in the environment, we'll return to Lens.

PersistentVolume

A PersistentVolume (or PV) is a resource in the k8s environment. A PVC, on the other hand, is a request to use that resource. What makes PVs useful is that their lifespan is independent of any pods that use them. So if you have data which you need to persist across pods, then PVs are handy.

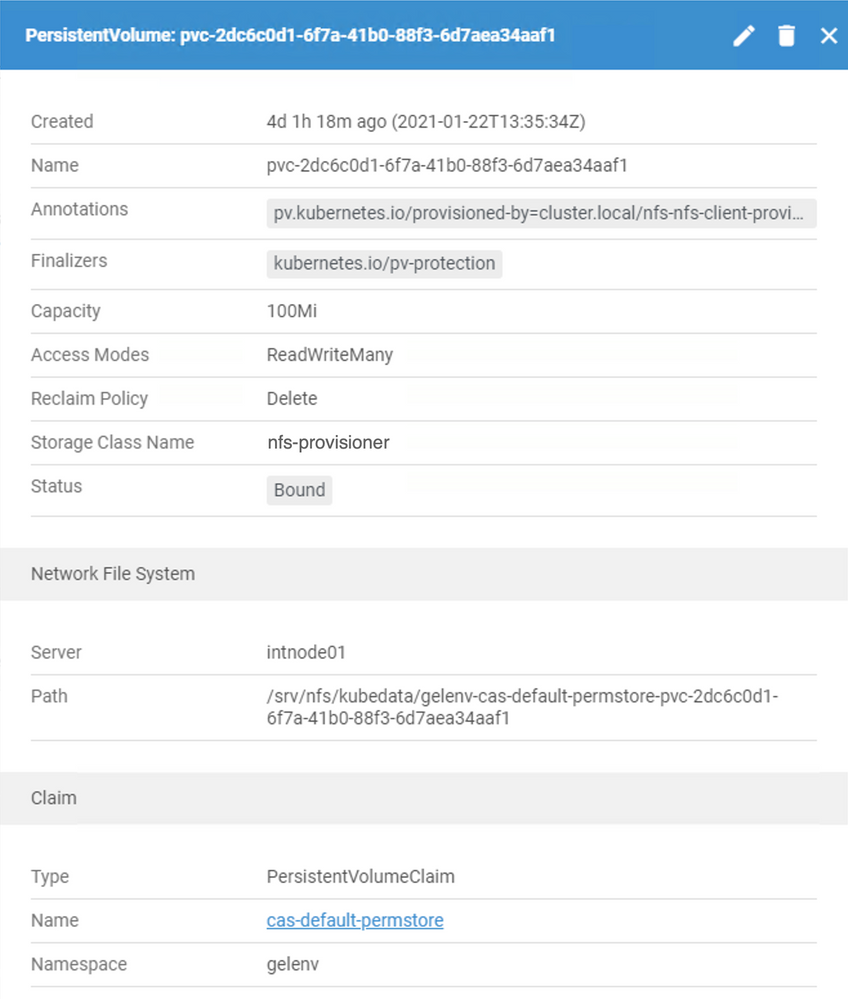

For the CAS permstore, we can see that k8s in my environment has given this PV a long, unwieldy, generated name (shown in Lens above).

PVs are provisioned in a couple of ways: manually by an administrator, or dynamically on demand using a StorageClass. Here Lens is telling us this PV is provisioned by the "nfs-provisioner" StorageClass, yet the PVC definition above doesn't mention a StorageClass at all. That's because my k8s environment has "nfs-provisioner" defined as the default StorageClass, so that's what the PVCs end up using (unless they specify something else). Depending on your infrastructure provider, your StorageClass will likely be something different. And of course, your site can configure its own default and go further to specify a StorageClass for each PVC individually, if needed.
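The fallback rule just described can be sketched in Python. In a live cluster, Kubernetes marks the default StorageClass with the `storageclass.kubernetes.io/is-default-class: "true"` annotation, and the DefaultStorageClass admission plugin writes that class name into a new PVC's `spec.storageClassName` when the PVC omits it. The function below is a simplified, hypothetical model of that behaviour operating on plain dictionaries; the "fast-ssd" class is invented for illustration, and treating "nfs-provisioner" as annotated is an assumption about this environment.

```python
from typing import List, Optional

# Annotation Kubernetes uses to mark the cluster's default StorageClass.
DEFAULT_CLASS_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def effective_storage_class(pvc: dict, storage_classes: List[dict]) -> Optional[str]:
    """Return the StorageClass a PVC would end up using, or None if none applies.

    Simplified: an explicit spec.storageClassName wins; otherwise fall back to
    the single StorageClass annotated as the default.
    """
    spec = pvc.get("spec", {})
    if "storageClassName" in spec:
        # An explicit empty string means "no class", i.e. no dynamic provisioning.
        return spec["storageClassName"] or None
    defaults = [
        sc["metadata"]["name"]
        for sc in storage_classes
        if sc["metadata"].get("annotations", {}).get(DEFAULT_CLASS_ANNOTATION) == "true"
    ]
    # Zero or several defaults are treated as "no answer" in this sketch.
    return defaults[0] if len(defaults) == 1 else None


storage_classes = [
    {"metadata": {"name": "nfs-provisioner",
                  "annotations": {DEFAULT_CLASS_ANNOTATION: "true"}}},
    {"metadata": {"name": "fast-ssd"}},  # hypothetical, non-default class
]

# Trimmed copy of the cas-default-permstore PVC: note there is no storageClassName.
permstore_pvc = {
    "metadata": {"name": "cas-default-permstore"},
    "spec": {"accessModes": ["ReadWriteMany"],
             "resources": {"requests": {"storage": "100Mi"}}},
}

print(effective_storage_class(permstore_pvc, storage_classes))  # nfs-provisioner
```

Adding `"storageClassName": "fast-ssd"` to the PVC's spec would override the default, which is how a site could pin individual CAS PVCs to a class tuned for their workload.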

In other words, just because my StorageClass example is called "nfs-provisioner", please do not interpret that to mean that all of your PVs must be NFS-based. Some will be, but not all are required to be.

A similar-looking, but much larger PV (8 Gi) exists for the "cas-default-data" as well.

emptyDir

Let's backtrack a little. Remember that "cas-default-cache-volume" did not reference a PVC for its volume. Instead, its storage is provided by an emptyDir, which maps the directory specified in the container to a physical location on the node running the pod. As a matter of fact, all containers in the pod can read and write files in the same emptyDir volume, even though it might be mounted at a different directory in each container.

But here's the thing: when a pod is removed from a node, the data in its emptyDir is deleted permanently. It's not persistent (hence no PVC or PV). This concept of ephemeral storage for the CAS cache makes sense: CAS only needs the files in its cache while it's actively running. Once CAS stops, those files are useless and no longer needed.

So where exactly is this emptyDir created on the node? To find out, we first need to identify which node the pod is running on. Specifically, we're looking at the sas-cas-server-default-controller pod:

```text
[cloud-user@myhost /]$ kubectl -n gelenv get pods -o wide | grep sas-cas-server-default-controller
NAME                                READY   STATUS    RESTARTS   AGE    IP           NODE        NOMINATED NODE   READINESS GATES
sas-cas-server-default-controller   3/3     Running   0          4d4h   10.42.2.20   intnode02
```


Alright, it's on the node named "intnode02". So when an emptyDir is used, the path on the node typically looks like:

/var/lib/kubelet/pods/PODUID/volumes/kubernetes.io~empty-dir/VOLUMENAME
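Filling in that template is simple path assembly. Here is a minimal Python sketch, assuming the kubelet runs with its default root directory of /var/lib/kubelet (a kubelet started with a different `--root-dir` would place volumes elsewhere); `empty_dir_path` is a hypothetical helper. The example values are the controller pod's UID and cache volume name that this walkthrough arrives at a little further on.

```python
from pathlib import PurePosixPath

# Default kubelet root directory; an assumption, since it is configurable per node.
KUBELET_PODS_DIR = PurePosixPath("/var/lib/kubelet/pods")


def empty_dir_path(pod_uid: str, volume_name: str,
                   pods_dir: PurePosixPath = KUBELET_PODS_DIR) -> PurePosixPath:
    """Build the usual on-node location of a pod's emptyDir volume."""
    return pods_dir / pod_uid / "volumes" / "kubernetes.io~empty-dir" / volume_name


print(empty_dir_path("8b23f6d2-566c-4614-811d-b11014b89b23", "cas-default-cache-volume"))
```

`PurePosixPath` is used so the result is a POSIX-style path even if the script itself runs on a Windows workstation.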

We need to determine the PODUID. Maybe it's obvious? Let's log on to the intnode02 host, list the directory up to that point in the path, and see:

```text
[root@intnode02 /]# ls -l /var/lib/kubelet/pods
drwxr-x--- 5 root root 71 Jan 22 08:32 02c37909-1bc4-4758-ac23-813311ff21ce
drwxr-x--- 5 root root 71 Jan 22 08:51 1bc4246e-9a1f-4327-95e3-dd554094f21c
drwxr-x--- 5 root root 71 Jan 26 13:37 33cd6906-dad6-4254-9940-0ae0d07b1d28
drwxr-x--- 6 root root 94 Jan 22 08:43 49fc605a-eef5-42be-826d-0020eb370449
drwxr-x--- 6 root root 94 Jan 22 08:42 4fe9595d-5df8-4c31-ba98-cb2d0009daf3
drwxr-x--- 5 root root 71 Jan 22 09:02 5ac4b855-9d7c-43e0-8764-23e0b993dbf7
drwxr-x--- 6 root root 94 Jan 22 09:32 5ddc5f35-c4c7-4963-bdbc-05e263bc5d4a
drwxr-x--- 6 root root 94 Jan 22 08:43 7f00b31e-b5e4-4a4e-b0d5-889df2d1efcd
drwxr-x--- 6 root root 94 Jan 22 08:52 8b23f6d2-566c-4614-811d-b11014b89b23
drwxr-x--- 6 root root 94 Jan 22 08:52 90b1dbcc-c13a-453f-8dc0-b62f1a6f5b97
drwxr-x--- 6 root root 94 Jan 22 09:24 96055392-0842-4925-94a6-b852bbb832e6
```

Ewwww. That's a lot of not-human-friendly directory names. Which one corresponds to the /cas/cache of our CAS controller container? Let's ask k8s for the UID of the CAS controller pod:

```text
[cloud-user@myhost /]$ kubectl -n gelenv get pods sas-cas-server-default-controller -o jsonpath='{.metadata.uid}'
8b23f6d2-566c-4614-811d-b11014b89b23
```
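Both facts gathered so far, the node name and the pod UID, live in the same Pod object (`spec.nodeName` and `metadata.uid`), so one `kubectl get pod ... -o json` call returns both. The Python sketch below assumes you want to script that lookup; `fetch_pod_json` and `node_and_uid` are hypothetical helpers, the live call requires kubectl and a working kubeconfig, and the sample JSON is a heavily trimmed stand-in that simply reuses the values reported above.

```python
import json
import subprocess
from typing import Tuple


def fetch_pod_json(namespace: str, pod: str) -> str:
    """Run `kubectl get pod -o json` (requires kubectl and a working kubeconfig)."""
    return subprocess.run(
        ["kubectl", "-n", namespace, "get", "pod", pod, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout


def node_and_uid(pod_json: str) -> Tuple[str, str]:
    """Pull the node name and pod UID out of a Pod object printed as JSON."""
    pod = json.loads(pod_json)
    return pod["spec"]["nodeName"], pod["metadata"]["uid"]


# Heavily trimmed stand-in for real output, reusing the values seen above.
sample = """
{
  "kind": "Pod",
  "metadata": {"name": "sas-cas-server-default-controller",
               "namespace": "gelenv",
               "uid": "8b23f6d2-566c-4614-811d-b11014b89b23"},
  "spec": {"nodeName": "intnode02"}
}
"""

print(node_and_uid(sample))
# Against a live cluster (not run here):
# node_and_uid(fetch_pod_json("gelenv", "sas-cas-server-default-controller"))
```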

So plug that PODUID into the path and let's see how far we can get:

```text
[root@intnode02 pods]# ls -l /var/lib/kubelet/pods/8b23f6d2-566c-4614-811d-b11014b89b23/volumes/kubernetes.io~empty-dir/
drwxrwsrwx 2 root 1001    6 Jan 22 09:43 cas-default-cache-volume
drwxrwsrwx 5 root 1001 4096 Jan 22 08:53 cas-default-config-volume
drwxrwsrwx 3 root 1001   17 Jan 22 08:52 cas-tmp-volume
drwxrwsrwx 7 root 1001   84 Jan 22 08:52 consul-tmp-volume
drwxrwsrwx 4 root 1001  104 Jan 22 08:52 security
```

And there, alongside some other CAS emptyDir destinations, is the directory we're ultimately looking for: cas-default-cache-volume. Now we know exactly where the CAS controller is writing its cache files… at least for now. If the CAS controller pod is moved to another node, a new emptyDir will be set up there, and the old one, along with its contents, will be deleted.

Each CAS worker also has its own emptyDir for its cache files. We want each one to get its own local, unshared disk for this purpose.

All together now

That was a lot of digging. But here's a quick illustration to help tie it together:

To put this into the larger context, note that we only looked at three of the 13 paths defined for the CAS controller's container. The CAS controller's container is placed in a pod with 4 other containers, each of which has additional volume mounts of its own. Multiply that by the total number of CAS workers and controllers deployed. And then there are the many other SAS Viya infrastructure components, which have significant considerations of their own in terms of volume, availability, and so on.

Some takeaways

While we've only chased down three volumes used by CAS, we've already seen a lot (but not all) of the k8s infrastructure responsible for storage. And so far, we've only seen the defaults that SAS Viya ships with. Those defaults are often not what is needed for a production system. Here are a few examples based on what we've seen so far:

- The PV sizes might need to change depending on usage. For example, cas-default-data (which you might recall as CASDATADIR) could see significant usage if certain analytic procedures are employed.

- The k8s cluster used for the examples here has a default StorageClass defined, and Viya is using it without knowing about it. But for a production system, you can define new StorageClasses optimized for the kind of storage performance that SAS Viya needs, and then specify a particular StorageClass in each PVC definition.

- Relying on emptyDir for the CAS cache is fine for demonstrations and testing. However, it can quickly become problematic because, as shown above, it resolves to a directory under /var/lib/kubelet on the node host, which typically sits on the node's root partition. If we fill that partition up, the node itself will become unstable and probably fail. Furthermore, in Azure Kubernetes Service, emptyDir is limited to 50 Gi, and a pod attempting to use more than that is marked for eviction. Since CAS typically backs all of its in-memory data to the cache, this is a real concern. Currently SAS does not provide prescriptive guidance to improve this, relying instead on a site's k8s administrator to resolve it in a way that suits their needs, but it is an area we're looking at intently.
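To get a feel for how quickly an emptyDir-backed cache can run out of room, here is some back-of-the-envelope arithmetic in Python. It assumes the "1.5 to 2× cache-to-RAM" starting point mentioned in the comments below and the 50 Gi AKS emptyDir limit stated in the takeaway above; the per-worker RAM sizes are hypothetical, and real cache usage depends heavily on the workload.

```python
# Limit quoted in the takeaway above; verify it for your own AKS version and node sizes.
AKS_EMPTYDIR_LIMIT_GIB = 50


def cache_needed_gib(ram_gib: float, multiplier: float = 1.5) -> float:
    """Rough CAS cache size for one CAS node, expressed as a multiple of its RAM."""
    return ram_gib * multiplier


def fits_in_empty_dir(ram_gib: float, multiplier: float = 1.5,
                      limit_gib: float = AKS_EMPTYDIR_LIMIT_GIB) -> bool:
    """True if the rough cache estimate stays within the emptyDir limit."""
    return cache_needed_gib(ram_gib, multiplier) <= limit_gib


for ram in (16, 32, 64):  # hypothetical per-worker RAM sizes in GiB
    need = cache_needed_gib(ram)
    print(f"{ram} GiB RAM -> ~{need:g} GiB cache, fits in emptyDir: {fits_in_empty_dir(ram)}")
```

Even at the low 1.5× multiplier, a worker with 64 GiB of RAM would want roughly 96 GiB of cache, well past that 50 Gi ceiling.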



Hello Rob,

It is a very interesting article. I was able to follow it with my own site.yaml. At one point I did not know how to log on to a node. Could you add that?

Another question: I am searching for the path where audit files are written. In older versions of Viya it was /opt/sas/viya/config/var. How do I find that? The objective is to reset the auditing process on Kubernetes. Please advise if you can.


Touwen,

I'm glad this article has been useful to you. When working with a Kubernetes environment, it helps to remember that you'll often need to do things remotely, that is, not from inside the cluster yourself. So when you ask about logging into a node: it's possible, but I bet what you want can be done better in a different way. 😉

Still, to answer your question, there are a few ways to log on to a node in your k8s cluster:

1. ssh to the node's IP address (or hostname), assuming you have the private key for the "admin" (or similar) user

2. use the "ssh node" plugin for kubectl (assumes you have a kubeconfig file with cluster-admin privileges)

3. use kubectl to "exec" into a container for command-line access there

4. use the Lens IDE, select the node, and open an ssh connection (assumes you have a kubeconfig file with cluster-admin privileges -and- that your node can support running a new pod for your ssh session)

I'm not an expert on auditing in SAS Viya, but to help with that question, I suggest taking a look at the SAS Viya documentation > SAS® Viya® Administration > 2022.1.2 > Security > Auditing:

https://go.documentation.sas.com/doc/en/sasadmincdc/v_029/calaudit/n0buxigkyloiv2n1s0i5jfhyxunm.htm

There you should find instructions for:

- accessing auditing data placed in CAS

- exporting audit records for review outside of SAS Viya (instead of looking for files on disk)

- guidance to change auditing configuration in SAS Environment Manager

Also, @AjmalFarzam has a helpful Communities article to get you better oriented, A First Look at Auditing in the New SAS Viya:

https://communities.sas.com/t5/SAS-Communities-Library/A-First-Look-at-Auditing-in-the-New-SAS-Viya/....


Thank you very much for your answer and advice.


Hello Rob,

I have a question about changing the CAS disk cache from emptyDir to, for example, Kubernetes generic ephemeral volumes. We have done this and the system works much better: for the first time we were able to load some tables into memory, 215 GB altogether.

At the moment we have 5 CAS worker nodes, and we added an extra 300Gi of CAS cache on each node, so I would think that we now have 1500Gi of cache. When we used a monitoring tool for the CAS cache, the builtins.getCacheInfo action, it reported that the CAS cache is 99% full, yet we only loaded 215GB, with one redundant copy explicitly requested in our SAS Studio code. I do not understand why the CAS cache is 99% full. Do we need to prepare this storage for the CAS cache somehow? Any advice would help a lot.


Touwen,

Thanks for sharing your success to date with configuring storage for CAS.

It sounds like you already have a handle on monitoring the disk space used by the CAS cache, but just in case you haven't seen it already, we have some utilities that can help:

A New Tool to Monitor CAS_DISK_CACHE Usage

The CAS cache is used not only as a backing store for in-memory data and for replicated copies of tables; CAS maintains additional data there, too. My colleague Nicolas Robert has a great post explaining more:

When is CAS_DISK_CACHE used?

https://communities.sas.com/t5/SAS-Communities-Library/When-is-CAS-DISK-CACHE-used/ta-p/631010

So as you can see, depending on your analytics and data management activities using CAS, there could be a number of things that are consuming disk space in the CAS cache area.

CAS relies on its cache as a form of virtual memory to a degree, and that's great for reducing administrative overhead when working with volumes of data that combine to be larger than RAM. But depending on your activity, you might need to ensure the storage for the CAS cache is several multiples of the amount of RAM. At a bare minimum, SAS recommends starting with 1.5 to 2× as much disk space for CAS cache as RAM, and your specific needs could call for a much higher multiplier. Otherwise, you might find you need to aggressively drop tables from CAS when they're not immediately in use in order to reduce CAS cache usage.

HTH,

Rob


Hello Rob, thank you very much for your answer. We are happy that we have succeeded in implementing a good solution for provisioning the CAS disk cache.

The article with the example of a 100 MB table at the end is great.


We had a live session with a SAS consultant and discovered that tables with the old SAS extension become much larger when loaded into CAS. This was the reason the CAS disk cache filled up so quickly. Once again, thank you for your help.
