Extending change management into Kubernetes

Editor's note: The SAS Analytics Cloud is a new Software as a Service (SaaS) offering from SAS using containerized technology on the SAS Cloud. You can find out more or take advantage of a SAS Analytics Cloud free trial.

This is one of several related articles the Analytics Cloud team has put together while operating in this new digital realm. The articles describe enhancements the support team made to a production Kubernetes cluster to meet customers' application needs. They also walk through a couple of technical issues the team encountered and the solutions it developed.

Articles in the sequence:

How to secure, pre-seed and speed up Kubernetes deployments

Implementing Domain Based Network Access Rules in Kubernetes

Detect and manage idle applications in Kubernetes

Extending change management into Kubernetes (current article)

Companies rely on IT Service Management (ITSM) to standardize processes for IT infrastructure life cycle management. When change management practices are applied to cloud infrastructure, the ephemeral nature of cloud resources makes it easy to overlook the impact of a change on dependent applications and on the operations teams that support the infrastructure. Coming from an operations background, I can recall being paged plenty of times for incidents related to infrastructure that my team did not own. The reasons ranged from incorrect data entry to word associations based on configuration item (CI) names.

As the SAS Analytics Cloud team branched into managed Kubernetes infrastructure, we wanted to share our operational experience to ensure clean and reliable data, as well as provide a repeatable on-boarding pattern for the teams relying on our infrastructure to account for critical applications.

The process

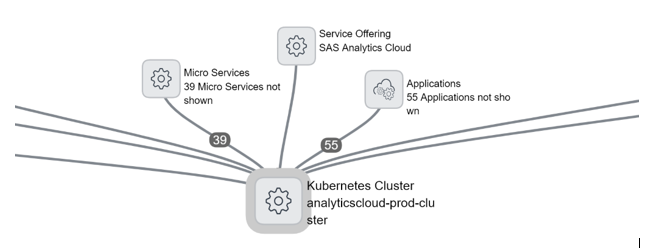

Our objective here is simple. We need to extend our understanding of entities and service dependencies beyond the infrastructure layer. We also want a more accurate accounting of potentially impacted dependent services when making changes. To meet those objectives, we treat each namespace as a separate CI. The resources in the namespace are the services on which the application depends, and they are treated as CIs themselves. Using that knowledge, we can populate a configuration management database (CMDB) with the appropriate relationship linkages up to the cluster and infrastructure level as shown below.

Figure 1: CMDB relationship links to the cluster
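The hierarchy described above (resources link up to a namespace, and the namespace links up to the cluster) can be sketched as a small set of records. This is only an illustration under stated assumptions, not the CMDB Watcher implementation: the `ConfigurationItem` class, the `build_namespace_cis` helper, the field names, and the sample cluster and resource names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ConfigurationItem:
    """Hypothetical CI record; a real CMDB schema will carry more fields."""
    name: str
    ci_type: str                  # e.g. "cluster", "namespace", "deployments"
    parent: Optional[str] = None  # name of the CI this one is linked up to


def build_namespace_cis(cluster: str, namespace: str,
                        resources: Dict[str, List[str]]) -> List[ConfigurationItem]:
    """Return CIs for a cluster, one namespace, and the resources inside it."""
    cis = [
        ConfigurationItem(cluster, "cluster"),
        ConfigurationItem(namespace, "namespace", parent=cluster),
    ]
    for resource_type, names in resources.items():
        for name in names:
            # Prefix with the namespace so same-named resources in
            # different namespaces remain distinct CIs.
            cis.append(ConfigurationItem(f"{namespace}/{name}",
                                         resource_type, parent=namespace))
    return cis


# Sample data (made up for the example)
cis = build_namespace_cis(
    cluster="cluster-a",
    namespace="reorders",
    resources={"deployments": ["web"], "services": ["web-svc"]},
)
for ci in cis:
    print(ci.ci_type, ci.name, "->", ci.parent)
```

Storing only a parent link on each record keeps the example small; a CMDB would typically model relationships as separate typed records.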

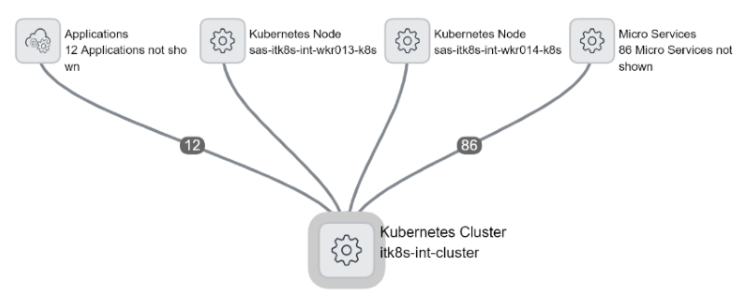

We accomplish this by running our CMDB Watcher process on each Kubernetes cluster we manage. The logical representation of a cluster is linked to both the physical and the logical representation of each Kubernetes node. Tracking the physical node is standard in a CMDB, but we also decided to track the logical representation of a Kubernetes node (Figure 2). Doing so allows us to store Kubernetes-specific information such as the kubelet version, currently running pods, and links to metrics.

Figure 2: The Kubernetes node
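As a rough sketch of what a logical node record might hold, the function below builds one from a Node object and a list of Pod objects that have already been fetched as plain dictionaries. The input fields (`status.nodeInfo.kubeletVersion`, `spec.nodeName`, `status.phase`) are standard Kubernetes API fields. The `node_to_ci` function, the keys of the returned record, and the sample data are assumptions made for illustration.

```python
from typing import Any, Dict, List


def node_to_ci(node: Dict[str, Any], pods: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Build a logical-node CI from a Node dict and a list of Pod dicts."""
    name = node["metadata"]["name"]
    node_info = node.get("status", {}).get("nodeInfo", {})
    # Keep only pods scheduled on this node that are currently running
    running = sorted(
        f'{p["metadata"]["namespace"]}/{p["metadata"]["name"]}'
        for p in pods
        if p.get("spec", {}).get("nodeName") == name
        and p.get("status", {}).get("phase") == "Running"
    )
    return {
        "name": name,
        "ci_type": "kubernetes-node",   # hypothetical CI type label
        "kubelet_version": node_info.get("kubeletVersion"),
        "running_pods": running,
    }


# Sample objects (made up for the example)
sample_node = {
    "metadata": {"name": "node-1"},
    "status": {"nodeInfo": {"kubeletVersion": "v1.14.1"}},
}
sample_pods = [
    {"metadata": {"namespace": "reorders", "name": "web-0"},
     "spec": {"nodeName": "node-1"}, "status": {"phase": "Running"}},
    {"metadata": {"namespace": "reorders", "name": "web-1"},
     "spec": {"nodeName": "node-2"}, "status": {"phase": "Running"}},
    {"metadata": {"namespace": "billing", "name": "job-0"},
     "spec": {"nodeName": "node-1"}, "status": {"phase": "Pending"}},
]
node_ci = node_to_ci(sample_node, sample_pods)
print(node_ci)
```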

From the cluster level, as mentioned earlier, we want to break the namespaces into different logical blocks. We take advantage of the Kubernetes API and watch for new namespaces as they are created. When a new namespace appears, we look for custom annotations such as application name or support group information. These annotations provide immense value to operations groups. Support group information allows incidents relating to a specific application to be routed rapidly to the staff who can remediate them. If a cluster-wide issue arises, the impacted groups receive built-in, useful notifications.
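One way to picture the annotation handling is a function that takes a namespace watch event and extracts the fields a CMDB record would need. The sketch below assumes the event has the shape the Kubernetes watch API returns (a `type` such as `ADDED` plus the `object` itself) and that it has already been decoded into a dictionary. The annotation keys are the ones that appear in the namespace configuration in Figure 3. The `namespace_event_to_ci` function and the layout of the returned record are hypothetical.

```python
from typing import Any, Dict, Optional

# Annotation keys as they appear in the namespace configuration (Figure 3)
APPLICATION_KEY = "cmdb-watcher/application"
SUPPORT_GROUP_KEY = "support-group"


def namespace_event_to_ci(event: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Turn an ADDED or MODIFIED namespace watch event into a CI record.

    Returns None for any other event type.
    """
    if event.get("type") not in ("ADDED", "MODIFIED"):
        return None
    metadata = event["object"]["metadata"]
    # A namespace without annotations may carry None or no key at all
    annotations = metadata.get("annotations") or {}
    return {
        "name": metadata["name"],
        "ci_type": "namespace",          # hypothetical CI type label
        "application": annotations.get(APPLICATION_KEY),
        "support_group": annotations.get(SUPPORT_GROUP_KEY),
    }


# Sample event (made up for the example)
sample_event = {
    "type": "ADDED",
    "object": {
        "kind": "Namespace",
        "metadata": {
            "name": "reorders",
            "annotations": {
                "cmdb-watcher/application": "reorders",
                "support-group": "sask8ssupport@sas.com",
            },
        },
    },
}
namespace_ci = namespace_event_to_ci(sample_event)
print(namespace_ci)
```

Returning `None` for missing annotations rather than raising lets the caller decide how to handle namespaces that have not been onboarded yet.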

Examples of the scripts we used for monitoring appear in Figures 3 and 4 below.

    # Example snippet of namespace configuration with relevant fields
    ...
    apiVersion: v1
    kind: Namespace
    metadata:
      annotations:
        cmdb-watcher/application: reorders
        support-group: sask8ssupport@sas.com
    ...

Figure 3: Namespace configuration code

    # Code snippet showing some of the resources we retrieve in order to
    # convert them to configuration items
    def fetch(self, namespace):
        if not namespace:
            raise ValueError('Namespace parameter required')

        # Take the namespace and check for common resource types to report back
        self.namespace = namespace
        rc_fetch = self.list_replication_controller()
        service_fetch = self.list_services()
        deployment_fetch = self.list_deployments()
        ss_fetch = self.list_statefulsets()
        ds_fetch = self.list_daemonsets()

        # Gather the results and send them back
        fetch_list = {}
        fetch_list["replicationcontrollers"] = rc_fetch
        fetch_list["services"] = service_fetch
        fetch_list["deployments"] = deployment_fetch
        fetch_list["statefulsets"] = ss_fetch
        fetch_list["daemonsets"] = ds_fetch
        return fetch_list

Figure 4: Code snippets for converting resources to configuration items

Observed benefits

Administratively, these links reduce the time required to determine the impact of a change applied to infrastructure. The linkages also help in finding a common link when multiple errors occur. SAS made a concerted effort to consolidate our infrastructure information in a central location and to make sure the data collected in our infrastructure repository is accurate and reliable.
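Both uses of the links can be illustrated with a short traversal over child-to-parent relationships. This is a minimal sketch that assumes the relationships form a simple tree. The `PARENT` mapping, the CI names, and the `impacted_by`, `ancestors`, and `common_link` functions are hypothetical and do not reflect how the CMDB itself stores or queries relationships.

```python
from typing import Dict, List, Optional, Set

# Hypothetical child -> parent links, as they might be read from a CMDB
PARENT: Dict[str, Optional[str]] = {
    "cluster-a": None,
    "reorders": "cluster-a",
    "billing": "cluster-a",
    "reorders/web": "reorders",
    "reorders/web-svc": "reorders",
    "billing/api": "billing",
}


def impacted_by(ci: str, parent: Dict[str, Optional[str]]) -> Set[str]:
    """Return every CI that sits below `ci` in the hierarchy."""
    impacted: Set[str] = set()
    frontier = [ci]
    while frontier:
        current = frontier.pop()
        children = [c for c, p in parent.items() if p == current]
        impacted.update(children)
        frontier.extend(children)
    return impacted


def ancestors(ci: str, parent: Dict[str, Optional[str]]) -> List[str]:
    """Return the chain from `ci` up to the top of the hierarchy, `ci` first."""
    chain = [ci]
    while parent.get(chain[-1]) is not None:
        chain.append(parent[chain[-1]])
    return chain


def common_link(failing: List[str],
                parent: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the closest CI shared by the ancestor chains of all failing CIs."""
    if not failing:
        return None
    shared = set(ancestors(failing[0], parent))
    for ci in failing[1:]:
        shared &= set(ancestors(ci, parent))
    # Walk upward from the first failing CI; the first shared entry is closest
    for candidate in ancestors(failing[0], parent):
        if candidate in shared:
            return candidate
    return None


print(impacted_by("reorders", PARENT))
print(common_link(["reorders/web", "billing/api"], PARENT))
```

The first call answers "what depends on this namespace if I change it?", and the second answers "what do these failing resources have in common?".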

We mentioned life cycle management earlier, and this process assists with that, as the following situation illustrates. On a team at SAS managing VMware, where the concept of clusters is very similar to that in Kubernetes, a colleague mentioned challenges they ran into with the retirement of Microsoft Windows Server 2008. Much like Windows 7, Windows Server 2008 faces end of life in January 2020. In some instances, assuming CMDB data was even present, no information existed on which cluster a VM belonged to, who owned an instance, or which operating system it ran.

As you can imagine, if you are running a project to ensure all end of life software is no longer running, manually validating and tracking down information for a virtual machine with out-of-date data is not a fun task. Now multiply this task over hundreds of orphaned instances, across multiple clusters. Yuck! Without good data, you have no idea if the resource you want to terminate is a critical application.

Finally

One last point: Your understanding of your situation is only as good as your data. As demonstrated above, getting data is key, but collecting the right data is another challenge. In our experience, if you make a process easy and unobtrusive, users are more likely to participate. So asking for something as easy as annotations, and letting our automation handle the communication, allows us to be more confident in the data we have collected. Our automation removes a manual layer of changes and lets us be sure that we know the full scope of how our actions affect dependent users.
