Deploying SAS Viya using Red Hat OpenShift GitOps

Many years ago – even before I started my professional life as a software engineer – I became fascinated with Perl. It was the first programming language I ever learned and I really loved it because it allowed for nearly unlimited chaotic creativity (take a look at some results of the annual obfuscated Perl contest if you’re not sure what I am talking about).

I admit that over time I forgot how to code in Perl, but what really stayed in my mind was a quote from Perl’s inventor, Larry Wall, which can be found in his foreword to the famous Camel Book:

“laziness is a virtue” (in programming at least)

I must confess that I have always believed this statement to be true, and for that reason I was excited to see SAS release a utility called the SAS Deployment Operator earlier this year. Using the Operator has become the recommended approach for deploying SAS Viya, as it can help you fully automate the software lifecycle management of a SAS Viya environment (deploying, re-deploying, updating …). Put simply: it’s a pod taking care of other pods. Here are some of the key benefits of using the Operator (as opposed to the traditional, manual deployment approach):

- It’s an approved standard – the Deployment Operator adheres to the standard Kubernetes Operator pattern for managing workloads on Kubernetes clusters.

- It’s GitOps – providing automation, version control and configuration management.

- Clear separation of user privileges – let SAS administrators manage the lifecycle of SAS Viya without the need for elevated permissions and without requiring the Kubernetes administrators to help them out.

In this blog I’d like to provide you with more details on how the Deployment Operator can be integrated into a GitOps workflow on the Red Hat OpenShift Container Platform.

SAS Deployment Operator

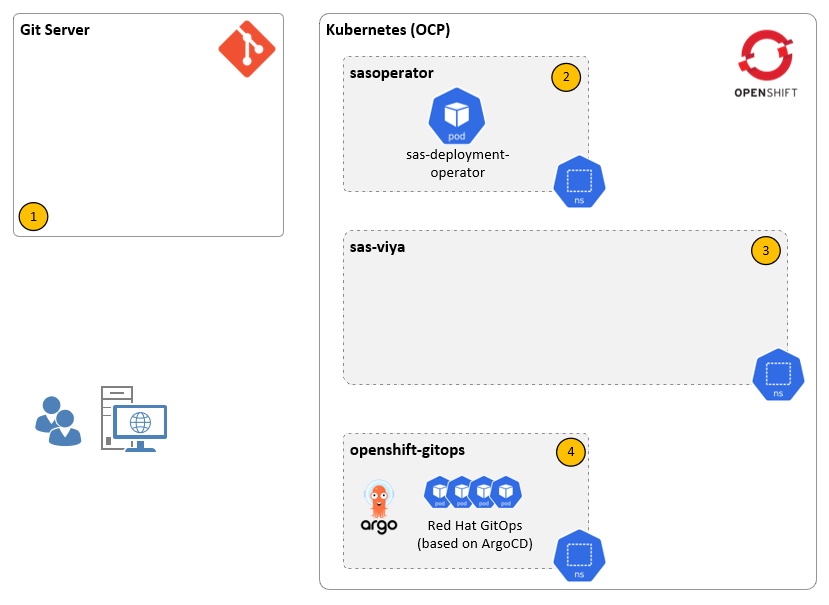

In order to save some space I won’t cover the basics on how to deploy the Operator because this is all well documented in the SAS Operations Guide. I'll just mention that I’ll be using the Operator in cluster mode, so the Operator will have its’ own namespace and will be able to handle multiple SAS Viya deployments on the same cluster. And obviously I’m going to use a Git server for storing the deployment manifests which need to be presented to the Operator. There are alternative options for both things – please have a look at the docs to learn more about them. Our starting point looks like this:

Let me briefly explain the major components of this infrastructure before we move on. In the above diagram you see:

- The Git server. This is the location where we will store the deployment manifests and the custom resource (CR) which triggers the Deployment Operator.

- The SAS Deployment Operator (in cluster mode). The Operator sits idle until it gets triggered by a SASDeployment CR (more on this later).

- The target namespace, i.e. the namespace where we want SAS Viya to be deployed. For now it’s empty.

- A deployment of Red Hat OpenShift GitOps, which is OpenShift’s built-in solution for Continuous Delivery tasks. We’ll talk about this in more detail below.

There are some preliminary steps which are not shown on the diagram. Most importantly, the OpenShift administrator should already have created the required custom Security Context Constraints (SCCs) and assigned them to the (not-yet-existing) local SAS Viya service accounts. This is a one-time preparation task when setting up the target namespace. Assigning project users, project quotas and storage volumes are examples of other tasks which might also need to be completed beforehand.
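
One way to pre-assign an SCC to a service account that does not exist yet is a namespaced Role that allows `use` of the SCC, bound to the service account by name. Here is a minimal sketch, assuming an SCC and a service account that are both named `sas-cas-server` and a target namespace named `sas-viya`. These names are illustrative, the Role and RoleBinding names are hypothetical, and the SAS documentation lists the SCCs your deployment actually needs.

```yaml
# Sketch only: names below are assumptions, not taken from the SAS documentation.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: use-scc-sas-cas-server        # hypothetical name
  namespace: sas-viya
rules:
  - apiGroups: ["security.openshift.io"]
    resources: ["securitycontextconstraints"]
    resourceNames: ["sas-cas-server"] # assumed SCC name
    verbs: ["use"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: use-scc-sas-cas-server        # hypothetical name
  namespace: sas-viya
subjects:
  # A RoleBinding may reference a service account before it exists.
  - kind: ServiceAccount
    name: sas-cas-server              # assumed service account name
    namespace: sas-viya
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: use-scc-sas-cas-server
```

The `oc adm policy add-scc-to-user <scc> -z <serviceaccount>` command achieves a similar result from the command line.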

Now let’s focus on the Deployment Operator. Like all operators following the standard pattern, it watches for Kubernetes configuration objects of the type CustomResource (CR), which are user-defined extensions to the Kubernetes API based on CustomResourceDefinitions (CRDs). SAS has created a CRD named “SASDeployment”, and by submitting an instance manifest of this CRD to a target namespace in the cluster, we can trigger the Operator to start working.

But what exactly does that mean? How does the Operator know what to do and where to find things? This is all part of the CR we’re submitting. It contains the license information, the URL of the container image registry (if a mirror is used), the cadence we want to deploy (e.g. STABLE or LTS) and – most importantly – the location of the manifests and patches to use for the deployment. Here’s a shortened example of a deployment CR:

    apiVersion: orchestration.sas.com/v1alpha1
    kind: SASDeployment
    ...
    spec:
      caCertificate: ...
      clientCertificate: ...
      license: ...
      cadenceName: stable
      cadenceVersion: 2021.1.6
      imageRegistry: container-registry.org/viya4
      userContent:
        url: git::https://user:token@gitserver.org/sas/viya-deploy.git

Of course there is no magic involved. You still have to provide the required manifests and patches, just like you would for a manual deployment of SAS Viya. However, you do not have to run the kustomize and kubectl apply commands yourself – the Operator does this for you.

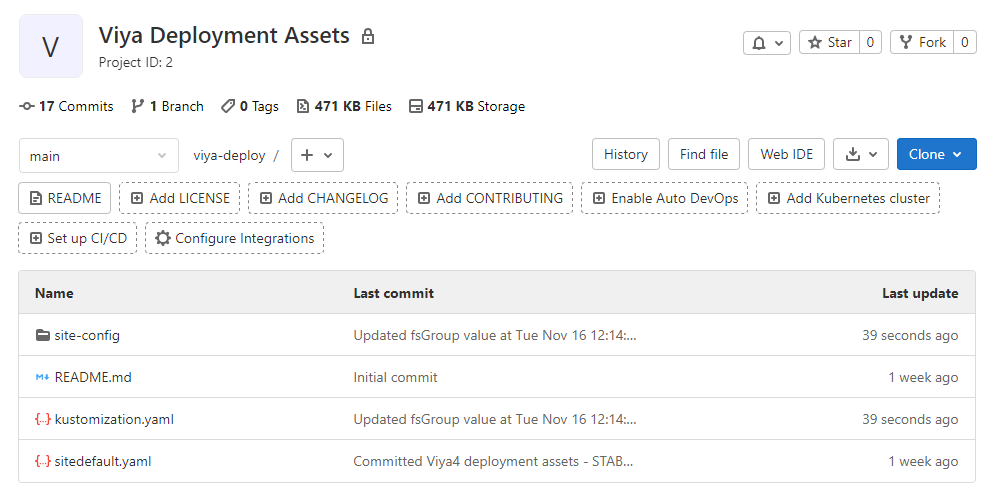

There’s a funny looking URL shown at the end of the abbreviated CR shown above – this is a so-called go-getter URL, which is basically a way to inject information about the source system to a download URL. In our case, the “git::”-prefix tells the Deployment Operator that the userContent resides in a Git repository. The SAS administrator owns the contents of this repository and needs to update it whenever a configuration change is required. The repository is simply a 1:1 copy of the manifests and patches from your site-config folder (note that you do not have to include the sas-bases content – the Operator knows how to get it):

At this point, we nearly have everything we need: there’s the Operator, a target namespace, a Git repository with all manifests and patches and a CR manifest to kick off the Operator. We could actually simply submit the CR file to the cluster with a kubectl command like this:

    $ kubectl -n sas-viya apply -f ~/viya-sasdeployment-cr.yaml

This would cause the Operator to deploy a reconcile job to the sas-viya namespace, which would start deploying the software. But this is not what we want, as it would be a manual intervention, which we want to avoid if possible. Shouldn’t there be an automated way of managing the lifecycle of a SAS Viya environment? Indeed there is – enter Red Hat OpenShift GitOps.

Red Hat OpenShift GitOps

Red Hat OpenShift GitOps was announced earlier this year as an integrated add-on for the OpenShift Container Platform (OCP). Being part of the OCP ecosystem, it is very easy to install using the built-in OperatorHub. It provides you with a turnkey tool for automating continuous delivery (CD) tasks. It’s based on the well-known open-source tool Argo CD, which is often paired with Tekton as a tool for continuous integration (CI) for full CI/CD automation. However, for our purposes it’s sufficient to focus on the “CD” part.

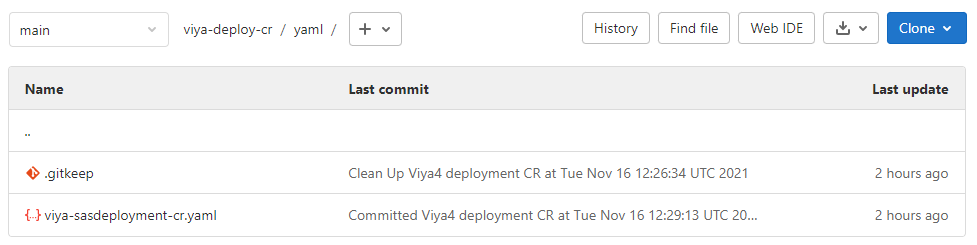

In order to eliminate the last manual step mentioned above we will use another Git repository which only contains our CR manifest. We will instruct OpenShift GitOps to monitor this repository and automatically sync its’ contents to the cluster. In other words: pushing the CR manifest to the Git repository will trigger a sync with OpenShift GitOps. The CR will be deployed to Kubernetes, which in turn triggers the Operator and the deployment will start. This is how our Git repository for the CR could look like:

There couldn’t be much less in it, isn’t it? How do we set up the connection between OpenShift GitOps and Git? This is done by creating an Application object:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: sas-viya-cr-gitops
      namespace: openshift-gitops
    spec:
      destination:
        name: ''
        namespace: sas-viya
        server: 'https://kubernetes.default.svc'
      source:
        path: yaml
        repoURL: >-
          https://user:token@gitserver.org/sas/viya-deploy-cr.git
        targetRevision: HEAD
      project: default
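
The shortened Application manifest above does not show a sync policy. In upstream Argo CD, automatic syncing is switched on through the `syncPolicy.automated` field; without it, a sync has to be started manually from the web interface or the CLI. Here is a minimal sketch of the fragment, assuming you want Git to be the single source of truth. Whether `prune` and `selfHeal` suit your environment is a judgment call, and this is not necessarily how the environment described here was configured.

```yaml
# Fragment to merge into the Application manifest (not a complete object).
spec:
  syncPolicy:
    automated:
      prune: true     # delete cluster objects that were removed from Git
      selfHeal: true  # revert manual changes that drift from Git
```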

There’s one caveat – we need to allow the OpenShift GitOps service account to create the CR resource in our target namespace. A simple way to accomplish this is to add the service account to our target project, for example like this:

    $ oc project sas-viya
    $ oc adm policy add-role-to-user edit system:serviceaccount:openshift-gitops:openshift-gitops-argocd-application-controller

    # we need to grant additional privileges, see the attached file at the end of the blog for details
    $ kubectl apply -f ~/grant-privileges-to-openshift-gitops-sa.yaml
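
The attached file is not reproduced in this text. As a rough idea of what such a grant could look like, here is a minimal sketch, assuming the extra privilege needed is permission to manage SASDeployment objects in the target namespace. The Role and RoleBinding names are hypothetical, and the resource plural `sasdeployments` is an assumption derived from the CRD kind; the actual file may grant more or different permissions.

```yaml
# Sketch only: not the attached file. Names marked hypothetical are illustrative.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: sasdeployment-editor            # hypothetical name
  namespace: sas-viya
rules:
  - apiGroups: ["orchestration.sas.com"]
    resources: ["sasdeployments"]       # assumed plural of the SASDeployment kind
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: sasdeployment-editor-gitops     # hypothetical name
  namespace: sas-viya
subjects:
  # The OpenShift GitOps service account used in the command above.
  - kind: ServiceAccount
    name: openshift-gitops-argocd-application-controller
    namespace: openshift-gitops
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: sasdeployment-editor
```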

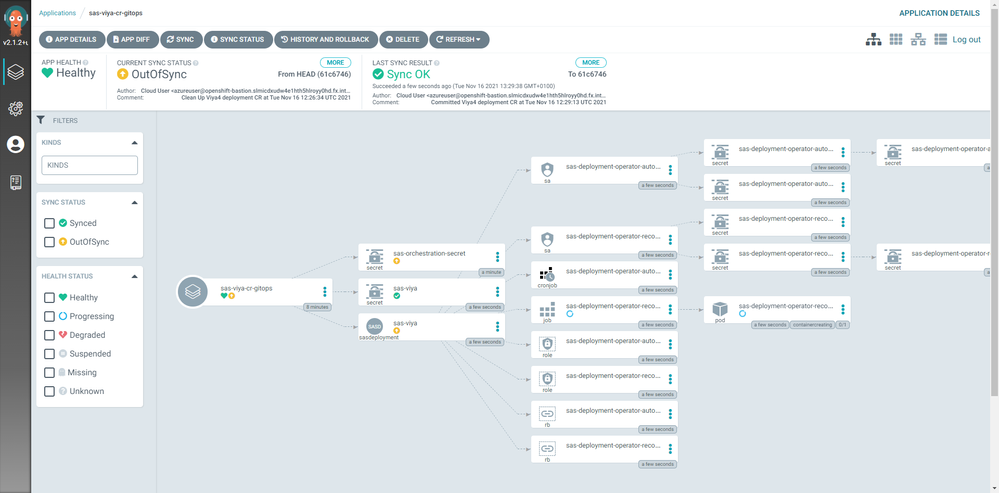

And that’s it – at least as far as we are concerned. Get yourself a cup of tea (or coffee), do a “git push” on the CR repository and watch the system do the heavy lifting for you:

The above screenshot shows the OpenShift GitOps web interface shortly after the Operator has kicked in and deployed the reconcile job. In a few minutes this screen will really look busy as more and more SAS objects start to appear.

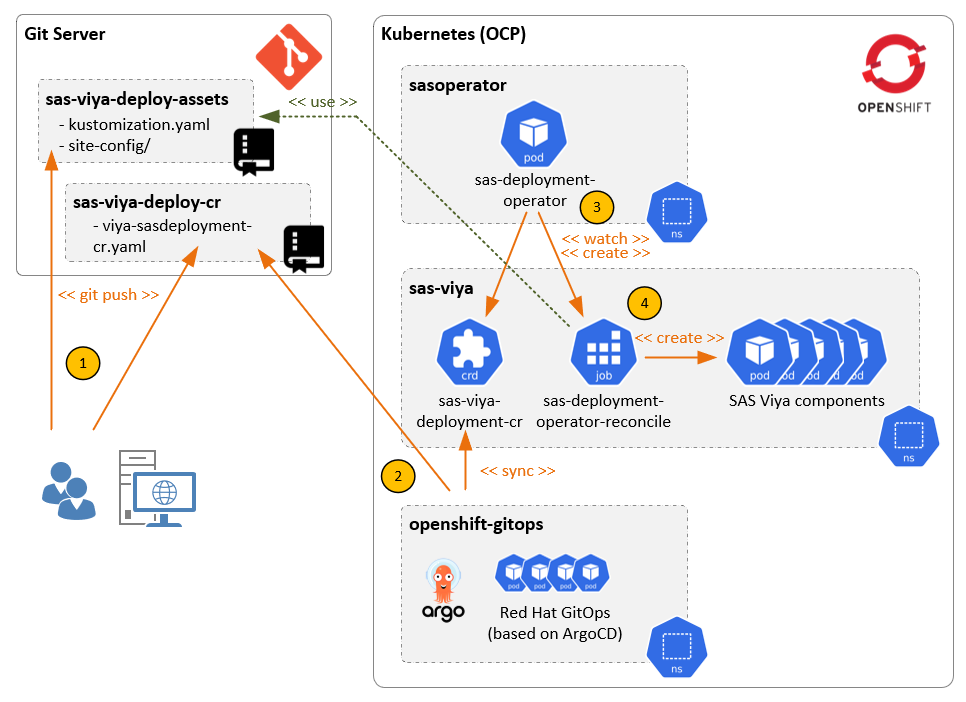

Before I wrap up this blog let me return to the diagram I showed at the beginning. Here’s the extended version of it, showing the major steps of the GitOps process I’ve described so far:

- The SAS administrator pushes the deployment patches and manifests to one Git repository and the deployment CR to a second Git repository.

- OpenShift GitOps monitors the CR repository and starts syncing its contents to the designated target namespace. This creates the CR object in the cluster.

- The SAS Deployment Operator monitors the target namespace and creates a reconcile job as soon as it detects the CR object.

- The reconcile job will then start deploying SAS Viya into the target namespace.

Wrapping up

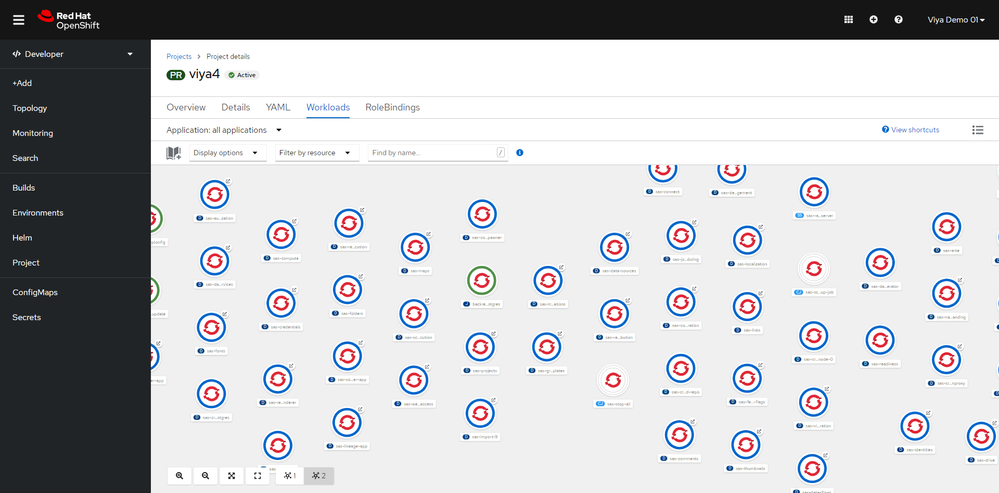

In this blog I have described a way to leverage Red Hat OpenShift GitOps and the SAS Deployment Operator to automate the lifecycle management of SAS Viya on the OpenShift Container Platform. I hope I could whet your appetite to invest some time in getting familiar with these tools as I am fully convinced that it will be worth the effort. Let me close the blog by showing you this fancy view of a SAS Viya deployment on OpenShift. It’s a screenshot taken from the OCP console showing the contents of the target namespace and if you see this it means “mission accomplished” for the Operator.

Leave a comment to let me know what you think about the blog and don’t hesitate to reach out to me if you have any questions.


Some questions:

Do you use one git repository for the deployment definitions in the site-config and the SAS CR (the order) downloaded, or do you separate this in two?

When I download the SAS CR, let's say with podman, can I use skopeo for transfer to the git repository?
