Creating Ensemble Models in SAS Model Studio

If one model is good, is a combination of models better? Yes, sometimes it is! Ensemble models combine the results of models in a variety of ways, sometimes gaining considerable accuracy in your results. Model Studio makes it easy to automatically create ensemble models.

Model Studio includes two tree-based ensemble models that are available out of the box:

- Forest models

- Gradient boosting models

Both random forest models and gradient boosting models are ensembles of many decision trees. The difference is that random forest models use bagging, and gradient boosting models use boosting.

In addition, Model Studio has an Ensemble node that lets you automatically create an ensemble model out of any combination of other models that you already have.

Random Forest

A random forest is created from many decision trees, where each decision tree uses slightly different samples of the training data. Specifically:

- Each tree is built on a subset of the observations (rows)

- The features (variables) available to each splitting node are also a subset

Forest models in SAS Viya use sampling with replacement. Once all the decision trees are trained, their results are combined.
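To make the two kinds of subsetting concrete, here is a toy Python sketch. It is not SAS code and not how PROC FOREST is implemented. Each "tree" is a one-split stump trained on a bootstrap sample of rows (drawn with replacement) using a random subset of the features. Because a stump has only one splitting node, choosing features per tree here is the same as choosing them per node. The trees' predictions are then combined by majority vote. The functions `bootstrap_rows`, `fit_stump`, `fit_forest`, and `predict_forest` are hypothetical names for illustration.

```python
import random
from collections import Counter


def bootstrap_rows(n_rows, rng):
    """Sample row indices with replacement (a bootstrap sample)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def fit_stump(X, y, rows, features):
    """Fit a one-split tree using only the given rows and features.

    Tries every candidate feature/threshold and keeps the split with the
    fewest misclassified rows.
    """
    best = None
    for f in features:
        for threshold in sorted({X[r][f] for r in rows}):
            left = [y[r] for r in rows if X[r][f] <= threshold]
            right = [y[r] for r in rows if X[r][f] > threshold]
            if not left or not right:
                continue
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0]
            errors = sum(v != left_label for v in left) + sum(v != right_label for v in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    if best is None:  # no usable split: predict the majority class
        label = Counter(y[r] for r in rows).most_common(1)[0][0]
        return lambda x: label
    _, f, threshold, left_label, right_label = best
    return lambda x: left_label if x[f] <= threshold else right_label


def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        rows = bootstrap_rows(len(X), rng)                  # subset of observations
        features = rng.sample(range(len(X[0])), n_features)  # subset of variables
        trees.append(fit_stump(X, y, rows, features))
    return trees


def predict_forest(trees, x):
    """Combine the individual trees' predictions by majority vote."""
    return Counter(tree(x) for tree in trees).most_common(1)[0][0]


# Toy data: two features, two classes.
X = [[1, 1], [2, 2], [3, 3], [7, 7], [8, 8], [9, 9]]
y = ["A", "A", "A", "B", "B", "B"]
trees = fit_forest(X, y, n_trees=25, n_features=1, seed=0)
print(predict_forest(trees, [0, 0]), predict_forest(trees, [10, 10]))
```

Each tree sees a different sample, so the trees disagree on the details. The vote smooths out those differences.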


Because the trees in a bagged ensemble do not depend on one another, they can be trained in parallel. As a result, random forests take advantage of the parallel processing compute power in Viya.

FYI, if you are concerned about reproducibility, you can use a random seed to get the same results repeatedly from a forest model IF you are using the same environment, the same data set sorted in the same way, and the same partitioning. However, by doing so, you are removing the "random" from the random forest.
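The following Python sketch (not SAS code) shows why the sort order matters. Here `random.Random` stands in for the seed option in Viya, and `bootstrap_sample` is a hypothetical helper. A fixed seed reproduces the same row *positions*. If the data are sorted differently, those positions point to different observations, so the sample changes even though the seed did not.

```python
import random


def bootstrap_sample(rows, seed):
    """Draw a bootstrap sample (with replacement) from rows using a fixed seed."""
    rng = random.Random(seed)
    return [rows[rng.randrange(len(rows))] for _ in rows]


data = ["obs1", "obs2", "obs3", "obs4", "obs5"]

# Same seed, same data in the same order: identical sample on every run.
first = bootstrap_sample(data, seed=12345)
second = bootstrap_sample(data, seed=12345)
print(first == second)

# Same seed, data sorted differently: the seed selects the same positions,
# so different observations can land in the sample.
reordered = list(reversed(data))
print(bootstrap_sample(reordered, seed=12345))
```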

Gradient Boosting

Gradient boosting also generates many decision trees. However, in this case, the trees are generated sequentially, each from a slightly different subsample of the training data. That is, results from one decision tree are used in constructing the next: each new tree is fit to the errors (residuals) that the trees before it have not yet explained. In the end, the contributions of all the trees, each scaled by a learning rate, are added together into one consolidated result.
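The sequential idea can be sketched in a few lines of Python for an interval target with squared-error loss. For this loss, the "errors" each new tree fits are simply the residuals. This is a simplified illustration, not the SAS Viya implementation. It uses one-split regression trees, a single input, no subsampling, and a fixed learning rate. `fit_regression_stump` and `fit_gradient_boosting` are hypothetical names.

```python
def fit_regression_stump(x, residuals):
    """Find the single threshold on x that best fits the residuals (least squares)."""
    best = None
    for threshold in sorted(set(x))[:-1]:  # skip the max so the right side is never empty
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda xi: left_mean if xi <= threshold else right_mean


def fit_gradient_boosting(x, y, n_trees=50, learning_rate=0.1):
    base = sum(y) / len(y)  # start from the mean of the target
    predictions = [base] * len(y)
    trees = []
    for _ in range(n_trees):
        # For squared-error loss, the negative gradient is the residual.
        residuals = [yi - pi for yi, pi in zip(y, predictions)]
        tree = fit_regression_stump(x, residuals)
        trees.append(tree)
        # Each new tree nudges the current predictions toward the target.
        predictions = [pi + learning_rate * tree(xi) for xi, pi in zip(x, predictions)]
    return lambda xi: base + learning_rate * sum(tree(xi) for tree in trees)


x = [1, 2, 3, 4, 5, 6]
y = [1, 1, 1, 5, 5, 5]
model = fit_gradient_boosting(x, y, n_trees=50, learning_rate=0.1)
print(round(model(1), 3), round(model(6), 3))
```

A small learning rate makes each tree correct only part of the remaining error, which is why boosting typically needs many trees.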

Gradient boosting in SAS Viya trains a decision tree by dividing the data, then dividing each resulting split, and so on. Specific steps are:

1. Select candidate features (inputs, i.e., independent variables)

2. Compute the association of each feature (input) with the target (outcome, i.e., dependent variable)

3. Search for the best split, i.e., the one that uses the most highly associated features

a. For a nominal target, find the split that maximizes the information gain (the reduction in impurity)

b. For an interval target, find the split that maximizes the reduction in variance

This process continues recursively.
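Step 3 can be illustrated with a short Python sketch that scores every candidate threshold on one input. It uses variance for an interval target and entropy for a nominal target. Entropy is chosen here only as an illustrative impurity measure and may not match the criterion SAS uses internally. The sketch covers a single split on a single feature. It does not cover the feature-association step or the recursion. `best_split`, `variance`, and `entropy` are hypothetical helpers.

```python
import math
from collections import Counter


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_split(x, y, impurity):
    """Return (threshold, reduction) for the split on x that most reduces impurity.

    The reduction is the parent impurity minus the size-weighted impurity of
    the two child nodes.
    """
    parent = impurity(y)
    best = (None, 0.0)
    for threshold in sorted(set(x))[:-1]:
        left = [yi for xi, yi in zip(x, y) if xi <= threshold]
        right = [yi for xi, yi in zip(x, y) if xi > threshold]
        children = (len(left) * impurity(left) + len(right) * impurity(right)) / len(y)
        if parent - children > best[1]:
            best = (threshold, parent - children)
    return best


x = [1, 2, 3, 4]
print(best_split(x, ["a", "a", "b", "b"], entropy))  # nominal target
print(best_split(x, [10, 10, 20, 20], variance))    # interval target
```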

One advantage of gradient boosting is that it can reduce both bias and variance in supervised learning. Cross validation is available for gradient boosting as part of SAS Visual Data Mining and Machine Learning (VDMML).

Both gradient boosting and forest models in SAS Model Studio allow for hyperparameter optimization and cross validation to optimize model properties.

Custom Ensemble Models

You can also develop your own custom ensemble model! The Ensemble node in Model Studio lets you combine any of the models you've already created. The Ensemble node creates a new ensemble model as follows:

- For class targets, the posterior probabilities are combined using one of the following:
  - average
  - geometric mean
  - maximum
  - voting

- For interval targets, the predicted values are combined using one of the following:
  - average
  - maximum
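Here is a rough Python illustration of what these combination rules do for a single observation. It is a sketch under stated assumptions, not the Ensemble node's exact formulas. It assumes that combined class probabilities are renormalized to sum to 1. It also assumes that "voting" means each model votes for its most probable class, with the vote shares used as probabilities. The SAS documentation describes the actual options. `combine_class_posteriors` and `combine_interval_predictions` are hypothetical names.

```python
import math


def combine_class_posteriors(model_probs, method="average"):
    """Combine per-model posterior probabilities for one observation.

    model_probs: list of dicts (one per model) mapping class -> probability.
    Returns combined probabilities renormalized to sum to 1 (an assumption).
    """
    classes = model_probs[0].keys()
    n = len(model_probs)
    if method == "average":
        combined = {c: sum(p[c] for p in model_probs) / n for c in classes}
    elif method == "geometric_mean":
        combined = {c: math.prod(p[c] for p in model_probs) ** (1 / n) for c in classes}
    elif method == "maximum":
        combined = {c: max(p[c] for p in model_probs) for c in classes}
    elif method == "voting":
        votes = [max(p, key=p.get) for p in model_probs]  # each model's top class
        combined = {c: votes.count(c) for c in classes}
    else:
        raise ValueError(f"unknown method: {method}")
    total = sum(combined.values())
    return {c: v / total for c, v in combined.items()}


def combine_interval_predictions(predictions, method="average"):
    if method == "average":
        return sum(predictions) / len(predictions)
    if method == "maximum":
        return max(predictions)
    raise ValueError(f"unknown method: {method}")


# Three models' posteriors for one observation.
probs = [{"yes": 0.8, "no": 0.2}, {"yes": 0.6, "no": 0.4}, {"yes": 0.3, "no": 0.7}]
for m in ("average", "geometric_mean", "maximum", "voting"):
    print(m, combine_class_posteriors(probs, m))
print(combine_interval_predictions([10, 20, 30], "maximum"))
```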

The new Ensemble model can now be treated like any other model in your Model Studio pipeline. You can assess it and compare it to your other models to see if you get any improvement in accuracy.

You can also get score code from this new Ensemble model. The score code from the Ensemble node is a combination of the various models. The score code can be either:

- A single score code file that contains DATA step code, if all models produce DATA step score code

- An embedded processing (epcode) file and one or more analytic stores (ASTOREs), if ANY of the models produce score code in the form of epcode and ASTOREs

For more information on score code and ASTOREs see my article Creating an ASTORE in SAS VDMML Model Studio on SAS Viya.

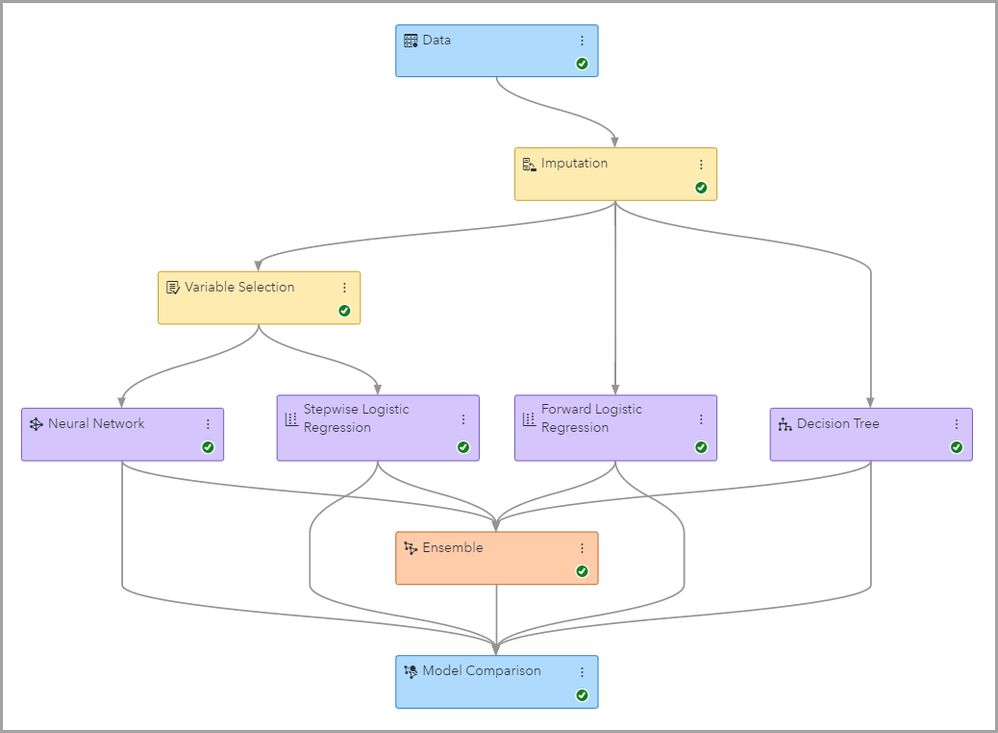

In the Model Studio example below, the Ensemble model is created from the Neural Network, the Stepwise Logistic Regression, the Forest Model, the Forward Logistic Regression, the Gradient Boosting model, and a Decision Tree.

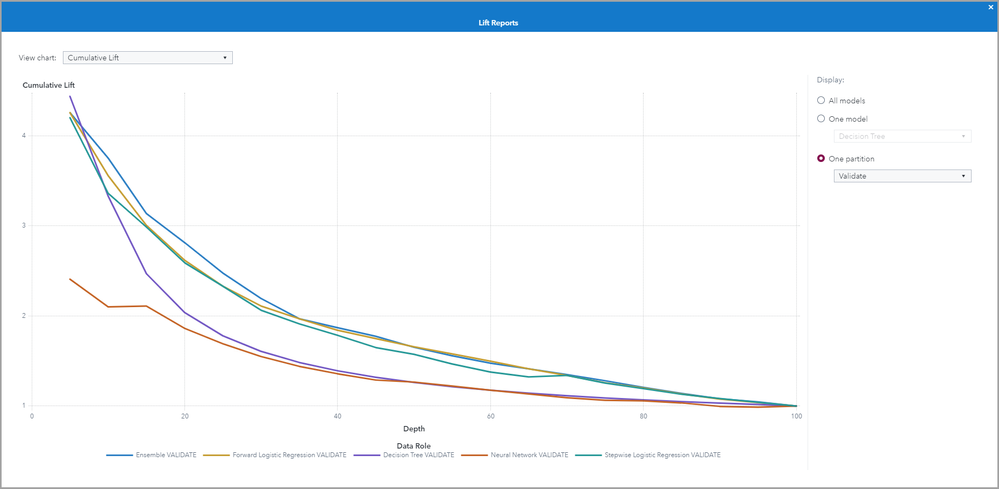

Opening our Model Comparison results, we see that the ensemble model we created did not beat the system-created forest and gradient boosting models.

Let’s delete the forest and gradient boosting models and rerun our pipeline.

We see that the Ensemble model created from the logistic regression and decision tree models does improve over those models.

The beauty of Model Studio is that we can so quickly and easily compare all of these different models to find the most accurate one.

What is Cross Validation?

Cross validation is commonly used to estimate generalization error. k-fold cross validation is one example, where k is the number of subsets (folds) that the data are divided into. In 5-fold cross validation, for example, the data are split into five roughly equal subsets. The model (for example, a decision tree) is fit five times, each time on four-fifths of the data, and the remaining one-fifth (the holdout fold) is used for assessment. The five assessments are then averaged. In this method, every observation is used for both training and assessment.
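A minimal Python sketch of the k-fold procedure follows. Model Studio handles this for you, so this only illustrates the mechanics. For simplicity, the folds here are contiguous blocks of rows. In practice, rows are usually assigned to folds at random. `k_fold_indices`, `cross_validate`, `fit_mean`, and `mse` are hypothetical helpers, and the "model" is just a mean predictor.

```python
def k_fold_indices(n, k):
    """Split row indices 0..n-1 into k contiguous folds of nearly equal size."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(x, y, k, fit, error):
    """Fit on k-1 folds, assess on the held-out fold, and average the k errors."""
    scores = []
    for holdout in k_fold_indices(len(y), k):
        held = set(holdout)
        train = [i for i in range(len(y)) if i not in held]
        model = fit([x[i] for i in train], [y[i] for i in train])
        scores.append(error(model, [x[i] for i in holdout], [y[i] for i in holdout]))
    return sum(scores) / k


def fit_mean(xs, ys):
    """A trivial model that always predicts the training mean."""
    m = sum(ys) / len(ys)
    return lambda xi: m


def mse(model, xs, ys):
    return sum((model(xi) - yi) ** 2 for xi, yi in zip(xs, ys)) / len(ys)


x = list(range(10))
y = [2.0 * xi for xi in x]
print(cross_validate(x, y, k=5, fit=fit_mean, error=mse))
```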

Interpretability Tools in Model Studio

Because ensemble models are difficult to interpret, you may wish to use the out-of-the-box interpretability techniques available in Model Studio.

See my article LIME and ICE in Viya: Interpreting Machine Learning Models for more information on those interpretability tools.

For More Information:

- Model Studio documentation Ensemble Properties

- Model Studio documentation Overview of Ensemble

- Funda Güneş, Russ Wolfinger, and Pei-Yi Tan, 2017, Stacked Ensemble Models for Improved Prediction A...

- Ricky Tharrington, 2021, Parallel Processing in SAS Viya

Find more articles from SAS Global Enablement and Learning here.
