Azure Storage for SAS Architects

SAS and Microsoft have announced a strategic partnership that is going to influence the market in the upcoming years. This partnership immediately made Azure the preferred cloud provider for SAS deployments, so it is important for SAS Architects and SAS Deployment Engineers to become familiar with Azure technology. One layer of the architecture that deeply influences SAS performance is the I/O stack, and in Azure you can choose from several storage solutions. Which ones are best suited for SAS?

Introduction

In this post I'll do my best to stay high-level enough that you don't have to be a storage expert to follow along; at the same time, I want to provide enough detail to let you understand the how and the why of the different options and choices.

Although SAS on Azure can be deployed on both Windows and Linux, this post focuses on Linux only. Some of this content is clearly explained in the Azure documentation. Luckily for you, you don't have to dig through all those docs: the relevant ones are referenced here.

Since this post was getting too long, I decided to split it into two parts: this first installment describes Azure disk storage options, while the next one will focus on the options to mount or otherwise access external storage resources.

Internal Storage: Disks

Although resources on Azure are "virtual", virtual machines still need the same components as physical ones: CPUs, memory, network interfaces ... and disks. Virtual disks, of course. The good news is that on Azure, virtual disks are managed:

Managed disks are like a physical disk in an on-premises server but virtualized. With managed disks, all you have to do is specify the disk size, the disk type, and provision the disk. Once you provision the disk, Azure handles the rest.

Managed disks are designed for 99.999% availability. Managed disks achieve this by providing you with three replicas of your data.

Azure provides different kinds of disks. Let's see how we can describe them.

Disk Roles

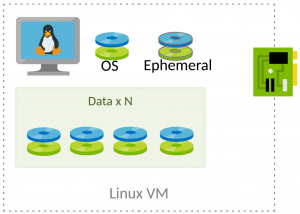

- OS

- As the name implies, this disk is dedicated to hosting the operating system. On Linux, it contains the boot and the root partitions. OS disks are created already partitioned, formatted, and with the operating system installed. You only have to choose the size you prefer, up to 2 TiB. Wow! Every virtual machine comes with one operating system disk. You can also use the OS disk to install the SAS binaries (SASHome) and/or the SAS configuration (config/LevN on SAS 9, /opt/sas/viya/config on SAS Viya).

- OS disks can also be ephemeral. Evaluate the pros and cons for your specific use case before you choose this option.

- Ephemeral

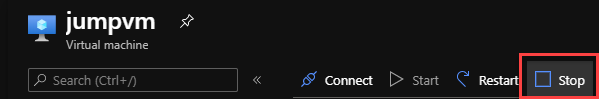

- These disks are already partitioned and formatted, but they are totally empty and, most importantly, they are temporary. Temporary as in: once the data is gone, it's gone forever. A simple reboot, or even a shutdown from within the operating system, is not an issue; these actions preserve temporary disks. But if you deallocate the virtual machine, when you restart it you'll get a brand-new, empty ephemeral disk. Deallocation may happen when the machine is redeployed on different hardware, during a maintenance event, when you change the machine size, or simply when you press "Stop" in the Azure Portal.

Just as with OS disks, most virtual machine sizes come with one temporary disk by default. With SAS, you can use an ephemeral disk for SASWORK or CAS_DISK_CACHE. Just remember that, after a machine deallocation, not even an empty /mnt/resource/saswork directory will be there. You should use a startup script that recreates the directory at every boot, similar to this example from the SAS Viya on Azure QuickStart.

TIP: depending on how the machine is provisioned, ephemeral storage can be mounted in different directories. Machines that use cloud-init provisioning (Ubuntu, and SUSE Linux starting from August 2020) mount it at /mnt. Machines that use the Azure Linux Agent (Red Hat, CentOS, and SUSE Linux until July 2020) mount it at /mnt/resource. And before you answer "SAS does not run on Ubuntu", be aware that Ubuntu 18.04 is the default operating system for the Azure Kubernetes Service (AKS) nodes that SAS Viya 4 will use.

- Persistent

- Also known as data disks, these are the disks that store your permanent data, such as SAS datasets and CAS tables. The size of the virtual machine determines the maximum number of data disks that you can attach to it: you can choose to have zero, one, or more. Just as with physical SCSI disks, you can stripe multiple disks to increase the available storage and, more importantly, the overall I/O throughput. Like physical disks, data disks are totally empty when you attach them to your machine: unpartitioned and unformatted. Before you can use a data disk, you must at least create a partition and a file system on it, and then mount it. You can find sample instructions for these steps in the Azure documentation: here for a single disk, and here to stripe multiple disks into a single volume.

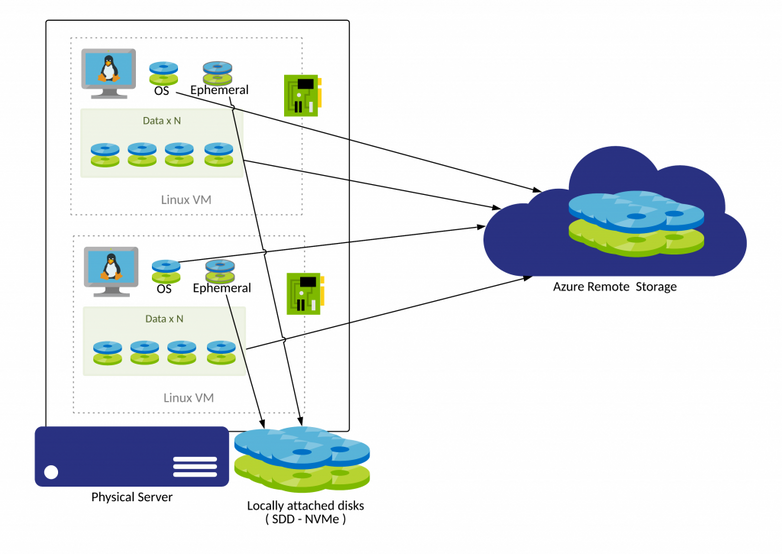
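To make the striping step for data disks more concrete, here is a minimal Python sketch that only builds (and prints) the Linux commands to stripe several empty data disks into a RAID 0 volume with `mdadm`, create a file system on it, and mount it. The device names (`/dev/sdc` and so on), the `/dev/md0` array, the `/sasdata` mount point, and the choice of XFS are all hypothetical placeholders, not values from the Azure or SAS documentation; check `lsblk` on your VM and follow the Azure guide for your distribution. Some guides partition each disk before creating the array; this sketch stripes the whole devices.

```python
"""Build the commands that stripe empty Azure data disks into one RAID 0 volume.

All device names, the md device and the mount point are hypothetical
placeholders; check `lsblk` on your VM for the real device names.
"""
import shlex
from typing import List


def stripe_commands(devices: List[str], md_device: str = "/dev/md0",
                    mount_point: str = "/sasdata") -> List[List[str]]:
    """Return argv lists: create RAID 0, format it, create mount point, mount."""
    if len(devices) < 2:
        raise ValueError("striping needs at least two data disks")
    return [
        # RAID 0 (striping): capacity and throughput add up, no redundancy.
        ["mdadm", "--create", md_device, "--level=0",
         f"--raid-devices={len(devices)}", *devices],
        # XFS is an assumption; use the file system your SAS release supports.
        ["mkfs.xfs", md_device],
        ["mkdir", "-p", mount_point],
        ["mount", md_device, mount_point],
    ]


if __name__ == "__main__":
    # Print only; running them needs root, e.g. subprocess.run(argv, check=True).
    for argv in stripe_commands(["/dev/sdc", "/dev/sdd", "/dev/sde", "/dev/sdf"]):
        print(shlex.join(argv))
```

Printing the commands instead of running them keeps the sketch safe to try. To make the mount survive reboots you would also add an `/etc/fstab` entry, which this sketch does not cover.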

OS and data disks can survive virtual machine deallocations and even virtual machine deletion. This is possible because their space is provisioned from remote Azure storage, similar to SAN storage on premises.

Ephemeral disks, on the other hand, are lost every time a virtual machine is moved to a new physical host, because they are created on the local SSDs attached to that host.
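Because an ephemeral disk comes back blank after a deallocation, the SASWORK directory has to be recreated at every boot. The following minimal Python sketch shows the idea; it is not the SAS Viya on Azure QuickStart script mentioned earlier. It assumes the ephemeral disk is mounted at /mnt/resource (Azure Linux Agent images) or /mnt (cloud-init images), and that a `/tmp`-like directory (world-writable plus sticky bit) is acceptable for SASWORK; the `saswork` name and the permissions are assumptions to adapt to your site.

```python
"""Boot-time sketch: recreate the SASWORK directory on the ephemeral disk.

Assumptions: the ephemeral disk is mounted at /mnt/resource (Azure Linux
Agent images) or /mnt (cloud-init images); "saswork" and the /tmp-like
permissions (world-writable plus sticky bit) are site choices, not defaults.
"""
import os
import stat
from typing import Callable, Iterable

CANDIDATE_MOUNTS = ("/mnt/resource", "/mnt")
# rwx for user, group and others, plus the sticky bit (same as /tmp: 1777).
SASWORK_MODE = stat.S_IRWXU | stat.S_IRWXG | stat.S_IRWXO | stat.S_ISVTX


def recreate_saswork(candidates: Iterable[str] = CANDIDATE_MOUNTS,
                     subdir: str = "saswork",
                     is_mount: Callable[[str], bool] = os.path.ismount) -> str:
    """Create <ephemeral mount>/<subdir> if it is missing and return its path."""
    for mount in candidates:
        if is_mount(mount):
            path = os.path.join(mount, subdir)
            # After a deallocation the disk is blank, so the directory is gone.
            os.makedirs(path, exist_ok=True)
            # chmod explicitly: the mode passed to makedirs is filtered by the umask.
            os.chmod(path, SASWORK_MODE)
            return path
    raise FileNotFoundError("no ephemeral disk mount point found")
```

On a VM you would call `recreate_saswork()` as root from a boot-time service, before any SAS session starts. The default `is_mount=os.path.ismount` ensures only a real mount point is used; passing `is_mount=os.path.isdir` lets you try the function on ordinary directories.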

Although OS and data disks are persistent, they cannot cross Availability Zone (AZ) boundaries. For example, a disk created in AZ 1 cannot be attached to a virtual machine running in AZ 2. By default, if you create a new disk while creating a virtual machine, the disk is created in the same AZ as the virtual machine.

Disk types

Azure currently offers four disk types, each with different performance levels. The size of the virtual machine determines the type of storage you can use to host the disks. The available types of disks are ultra disks, premium solid-state drives (SSD), standard SSDs, and standard hard disk drives (HDD).

- Standard HDD and Standard SSD types are not a good fit for SAS data disks. Although sometimes cheaper than the other choices, they provide lower performance, not enough to reach the SAS I/O requirements of at least 100 MiB/s/core for SAS data, and 150 MiB/s/core for SASWORK. Also, the stated performance is not guaranteed and can vary depending on the traffic patterns.

- Ultra disks are at the opposite end of the spectrum, with top performance (and top price!). A single disk can provide up to 2000 MiB/s, which is enough to feed 125 MiB/s/core to a 16-core machine. They also provide the best flexibility, because you can configure the capacity and the performance of the disk independently. You can even scale the provisioned throughput without restarting your virtual machine. Ultra disks greatly simplify deployments because each one is indeed a single disk: owners do not have to mount and stripe many Premium SSDs into a logical volume, which also simplifies support.

- BUT... yes, there are some caveats, and they can be show-stoppers that prevent you from using Ultra disks altogether.

- Ultra disks are only available in selected Azure regions.

- Virtual machines using Ultra disks cannot benefit from some high availability modes (scale sets, availability sets). Only availability zones are supported.

- Only some virtual machine types currently support Ultra disks, and not every VM size is available in every supported region.

- Plus some additional limitations that should not impact SAS too much.

- Premium SSDs are probably the most common choice for SAS deployments.

- Performance is guaranteed and scales with the disk size. For this reason, you may have to overprovision disk capacity to get enough throughput. For example, to achieve the same 2000 MiB/s as a single 256 GiB Ultra disk, you may have to stripe 10 P30 disks (10 TiB total) or 4 P60 disks (32 TiB total), even if you need much less disk space. Make sure the Azure VM instance type that you plan to use for your SAS deployment supports Premium storage (usually there is an "s" in the instance type name, for example, the Edsv4-series).
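The Premium SSD overprovisioning arithmetic can be checked with a few lines of Python. This minimal sketch assumes that striped disks scale linearly (real RAID 0 volumes add some overhead) and uses the per-disk figures implied by the example in the text: a P30 is 1 TiB at 200 MiB/s and a P60 is 8 TiB at 500 MiB/s. Always check the current Azure Premium SSD table before sizing.

```python
"""How many identical Premium SSDs must be striped to reach a target throughput,
and how much capacity that forces you to provision (linear scaling assumed)."""
import math
from typing import NamedTuple, Tuple


class DiskTier(NamedTuple):
    name: str
    capacity_tib: float
    throughput_mibs: float


# Figures implied by the example in the text; verify against the Azure table.
P30 = DiskTier("P30", 1, 200)
P60 = DiskTier("P60", 8, 500)


def disks_needed(target_mibs: float, tier: DiskTier) -> int:
    """Smallest number of disks whose combined throughput reaches the target."""
    return math.ceil(target_mibs / tier.throughput_mibs)


def plan(target_mibs: float, tier: DiskTier) -> Tuple[int, float]:
    """Return (number of disks, total provisioned capacity in TiB)."""
    n = disks_needed(target_mibs, tier)
    return n, n * tier.capacity_tib


# Target from the text: 16 cores x 125 MiB/s/core = 2000 MiB/s.
for tier in (P30, P60):
    n, tib = plan(16 * 125, tier)
    print(f"{tier.name}: {n} disks, {tib} TiB provisioned")
```

Run as is, it reports 10 P30 disks (10 TiB) and 4 P60 disks (32 TiB), matching the example: the capacity is a side effect of chasing throughput, not a storage requirement.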

Virtual Machines I/O Performance Considerations

The disk performance you get from the different Azure disk types has a direct impact on the performance SAS can achieve. However, virtual machines impose a separate, independent limit on the maximum I/O throughput. Both the chosen VM and the attached disks should meet the SAS throughput requirements to prevent performance degradation.

It does not matter whether you provision a single 2000 MiB/s Ultra disk or 4 P60 disks when using a Standard_E32s_v3 instance (one of the most popular Azure instance types for SAS): the VM has a maximum limit of 768 MiB/s for "uncached disk throughput", and that caps any available disk bandwidth.
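The capping effect can be expressed as a minimum: the usable bandwidth is the lower of the VM's uncached disk throughput limit and the sum of the disks' provisioned throughput. In this minimal sketch only the 768 MiB/s limit of the Standard_E32s_v3 comes from the text; treat the per-disk figures as assumptions and take other VM limits from the Azure VM size tables. Bandwidth is divided by 16 licensed physical cores (32 vCores), the same licensing scenario used in the text.

```python
"""Usable disk bandwidth of a VM, capped by its uncached throughput limit.

Only the Standard_E32s_v3 limit (768 MiB/s) comes from the text; other VM
limits and per-disk figures are inputs you take from the Azure size tables.
"""
from typing import Sequence


def effective_throughput(vm_cap_mibs: float, disk_mibs: Sequence[float]) -> float:
    """The VM limit caps whatever the attached disks could deliver together."""
    return min(vm_cap_mibs, sum(disk_mibs))


def per_core(vm_cap_mibs: float, disk_mibs: Sequence[float],
             licensed_cores: int) -> float:
    """Usable bandwidth per licensed (physical) core, in MiB/s/core."""
    return effective_throughput(vm_cap_mibs, disk_mibs) / licensed_cores


def meets_requirement(vm_cap_mibs: float, disk_mibs: Sequence[float],
                      licensed_cores: int, required_per_core: float) -> bool:
    return per_core(vm_cap_mibs, disk_mibs, licensed_cores) >= required_per_core


E32S_V3_CAP = 768  # MiB/s, "uncached disk throughput" of Standard_E32s_v3

configs = {
    "1 x Ultra (2000 MiB/s)": [2000],
    "4 x P60 (500 MiB/s each)": [500] * 4,
}
for label, disks in configs.items():
    bw = per_core(E32S_V3_CAP, disks, licensed_cores=16)
    ok = meets_requirement(E32S_V3_CAP, disks, 16, required_per_core=100)
    print(f"{label}: {bw:.0f} MiB/s/core, meets 100 MiB/s/core: {ok}")
```

Both configurations land at 48 MiB/s/core, far below the 100 MiB/s/core target for data. To evaluate a constrained-core instance such as Standard_E64-32s_v3, pass the uncached throughput limit of the full-size Standard_E64s_v3 as `vm_cap_mibs` while keeping `licensed_cores=16`.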

Azure documentation does a nice job of explaining the different layers of disk caching and how they affect the maximum available I/O bandwidth, but the key point is to choose a machine type that can provide the required 100 MiB/s/core for data and 150 MiB/s/core for SASWORK/CAS_DISK_CACHE. When making this selection, you will often find that these requirements cannot be satisfied and have to be relaxed; user expectations should be relaxed as well! As an example, none of the configurations proposed under "Reference Instances for SAS Compute Nodes" in Margaret Crevar's post can reach even 50 MiB/s/core for data disks.

As a workaround, when selecting a virtual machine instance size, you can use Constrained Cores to reduce the number of cores presented to the instance's operating system. What does this mean for SAS? SAS licensing usually sets the number of CPUs that you can use. Let's say you have a license for 16 cores, which (usually) translates to 32 vCores. You could use a Standard_E32s_v3 instance, but as we have just seen, it can only provide 768 MiB/s, that is 768 / 16 = 48 MiB/s per core. If you instead use a "constrained" Standard_E64-32s_v3, you still have 32 vCores, but you get a higher I/O bandwidth per core, because in all other respects the machine is a Standard_E64s_v3. Unfortunately, it is also priced as a Standard_E64s_v3, so the extra bandwidth comes with a higher bill at the end of the month!

In summary, choose a machine type that can provide enough maximum bandwidth (look at the "uncached disk throughput" column in the machine type specification), and attach enough Premium disks to achieve that throughput (look at the "Provisioned Throughput per disk" row of this table).

Additional considerations

- Bursting

- Some small Premium SSDs (up to the P20 model) can automatically burst: they can reach a higher throughput than their nominal maximum for up to 30 minutes of continuous usage. After 30 minutes, they revert to their nominal performance target. While their actual utilization stays below the provisioned performance target, they accumulate "credits" that support additional bursting later.

- While this can be a benefit, it's important to be aware of this capability to avoid unintended consequences. For example, the system may perform great until load and usage frequency increase. Bursting can also distort system assessments, such as performance tests: if the tests run during the bursting window, the measured performance can be higher than what the environment can actually sustain under continuous usage, such as a long-running batch job.

- Note: Azure is introducing a new "explicit bursting request" capability. You will be able to explicitly set a higher performance tier (without increasing the disk size) when your application needs to meet higher demand, and return to the initial baseline performance tier once that period is over. This capability is not generally available (GA) yet, so you should not use it with production workloads.

- Caching

- When using managed disks, you can specify the disk caching mode. The SAS recommendation, aligned with Azure recommendations, is to use read-only caching for data disks and read-write caching for OS disks.

- Lsv2-series virtual machines (based on AMD processors) have a storage stack that differs from every other type, and some of the above considerations do not apply to them:

- The machines themselves have a 30-minute I/O bursting capability, independent of and in addition to the bursting provided by the data disks. Some examples of how this works are provided in the Azure documentation.

- In addition to a very small "normal" ephemeral disk, they have directly mapped local NVMe disks. Since these are local, they are ephemeral as well, with the same limitations described above. Their advantages over normal ephemeral SSDs are both throughput (2000 MiB/s per NVMe disk) and capacity (1.92 TiB per NVMe disk), but most importantly scalability: there is one NVMe disk for every 8 vCPUs. The best use for them with SAS is temporary space, such as SASWORK and CAS_DISK_CACHE.

- These NVMe disks are provisioned "raw", just like data disks. You should stripe and format them, using scripts similar to the ones referenced above. But, just as with ephemeral disks, these scripts have to run at every boot, to handle the cases when you get new, blank disks.

- Not all operating systems can benefit from all these specialized capabilities; among the ones supported by SAS, only RHEL 8 can, and RHEL 8 is not supported by Viya 3.x.

- Azure continuously releases new instance types. Always check to see if newer/faster/cheaper options are available. Some of the newest (as of August 2020) include:

- The Edsv4-series, which offers more ephemeral space with higher throughput (and better CPUs). For example, E32dsv4 instances provide 1200 GB of temporary storage with a maximum I/O throughput of 1936 MB/s (about 120 MB/s per physical core) for about a 10% price increase compared to E32sv3. Unfortunately, these instances are not yet available in all regions.

- New instances with no local temporary disks, such as the Esv4 family. Compared to the Esv3 family, they have better CPUs and the same price. Although not the focus of this post, these instances could be interesting for non-compute roles, such as mid-tier servers, metadata servers, Viya microservices hosts, etc.

- Azure shared disks allow you to attach a managed disk to multiple virtual machines simultaneously, but they are not suitable for SAS. They require specific operating system support and specialized applications to provide the sharing capability. It may be possible to use them through Red Hat GFS2, but this has been neither tested nor vetted yet.
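The Premium SSD bursting behavior described under Additional considerations can be pictured as a credit bucket. This minimal Python sketch is a simplified model, not Azure's exact accounting (which may differ, for example in granularity and in how IOPS are tracked). It uses hypothetical numbers (100 MiB/s baseline, 200 MiB/s burst ceiling), assumes the bucket holds exactly 30 minutes of full-speed bursting and that the disk starts with a full bucket, and steps in 1-minute intervals. It illustrates why a short performance test can report more than a long batch job will get.

```python
"""Simplified credit-bucket model of Premium SSD bursting (hypothetical numbers)."""
from typing import List


def simulate(demand_mibs: List[float], baseline: float = 100.0,
             burst: float = 200.0, burst_minutes: int = 30,
             start_full: bool = True) -> List[float]:
    """Return the throughput served (MiB/s) in each 1-minute step."""
    # Bucket size: credits (MiB above baseline) for burst_minutes at full burst.
    bucket_max = (burst - baseline) * 60 * burst_minutes
    credits = bucket_max if start_full else 0.0
    served: List[float] = []
    for want in demand_mibs:
        if want <= baseline:
            # Unused baseline bandwidth refills the bucket, up to its maximum.
            credits = min(bucket_max, credits + (baseline - want) * 60)
            served.append(want)
        else:
            # Go above baseline only as far as the burst ceiling and credits allow.
            extra = min(min(want, burst) - baseline, credits / 60)
            credits -= extra * 60
            served.append(baseline + extra)
    return served


# A 60-minute batch job that always asks for 200 MiB/s.
rates = simulate([200.0] * 60)
print("first 30 min average:", sum(rates[:30]) / 30)
print("last 30 min average:", sum(rates[30:]) / 30)
```

With these assumptions, the first 30 minutes average 200 MiB/s and the last 30 drop to the 100 MiB/s baseline: a benchmark that fits inside the bursting window would never see the second half.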

Conclusion

Azure offers many resource and configuration choices for selecting the proper storage to meet the needs of your SAS environment. You may have to overprovision storage, and use an instance type with more cores than needed, to get the I/O throughput that SAS requires.

Be sure to read Margaret Crevar's latest post, with additional Azure considerations and sample instances, and (spoiler alert) the upcoming paper by Raphael Poumarede about SAS Viya 3.5 on Azure, once it is published.

In the next post, I’ll cover Azure external storage options, such as Azure Files, Azure NetApp Files, Blob storage, and more - including considerations for SAS Grid Manager.
