- Home

- /

- Programming

- /

- Programming

- /

- Re: SQL LIKE operator and whitespace

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi all -

I am joining two tables, one of which has diagnostic events for patients and the other being a lookup table categorizing diagnosis codes as inclusions or exclusions. The first is potentially quite large and the 2nd is generally going to be fairly small and contains some complete diagnosis codes and some truncated ones that might have one or more '%' signs in them to make them compatible with the SQL LIKE operator.

Here's an example:

data claims;

infile cards dlm=',';

length ptid mpid 5 dx $10;

input ptid mpid dx;

cards;

1, 12, H41198

1, 15, C7781

2, 294, H677119

2, 300, G55181

;

run;

data codelist;

infile cards dlm=',';

length code_type $8 code $10 condition $15;

input code_type code condition;

cards;

DX_INCL,C%,CANCER

DX_EXCL,G55181,STUFF

;

run;

proc sql;

select a.ptid, a.mpid, a.dx, b.code_type, b.condition, b.code as lookup_code

from

claims A

inner join

codelist B

on a.dx LIKE trim(b.code); /* <<-- I would like to remove the TRIM function */

quit;The above works fine and produces output as expected:

ptid mpid dx code_type condition lookup_code

| 1 | 15 | C7781 | DX_INCL | CANCER | C% |

| 2 | 300 | G55181 | DX_EXCL | STUFF | G55181 |

...however, being that 'LIKE' is abusive enough, processor-wise, when working with large tables, I would really like to be able to at least get rid of the TRIM( ) function in the join. But removing it from the join, even if I have pre-processed the CODELIST table like this:

data codelist;

set codelist;

code=trim(code);

run;causes the SELECT to return only the exact match (code G55181) and not the C% code. Maybe TRIM/STRIP/COMPRESS and similar don't really add much processor time and I should just ignore, but I am really puzzled by this behavior. Any ideas would be appreciated!

Thanks.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Not sure there is much of a performance hit from having the TRIM() function call there.

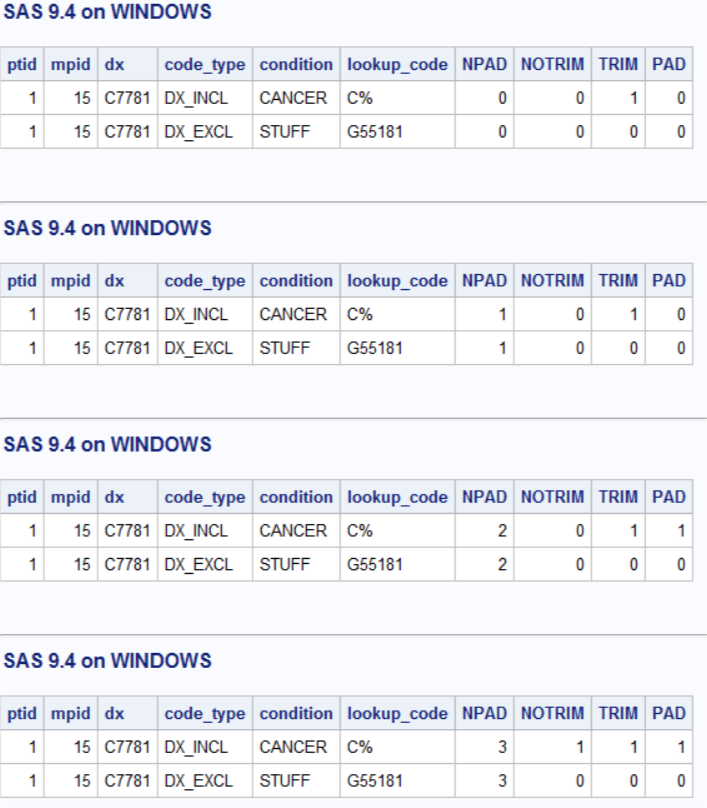

If you prefer you could just add trailing spaces to DX to make sure has enough trailing spaces to match what you might have in your LIKE pattern. A little testing show you need to pad 2 spaces or increase the length by 3 spaces to get the same results as using TRIM(). I think that the value of 2 or 3 spaces is related the relative length of the value of DX and value of CODE since 'C7781' is three bytes longer than 'C%'.

data claims;

infile cards dlm=',';

length ptid mpid 5 dx $10;

input ptid mpid dx;

cards;

1, 15, C7781

;

data codelist;

infile cards dlm=',';

length code_type $8 code $10 condition $15;

input code_type code condition;

cards;

DX_INCL,C%,CANCER

DX_EXCL,G55181,STUFF

;

%macro test(npad);

data claims2;

length dx $%eval(10+&npad);

set claims;

run;

proc sql;

select a.ptid, a.mpid, a.dx, b.code_type, b.condition, b.code as lookup_code

, &npad as NPAD

, a.dx like b.code as NOTRIM

, a.dx like trim(b.code) as TRIM

, a.dx||"%sysfunc(repeat(%str( ),&npad-1))" like b.code as PAD

from claims2 A

, codelist B

;

quit;

%mend ;

%test(0);

%test(1);

%test(2);

%test(3);

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

I don't have a solution for replacing the LIKE operator with anything less invasive.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@quickbluefish wrote:

Hi all -

I am joining two tables, one of which has diagnostic events for patients and the other being a lookup table categorizing diagnosis codes as inclusions or exclusions. The first is potentially quite large and the 2nd is generally going to be fairly small and contains some complete diagnosis codes and some truncated ones that might have one or more '%' signs in them to make them compatible with the SQL LIKE operator.

I would question the " '%' sigsn in them to make them compatible".

If the code list values are relatively static I would seriously consider having custom formats. Then you get value you need with put(codevariable, codeformat.) as newvariable , if the additional variable is even needed.

And for some things a multilabel format is hard to beat.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@quickbluefish wrote:

Thanks, yes, I would prefer a format as well, maybe even using the regex options, but the codelist is both messy and dynamic... and potentially too large for a format as well. Appreciate the reply!

Dynamic, if that means the values change often is more of an issue and would relate to the maintenance. Though if the "new" values appear in an organized manner then it might be a simple as appending some data to a cntlin data set for Proc format to use.

I am not sure how "messy" would be an issue unless you mean that a single value potentially gets mapped to multiple values. Which I would think would make SQL joining questionable as well. Single value to result for formats doesn't matter much. And how large is "potentially too large"? SAS has multiple formats for date values that accept every date from years 1581 to 9999, that's over 3 million values (though I suspect some interesting things are done behind the scenes for these) and datetimes have potentially many more.

I suspect a large enough custom format might have performance issues but if it avoids having to go into odd gyrations with "like" and similar it might be worth the performance hit.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Not sure there is much of a performance hit from having the TRIM() function call there.

If you prefer you could just add trailing spaces to DX to make sure has enough trailing spaces to match what you might have in your LIKE pattern. A little testing show you need to pad 2 spaces or increase the length by 3 spaces to get the same results as using TRIM(). I think that the value of 2 or 3 spaces is related the relative length of the value of DX and value of CODE since 'C7781' is three bytes longer than 'C%'.

data claims;

infile cards dlm=',';

length ptid mpid 5 dx $10;

input ptid mpid dx;

cards;

1, 15, C7781

;

data codelist;

infile cards dlm=',';

length code_type $8 code $10 condition $15;

input code_type code condition;

cards;

DX_INCL,C%,CANCER

DX_EXCL,G55181,STUFF

;

%macro test(npad);

data claims2;

length dx $%eval(10+&npad);

set claims;

run;

proc sql;

select a.ptid, a.mpid, a.dx, b.code_type, b.condition, b.code as lookup_code

, &npad as NPAD

, a.dx like b.code as NOTRIM

, a.dx like trim(b.code) as TRIM

, a.dx||"%sysfunc(repeat(%str( ),&npad-1))" like b.code as PAD

from claims2 A

, codelist B

;

quit;

%mend ;

%test(0);

%test(1);

%test(2);

%test(3);

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

The like operator is expensive, as you stated, and I doubt trim() adds much processing time, if any.

What it does, though, is shorten the like operator's match-string.

It would not surprise me that a shorter like string actually saves time.

Expensive string matching operators, such as like, or functions, such as regular expressions, benefit greatly from shorter match-strings.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

If all the % signs are at the rightmost non-blank character of code, than I think you'll be better of using a hash lookup, with a new variable TRIM_CODE (stripped of the % sign) in the codelist dataset. Then for each incoming claims record do a full length (using L for length of the dx value) lookup of DX against a hash object built from CODELIST and using TRIM_CODE as the lookup key. If not found, then shorten L by one character at a time, until either a lookup succeeds (i.e. the hash FIND method returns a zero) or L=0 (meaning no matching trim_code was found):

data claims;

infile cards dlm=',';

length ptid mpid 5 dx $10;

input ptid mpid dx;

cards;

1, 12, H41198

1, 15, C7781

2, 294, H677119

2, 300, G55181

;

run;

data codelist;

infile cards dlm=',';

length code_type $8 code $10 condition $15;

input code_type code condition;

trim_code=tranwrd(code,'% ',' ');

cards;

DX_INCL,C%,CANCER

DX_EXCL,G55181,STUFF

;

run;

data want (drop=L);

set claims codelist(obs=0);

if _n_=1 then do;

declare hash h (dataset:'codelist');

h.definekey('trim_code');

h.definedata(all:'Y');

h.definedone();

end;

do L=length(dx) to 1 by -1 until(h.find(key:substr(dx,1,L))=0);

end;

if L>0;

run;

The hash OUTPUT method will overwrite a SAS data set, but not append. That can be costly. Consider voting for Add a HASH object method which would append a hash object to an existing SAS data set

Would enabling PROC SORT to simultaneously output multiple datasets be useful? Then vote for

Allow PROC SORT to output multiple datasets

--------------------------

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

You have chosen a solution where the like string is longer.

Not too sure how you expect the run time to be faster.

Rather, I'd expect the fastest time might be reached by trimming both side of the like operator.

Please report the results. I am curious now.

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and save with the early bird rate—just $795!

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.