- Home

- /

- Programming

- /

- Programming

- /

- Re: SAS-Hive (Hadoop) Efficiency with type String

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Background

We have a lot of Hive tables that we query using SAS. The problem is that many of the Hive columns are type String. SAS wants to convert each and every column to $32767 which kills performance. We can set a default definition at the ODBC driver level, in our case $255. However, many of our columns are Y/N type flags or the like of one position -- which wastes 254 positions. Some of our tables are close to a billion rows. The majority of our columns are character, and we are using COMPRESS=CHAR. Even with compression, we've found that space is being wasted.

Objective

I want something more space efficient.

Current means

I have a work around, and it does save space but it has performance problems. More on that in a minute.

My work-around:

1. Run a normal SELECT column1, column2, ... columnN. All of the type String columns will default to $255.

2. Prior to the next production run, execute a utility program that reads the output from step 1 and finds the maximum length of each column and creates a dataset listing the column name, type, and length (modeled after the output one can get from exporting a SAS dataset layout using SAS Enterprise Guide).

3. In the next production run, a macro is run to read the layout created in step 2. The macro generates explicit pass through HQL that does the following:

- Selects the column

- Substrings the column using the length from the layout created in step 2.

- Casts the column as VARCHAR again using the length from the layout created in step 2.

For example, if the maximum length of a type String column in Hive named Gmid is 40, then "SELECT Gmid" becomes "CAST(SUBSTR(Gmid, 1, 40) AS CHAR(40)) AS Gmid".

As I say, the work-around does save space -- about 10 Gigabytes per run on the one job I've tried it on, and we have many jobs.

The problem

The problem is that performance suffers. I was hoping that since Hadoop disperses processing across multiple nodes (Hadoop servers) there would not be a performance degradation due to the CASTing and SUBSTRing, but, alas, no. My 3.5 hour job now runs in about 5 hours. I do save disk space but at the cost of performance. We already break the process into a dozen or so sub-processes run in parallel, one for each of our clients, so further sub-division and parallelization would be problematic from a maintenance and re-run/restart perspective.

The question

Here's where you come in. How can I accomplish the same thing more efficiently? I have my layout "metadata" so I know the column names, types, and max lengths in advance. Is there another (better) way? Please brainstorm with me.

I thank you in advance,

Jim

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

What if you set the length in the outer (SAS-side) SQL?

Too much data will be transmitted, but space will be OK.

I would also ask the question on Hadoop forums about why cast() and substr() kill the speed so much.

>The majority of our columns are character, and we are using COMPRESS=CHAR. Even with compression, we've found that space is being wasted.

That should not be the case, trailing spaces should not take much room at all. SAS is slow dealing with long strings though, so trimming the length asap is a must.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

What if you set the length in the outer (SAS-side) SQL?

Too much data will be transmitted, but space will be OK.

I would also ask the question on Hadoop forums about why cast() and substr() kill the speed so much.

>The majority of our columns are character, and we are using COMPRESS=CHAR. Even with compression, we've found that space is being wasted.

That should not be the case, trailing spaces should not take much room at all. SAS is slow dealing with long strings though, so trimming the length asap is a must.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@ChrisNZ wrote:

What if you set the length in the outer (SAS-side) SQL?

Too much data will be transmitted, but space will be OK.

@ChrisNZ, thanks for your response. I'm trying your suggestion now and will run some tests overnight. I will post the results.

I originally discounted this because I thought the slowness was caused by how much data had to be transmitted from Hadoop to the SAS server, but perhaps I was wrong.

@ChrisNZ wrote:

I would also ask the question on Hadoop forums about why cast() and substr() kill the speed so much.

Good thought. If I come up with anything, I will post here. I did run an overnight test last night after our server use went down, and I got a run only about 10 minutes longer than a regular run, so the performance hit may not be so great as I originally thought.

@ChrisNZ wrote:

>The majority of our columns are character, and we are using COMPRESS=CHAR. Even with compression, we've found that space is being wasted.

That should not be the case, trailing spaces should not take much room at all. SAS is slow dealing with long strings though, so trimming the length asap is a must.

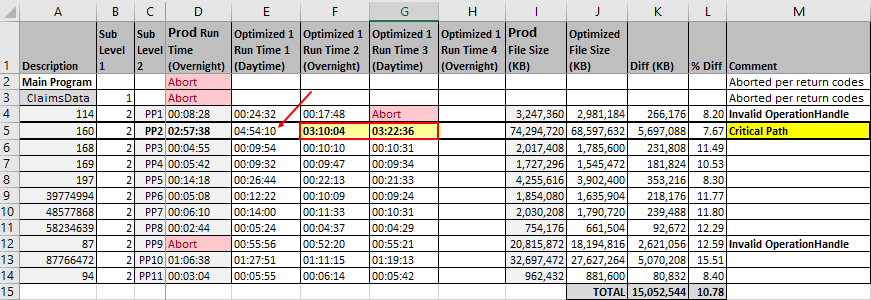

I'm finding about a 10% difference on average. In other words, I use about 10% less space if I optimize the column lengths as compared to simply relying on COMPRESS=CHAR. I pull in about 500 million rows in my monthly queries just for our Claims type (which is our largest category). I save about 15 GB of disk space per run if I optimize the columns. See chart, below.

Jim

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Update to @ChrisNZ and @LinusH:

I've done a couple more runs of my SUBSTR and CAST to character method of limiting columns of type String. See chart, below. I think something big must have been running yesterday which skewed the results. The following two runs are much closer to my 3 hour benchmark -- and each of them uses about 10% less disk space than a run without any optimization of column lengths.

Jim

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@ChrisNZ wrote:

What if you set the length in the outer (SAS-side) SQL?

@ChrisNZ, that turns out to do the trick. It is both faster than non-optimized SQL and takes 10% less disk space. The fact that my optimizing column widths via a LENGTH parameter on the SAS side SQL actually saves space makes me wonder if SAS character compression isn't quite as efficient as I thought it was.

I also mention these results to @LinusH who is part of this thread and to @SASKiwi, who I discussed some of these ideas on a separate thread about SQL Server, in case they might find them interesting.

Details are below for those who might be interested.

Jim

Steps

- Execute a normal Production run with non-optimized SQL.

- "Offline" (i.e. not as part of a Production run), run a macro that finds the maximum length of each column and creates a SAS dataset listing each column name, length, and type, one row per column I also add the schema and the table name to each row.

- Add a macro to my Production processing that generates optimized SQL based on the SAS dataset created in step 2. The optimization consists of a series of LENGTH parameters applied to the SAS side SQL. The "inner" query -- the query that goes to the database -- does not change.

- Execute the optimized SQL.

Results are shown in the table below. Synopsis:

- There is on average about a 10% savings on disk space.

- The critical path component of this particular Production process runs 30 to 40 minutes faster for the same data using optimized SAS side SQL.

- My previous attempt at optimization, using Substr and Cast to Char in the inner query, did have the same space savings but typically made the process run 10 to 30 minutes slower, and, in one case, 2 hours slower.

I am quite happy with these results. I thank you for your kind assistance.

Final note: This method is not without potential peril: If the data changes, truncation could result. But of course the same peril exists in Data steps or any SQL with Length statements. At least here this is a process that can be automated. In addition, I've added a 4 byte "safety margin" to each field. Hopefully our DBA's will keep us up to date, but I may modify my macro that determines the maximum length of each column such that it issues a warning if the data is using all positions allocated -- which indicate that the data has changed given that I added a 4 byte safety margin. I would need to run the macro periodically and investigate any such warnings.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Glad you have a solution that works.

The V9 engine's compression routine is old. If you really want good compression store your data using the SPDE engine. Its binary compression typically yields the best compression ratio (80% to 90% is common).

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@ChrisNZ wrote:

If you really want good compression store your data using the SPDE engine.

Well, I've seriously thought about it. The 11 processes I cite above run in parallel and produce 11 SAS data sets which are then combined via a Data step. If I wrote out the results each of the queries using the SPDE engine, I believe I could have all 11 processes writing to the same SPDE table, which would obviate the need for the follow-on Data step. The combined data set is then split by year into four separate data sets. These by year files could be created in parallel via RSUBMIT, all simultaneously pulling from the SPDE table. I believe fashioning the process flow in this manner could reduce the overall run time, but I have not (yet) experimented with it. As it is, the Data step that combines the 11 results sets writes out the four by year data sets as it reads each row, so it's not completely clear ahead of time what the overall performance benefit might be.

To your point, though, the disk space savings might warrant the use of SPDE even if I can't drop overall run time.

Jim

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

> I believe I could have all 11 processes writing to the same SPDE table

I don't think SPDE can do this on its own, like SPDS can.

SPDE is just a (better) data set engine. It'd be a nice feature if different processes could write different partitions, but that's much beyond the scope of the current engine afaik.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@ChrisNZ wrote:

> I believe I could have all 11 processes writing to the same SPDE table

I don't think SPDE can do this on its own, like SPDS can.

Ah. Well, thank you for that. Better I not go down a dead end.

It sounds like I need to pull out your book again and read through that section. It's been a while since I've looked at it. In practice, I find very few shops are using SPDE.

Jim

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

> I would need to run the macro periodically and investigate any such warnings.

You could maybe try putting your query in a WITH clause, and use that to add a final row to your extract, something like:

with Q1 as (select ... ),

select * from Q1

union

select max(length(VAR1)), max(length(VAR2)) from Q1 and then scan the last row for glitches before deleting it.

I have no idea if Hive would be clever enough not to read the table twice, like it should.

What's the bottleneck now? Network?

Lastly, my experience is similar to @LinusH 's: Impala was faster than Hive for retrieving data when I was using Hadoop.

I created a new ballot entry too.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@ChrisNZ wrote:

You could maybe try putting your query in a WITH clause, and use that to add a final row to your extract, something like:

with Q1 as (select ... ), select * from Q1 union select max(length(VAR1)), max(length(VAR2)) from Q1and then scan the last row for glitches before deleting it.

Good idea. I'd be afraid of a performance hit, but I won't know unless I try it.

@ChrisNZ wrote:

What's the bottleneck now? Network?

I don't have a definitive means to know, but I don't suspect the network. Were the network the bottleneck, then my SUBSTR and CAST AS CHAR optimization should have improved performance, which it did not. Indeed, performance degraded. I suspect that SAS's writing to overly large character fields is the performance bottleneck, a bottleneck that was alleviated when LENGTH statements were added to the "outer" (SAS side) query. With the addition of LENGTH statements, I save about 10% disk space, and run time is reduced by about 14%.

@ChrisNZ wrote:

Lastly, my experience is similar to @LinusH 's: Impala was faster than Hive for retrieving data when I was using Hadoop.

We aren't using Impala in this shop. I'll have to look into it though and see if it is available to us. I have not heretofore considered it.

Jim

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

As an alternative, have you looked at the option to use the SASFMT attribute in Hive?

Don't know it it's more efficient than CAST, bet perhaps worth a try?

Or the SAS option DBSASTYPE (i line with what @ChrisNZ is suggesting).

For general performance I would recommend giving Impala a try. On the site I worked at it was clearly faster than Hive. (But is has no support for SASFMT though...)

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Unfortunately, in this shop, I am restricted and cannot place SASFMT's in the tables themselves. 😞

I'm going to try the suggestion that @ChrisNZ made of setting a length on the outer SQL (the SAS portion of the SQL) and see how the performance compares.

I think using DBSASTYPE and the suggestion that @ChrisNZ made are doing roughly the same thing: Converting the Hive type String to Character with a specified length on the SAS side. However, if I can't get results with setting a LENGTH= as @ChrisNZ suggests, maybe I'll try using DBSASTYPE next. Thank you for that idea.

Jim

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.