- Home

- /

- Programming

- /

- Programming

- /

- Re: Matching rows based on previous observations

- RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

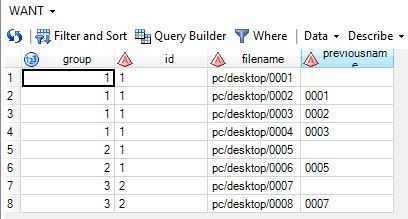

I want to match rows of the data based on a previous ID. For example, I have data looks like below.

I wan to form a group that matches filename of this row with previousname with next row.

Eventually, I want an output that looks like the one below.

Anyone can give me a help regarding this? Thanks in advance!

id group filename previousname

1 1 address.com/0001 .

1 1 address.com/0002 0001

1 1 address.com/0003 0002

1 1 address.com/0004 0003

1 2 address.com/0005 .

1 2 address.com/0006 0005

2 3 address.com/0007 .

2 3 address.com/0008 0007

data have;

length id filename $30 previousname $4.;

input id filename $ previousname $;

datalines;

1 pc/desktop/0001 .

1 pc/desktop/0002 0001

1 pc/desktop/0005 .

1 pc/desktop/0006 0005

1 pc/desktop/0003 0002

1 pc/desktop/0004 0003

2 pc/desktop/0008 0007

2 pc/desktop/0007 .

;

run;

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Both @Patrick's and my solutions will work as long as you adjust for the new variable lengths. e.g.:

data have;

length id filename $40 previousname $30.;

input id filename $ previousname $;

datalines;

1 data/1800/0001104659-09-000055.txt .

1 data/1800/0001104659-09-000005.txt 0001104659-09-000055.txt

1 data/1800/0001104659-09-000003.txt .

1 data/1800/0001104659-09-000006.txt 0001104659-09-000005.txt

1 data/1800/0001104659-09-000002.txt 0001104659-09-000006.txt

1 data/1800/0001104659-09-000004.txt 0001104659-09-000003.txt

2 data/1800/0001104659-09-000010.txt 0001104659-09-000007.txt

2 data/1800/0001104659-09-000007.txt .

;

data need;

set have nobs=num_obs;

length from $30;

from=scan(filename,-1,'/');

if _n_ eq 1 then call symput('num_obs',num_obs);

run;

data for_format (keep=start label fmtname type);

retain fmtname 'groups' type 'C';

set need;

array related(9999) $30.;

if missing(previousname) then do;

group+1;

call missing(of related(*));

j=1;

related(j)=from;

start=from;

label=put(group,4.);

output;

do i=1 to &num_obs.;

set need(keep=from previousname rename=(from=fcompare previousname=pcompare)) point=i;

if not missing(pcompare) then do;

if pcompare in related then do;

j+1;

related(j)=fcompare;

start=fcompare;

output;

end;

end;

end;

end;

run;

proc format cntlin = for_format;

run;

data want (drop=from);

set need;

if missing(previousname) then do;

group=put(from,$groups.);

subgroup=1;

end;

else do;

group=put(from,$groups.);

subgroup=2;

end;

run;

proc sort data=want out=want(drop=subgroup);

by group subgroup;

run;

/* *****Hash solution ******* */

data have;

length id filename $40 previousname $30.;

input id filename $ previousname $;

datalines;

1 data/1800/0001104659-09-000055.txt .

1 data/1800/0001104659-09-000005.txt 0001104659-09-000055.txt

1 data/1800/0001104659-09-000003.txt .

1 data/1800/0001104659-09-000006.txt 0001104659-09-000005.txt

1 data/1800/0001104659-09-000002.txt 0001104659-09-000006.txt

1 data/1800/0001104659-09-000004.txt 0001104659-09-000003.txt

2 data/1800/0001104659-09-000010.txt 0001104659-09-000007.txt

2 data/1800/0001104659-09-000007.txt .

;

data need;

set have;

length currentName $30;

currentName=scan(filename,-1,'/');

run;

data want;

group+1;

set need(where=(missing(previousname)));

output;

if _n_=1 then

do;

dcl hash h1(dataset:"need(where=(not missing(previousname)))");

h1.defineKey('previousname');

h1.defineData(all:'y');

h1.defineDone();

end;

do while(h1.find(key:currentName)=0);

output;

end;

drop currentName;

run;

Art, CEO, AnalystFinder.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

If I correctly understand what you have and want, then the following should do it:

data have;

length id filename $30 previousname $4.;

input id filename $ previousname $;

datalines;

1 pc/desktop/0001 .

1 pc/desktop/0002 0001

1 pc/desktop/0005 .

1 pc/desktop/0006 0005

1 pc/desktop/0003 0002

1 pc/desktop/0004 0003

2 pc/desktop/0008 0007

2 pc/desktop/0007 .

;

run;

proc sort data=have;

by id filename;

run;

data want (drop=_: name);

set have (rename=(previousname=_previousname));

name=scan(filename,-1,'/');

previousname=lag(name);

if missing(_previousname) then do;

group+1;

call missing(previousname);

end;

run;

Art, CEO, AnalystFinder.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Hi, Thanks for your reply!

I think I was not clear enough. Sorry about that.

In my example, it is happened to be that filename increases from 0001 to 0008, but it is not always the case.

So I cannot sort by id and filename and compare with lag value.

Maybe this example might give you better understanding.

data have; length id filename $30 previousname $4.; input id filename $ previousname $; datalines; 1 pc/desktop/0055 . 1 pc/desktop/0005 0055 1 pc/desktop/0003 . 1 pc/desktop/0006 0005 1 pc/desktop/0002 0005 1 pc/desktop/0004 0003 2 pc/desktop/0010 0007 2 pc/desktop/0007 . ; run;

id group filename previousname

1 1 address.com/0055 .

1 1 address.com/0005 0055

1 1 address.com/0006 0005

1 1 address.com/0002 0006

1 2 address.com/0003 .

1 2 address.com/0004 0003

2 3 address.com/0007 .

2 3 address.com/0010 0007

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

data have;

length id group filename $30 previousname $4.;

input id group filename $ previousname $;

drop group;

datalines;

1 1 address.com/0055 .

1 1 address.com/0005 0055

1 1 address.com/0006 0005

1 1 address.com/0002 0006

1 2 address.com/0003 .

1 2 address.com/0004 0003

2 3 address.com/0007 .

2 3 address.com/0010 0007

;

run;

data want;

set have;

name=scan(filename,-1,'/');

k=lag(name);

if _n_>1 and previousname ne k then group+1;

retain group 1;

drop k name;

run;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply.

But the input you used is the output that I want to get.

There is no group number in the data set.

What I need to do is to

(1) among observations with 'id'=1, find observation with missing 'previousname'

(2) find if there is any observation with 'id'=1 whose 'previousname' is 'name' I found in (1)

(3) find if there is any observation with 'id'=1 whose 'previousname is 'name'' I found in (2)

(4) do this process until there is no observation whose 'previousname' is other observations' 'name'

(5) then all the rows I found in the previous part form 'group' 1.

(6) then, among observations with 'id'=1, find another observation with missing 'previousname' and do (1)-(4), and this form 'group' 2.

(7) if there are no observations with missing 'previousname' with the same 'id', then move to 'id'=2 and do the same process.

this is what I want to do.. sorry for the complication.

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

If you noticed my data have earlier, I dropped your group variable from have. This was just for copy paste convenience. The want code is the one that created following that which creates the variable group.

Please give a sample of HAVE and WANT yet again. Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Difficult task, but one that I think the Hash object could be used to solve. Unfortunately, I'm not versed in that, thus stole some code that @Ksharp wrote for another question earlier this year. The code can probably be optimized for your task and, if someone can do that, I'd definitely like to see the more compact solution.

Regardless, I think that the following comes awfully close to meeting your requirements:

data have;

length id filename $30 previousname $4.;

input id filename $ previousname $;

datalines;

1 pc/desktop/0055 .

1 pc/desktop/0005 0055

1 pc/desktop/0003 .

1 pc/desktop/0006 0005

1 pc/desktop/0002 0005

1 pc/desktop/0004 0003

2 pc/desktop/0010 0007

2 pc/desktop/0007 .

;

data need1;

set have;

order=_n_;

from=scan(filename,-1,'/');

to=previousname;

if missing(to) then to=from;

run;

data full;

set need1 (keep=from to order) end=last;

if _n_ eq 1 then do;

declare hash h();

h.definekey('node');

h.definedata('node');

h.definedone();

end;

output;

node=from;

h.replace();

from=to;

to=node;

output;

node=from;

h.replace();

if last then h.output(dataset:'node');

drop node;

run;

data need2(keep=node household order rename=(node=from household=gp));

declare hash ha(ordered:'a');

declare hiter hi('ha');

ha.definekey('count');

ha.definedata('last');

ha.definedone();

declare hash _ha(hashexp: 20);

_ha.definekey('key');

_ha.definedone();

if 0 then set full;

declare hash from_to(dataset:'full(where=(from is not missing and to is not missing))',hashexp:20,multidata:'y');

from_to.definekey('from');

from_to.definedata('to');

from_to.definedone();

if 0 then set node;

declare hash no(dataset:'node');

declare hiter hi_no('no');

no.definekey('node');

no.definedata('node');

no.definedone();

do while(hi_no.next()=0);

household+1;

order+1;

output;

count=1;

key=node;

_ha.add();

last=node;

ha.add();

rc=hi.first();

do while(rc=0);

from=last;

rx=from_to.find();

do while(rx=0);

key=to;

ry=_ha.check();

if ry ne 0 then do;

node=to;

order+1;

output;

rr=no.remove(key:node);

key=to;_ha.add();

count+1;

last=to;

ha.add();

end;

rx=from_to.find_next();

end;

rc=hi.next();

end;

ha.clear();

_ha.clear();

end;

stop;

run;

proc sort data=need2;

by from;

run;

proc sort data=need1;

by from;

run;

data want;

merge need1 need2;

by from;

run;

proc sort data=want (keep=id filename previousname gp);

by id gp previousname;

run;

data want (drop=gp);

set want;

by id gp;

if first.gp then group+1;

run;

Art, CEO, AnalystFinder.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Under the assumption that there can't be more than one predecessor the following should work.

data have;

length id filename $30 previousname $4.;

input id filename $ previousname $;

length currentName $4;

currentName=scan(filename,-1,'/');

datalines;

1 pc/desktop/0001 .

1 pc/desktop/0002 0001

1 pc/desktop/0005 .

1 pc/desktop/0006 0005

1 pc/desktop/0003 0002

1 pc/desktop/0004 0003

2 pc/desktop/0008 0007

2 pc/desktop/0007 .

;

run;

data want;

group+1;

set have(where=(missing(previousname)));

output;

if _n_=1 then

do;

dcl hash h1(dataset:"have(where=(not missing(previousname)))");

h1.defineKey('previousname');

h1.defineData(all:'y');

h1.defineDone();

end;

do while(h1.find(key:currentName)=0);

output;

end;

drop currentName;

run;

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

@Patrick:Given the OP's second example dataset, your code drops one of the records. Can something be changed in your code to account for that? Here is @Sangho's second dataset:

data have; length id filename $30 previousname $4.; input id filename $ previousname $; length currentName $4; currentName=scan(filename,-1,'/'); datalines; 1 pc/desktop/0055 . 1 pc/desktop/0005 0055 1 pc/desktop/0003 . 1 pc/desktop/0006 0005 1 pc/desktop/0002 0005 1 pc/desktop/0004 0003 2 pc/desktop/0010 0007 2 pc/desktop/0007 . ;

Conversely, your code works just fine if @Sangho had erred in typing the fifth record of the second dataset:

1 pc/desktop/0002 0005

and actually meant it to be:

1 pc/desktop/0002 0006

My non-hash solution works correctly (I think) in both situations.

Art, CEO, AnalystFinder.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Here is a datastep solution that doesn't use the hash object. I'm sure that it runs slower than taking advantage of the hash object, but I think that the flow is easier to understand and modify. Instead of using a hash, it uses an array to create (and then apply) a format:

data have;

length id filename $30 previousname $4.;

input id filename $ previousname $;

datalines;

1 pc/desktop/0055 .

1 pc/desktop/0005 0055

1 pc/desktop/0003 .

1 pc/desktop/0006 0005

1 pc/desktop/0002 0005

1 pc/desktop/0004 0003

2 pc/desktop/0010 0007

2 pc/desktop/0007 .

;

data need;

set have nobs=num_obs;

from=scan(filename,-1,'/');

if _n_ eq 1 then call symput('num_obs',num_obs);

run;

data for_format (keep=start label fmtname type);

retain fmtname 'groups' type 'C';

set need;

array related(9999) $;

if missing(previousname) then do;

group+1;

call missing(of related(*));

j=1;

related(j)=from;

start=from;

label=put(group,4.);

output;

do i=1 to &num_obs.;

set need(keep=from previousname rename=(from=fcompare previousname=pcompare)) point=i;

if not missing(pcompare) then do;

if pcompare in related then do;

j+1;

related(j)=fcompare;

start=fcompare;

output;

end;

end;

end;

end;

run;

proc format cntlin = for_format;

run;

data want (drop=from);

set need;

if missing(previousname) then do;

group=put(from,$groups.);

subgroup=1;

end;

else do;

group=put(from,$groups.);

subgroup=2;

end;

run;

proc sort data=want out=want(drop=subgroup);

by group subgroup;

run;

Art, CEO, AnalystFinder.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much guys for your helpful suggestions.

First of all, sorry for the confusion. As @art297 pointed out, it should be

1 pc/desktop/0002 0006

instead of

1 pc/desktop/0002 0005

and there should not be any one-to-many match.

I think the codes work perfectly fine, but I faced one issue.

I used filename and previousname with some numeric values such as '0055' and '0003' for brevity,

in the actual data I have, filenames are like

data/1800/0001104659-09-017676.txt

data/1800/0001104659-10-014032.txt

and previousnnames are like

0001104659-09-017676.txt

0001104659-10-014032.txt

I think this prevents me from having the result I want when I use the code above.

Any suggestions how I modify the code to get the result when I have 'filename' and 'previousname' like that?

Also, there should be no matching when they have different 'id', so there won't be any case that one observation's 'previous name' matches with other observation's 'name' when these two observations have different 'id's. Is there anyway that reduce the processing time by limiting this matching process to those observation with the same 'id'. I have more than 250,000 observations, so it might take too long if it tries to find matching rows from the entire data.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Both @Patrick's and my solutions will work as long as you adjust for the new variable lengths. e.g.:

data have;

length id filename $40 previousname $30.;

input id filename $ previousname $;

datalines;

1 data/1800/0001104659-09-000055.txt .

1 data/1800/0001104659-09-000005.txt 0001104659-09-000055.txt

1 data/1800/0001104659-09-000003.txt .

1 data/1800/0001104659-09-000006.txt 0001104659-09-000005.txt

1 data/1800/0001104659-09-000002.txt 0001104659-09-000006.txt

1 data/1800/0001104659-09-000004.txt 0001104659-09-000003.txt

2 data/1800/0001104659-09-000010.txt 0001104659-09-000007.txt

2 data/1800/0001104659-09-000007.txt .

;

data need;

set have nobs=num_obs;

length from $30;

from=scan(filename,-1,'/');

if _n_ eq 1 then call symput('num_obs',num_obs);

run;

data for_format (keep=start label fmtname type);

retain fmtname 'groups' type 'C';

set need;

array related(9999) $30.;

if missing(previousname) then do;

group+1;

call missing(of related(*));

j=1;

related(j)=from;

start=from;

label=put(group,4.);

output;

do i=1 to &num_obs.;

set need(keep=from previousname rename=(from=fcompare previousname=pcompare)) point=i;

if not missing(pcompare) then do;

if pcompare in related then do;

j+1;

related(j)=fcompare;

start=fcompare;

output;

end;

end;

end;

end;

run;

proc format cntlin = for_format;

run;

data want (drop=from);

set need;

if missing(previousname) then do;

group=put(from,$groups.);

subgroup=1;

end;

else do;

group=put(from,$groups.);

subgroup=2;

end;

run;

proc sort data=want out=want(drop=subgroup);

by group subgroup;

run;

/* *****Hash solution ******* */

data have;

length id filename $40 previousname $30.;

input id filename $ previousname $;

datalines;

1 data/1800/0001104659-09-000055.txt .

1 data/1800/0001104659-09-000005.txt 0001104659-09-000055.txt

1 data/1800/0001104659-09-000003.txt .

1 data/1800/0001104659-09-000006.txt 0001104659-09-000005.txt

1 data/1800/0001104659-09-000002.txt 0001104659-09-000006.txt

1 data/1800/0001104659-09-000004.txt 0001104659-09-000003.txt

2 data/1800/0001104659-09-000010.txt 0001104659-09-000007.txt

2 data/1800/0001104659-09-000007.txt .

;

data need;

set have;

length currentName $30;

currentName=scan(filename,-1,'/');

run;

data want;

group+1;

set need(where=(missing(previousname)));

output;

if _n_=1 then

do;

dcl hash h1(dataset:"need(where=(not missing(previousname)))");

h1.defineKey('previousname');

h1.defineData(all:'y');

h1.defineDone();

end;

do while(h1.find(key:currentName)=0);

output;

end;

drop currentName;

run;

Art, CEO, AnalystFinder.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

There is only a small tweak to the code required if you want to limit the lookup to records with the same ID. Using a in-memory hash lookup I don't believe this will make a big change to performance though.

Below the code with the tweak using as starting point the code @art297 posted.

/* *****Hash solution ******* */

data have;

length id filename $40 previousname $30.;

input id filename $ previousname $;

datalines;

1 data/1800/0001104659-09-000055.txt .

1 data/1800/0001104659-09-000005.txt 0001104659-09-000055.txt

1 data/1800/0001104659-09-000003.txt .

1 data/1800/0001104659-09-000006.txt 0001104659-09-000005.txt

1 data/1800/0001104659-09-000002.txt 0001104659-09-000006.txt

1 data/1800/0001104659-09-000004.txt 0001104659-09-000003.txt

2 data/1800/0001104659-09-000010.txt 0001104659-09-000007.txt

2 data/1800/0001104659-09-000007.txt .

;

data need;

set have;

length currentName $30;

currentName=scan(filename,-1,'/');

run;

data want;

group+1;

set need(where=(missing(previousname)));

output;

if _n_=1 then

do;

dcl hash h1(dataset:"need(where=(not missing(previousname)))");

h1.defineKey('id','previousname');

h1.defineData(all:'y');

h1.defineDone();

end;

do while(h1.find(key:id,key:currentName)=0);

output;

end;

drop currentName;

run;- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

Thanks so much guys!

I both of the codes work great!

I really appreciate for your help.

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and save with the early bird rate—just $795!

Learn how use the CAT functions in SAS to join values from multiple variables into a single value.

Find more tutorials on the SAS Users YouTube channel.

SAS Training: Just a Click Away

Ready to level-up your skills? Choose your own adventure.