- Re: Alternatives to Proc AUTOREG for healthcare time series analysis

Hi All,

I am conducting a time series analysis of health care utilization rates. I understand PROC AUTOREG is the most appropriate option in SAS; however, I do not have SAS/ETS. I have read several articles that use PROC REG with the DWPROB option to test for autocorrelation. Other sources use PROC GLM or PROC GLIMMIX, but I do not think these can account for autocorrelation. We have designed this study well, but the intervention itself may not be strong. Are there any SAS alternatives to PROC AUTOREG (outside SAS/ETS) that I can use?

The PROC REG and PROC GLIMMIX models are:

```sas
PROC REG DATA = DATA;
  MODEL CHANGE = TIME POST TIME_AFTER / DWPROB;
RUN;

PROC GLIMMIX DATA = DATA;
  MODEL CHANGE = TIME POST TIME_AFTER / SOLUTION CHISQ CORRB;
  RANDOM _RESIDUAL_;
RUN;
```

The Durbin-Watson test returns a value of 1.980, which suggests to me that there is no first-order autocorrelation in the residuals.
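For reference, the Durbin-Watson statistic is d = Σ_{t=2..n} (e_t − e_{t−1})² / Σ_{t=1..n} e_t², computed from the OLS residuals e_t, and d ≈ 2(1 − r₁), where r₁ is the lag-1 autocorrelation of the residuals. A value of 1.980 therefore corresponds to r₁ ≈ 0.01. The sketch below reproduces the calculation by hand; it assumes the `DATA` data set from the post is already sorted by `TIME`, and the name `WORK.OLS_RESID` is made up for illustration.

```sas
/* Fit the same OLS model and keep the residuals (assumes DATA is sorted by TIME) */
proc reg data=data noprint;
  model change = time post time_after;
  output out=work.ols_resid r=resid;
run;
quit;

/* Durbin-Watson d and the implied lag-1 autocorrelation */
data _null_;
  set work.ols_resid end=last;
  retain num 0 den 0;
  prev = lag(resid);                        /* e(t-1); missing on the first row */
  if _n_ > 1 then num + (resid - prev)**2;  /* numerator: squared successive differences */
  den + resid**2;                           /* denominator: sum of squared residuals */
  if last then do;
    dw = num / den;
    r1 = 1 - dw / 2;                        /* approximate lag-1 autocorrelation */
    put 'Durbin-Watson d = ' dw 8.4 '   approx. r1 = ' r1 8.4;
  end;
run;
```

Note that the test only looks at first-order autocorrelation; a value near 2 says nothing about seasonal (for example lag-12) patterns in monthly data.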

PROC REG and PROC GLIMMIX produce the same results; I am not sure if this is correct.

This output would then suggest that the intervention resulted in a statistically significant, albeit very small, reduction in rates (-2%).

Looking forward to your ideas, questions, concerns, clarifications, all!

Description of data:

Change = difference in utilization rates between the intervention and control groups (INT_RATE minus CONTROL_RATE)

Time = sequential number for the time period (84 records, one for each month from January 2011 through December 2017)

Post = 0 for the "no intervention" period, 1 for the "intervention" period

Time_After = sequential month counter that is 0 before the intervention and starts at 1 in the first intervention month (01/01/2015)
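The three design variables can be generated from the month index alone. A minimal sketch, assuming 84 consecutive months starting in January 2011 and an intervention start in January 2015 (month 49); the data set name `WORK.DESIGN` is hypothetical:

```sas
/* Build the segmented-regression design variables for 84 monthly periods */
data work.design;
  do time = 1 to 84;
    effective_period = intnx('month', '01JAN2011'd, time - 1);  /* first day of each month */
    post       = (effective_period >= '01JAN2015'd);            /* 0 before, 1 from Jan 2015 */
    time_after = max(0, time - 48);                             /* 1 in Jan 2015, 36 in Dec 2017 */
    output;
  end;
  format effective_period mmddyy10.;
run;
```

In the usual segmented-regression reading, the TIME coefficient is the pre-intervention slope, POST is the change in level in January 2015, and TIME_AFTER is the change in slope after the intervention.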

Full data below:

| SRC | TIME | EFFECTIVE_PERIOD | POST | PERIOD | INT_RATE | CONTROL_RATE | INT_CLAIMS | CONTROL_CLAIMS | CHANGE | CLAIMS_CHANGE | TIME_AFTER |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ALL | 1 | 1/1/2011 | 0 | NO INTERVENTION | 4.6 | 4.06 | 1377 | 1219 | 0.54 | 158 | 0 |
| ALL | 2 | 2/1/2011 | 0 | NO INTERVENTION | 3.91 | 3.67 | 1265 | 1111 | 0.24 | 154 | 0 |
| ALL | 3 | 3/1/2011 | 0 | NO INTERVENTION | 4.6 | 4.43 | 1566 | 1440 | 0.17 | 126 | 0 |
| ALL | 4 | 4/1/2011 | 0 | NO INTERVENTION | 3.42 | 3.86 | 1137 | 1155 | -0.44 | -18 | 0 |
| ALL | 5 | 5/1/2011 | 0 | NO INTERVENTION | 3.88 | 4.04 | 1339 | 1274 | -0.16 | 65 | 0 |
| ALL | 6 | 6/1/2011 | 0 | NO INTERVENTION | 3.69 | 4.28 | 1182 | 1207 | -0.59 | -25 | 0 |
| ALL | 7 | 7/1/2011 | 0 | NO INTERVENTION | 3.79 | 3.56 | 1208 | 1027 | 0.23 | 181 | 0 |
| ALL | 8 | 8/1/2011 | 0 | NO INTERVENTION | 3.62 | 3.62 | 1229 | 1102 | 0 | 127 | 0 |
| ALL | 9 | 9/1/2011 | 0 | NO INTERVENTION | 4.57 | 4.15 | 1386 | 1129 | 0.42 | 257 | 0 |
| ALL | 10 | 10/1/2011 | 0 | NO INTERVENTION | 4.47 | 4.23 | 1354 | 1220 | 0.24 | 134 | 0 |
| ALL | 11 | 11/1/2011 | 0 | NO INTERVENTION | 4.86 | 4.24 | 1459 | 1256 | 0.62 | 203 | 0 |
| ALL | 12 | 12/1/2011 | 0 | NO INTERVENTION | 3.94 | 3.85 | 1233 | 1129 | 0.08 | 104 | 0 |
| ALL | 13 | 1/1/2012 | 0 | NO INTERVENTION | 4.59 | 3.84 | 1473 | 1227 | 0.75 | 246 | 0 |
| ALL | 14 | 2/1/2012 | 0 | NO INTERVENTION | 4.41 | 4.1 | 1369 | 1333 | 0.31 | 36 | 0 |
| ALL | 15 | 3/1/2012 | 0 | NO INTERVENTION | 4.51 | 4.57 | 1487 | 1405 | -0.07 | 82 | 0 |
| ALL | 16 | 4/1/2012 | 0 | NO INTERVENTION | 4.18 | 4.02 | 1346 | 1310 | 0.16 | 36 | 0 |
| ALL | 17 | 5/1/2012 | 0 | NO INTERVENTION | 4.97 | 4.65 | 1664 | 1411 | 0.33 | 253 | 0 |
| ALL | 18 | 6/1/2012 | 0 | NO INTERVENTION | 4.62 | 3.81 | 1409 | 1228 | 0.81 | 181 | 0 |
| ALL | 19 | 7/1/2012 | 0 | NO INTERVENTION | 3.9 | 4.17 | 1254 | 1269 | -0.27 | -15 | 0 |
| ALL | 20 | 8/1/2012 | 0 | NO INTERVENTION | 4.21 | 3.74 | 1267 | 1151 | 0.47 | 116 | 0 |
| ALL | 21 | 9/1/2012 | 0 | NO INTERVENTION | 3.82 | 4.14 | 1104 | 1221 | -0.32 | -117 | 0 |
| ALL | 22 | 10/1/2012 | 0 | NO INTERVENTION | 4.39 | 4.27 | 1223 | 1276 | 0.13 | -53 | 0 |
| ALL | 23 | 11/1/2012 | 0 | NO INTERVENTION | 3.95 | 4.05 | 1128 | 1156 | -0.11 | -28 | 0 |
| ALL | 24 | 12/1/2012 | 0 | NO INTERVENTION | 3.63 | 3.34 | 996 | 978 | 0.29 | 18 | 0 |
| ALL | 25 | 1/1/2013 | 0 | NO INTERVENTION | 5.12 | 3.88 | 1457 | 1025 | 1.24 | 432 | 0 |
| ALL | 26 | 2/1/2013 | 0 | NO INTERVENTION | 3.56 | 3.18 | 1106 | 894 | 0.38 | 212 | 0 |
| ALL | 27 | 3/1/2013 | 0 | NO INTERVENTION | 4.02 | 3.73 | 1206 | 1018 | 0.29 | 188 | 0 |
| ALL | 28 | 4/1/2013 | 0 | NO INTERVENTION | 4.37 | 4.09 | 1337 | 1130 | 0.28 | 207 | 0 |
| ALL | 29 | 5/1/2013 | 0 | NO INTERVENTION | 4.12 | 3.91 | 1204 | 1121 | 0.21 | 83 | 0 |
| ALL | 30 | 6/1/2013 | 0 | NO INTERVENTION | 3.86 | 3.58 | 1037 | 983 | 0.27 | 54 | 0 |
| ALL | 31 | 7/1/2013 | 0 | NO INTERVENTION | 3.83 | 3.89 | 1076 | 1022 | -0.06 | 54 | 0 |
| ALL | 32 | 8/1/2013 | 0 | NO INTERVENTION | 3.96 | 4.07 | 1000 | 1118 | -0.11 | -118 | 0 |
| ALL | 33 | 9/1/2013 | 0 | NO INTERVENTION | 4.7 | 3.93 | 1084 | 991 | 0.77 | 93 | 0 |
| ALL | 34 | 10/1/2013 | 0 | NO INTERVENTION | 4.7 | 4.13 | 1213 | 1147 | 0.57 | 66 | 0 |
| ALL | 35 | 11/1/2013 | 0 | NO INTERVENTION | 4.24 | 3.19 | 1098 | 872 | 1.05 | 226 | 0 |
| ALL | 36 | 12/1/2013 | 0 | NO INTERVENTION | 3.71 | 3.16 | 935 | 831 | 0.54 | 104 | 0 |
| ALL | 37 | 1/1/2014 | 0 | NO INTERVENTION | 4.77 | 3.55 | 1263 | 891 | 1.22 | 372 | 0 |
| ALL | 38 | 2/1/2014 | 0 | NO INTERVENTION | 3.92 | 3.33 | 999 | 903 | 0.59 | 96 | 0 |
| ALL | 39 | 3/1/2014 | 0 | NO INTERVENTION | 5.26 | 4.54 | 1406 | 1135 | 0.72 | 271 | 0 |
| ALL | 40 | 4/1/2014 | 0 | NO INTERVENTION | 5.01 | 4.64 | 1339 | 1219 | 0.37 | 120 | 0 |
| ALL | 41 | 5/1/2014 | 0 | NO INTERVENTION | 5.18 | 4.69 | 1317 | 1203 | 0.49 | 114 | 0 |
| ALL | 42 | 6/1/2014 | 0 | NO INTERVENTION | 4.48 | 4.39 | 1175 | 1061 | 0.09 | 114 | 0 |
| ALL | 43 | 7/1/2014 | 0 | NO INTERVENTION | 4.39 | 4.36 | 1163 | 1092 | 0.03 | 71 | 0 |
| ALL | 44 | 8/1/2014 | 0 | NO INTERVENTION | 3.72 | 3.81 | 1033 | 1003 | -0.09 | 30 | 0 |
| ALL | 45 | 9/1/2014 | 0 | NO INTERVENTION | 5.39 | 4.18 | 1415 | 1079 | 1.21 | 336 | 0 |
| ALL | 46 | 10/1/2014 | 0 | NO INTERVENTION | 5.18 | 4.41 | 1381 | 1210 | 0.77 | 171 | 0 |
| ALL | 47 | 11/1/2014 | 0 | NO INTERVENTION | 4.09 | 3.56 | 1155 | 923 | 0.53 | 232 | 0 |
| ALL | 48 | 12/1/2014 | 0 | NO INTERVENTION | 4.72 | 3.87 | 1278 | 1079 | 0.85 | 199 | 0 |
| ALL | 49 | 1/1/2015 | 1 | INTERVENTION | 5.15 | 4.22 | 1418 | 1106 | 0.93 | 312 | 1 |
| ALL | 50 | 2/1/2015 | 1 | INTERVENTION | 4.3 | 3.73 | 1174 | 994 | 0.57 | 180 | 2 |
| ALL | 51 | 3/1/2015 | 1 | INTERVENTION | 6.13 | 4.56 | 1631 | 1221 | 1.57 | 410 | 3 |
| ALL | 52 | 4/1/2015 | 1 | INTERVENTION | 5.42 | 4.66 | 1473 | 1300 | 0.77 | 173 | 4 |
| ALL | 53 | 5/1/2015 | 1 | INTERVENTION | 4.74 | 3.83 | 1276 | 1150 | 0.91 | 126 | 5 |
| ALL | 54 | 6/1/2015 | 1 | INTERVENTION | 4.81 | 4.15 | 1325 | 1144 | 0.66 | 181 | 6 |
| ALL | 55 | 7/1/2015 | 1 | INTERVENTION | 4.81 | 4.25 | 1391 | 1131 | 0.56 | 260 | 7 |
| ALL | 56 | 8/1/2015 | 1 | INTERVENTION | 4.49 | 4.44 | 1278 | 1221 | 0.05 | 57 | 8 |
| ALL | 57 | 9/1/2015 | 1 | INTERVENTION | 4.53 | 4.46 | 1252 | 1135 | 0.07 | 117 | 9 |
| ALL | 58 | 10/1/2015 | 1 | INTERVENTION | 5.52 | 4.61 | 1493 | 1245 | 0.91 | 248 | 10 |
| ALL | 59 | 11/1/2015 | 1 | INTERVENTION | 4.59 | 4.33 | 1268 | 1200 | 0.26 | 68 | 11 |
| ALL | 60 | 12/1/2015 | 1 | INTERVENTION | 4.68 | 4.63 | 1363 | 1220 | 0.05 | 143 | 12 |
| ALL | 61 | 1/1/2016 | 1 | INTERVENTION | 4.81 | 4.2 | 1267 | 1168 | 0.61 | 99 | 13 |
| ALL | 62 | 2/1/2016 | 1 | INTERVENTION | 4.36 | 4.76 | 1241 | 1258 | -0.41 | -17 | 14 |
| ALL | 63 | 3/1/2016 | 1 | INTERVENTION | 6.01 | 4.84 | 1679 | 1370 | 1.17 | 309 | 15 |
| ALL | 64 | 4/1/2016 | 1 | INTERVENTION | 4.94 | 4.73 | 1408 | 1379 | 0.2 | 29 | 16 |
| ALL | 65 | 5/1/2016 | 1 | INTERVENTION | 4.84 | 4.49 | 1407 | 1263 | 0.35 | 144 | 17 |
| ALL | 66 | 6/1/2016 | 1 | INTERVENTION | 5.9 | 5.07 | 1472 | 1415 | 0.84 | 57 | 18 |
| ALL | 67 | 7/1/2016 | 1 | INTERVENTION | 5.06 | 4.73 | 1316 | 1344 | 0.33 | -28 | 19 |
| ALL | 68 | 8/1/2016 | 1 | INTERVENTION | 5.59 | 4.98 | 1454 | 1338 | 0.61 | 116 | 20 |
| ALL | 69 | 9/1/2016 | 1 | INTERVENTION | 4.99 | 5.14 | 1321 | 1271 | -0.16 | 50 | 21 |
| ALL | 70 | 10/1/2016 | 1 | INTERVENTION | 5.09 | 4.56 | 1343 | 1310 | 0.53 | 33 | 22 |
| ALL | 71 | 11/1/2016 | 1 | INTERVENTION | 4.95 | 4.41 | 1336 | 1174 | 0.54 | 162 | 23 |
| ALL | 72 | 12/1/2016 | 1 | INTERVENTION | 4.61 | 4.43 | 1289 | 1234 | 0.18 | 55 | 24 |
| ALL | 73 | 1/1/2017 | 1 | INTERVENTION | 4.4 | 4.58 | 1185 | 1356 | -0.18 | -171 | 25 |
| ALL | 74 | 2/1/2017 | 1 | INTERVENTION | 4.66 | 4.44 | 1247 | 1187 | 0.22 | 60 | 26 |
| ALL | 75 | 3/1/2017 | 1 | INTERVENTION | 5.59 | 4.89 | 1480 | 1399 | 0.7 | 81 | 27 |
| ALL | 76 | 4/1/2017 | 1 | INTERVENTION | 4.24 | 4.33 | 1170 | 1244 | -0.09 | -74 | 28 |
| ALL | 77 | 5/1/2017 | 1 | INTERVENTION | 5.7 | 4.64 | 1496 | 1336 | 1.06 | 160 | 29 |
| ALL | 78 | 6/1/2017 | 1 | INTERVENTION | 5.16 | 4.46 | 1451 | 1270 | 0.7 | 181 | 30 |
| ALL | 79 | 7/1/2017 | 1 | INTERVENTION | 4.41 | 3.92 | 1318 | 1200 | 0.49 | 118 | 31 |
| ALL | 80 | 8/1/2017 | 1 | INTERVENTION | 5.3 | 4.2 | 1455 | 1268 | 1.11 | 187 | 32 |
| ALL | 81 | 9/1/2017 | 1 | INTERVENTION | 5.17 | 4.65 | 1434 | 1276 | 0.52 | 158 | 33 |
| ALL | 82 | 10/1/2017 | 1 | INTERVENTION | 5.05 | 5.15 | 1379 | 1421 | -0.1 | -42 | 34 |
| ALL | 83 | 11/1/2017 | 1 | INTERVENTION | 5.27 | 4.89 | 1316 | 1367 | 0.38 | -51 | 35 |
| ALL | 84 | 12/1/2017 | 1 | INTERVENTION | 4.35 | 4.2 | 1243 | 1196 | 0.15 | 47 | 36 |

Thank you!

Some References:

1. Wong, EC. Analysing Phased Intervention with Segmented Regression and Stepped Wedge Designs (uses PROC GLIMMIX): https://www.lexjansen.com/wuss/2014/74_Final_Paper_PDF.pdf

2. Penfold, R. Use of Interrupted Time Series Analysis in Evaluating Health Care Quality Improvements (uses PROC AUTOREG): https://www.academicpedsjnl.net/article/S1876-2859(13)00210-6/pdf

Accepted Solutions

What's missing in your first reference? It seems to cover the use of GLIMMIX with AR(1) covariance structure for segmented models quite well.
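A minimal version of that GLIMMIX specification for the monthly series, assuming the `DATA` data set and variables from the original post: the `TYPE=AR(1)` option on the `RANDOM _RESIDUAL_` statement adds a first-order autoregressive correlation among the residuals, which is what the plain `RANDOM _RESIDUAL_;` statement in the original code lacks. As far as I understand GLIMMIX, without a repeated effect the AR(1) structure follows the row order of the data, so the sketch sorts by `TIME` first; `WORK.POP` is a made-up name.

```sas
/* R-side AR(1) follows the row order, so put the months in time order */
proc sort data=data out=work.pop;
  by time;
run;

proc glimmix data=work.pop;
  model change = time post time_after / solution;
  random _residual_ / type=ar(1);   /* AR(1) correlation among the monthly residuals */
run;
```

The Covariance Parameter Estimates table then reports an AR(1) row (the estimated lag-1 correlation) and a Residual row (the residual variance).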

Thank you, I overlooked including that in my logic. The estimate for Time_After is -0.0223 (p = 0.0105).

How should I interpret the AR(1) output:

AR(1) estimate: 0.05051

AR(1) SE: 0.115

Residual estimate: 0.1655

Residual SE: 0.026

Thank you for your help.

About AR(1) estimate

The estimate ± SE interval includes zero, so I would consider the autocorrelation insignificant. Keep it in the model anyway; it doesn't hurt.
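A slightly more formal version of the same check is a Wald test, z = estimate / SE. For the values posted above, z = 0.05051 / 0.115 ≈ 0.44, well below 1.96. The data step below computes z, a two-sided normal p-value, and approximate 95% limits; treat it as a rough large-sample check rather than an exact test.

```sas
data _null_;
  est = 0.05051;                      /* AR(1) estimate from the post above */
  se  = 0.115;                        /* its standard error */
  z   = est / se;                     /* Wald z statistic */
  p   = 2 * (1 - probnorm(abs(z)));   /* two-sided p-value, normal approximation */
  lo  = est - 1.96 * se;              /* approximate 95% confidence limits */
  hi  = est + 1.96 * se;
  put z= 8.4 p= 8.4 lo= 8.4 hi= 8.4;
run;
```

Here the two-sided p-value is about 0.66 and the 95% interval runs from about -0.175 to 0.276, so the conclusion is the same as with the ± SE rule.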

Thanks again for the clarification.

I had to update the methodology for counting visits in our process, which changed the values of our outcome variable.

Running the program again, I get an AR(1) estimate of 0.3354 (SE 0.1134). Would this mean that there is autocorrelation?

I am using PROC GLIMMIX to run the statistics; it is the only one of these procedures I have access to. Code below:

```sas
PROC GLIMMIX DATA = OUTFILE._MEDS0_&SRC. PLOTS = RESIDUALPANEL;
  MODEL ENCOUNTERS_DIFF = TIME POST TIME_AFTER POST*TIME_AFTER / SOLUTION CHISQ CORRB;
  OUTPUT OUT = _GLIMMIX_POPULATION_&SRC. PRED = REGPRED;
  ODS OUTPUT PARAMETERESTIMATES = _MEDS0_&SRC._GLIMMIX;
  RANDOM _RESIDUAL_ / TYPE = AR(1);
  TITLE "PROC GLIMMIX - REGRESSION FOR: &SRC.";
RUN;
```

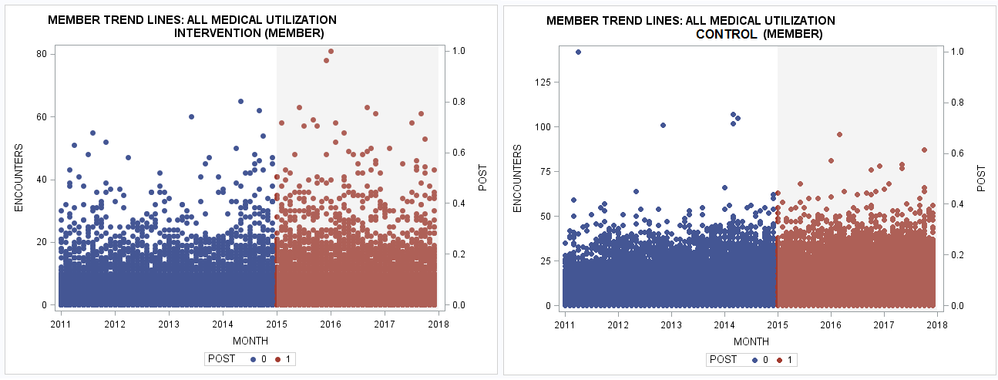

Essentially, this is a time series comparing an intervention group and a control group over 84 months (2011-2017). The intervention began in January 2015 (month 49). The goal is to evaluate the impact of the intervention on the intervention group, compared both with its own pre-intervention period and with the comparison group.
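One thing worth checking in this model: per the variable definitions in the first post, TIME_AFTER is 0 in every pre-intervention month and POST is 1 in every post-intervention month, so POST*TIME_AFTER equals TIME_AFTER row by row. The two columns are then perfectly collinear, and I would expect GLIMMIX to report the later one as non-estimable (estimate 0, missing standard error). A quick check and a refit without the interaction, assuming the data set and macro variable `&SRC` from the code above; `WORK.CHECK` is a hypothetical name:

```sas
/* Flag any row where POST*TIME_AFTER differs from TIME_AFTER */
data work.check;
  set outfile._meds0_&src.;
  differs = (post * time_after ne time_after);
run;

proc means data=work.check sum;
  var differs;      /* a sum of 0 means the interaction column duplicates TIME_AFTER */
run;

/* Same model without the redundant interaction */
proc glimmix data=outfile._meds0_&src.;
  model encounters_diff = time post time_after / solution;
  random _residual_ / type=ar(1);
run;
```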

If the AR(1) estimate shows autocorrelation, is this model appropriate? Also, the AIC is 970, with a chi-square of 8847.

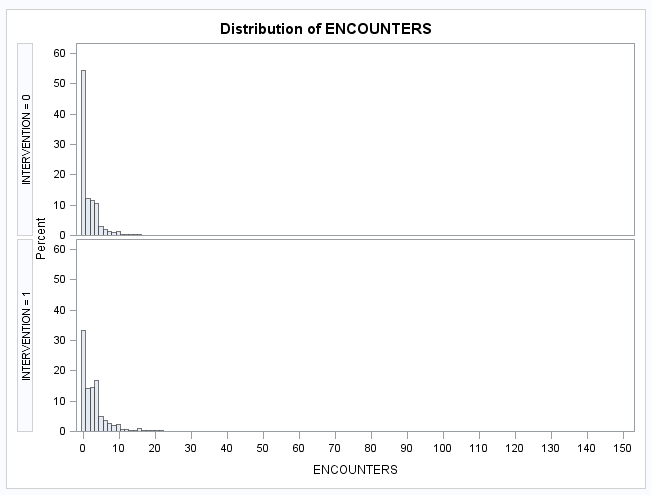

I also have the data at the individual level, but there the AR(1) estimate increases and the AIC skyrockets. The distribution also isn't Gaussian; it looks negative binomial to me (as is common for health care utilization data).

Thoughts on how to handle this panel/time series data?

Thank you for your help!

That the AR(1) is significant is not a problem at all. Quite the contrary: modelling it keeps your inference valid in the presence of first-order autocorrelation.

AIC (or better, AICC) is useful for comparing models fitted to the same data. It cannot be used to compare models fitted to different datasets.
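For example, to compare covariance structures on the same monthly series, fit the same fixed effects with different `TYPE=` options and compare the AICC values in the Fit Statistics tables. Because the fixed effects are identical, the REML-based criteria GLIMMIX reports for a normal model should be comparable across these fits; they would not be if the fixed effects differed. A sketch, assuming a population-level data set `WORK.POP` (hypothetical name) sorted by `TIME`, with the variables from the first post:

```sas
/* Independent errors (same as the original RANDOM _RESIDUAL_; statement) */
proc glimmix data=work.pop;
  model change = time post time_after / solution;
  random _residual_;
  ods output fitstatistics=work.fit_indep;
run;

/* First-order autoregressive errors */
proc glimmix data=work.pop;
  model change = time post time_after / solution;
  random _residual_ / type=ar(1);
  ods output fitstatistics=work.fit_ar1;
run;

/* ARMA(1,1) errors */
proc glimmix data=work.pop;
  model change = time post time_after / solution;
  random _residual_ / type=arma(1,1);
  ods output fitstatistics=work.fit_arma;
run;

/* Stack the three Fit Statistics tables, labelled by structure */
data work.fit_all;
  length structure $10;
  set work.fit_indep(in=a) work.fit_ar1(in=b) work.fit_arma(in=c);
  if a then structure = 'Indep';
  else if b then structure = 'AR(1)';
  else structure = 'ARMA(1,1)';
run;

proc print data=work.fit_all noobs;
run;
```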

If your residuals are far from normal, you can either try to transform the data, or try fitting the data to a different distribution.

hth

Thanks again.

I am using health care utilization data, which typically consists of a few scattered high utilizers, many low utilizers, and many zeros.

This is count data per person per month for 84 months (N = 6,017 persons). How would you assess the distribution here?

Raw data:

Histograms would be a lot more useful for guessing the possible distribution(s).
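Alongside a histogram, two quick numbers help: a Poisson model implies variance ≈ mean, so a variance far above the mean points to overdispersion, and a single Poisson mean m predicts a share of zeros of about exp(−m), a rough benchmark for the observed share. A sketch, assuming the member-month data set `OUTFILE.RATES_MEDS00_&SRC.` and outcome `ENCOUNTERS` from the member-level code later in this thread; `WORK.ZERO_FLAG` is a made-up name:

```sas
/* Indicator for zero counts, so its mean is the observed share of zeros */
data work.zero_flag;
  set outfile.rates_meds00_&src.(keep=encounters);
  is_zero = (encounters = 0);
run;

/* Mean vs variance of the counts, and the share of zeros */
proc means data=work.zero_flag n mean var max;
  var encounters is_zero;
run;

/* Histogram with one bar per count value */
proc sgplot data=work.zero_flag;
  histogram encounters / binwidth=1;
run;
```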

Apologies, please see below.

Poisson or negative binomial? How would you proceed?

Some thoughts:

1. The data are first-order autoregressive (i.e., what happens last month influences this month). I rolled the data up to the year-quarter, and they remain autoregressive. I am not surprised; there is no way around it, as this is real-world healthcare data. I still need something like PROC AUTOREG: can I transform the data, or account for the autocorrelation in the estimates somehow, akin to a two-step model? 😞

2. Most members have zero utilization in any given month. Would it make sense to roll members up to the year-quarter, or simply to the year, to reduce the number of zeros?
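On point 1: a classic two-step correction that needs only SAS/STAT is the Cochrane-Orcutt procedure. Fit OLS, estimate ρ by regressing each residual on the previous one, quasi-difference the outcome and every regressor (y*_t = y_t − ρ·y_{t−1}), and refit by OLS. This is a textbook alternative, not what the thread settled on (GLIMMIX with AR(1) residuals), and it applies to the population-level Gaussian series rather than to member-level counts. A sketch, assuming a data set `WORK.POP` (hypothetical) sorted by `TIME` with the variables from the first post:

```sas
/* Step 1: OLS fit, keeping the residuals */
proc reg data=work.pop noprint;
  model change = time post time_after;
  output out=work.ols r=resid;
run;
quit;

/* Step 2: rho = slope of e(t) on e(t-1), no intercept */
data work.ols_lag;
  set work.ols;
  resid_lag = lag(resid);            /* missing for the first month */
run;

proc reg data=work.ols_lag outest=work.rho noprint;
  model resid = resid_lag / noint;
run;
quit;

/* Step 3: quasi-difference the outcome and regressors, drop month 1 */
data work.co;
  if _n_ = 1 then set work.rho(keep=resid_lag rename=(resid_lag=rho));
  set work.pop;
  change_s     = change     - rho * lag(change);
  time_s       = time       - rho * lag(time);
  post_s       = post       - rho * lag(post);
  time_after_s = time_after - rho * lag(time_after);
  if _n_ > 1;                        /* the first month has no lagged values */
run;

/* Step 4: OLS on the transformed data; DW should now be close to 2 */
proc reg data=work.co;
  model change_s = time_s post_s time_after_s / dw;
run;
quit;
```

The slope estimates from step 4 are on the original scale, while its intercept estimates β0(1 − ρ). Repeating steps 1 to 3 with the updated coefficients until ρ stabilizes gives the iterated version.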

Thanks for your help!

Real-life data is never pure Poisson, except maybe radioactive counts. So if you try Poisson, add an overdispersion term. The very long tail, however, suggests a negative binomial distribution. In either case, there appears to be an overabundance of zeros, so if you consistently get poor fits at zero, you should try fitting a zero-inflation term.

My strategy would be: start from the simplest model (Poisson), add overdispersion, then add zero inflation, then switch to NB and ZINB. Use AICC to compare the fits.
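In GLIMMIX, the Poisson and negative binomial steps can be fitted with true likelihoods, so that their AICC values are comparable, by using METHOD=LAPLACE with a member-level random intercept (my addition, to reflect repeated months per member). Two caveats as I understand GLIMMIX: an overdispersion term added through `RANDOM _RESIDUAL_;` switches the fit to pseudo-likelihood, whose fit statistics cannot be compared with these; and GLIMMIX has no built-in zero-inflated distribution. A sketch, assuming the data set and variables from the member-level code later in the thread (`OUTFILE.RATES_MEDS00_&SRC.`, `ENCOUNTERS`, `MEMBNO`):

```sas
/* Poisson with a random intercept per member (Laplace: true likelihood) */
proc glimmix data=outfile.rates_meds00_&src. method=laplace;
  class membno;
  model encounters = time post time_after / dist=poisson link=log solution;
  random intercept / subject=membno;
  ods output fitstatistics=work.fit_pois;
run;

/* Same model with a negative binomial response */
proc glimmix data=outfile.rates_meds00_&src. method=laplace;
  class membno;
  model encounters = time post time_after / dist=negbin link=log solution;
  random intercept / subject=membno;
  ods output fitstatistics=work.fit_nb;
run;

/* Compare the AICC rows (smaller is better) */
proc print data=work.fit_pois; run;
proc print data=work.fit_nb;   run;
```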

If you have access to SAS/ETS, use PROC COUNTREG. Otherwise, use GLIMMIX.
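Since PROC COUNTREG is part of SAS/ETS, one option for the zero-inflated steps that stays within SAS/STAT is PROC GENMOD, which supports DIST=ZIP and DIST=ZINB with a ZEROMODEL statement and reports AICC in its goodness-of-fit table. Two hedges: here GENMOD treats every member-month as independent, so standard errors will be too small for repeated measures on the same people (use it to compare distributions, not for final inference), and, as far as I know, its REPEATED (GEE) statement is not available for the zero-inflated distributions. A sketch, assuming the member-month data set from the member-level code later in the thread:

```sas
/* Zero-inflated Poisson; ZEROMODEL with no effects = constant zero-inflation probability */
proc genmod data=outfile.rates_meds00_&src.;
  model encounters = time post time_after / dist=zip link=log;
  zeromodel;
  ods output modelfit=work.fit_zip;
run;

/* Zero-inflated negative binomial */
proc genmod data=outfile.rates_meds00_&src.;
  model encounters = time post time_after / dist=zinb link=log;
  zeromodel;
  ods output modelfit=work.fit_zinb;
run;
```

For a like-for-like comparison, the plain DIST=POISSON and DIST=NEGBIN fits can be run in GENMOD the same way, so all four AICC values come from models with the same (independence) structure.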

That said, I was wondering whether many of your care utilisation counts refer to the same people over time, and if so, whether you should account for that.

THANK YOU SO MUCH! I was exploring those options, but I confused myself. I'm going to start fresh with that plan of action.

Unfortunately, I don't have SAS/ETS, so GLIMMIX it is. I will follow your guidance and report out.

I am looking at the data in two ways: (1) population level, and (2) individual level.

At the population level, there are 84 records, one for each month of the study, with columns indicating when the intervention started, utilization for the intervention and control groups, the difference in utilization rates between intervention and control, and a counter for the time after the intervention. Our outcome variable here is the difference in utilization rates. The rates are approximately normally distributed, but they still arise from count data. However, the difference is always negative: the intervention rates are always lower than the control rates. Are Poisson, negative binomial, and/or ZINB still appropriate for negative values?

At the population level, the GLIMMIX code is as follows:

```sas
PROC GLIMMIX DATA = OUTFILE._MEDS1_&SRC. PLOTS = RESIDUALPANEL;
  MODEL RATE_DIFF = TIME POST TIME_AFTER POST*TIME_AFTER / SOLUTION CHISQ CORRB;
  OUTPUT OUT = _GLIMMIX_POPULATION_&SRC. PRED = PREDICTED RESID = RESIDUALS;
  ODS OUTPUT PARAMETERESTIMATES = _MEDS0_&SRC._GLIMMIX;
  RANDOM _RESIDUAL_ / TYPE = ARMA(1,1);
  TITLE "PROC GLIMMIX - REGRESSION FOR: &SRC.";
RUN;
```
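On the negative-values question: the Poisson, negative binomial, and zero-inflated distributions are defined only for non-negative counts, so none of them can model a difference that can be negative. One alternative is to keep each group's counts as a separate outcome: stack them into one row per group per month, use log(enrolled members) as an offset so the model describes rates, and let the group interactions carry the comparison. A sketch, assuming a population-level data set `WORK.POP` with the INT_CLAIMS and CONTROL_CLAIMS columns from the table in the first post; INT_MEMBERS and CONTROL_MEMBERS (monthly enrollment denominators) are hypothetical columns the data would need:

```sas
/* One row per group per month; the outcome stays a non-negative count */
data work.stacked;
  set work.pop;
  length series $3;
  series = 'INT'; group = 1; claims = int_claims;     log_members = log(int_members);     output;
  series = 'CTL'; group = 0; claims = control_claims; log_members = log(control_members); output;
  keep series group time post time_after claims log_members;
run;

/* R-side AR(1) follows row order within each series */
proc sort data=work.stacked;
  by series time;
run;

proc glimmix data=work.stacked;
  class series;
  model claims = time post time_after
                 group group*time group*post group*time_after
                 / dist=poisson link=log offset=log_members solution;
  random _residual_ / subject=series type=ar(1);  /* overdispersion plus within-series AR(1) */
run;
```

Here the GROUP*TIME_AFTER coefficient estimates how much the post-intervention change in slope differs between the intervention and control series on the log-rate scale, so exponentiating it gives a multiplicative (rate-ratio) effect. With R-side effects and a Poisson response this is a pseudo-likelihood fit, so its fit statistics are not comparable with likelihood-based AICC values.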

At the member level, we use the same variables as above, plus the member identifier. The outcome variable here is the utilization count. Code:

```sas
PROC GLIMMIX DATA = OUTFILE.RATES_MEDS00_&SRC. PLOTS = RESIDUALPANEL;
  CLASS MEMBNO;
  MODEL ENCOUNTERS = TIME POST TIME_AFTER / SOLUTION CHISQ CORRB;
  OUTPUT OUT = _GLIMMIX_SUBJECT_&SRC. PRED = REGPRED;
  ODS OUTPUT PARAMETERESTIMATES = RATES_MEDS00_&SRC._GLIMMIX;
  RANDOM _RESIDUAL_ / SUBJECT = MEMBNO TYPE = ARMA(1,1);
  TITLE "PROC GLIMMIX - MEMBER LEVEL REGRESSION UNADJUSTED FOR: &SRC.";
RUN;
```

So, to your question: yes, the utilization counts refer to the same people over time. Am I accounting for this appropriately with the SUBJECT = MEMBNO option? MEMBNO is the individual identifier.
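As I read the GLIMMIX documentation, SUBJECT=MEMBNO does apply the ARMA(1,1) structure to the residuals within each member, with different members treated as independent. Two things are worth checking: the R-side structure follows the row order within each member, so the data should be sorted by MEMBNO and TIME with every month present (zeros included); and the member-level code above uses the default normal distribution for a count outcome. A sketch of the same within-member structure with a Poisson response, assuming the data set and variables from that code (`WORK.MEMBER_SORTED` is a made-up name). With a count distribution and R-side effects, GLIMMIX fits a marginal, pseudo-likelihood model, similar in spirit to GEE:

```sas
/* Sort so each member's months are contiguous and in time order */
proc sort data=outfile.rates_meds00_&src. out=work.member_sorted;
  by membno time;
run;

proc glimmix data=work.member_sorted;
  class membno;
  model encounters = time post time_after / dist=poisson link=log solution;
  random _residual_ / subject=membno type=arma(1,1);   /* within-member serial correlation */
run;
```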

Ideally, I need the analysis at the individual level so I can adjust for additional variables.

Let me know what you think; I'll be glad to fill in any holes and/or share data. Thank you.
