- Re: Parallel Job SAS DIS - Old Version

Hello everyone,

I'm new to this forum and new to SAS DIS.

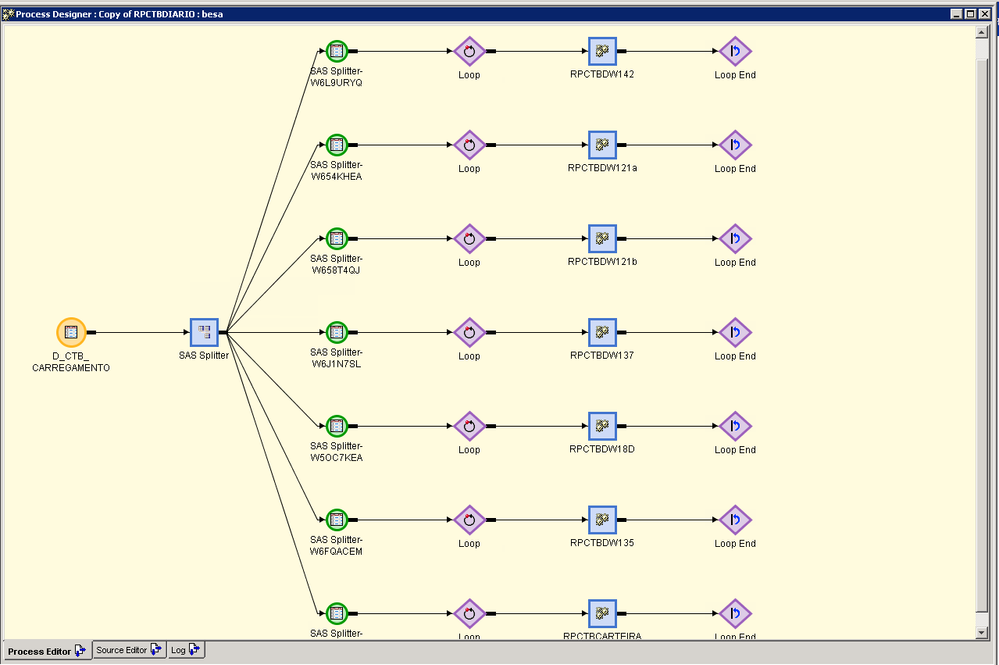

We have a pretty complex Workflow and we would like to parallelize some jobs.

I'm using an old version of SAS DIS (version 3.4, yup, don't be scared... it's a pain to build jobs here when you're used to other ETL tools) and I don't know anything about parallelizing jobs in SAS DIS.

I've done some reading about the Enable Parallel option on the Loop object, but I really don't know how to use it or whether it will work.

I'm adding an attachment which shows one of the jobs I would like to parallelize.

Can anyone help me?

It seems you are calling different jobs in each "leg", so I would simply suggest that you create permanent (non-persistent) output from the splitter, and then use your scheduler for the parallelization planning.

Unless you are in a grid...?

Hi LinusH,

Thank you for your answer.

I didn't understand your response, especially the part about the permanent (non-persistent) output from the splitter.

I can't place my jobs directly from the splitter without having the loop object.

And I don't have a scheduler for the parallelization planning.

Regarding the grid question:

1) If you're talking about the server, we have a dedicated server to run SAS jobs. It's a pretty good machine with several processors (I don't remember how many, though).

2) If you're talking about Grid in SAS, I don't think we have that configured, and I'm not even sure we have the license for it. Ancient SAS, and my first time using SAS DIS.

What I meant was to store the splitter result in a permanent library. Persistent means that you keep some kind of history.

Are you using the splitter outputs as parameter data for the loop?

It seems that you are on an SMP SAS box, which means that MP Connect will be used for parallelization.

Scheduling: you do run the jobs in batch, no?

In the Scheduling plugin in Management Console, you can define flows where jobs can run in parallel - even for cron and Windows Task.

But, by looking in the documentation, I see that parallelization is possible within DI Studio - see chapter 18. Whether this is applicable to your situation, I can't tell.

Firstly, and as you already know: SAS 9.1.3 / DIS 3.4 is seriously outdated and your company needs to upgrade. To my knowledge there is no longer even a direct migration path from DIS 3.4 to the most current version (= you first need to migrate to an earlier version, and only then can you migrate to the most current one). Not sure why any company would still invest in development using DIS 3.4 (unless it's some bridging BAU task).

As for your real question:

The flow you've posted doesn't look right to me. If DIS 3.4 already provides the "run in parallel" option, then the generated code will spawn a new session for the inner job using MP Connect (rsubmit). You will need SAS/Connect licensed on your server for this to work.

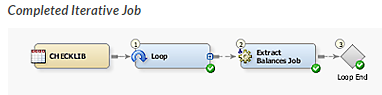

From the current documentation: http://support.sas.com/documentation/cdl/en/etlug/67323/HTML/default/viewer.htm#n0t84jtm1a5wofn1e4qp...

For your flow:

1. Create an inner job which takes parameters

2. Create a control table ("checklib" in above picture) which contains the parameter values

3. In the loop transformation map the columns from the control table "checklib" to the parameters of your inner job

In your example:

What you would pass to the inner job is the selection criteria subsetting your source data ("D_CTB_CARREGAMENTO"). This is then available to your inner job as a SAS macro variable. Your inner job could then be defined as follows:

....

where <variable>=&parameter_value

...

Your flow would only have the following objects:

- Source table

- Control table (and possibly a user-written node creating and populating the control table)

- a single loop transformation

- a single inner job (parameterized)
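
The loop-plus-control-table pattern described above can be sketched outside SAS. What follows is a minimal Python illustration of the idea only, not the code DI Studio generates: the sample rows, the `region` column, and the function names are invented for the example, and a thread pool stands in for the separate SAS sessions that MP Connect would spawn.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for the source table; the "region" column is invented.
SOURCE_ROWS = [
    {"id": 1, "region": "NORTH"},
    {"id": 2, "region": "SOUTH"},
    {"id": 3, "region": "NORTH"},
]

# Control table: one row per loop iteration, holding the parameter value.
CONTROL_TABLE = [{"parameter_value": "NORTH"}, {"parameter_value": "SOUTH"}]


def inner_job(parameter_value):
    """Parameterized inner job: subset the source by one selection value."""
    return [row for row in SOURCE_ROWS if row["region"] == parameter_value]


def run_loop(control_table, parallel=False, max_workers=4):
    """Call the inner job once per control-table row.

    With parallel=True the iterations run concurrently, which is the role
    the loop's parallel option plays in this analogy.
    """
    values = [row["parameter_value"] for row in control_table]
    if parallel:
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            # pool.map returns results in the same order as the inputs.
            results = list(pool.map(inner_job, values))
    else:
        results = [inner_job(value) for value in values]
    return dict(zip(values, results))
```

Calling `run_loop(CONTROL_TABLE, parallel=True)` returns one subset per control-table row, keyed by the parameter value, so the number of inner-job calls is driven entirely by the control table.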

Having written all of the above: I don't like to use the loop transformation for parallel runs of jobs. Actually: I don't like the loop transformation at all and I only use it in cases where I don't know in advance how many calls of the inner job I need (=data driven with a control table created dynamically based on some changing upstream data).

Especially when setting the parallel option you'll end up with child processes. What do you do if one of the child processes falls over? How do you re-run your job? Can you re-run everything?

When using a scheduler only the outer job can get scheduled and will be monitored. So if something falls over you just know that something didn't work. You then need to find the log of the child process which didn't work for further investigation.

The approach I would take for your example:

1. Create a custom transformation which takes the data selection criteria as a parameter (a prompt)

2. Write separate jobs for each selection (source table, custom transformation with selection criteria populated, target table)

3. Use a scheduler like LSF to run all these jobs in parallel.

This way if something falls over it's directly monitored and reported on by the scheduler, you find the log directly, you can fix and re-run exactly the affected job without the need to touch anything else.
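
As a rough illustration of why separate jobs are easier to monitor, here is a minimal Python sketch of a launcher that starts each job as its own process and keeps one return code per job. It is not how LSF or Control-M work internally; the function names and the placeholder commands are hypothetical, and in practice each command would be your site's own SAS batch invocation. Treating any non-zero return code as a failure is also an assumption to adjust to local conventions.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

# Placeholder commands (assumption): each one just exits with a fixed code.
# In a real setup these would be the batch commands for the individual jobs.
EXAMPLE_JOBS = {
    "load_a": [sys.executable, "-c", "raise SystemExit(0)"],
    "load_b": [sys.executable, "-c", "raise SystemExit(2)"],
}


def run_job(name, command):
    """Run one job as its own OS process and return (name, return code)."""
    completed = subprocess.run(command)
    return name, completed.returncode


def run_jobs_in_parallel(jobs, max_workers=4):
    """Start all jobs concurrently; return {job name: return code}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_job, name, cmd) for name, cmd in jobs.items()]
        return dict(future.result() for future in futures)


def failed_jobs(results):
    """Names of the jobs whose return code was non-zero."""
    return sorted(name for name, code in results.items() if code != 0)
```

With the placeholder commands, `failed_jobs(run_jobs_in_parallel(EXAMPLE_JOBS))` reports only `load_b`, so that one job can be fixed and re-run without touching the others.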

First of all, let me thank you for your amazing reply. I will try to answer all the points raised in your reply as best I can.

It's an ancient version, and since I'm used to working with more modern ETL tools, like PowerCenter and CloverETL, among others, it really takes me a step back. This version does not even have a CTRL+Z option (to begin with)!!!

The job I attached is a regular job in this version. It has a splitter with a column that serves as a parameter for all the loops. I cannot nest any more jobs within a loop, only one.

It does have an option for parallelization, referenced in the manual, called "Execute iterations in Parallel". From the tests I've been running, it improves the performance of my heaviest/longest jobs a lot, so thanks a lot for that!

My question is whether this is a good option to keep in mind and use to improve performance and reduce the ETL execution time. The server is a pretty good machine with a lot of cores (32, I think), so running one job per processor, as indicated in the manual, is not a bad idea.

Another question I have is:

- Is it better to use "Execute iterations in Parallel" only, or to use "Enable parallel processing macros" in the Job Property (within the loop)? Or is it better to use both?

Regarding the scheduler, we only have Control-M, which runs the shell scripts (SH), which in turn call the SAS jobs in a specific order.

Best regards and thanks!!

So I would split this job into separate jobs, one for each loop. Then call these jobs directly from CTRL-M, or define them in the Schedule Manager and then have CTRL-M call the shell script. This means that you can parallelize these jobs manually.

It seems that you should be able to do the looping in parallel as well if you like. Why don't you try out the different options at hand and evaluate the results?

Hi @LinusH,

Sorry for skipping your other response; I lost track of it because of the other reply.

It is not that easy to try that solution. We don't have Control-M in DEV, the ETL process is quite complex, and the dependencies are defined in the shell scripts and in Control-M.

It is true that I could separate the loops into different jobs/shell scripts and parallelize them in Control-M, but since that is a harder and more time-consuming solution, I would like to keep it as a last resort.

Regarding the question I've asked on my previous response, can you help me with that?

Thank you once again.

Best regards!

I'm happy to help as a part of a service agreement.
