
Using SAS PROC S3 to access Amazon S3


With SAS 9.4, PROC S3 enables you to access and manage objects in Amazon S3. PROC S3 is a Base SAS procedure that enables you to create buckets, folders, and files in S3. Amazon S3 is an object storage platform with a simple web service interface for storing and retrieving any amount of data.

Before you can use PROC S3, you need an Amazon Web Services (AWS) access key ID and secret access key. You can generate the access key ID and secret access key from the AWS Management Console if you have an AWS account with admin privileges.

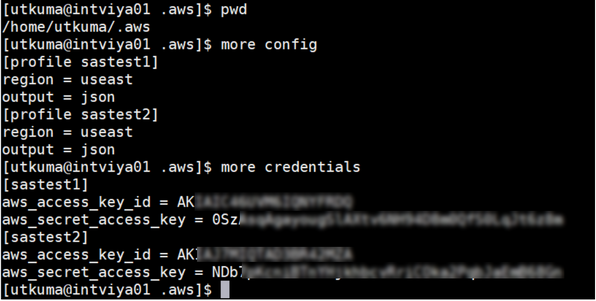

PROC S3 reads security information from configuration files to connect to AWS resources. The configuration files must be available and accessible from the SAS client machine, and they primarily contain the AWS access key ID and secret access key. They can be either AWS Command Line Interface (CLI) configuration files or a PROC S3 configuration file, and PROC S3 reads both kinds during execution. The AWS CLI configuration files let you configure profile-based connections on the SAS client machine.

Unless you specify otherwise, PROC S3 reads the AWS CLI configuration files from the default location (the .aws directory under the user's home directory, for example “/userhome/.aws/”) and uses the default profile. You can specify an alternate location for the configuration and credentials files by using the AWSCONFIG= and AWSCREDENTIALS= options in the PROC S3 statement. To connect to S3 with a specific profile, use the PROFILE= and CREDENTIALSPROFILE= options in the PROC S3 statement.

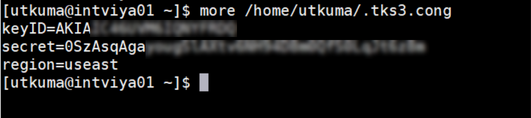
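A minimal sketch of these options together, assuming the AWS CLI files were placed in a hypothetical /home/sasdemo/aws directory and that both files contain a profile named sastest1 (the profile used in the examples later in this post). The paths are illustrative only.

```sas
/* Hypothetical non-default locations for the AWS CLI files.            */
/* Without AWSCONFIG=/AWSCREDENTIALS=, PROC S3 reads the .aws directory */
/* under the user's home directory.                                      */
PROC S3 AWSCONFIG="/home/sasdemo/aws/config"
        AWSCREDENTIALS="/home/sasdemo/aws/credentials"
        PROFILE="sastest1"              /* profile to use from the config file      */
        CREDENTIALSPROFILE="sastest1";  /* profile to use from the credentials file */
   LIST "/sasgel";                      /* a simple request to confirm the connection */
run;
```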

You can also use a local PROC S3 configuration file to connect to AWS resources. The default PROC S3 configuration file is tks3.conf on Windows and .tks3.conf on UNIX, located under the user's home directory. To specify a custom configuration file name and location, use the CONFIG= option in the PROC S3 statement.

Configuration options specified in the AWS CLI configuration files override options specified in the PROC S3 configuration file. Options specified in the PROC S3 statement override options set in either configuration file.
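As an illustration of that precedence, the sketch below passes the keys directly on the PROC S3 statement so they take priority over the configuration files. It assumes the KEYID= and SECRET= options of the PROC S3 statement (check them against your release) and assumes the keys are exported as the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, so they are not hard-coded in the program.

```sas
/* Read the keys from environment variables (assumed to be set) */
/* instead of typing them into the program.                      */
%let aws_key_id = %sysget(AWS_ACCESS_KEY_ID);
%let aws_secret = %sysget(AWS_SECRET_ACCESS_KEY);

/* Values given on the PROC S3 statement override the same settings */
/* found in the PROC S3 configuration file named by CONFIG=.         */
PROC S3 CONFIG="/home/utkuma/.tks3.conf"
        KEYID="&aws_key_id"
        SECRET="&aws_secret";
   LIST "/sasgel";
run;
```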

Sample AWS CLI configuration files:
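A minimal sketch of the two AWS CLI files, assuming a profile named sastest1 (the profile used in the PROC S3 examples in this post). The key values are placeholders, and the region value is an assumption to replace with your own. Note that the AWS CLI names a profile section `[sastest1]` in the credentials file but `[profile sastest1]` in the config file.

```ini
# ~/.aws/credentials  (placeholder values, not real keys)
[sastest1]
aws_access_key_id = AKIAXXXXXXXXXXXXXXXX
aws_secret_access_key = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
```

```ini
# ~/.aws/config  (region is an assumed example value)
[profile sastest1]
region = us-east-1
```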


Sample local PROC S3 configuration file:
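A minimal sketch of a .tks3.conf file with placeholder values. The key names keyID= and secret= are taken from my reading of the SAS PROC S3 documentation and are an assumption here; verify them, and any optional settings such as the region, against the documentation for your SAS release.

```ini
keyID=AKIAXXXXXXXXXXXXXXXX
secret=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
```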

PROC S3 supports the following statements:

- CREATE - To create an S3 bucket.

- COPY - To copy an object from a source S3 location to a destination S3 location.

- DELETE - To delete an S3 location or object.

- DESTROY - To delete an S3 bucket (the bucket must be empty).

- GET - To retrieve an S3 object to the SAS client machine.

- GETDIR - To retrieve the contents of an S3 directory.

- INFO - To print information about an S3 location.

- LIST - To print the contents of an S3 location.

- MKDIR - To create a directory in an S3 location.

- PUT - To copy a file from the SAS client machine to an S3 location.

- PUTDIR - To copy a directory from the SAS client machine to an S3 location.

- RMDIR - To delete a directory from an S3 location.
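A sketch of the directory-oriented statements in one step, assuming the sastest1 profile and the sasgel bucket used in the examples below. The folder name archive and the local target /tmp/sasgel_archive are hypothetical (the local directory is assumed to exist), and the exact layout PUTDIR and GETDIR produce for existing folders may differ by release. The statements run in the order written, as the later LIST examples show.

```sas
PROC S3 PROFILE="sastest1";
   MKDIR  "/sasgel/archive";                             /* create a folder in the bucket             */
   PUTDIR "/gelcontent/demo/DM/data" "/sasgel/archive";  /* copy a local directory to S3              */
   LIST   "/sasgel/archive";                             /* print the folder contents                 */
   INFO   "/sasgel/archive";                             /* print information about the location      */
   GETDIR "/sasgel/archive" "/tmp/sasgel_archive";       /* copy the S3 folder contents back locally  */
run;
```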

The following additional statements are supported in SAS 9.4M5:

- BUCKET - To set the Transfer Acceleration mode for the specified bucket.

- GETACCEL - To retrieve the Transfer Acceleration status for a bucket.

The following examples create an S3 bucket with PROC S3. Note: The first PROC S3 statement reads the AWS CLI configuration files to connect to S3. The second PROC S3 statement reads a local PROC S3 configuration file to connect to S3.

```sas
PROC S3 PROFILE="sastest1";
   CREATE "/sasgel";
run;
```

```
NOTE: PROCEDURE S3 used (Total process time):
      real time           0.37 seconds
      cpu time            0.06 seconds
```

OR

```sas
PROC S3 config="/home/utkuma/.tks3.conf";
   CREATE "/sasgel";
run;
```

```
NOTE: PROCEDURE S3 used (Total process time):
      real time           0.36 seconds
      cpu time            0.03 seconds
```

The following screenshot shows the new S3 bucket (sasgel) in Amazon S3.

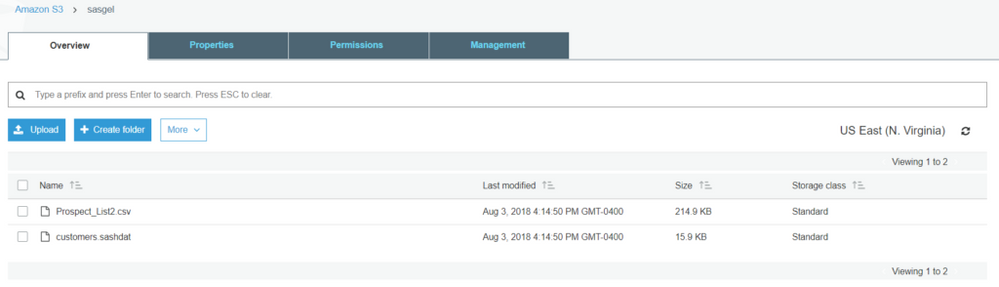

The following example uses PROC S3 to load (write) data files from the SAS client machine to an S3 bucket location.

```sas
PROC S3 PROFILE="sastest1";
   PUT "/gelcontent/demo/DM/data/customers.sashdat" "/sasgel/customers.sashdat";
   PUT "/gelcontent/demo/DM/data/Prospect_List2.csv" "/sasgel/Prospect_List2.csv";
   LIST "/sasgel";
run;
```

```
Prospect_List2.csv   220088   2018-08-03T20:14:50.000Z
customers.sashdat     16272   2018-08-03T20:14:50.000Z

NOTE: PROCEDURE S3 used (Total process time):
      real time           0.95 seconds
      cpu time            0.08 seconds
```

The following screenshot shows the Amazon S3 bucket (sasgel) with the data files.

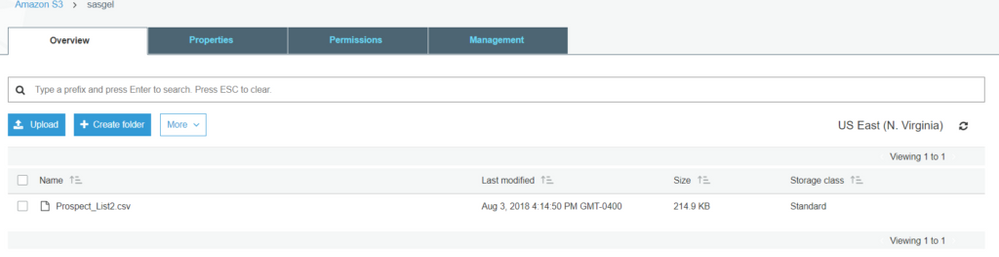
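The reverse direction uses GET. A minimal sketch that downloads the CSV uploaded above back to the SAS client machine; the local target /tmp/Prospect_List2.csv is a hypothetical path.

```sas
PROC S3 PROFILE="sastest1";
   /* GET "S3-object" "local-file": copy the object to the SAS client machine */
   GET "/sasgel/Prospect_List2.csv" "/tmp/Prospect_List2.csv";
run;
```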

The following example uses PROC S3 to delete a data file from an S3 bucket (location).

```sas
PROC S3 PROFILE="sastest1";
   DELETE "/sasgel/customers.sashdat";
   LIST "/sasgel";
run;
```

```
NOTE: Deleted object /sasgel/customers.sashdat.
Prospect_List2.csv   220088   2018-08-03T20:14:50.000Z

NOTE: PROCEDURE S3 used (Total process time):
      real time           0.25 seconds
      cpu time            0.06 seconds
```

The following screenshot shows the Amazon S3 bucket (sasgel) and its data files after the deletion.

PROC S3 creates additional threads to read and write data files larger than 5 MB. These threads enable parallel processing for faster data transfer between SAS and AWS.
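To remove the sample bucket entirely, DELETE and DESTROY can be combined. A minimal sketch, assuming that after the DELETE example above the sasgel bucket holds only Prospect_List2.csv; DESTROY deletes only an empty bucket, so every object must be removed first.

```sas
PROC S3 PROFILE="sastest1";
   DELETE  "/sasgel/Prospect_List2.csv";  /* remove the last remaining object  */
   DESTROY "/sasgel";                     /* delete the now-empty bucket        */
run;
```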

Transfer Acceleration:

Transfer Acceleration is a special mode for faster data transfer to and from an S3 location. In this mode, Amazon routes the data to the S3 location over an optimized network path. Amazon may charge additional data transfer fees when you use this feature. For more information, see the AWS documentation.

The GET, GETDIR, PUT, and PUTDIR statements take advantage of this mode when it is enabled for an S3 bucket.

Example:

```sas
proc S3 PROFILE="sastest1";
   BUCKET "sasgel" ACCELERATE;
run;
```

```
NOTE: Transfer acceleration set to on for bucket sasgel.

NOTE: PROCEDURE S3 used (Total process time):
      real time           10.49 seconds
      cpu time            0.03 seconds
```
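The GETACCEL statement reports the current setting. A sketch that checks the status and then turns acceleration back off to avoid the extra AWS fees; the NOACCELERATE keyword on the BUCKET statement is assumed from the SAS documentation and should be verified for your release.

```sas
PROC S3 PROFILE="sastest1";
   GETACCEL "sasgel";               /* print the current Transfer Acceleration status */
   BUCKET   "sasgel" NOACCELERATE;  /* turn Transfer Acceleration off (assumed keyword) */
   GETACCEL "sasgel";               /* confirm the new status                           */
run;
```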

Note: Do not try to use the aws_access_key_id= and aws_secret_access_key= values shown in this post. The key ID and secret key listed in the examples have already expired.



Hi,

I need to put a file from SAS into an S3 bucket.

I have only this parameter:

bucket:XXXX-noprod-glhl-ap07073-sapbwhseq4ut

The bucket administrator told me that other parameters are not necessary because my SAS server is authorized to access the bucket. According to the bucket administrator, it is not necessary to supply an access key and secret key.

Can you provide me with an example of code? Or are any additional parameters needed?


Hello @UttamKumar

How does PROC S3 perform when transferring data from local storage to Amazon S3 storage?

Can one create a library reference (using LIBNAME) with PROC S3 and use it in a SAS program executing locally?
