- Home

- /

- SAS Communities Library

- /

- Snowflake… Another Data Cloud to access

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Snowflake… Another Data Cloud to access

- Article History

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Amazon Redshift, Google BigQuery, Azure Synapse, Snowflake, Firebolt… The “data warehousing-as-a-service” market is very dynamic, and SAS has to keep pace with these new actors as they start to mature and be heavily used at our customers.

Indeed, we want our customers using SAS Viya or SAS 9 to leverage those modern data sources as soon as possible.

Snowflake is one of those recent cloud data warehouse solutions and SAS has been able to access it natively since last year.

Instead of humbly trying to reinvent the wheel, and repeating existing valuable information, I suggest you take a look at this awesome paper from @JBailey “An Insider’s Guide to SAS/ACCESS® Interface to Snowflake” which introduces and develops what makes Snowflake different and how SAS works with it.

In this post, I will just add two or three cents.

Snowflake Data Connector for CAS

While Jeff’s paper focuses on capabilities available in SAS 9.4 or the SAS Compute Server in SAS Viya 3.5 (aka SPRE), SAS/ACCESS Interface to Snowflake also provides a Snowflake data connector for Cloud Analytic Services (CAS).

So, you can easily and efficiently load Snowflake data into CAS as well as saving CAS data back to Snowflake. Multi-node load and save are supported. You can use bulk-load techniques when saving a CAS table into Snowflake. You can also send a specific SQL query to Snowflake and load the result set into CAS directly (FedSQL explicit pass-through).

See the last part of this article for a complete list of SAS capabilities supported with Snowflake.

How to setup a Snowflake CASLIB?

caslib sf dataSource=(srctype='snowflake'

server='xxxxxxxx.snowflakecomputing.com'

schema="&user"

username="&user"

password="&pw"

database="MYSF_DB") libref=cassf ;

How to multi-node load a Snowflake table?

proc casutil incaslib="sf" outcaslib="sf" ;

load casdata="bigprdsale" casout="bigprdsale" dataSourceOptions=(numreadnodes=0 sliceColumn="pk") replace ;

list tables ;

quit ;

How to “bulk-save” a CAS table to Snowflake (it’s a save with the bulk-load option)?

proc casutil incaslib="sf" outcaslib="sf" ;

save casdata="bigprdsale" casout="bigprdsale_blstage"

dataSourceOptions=(bulkload=true stage="MY_INTERNAL_STAGE/test1" compress=true numwritenodes=0) replace ;

list files ;

quit ;

Notice that for bulk-loading data into Snowflake (CAS save), the data connector supports multiple locations for staging the data. It could be Snowflake internal stages or AWS S3.

Storage and Compute Separation in practice

As Jeff stated in his paper, Compute and Storage are separate and independent. That’s the beauty of Snowflake. The Compute environment in Snowflake is a Warehouse.

You have a SQL query that takes too long to run? No worries. Run it with a bigger warehouse.

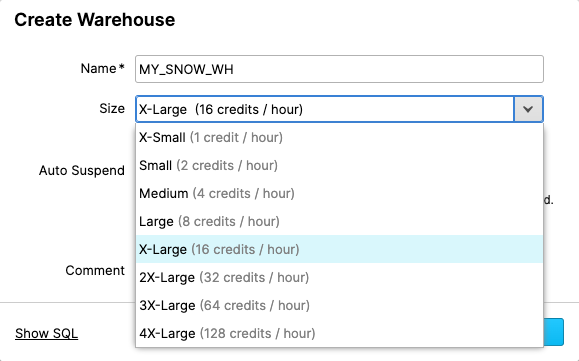

Warehouses range from Extra-Small to 4X-Large.

Select image to see a larger version.

Mobile users: To view the image, select the "Full" version at the bottom of the page.

There’s no need to re-arrange the data. Change the warehouse name and fire up your query again! That’s it. Tuning a SQL query has never been so easy!

Of course, it costs more per hour, but you can run more queries since they are faster.

How does that surface in SAS?

There’s a dedicated option in the Snowflake libname engine.

/* SAS library */

libname sf snow server="xxxxxxxx.snowflakecomputing.com"

db="MYSF_DB" user="&user" pw="&pw" schema="&user"

preserve_tab_names=yes dbcommit=0 readbuff=32767

insertbuff=32767

warehouse="MY_SNOW_WH" ;

For CAS, you have to use the CONOPTS option.

/* CAS CASLIB */

caslib sf dataSource=(srctype='snowflake'

server='xxxxxxxx.snowflakecomputing.com'

schema="&user"

username="&user"

password="&pw"

database="MYSF_DB"

conopts="warehouse='MY_SNOW_WH'") libref=cassf ;

So, changing the Snowflake warehouse in SAS or CAS is as simple as changing an option in the libname or caslib statement.

Running a query in an Extra-Small Warehouse (time to run=4:13.72):

82 proc sql ;

83 connect using sf as sfpt ;

SNOWFLAKE_3: Executed: on connection 2

84 create table a as select * from connection to sfpt (

85 select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

86 TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as "sum_predict"

87 from "DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM

88 where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <= DIM."high"

89 group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3),

90 TXT_1."PRODUCT", TXT_1."YEAR", TXT_1."QUARTER", DIM."label") ;

SNOWFLAKE_4: Prepared: on connection 2

select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as "sum_predict" from

"DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <=

DIM."high" group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3), TXT_1."PRODUCT", TXT_1."YEAR",

TXT_1."QUARTER", DIM."label"

SNOWFLAKE_5: Executed: on connection 2

Prepared statement SNOWFLAKE_4

NOTE: Table WORK.A created, with 2400000 rows and 10 columns.

91 disconnect from sfpt ;

92 quit ;

NOTE: PROCEDURE SQL used (Total process time):

real time 4:13.72

cpu time 8.60 seconds

Running the same query in a Large Warehouse (time to run=1:11.39):

82 proc sql ;

83 connect using sf as sfpt ;

SNOWFLAKE_33: Executed: on connection 2

84 create table a as select * from connection to sfpt (

85 select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

86 TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as "sum_predict"

87 from "DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM

88 where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <= DIM."high"

89 group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3),

90 TXT_1."PRODUCT", TXT_1."YEAR", TXT_1."QUARTER", DIM."label") ;

SNOWFLAKE_34: Prepared: on connection 2

select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as "sum_predict" from

"DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <=

DIM."high" group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3), TXT_1."PRODUCT", TXT_1."YEAR",

TXT_1."QUARTER", DIM."label"

SNOWFLAKE_35: Executed: on connection 2

Prepared statement SNOWFLAKE_34

NOTE: Table WORK.A created, with 2400000 rows and 10 columns.

91 disconnect from sfpt ;

92 quit ;

NOTE: PROCEDURE SQL used (Total process time):

real time 1:11.39

cpu time 7.83 seconds

Caching

This word is sometimes over-utilized to explain good or poor performance in a variety of tools. This is magic. Nobody knows how it works but some people have seen it working.

With Snowflake, caching is tangible. You run a SQL query twice. Your second run will be lightning faster. This is because Snowflake implements a result cache which holds the results of every query executed in the past 24 hours, regardless of the warehouse used and the user who issued the query.

Running a query the first time (1:33.25):

82 proc sql ;

83 connect using sf as sfpt ;

84 create table a as select * from connection to sfpt (

85 select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

86 TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as

86 ! "sum_predict"

87 from "DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM

88 where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <= DIM."high"

89 group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3),

90 TXT_1."PRODUCT", TXT_1."YEAR", TXT_1."QUARTER", DIM."label") ;

SNOWFLAKE_30: Prepared: on connection 2

select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as "sum_predict" from

"DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <=

DIM."high" group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3), TXT_1."PRODUCT", TXT_1."YEAR",

TXT_1."QUARTER", DIM."label"

SNOWFLAKE_31: Executed: on connection 2

Prepared statement SNOWFLAKE_30

NOTE: Table WORK.A created, with 2394015 rows and 10 columns.

91 disconnect from sfpt ;

92 quit ;

NOTE: PROCEDURE SQL used (Total process time):

real time 1:33.25

cpu time 8.15 seconds

Running the same query again using the same warehouse (10.35 seconds):

82 proc sql ;

83 connect using sf as sfpt ;

84 create table a as select * from connection to sfpt (

85 select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

86 TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as

86 ! "sum_predict"

87 from "DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM

88 where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <= DIM."high"

89 group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3),

90 TXT_1."PRODUCT", TXT_1."YEAR", TXT_1."QUARTER", DIM."label") ;

SNOWFLAKE_32: Prepared: on connection 2

select TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3) as "onTheFly", TXT_1."PRODUCT",

TXT_1."YEAR", TXT_1."QUARTER", DIM."label", SUM(TXT_1."ACTUAL") as "sum_actual", SUM(TXT_1."PREDICT") as "sum_predict" from

"DEMO"."bigprdsale" TXT_1, "DEMO"."dim" DIM where TO_NUMERIC(TXT_1."fake") >= DIM."low" AND TO_NUMERIC(TXT_1."fake") <=

DIM."high" group by TXT_1."fake", TXT_1."COUNTRY", TXT_1."DIVISION", SUBSTR(TXT_1."PRODTYPE", 1, 3), TXT_1."PRODUCT", TXT_1."YEAR",

TXT_1."QUARTER", DIM."label"

SNOWFLAKE_33: Executed: on connection 2

Prepared statement SNOWFLAKE_32

NOTE: Table WORK.A created, with 2394015 rows and 10 columns.

91 disconnect from sfpt ;

92 quit ;

NOTE: PROCEDURE SQL used (Total process time):

real time 10.35 seconds

cpu time 8.27 seconds

Read more information about Snowflake caching.

Summary of Capabilities

As a conclusion, here is a summary of the SAS/ACCESS Interface to Snowflake capabilities.

| Engine | Capability | Snowflake |

|---|---|---|

| SAS | Bulk-Load | O |

| Implicit Pass-Through | O | |

| Explicit Pass-Through | O | |

| FedSQL | O | |

| SAS Procedures Pushdown | O | |

| Bulk-Unload | O | |

| CAS | Multi-Node Load | O |

| Bulk-Unload | ||

| FedSQL Implicit Pass-Through | ||

| FedSQL Explicit Pass-Through | O | |

| Multi-Node Save | O | |

| Bulk-Load | O |

Special thanks to Chris DeHart for his guidance on using SAS with Snowflake.

Thanks for reading.

SAS AI and Machine Learning Courses

The rapid growth of AI technologies is driving an AI skills gap and demand for AI talent. Ready to grow your AI literacy? SAS offers free ways to get started for beginners, business leaders, and analytics professionals of all skill levels. Your future self will thank you.

- Find more articles tagged with:

- access

- bulk loading

- cas

- cloud

- data access

- Data Connector

- data management

- GEL

- multinode

- snowflake

- viya

- warehouse