
SAS High-Performance Analytics tip #3: Example flow diagram in SAS Enterprise Miner

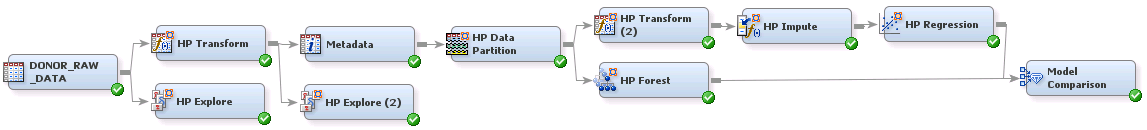

Time to learn by example: the third tip in this series walks through an example process flow diagram in SAS Enterprise Miner. The example uses high-performance data mining (HPDM) nodes to build the modeling flow shown above and requires a SAS High-Performance Data Mining license to run in distributed mode. Because the example data is small (approximately 19K observations and 47 inputs), you can also run this flow in single-machine mode.

Data

The data for this example can be downloaded from the SAS Enterprise Miner documentation site. It is available as a ZIP file next to Example Data for Getting Started with SAS Enterprise Miner 14.1 and includes the DONOR_RAW_DATA data set.

The DONOR_RAW_DATA data set contains donations from a previous mail solicitation campaign at a charitable organization. The goal of this exercise is to predict profitable donors; the binary target variable TARGET_B indicates whether a donor contributed to the campaign. This example was tested with SAS Enterprise Miner 14.1 on Windows and in a distributed environment with two worker nodes.

Import Process Flow Diagram

To run this example in a distributed environment, load the DONOR_RAW_DATA data set from the GettingStarted directory onto any SAS-supported distributed data appliance (Hadoop, Teradata, Greenplum, and so on). Because this process is specific to the data appliance and to the access controls of your IT environment, the details are not discussed here.

Open SAS Enterprise Miner, create a new project by selecting File >> New >> Project, and name it HPATip3. Next, select File >> New >> Library to create a new library that points to the data appliance (in distributed mode) or to the file directory that contains the DONOR_RAW_DATA data set (in single-machine mode). Alternatively, you can place the corresponding LIBNAME statement in the Project Start Code.
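If you take the Project Start Code route in single-machine mode, the LIBNAME statement might look like the sketch below. The libref DONORS and the folder path are hypothetical placeholders, and the PROC CONTENTS step is only an optional sanity check. A distributed appliance needs the engine-specific LIBNAME options for your site, which are not shown here.

```sas
/* Hypothetical libref and path: replace with the folder that */
/* contains the DONOR_RAW_DATA data set on your machine.       */
libname donors "C:\EMData\GettingStarted";

/* Optional check that the data set is visible through the libref */
proc contents data=donors.donor_raw_data varnum;
run;
```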

Download the process flow diagram HPPredictiveModelingTip.xml from this GitHub repository. In SAS Enterprise Miner, right-click the Diagrams folder in the top left corner, select Import Diagram from XML, and browse to the location of the XML file. Next, right-click Data Sources in the top left corner and select Create Data Source to create a new data source. In the Data Source Wizard, browse to the newly created library, select the DONOR_RAW_DATA data set, and click Next. At Step 4 of 8 (Metadata Advisor Options), select Advanced and click Next. At Step 5 of 8 (Column Metadata), assign the Key, Target, and Rejected roles to the CONTROL_NUMBER, TARGET_B, and TARGET_D variables, respectively. Accept the defaults everywhere else and complete the wizard.

Finally, update the process flow diagram with the data source you just created: delete the existing DONOR_RAW_DATA node (the first node in the flow), then drag the newly added data source from Data Sources in the top left corner onto the diagram. Remember to connect the new DONOR_RAW_DATA node to the HP Explore and HP Transform nodes that follow it in the flow.

Set Up GRIDHOST and GRIDINSTALLLOC

NOTE: This section can be skipped if you choose to run in single-machine mode.

When running in a distributed environment, the first step is to specify the GRIDHOST and GRIDINSTALLLOC environment variables. GRIDHOST specifies the name node of the distributed environment, and GRIDINSTALLLOC specifies the High-Performance Analytics (HPA) installation location.

Select the project name HPATip3 in the top left corner and click the ellipsis button next to the Project Start Code property. In the Project Start Code window, enter the following code after modifying the values appropriately for your distributed environment.

/* Set up location for grid install as well as the host information */

option set=GRIDHOST="rdu001.unx.sas.com";

option set=GRIDINSTALLLOC="/opt/v940m3/INSTALL/TKGrid_REP";

If you want to control the number of worker nodes used for this project, click the ellipsis button next to the Project Macro Variables property. Under HPDM, enter the desired value for the HPDM_NODES macro variable. If this value is not specified, SAS Enterprise Miner uses all available worker nodes for processing. Make sure this value is less than or equal to the number of worker nodes available in your distributed environment. Lastly, validate the installation and configuration using the HPATEST procedure as described in tip #2: HPDM nodes in SAS Enterprise Miner.
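As a minimal sketch of that validation step, the call below runs PROC HPATEST through the PERFORMANCE statement shared by the high-performance procedures. It assumes GRIDHOST and GRIDINSTALLLOC have already been set in the Project Start Code; tip #2 remains the reference for reading the output.

```sas
/* Assumes GRIDHOST and GRIDINSTALLLOC are already set in the   */
/* Project Start Code. NODES=ALL requests every worker node;    */
/* DETAILS adds timing information to the output.               */
proc hpatest;
   performance nodes=all details;
run;
```

To mirror a limited HPDM_NODES setting, NODES= could be given a number instead of ALL.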

Exploration

The example begins by exploring the data with the HP Explore node. Right-click the HP Explore node and select Run. Remember that the flow is already built for you, so just follow the script and run the nodes. The Results window shows many details, such as counts of missing values, minimum and maximum values of variables, and class and interval variable statistics. Notice in the Statistics Table that the minimum value of DONOR_AGE is zero. This value should be changed to missing because it represents an unknown age rather than an age of zero. This task is typically handled by the Replacement node, but because there is no equivalent replacement node on the HPDM tab, you can use the HP Transform node together with the Metadata node instead.
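Outside the HP Explore node, a quick way to confirm the zero ages is a PROC SQL summary like the one below. This is a base SAS check, not what the node runs internally, and the libref DONORS is the hypothetical one assigned in the Project Start Code.

```sas
/* Count zero and missing ages and report the range of DONOR_AGE. */
/* DONORS is a hypothetical libref pointing at the example data.  */
proc sql;
   select count(*)           as n_obs,
          sum(donor_age = 0) as n_zero_age,
          nmiss(donor_age)   as n_missing_age,
          min(donor_age)     as min_age,
          max(donor_age)     as max_age
   from donors.donor_raw_data;
quit;
```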

The following SAS code, entered in the SAS Code property of the HP Transform node, replaces zero values with missing values.

/* Copy DONOR_AGE and treat a recorded age of 0 as unknown (missing) */

new_donor_age = donor_age;

if donor_age = 0 then new_donor_age = .;

The Metadata node that follows changes the role of the DONOR_AGE variable from Input to Rejected. This hides DONOR_AGE so that the newly created NEW_DONOR_AGE is used in its place. Click the ellipsis button next to the Train property to view this change.
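To see the effect of the recode in isolation, the sketch below applies the same two statements in an ordinary DATA step to a few made-up rows. The DROP= option is only the nearest DATA-step analogue of the Metadata node: the node keeps DONOR_AGE in the data and merely rejects it as an input, whereas DROP= removes the column.

```sas
/* Made-up rows used only to illustrate the recode. */
data work.donors_toy;
   input control_number donor_age;
   datalines;
1 0
2 45
3 .
4 2
5 0
;

/* Same logic as the HP Transform SAS Code property; DROP= stands in */
/* (loosely) for the Metadata node rejecting DONOR_AGE.              */
data work.donors_clean(drop=donor_age);
   set work.donors_toy;
   new_donor_age = donor_age;
   if donor_age = 0 then new_donor_age = .;
run;
```

Rows 1 and 5 become missing alongside row 3, and the smallest remaining age is 2.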

Right-click the Metadata node and select Run, then do the same for the HP Explore (2) node. When you open the Results for the HP Explore (2) node and scroll down the Statistics Table, you will notice that NEW_DONOR_AGE has a minimum value of 2 (not zero).

NOTE: The Metadata node and the Model Comparison node belong to the set of traditional nodes that are compatible with HPDM nodes.

Partitioning, Transformation and Imputation

The HP Data Partition node performs a 70/30 partition of the raw data into training and validation data sets. You can change these proportions with the node's Training and Validation properties. The default partitioning method for a binary target is stratified sampling; that is, the training and validation partitions keep the same event rate as the raw data.
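The idea of a stratified 70/30 split can be sketched with PROC SURVEYSELECT from SAS/STAT, sampling 70% within each level of TARGET_B. This is a stand-in for illustration, not the method the partition node uses internally, and the 1,000 rows with a roughly 25% event rate are simulated.

```sas
/* Simulated data (assumption): 1,000 rows, roughly 25% events. */
data work.toy;
   call streaminit(2024);
   do control_number = 1 to 1000;
      target_b = (rand('uniform') < 0.25);
      output;
   end;
run;

/* STRATA requires the input to be sorted by the strata variable. */
proc sort data=work.toy;
   by target_b;
run;

/* Draw 70% within each TARGET_B level; OUTALL keeps every row and */
/* adds a SELECTED flag (1 = sampled, 0 = not sampled).             */
proc surveyselect data=work.toy out=work.flagged
                  method=srs samprate=0.7 seed=2024 outall noprint;
   strata target_b;
run;

/* Split the flagged rows into the two partitions. */
data work.train work.valid;
   set work.flagged;
   if selected then output work.train;
   else output work.valid;
run;

/* Event rates should match closely across partitions. */
proc means data=work.flagged mean maxdec=3;
   class selected;
   var target_b;
run;
```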

In the flow, the HP Transform (2) node applies the Log transformation method to the inputs FILE_CARD_GIFT, LIFETIME_GIFT_COUNT, and MEDIAN_HOME_VALUE. This is configured with the ellipsis button next to the Variables property. When the Variables window opens, select these three variables and click Explore; the histograms show that their distributions are skewed to the right. The Log transformation brings these distributions closer to a normal, bell-shaped curve. After the node runs, SAS Enterprise Miner creates three new log-transformed variables with the prefix LOG_. To view them, click the ellipsis button next to the Exported Data property.
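The arithmetic of a log transformation can be sketched in a DATA step on made-up, right-skewed values. The offset of 1 inside LOG() is an assumption that keeps zero values defined; the exact formula used by the HP Transform node may differ. PROC MEANS reports skewness before and after so you can see the long right tail being pulled in.

```sas
/* Made-up, right-skewed values for one input. */
data work.skewed;
   input median_home_value @@;
   datalines;
0 150 300 450 600 900 2500 8000
;

/* Log transform with an assumed offset of 1 so that 0 maps to 0. */
data work.logged;
   set work.skewed;
   if not missing(median_home_value) then
      log_median_home_value = log(median_home_value + 1);
run;

/* Compare skewness of the raw and transformed values. */
proc means data=work.logged skewness maxdec=2;
   var median_home_value log_median_home_value;
run;
```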

Next, the HP Impute node imputes missing values. The default imputation method replaces missing values with the most frequent category (mode) for class variables and with the mean for interval variables. After this node runs, new variables with the prefix IMP_ are added.
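The default imputation rule (mean for interval inputs, mode for class inputs) can be reproduced with base SAS procedures, as in the sketch below. The rows are made up, REGION is an illustrative class variable that is not claimed to exist in DONOR_RAW_DATA, and this is not the code HP Impute runs internally.

```sas
/* Made-up rows; REGION is a hypothetical class input. */
data work.gaps;
   input lifetime_gift_count region $;
   datalines;
4 U
10 S
. S
6 .
. R
8 S
;

/* Mean of the interval input (missing values are ignored). */
proc means data=work.gaps noprint;
   var lifetime_gift_count;
   output out=work.mean_stat mean=mean_count;
run;

/* Level counts of the class input; missing levels are excluded. */
proc freq data=work.gaps noprint;
   tables region / out=work.freq_stat;
run;

/* Put the most frequent level (the mode) first. */
proc sort data=work.freq_stat;
   by descending count;
run;

/* Fill gaps: mean for the interval input, mode for the class input. */
data work.imputed;
   length imp_region $ 8;
   if _n_ = 1 then do;
      set work.mean_stat(keep=mean_count);
      set work.freq_stat(keep=region rename=(region=mode_region) obs=1);
   end;
   set work.gaps;
   imp_lifetime_gift_count = coalesce(lifetime_gift_count, mean_count);
   imp_region = coalescec(region, mode_region);
   drop mean_count mode_region;
run;
```

Here the mean of 4, 10, 6, and 8 is 7, and the mode of REGION is S, so those values fill the gaps.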

Right-click the HP Impute node and select Run. This runs all the preceding nodes that have not yet been executed.

Modeling

The target variable TARGET_B indicates whether the donor contributed to the campaign (value=1) or not (value=0). Two models, logistic regression and forest, are used to predict this binary target. Logistic regression uses the transformed and imputed inputs, while the forest uses the partitioned data directly. In the example flow, notice that the HP Impute node is connected to HP Regression, while HP Data Partition is connected to HP Forest. This is because a regression model excludes any observation that has a missing input value and is sensitive to skewed distributions and extreme values, whereas a forest is not.
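Why imputation matters before regression becomes clear with a little arithmetic: if each of 47 inputs were independently missing 2% of the time, only about 0.98^47 ≈ 0.39 of the rows would be complete. The simulation below checks this; the 2% rate, the independence, and the normal values are all assumptions, not properties of DONOR_RAW_DATA.

```sas
/* Simulated data (assumption): 19,000 rows, 47 inputs, each value */
/* independently missing with probability 0.02.                   */
data work.sim;
   call streaminit(47);
   array x{47};
   do row = 1 to 19000;
      do j = 1 to 47;
         if rand('uniform') < 0.02 then x{j} = .;
         else x{j} = rand('normal');
      end;
      /* 1 if the row has no missing input, 0 otherwise */
      complete = (nmiss(of x{*}) = 0);
      output;
   end;
   drop j;
run;

/* Share of rows a complete-case regression could use;     */
/* expected to be close to 0.98**47, which is about 0.387. */
proc means data=work.sim mean maxdec=3;
   var complete;
run;
```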

In the HP Regression node, the following properties were modified: under Model Selection, the Selection Method property was changed to Stepwise, and the Selection Criterion and Stop Criterion properties were changed to SBC. For the HP Forest node, the default settings were used, under which a maximum of 100 trees is constructed.
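A comparable pair of models can be sketched outside Enterprise Miner with PROC HPLOGISTIC and PROC HPFOREST. The data set names WORK.TRAIN_IMPUTED and WORK.TRAIN are hypothetical, the four inputs are placeholders rather than the full input list, and the SELECT= and STOP= suboptions of the SELECTION statement are assumed to correspond to the node's Selection Criterion and Stop Criterion properties; check the procedure documentation for your release.

```sas
/* Hypothetical inputs: WORK.TRAIN_IMPUTED holds transformed and */
/* imputed training rows; WORK.TRAIN holds partitioned training  */
/* rows. The variable lists are placeholders.                    */

/* Stepwise logistic regression, using SBC both to select effects */
/* and to stop the selection.                                     */
proc hplogistic data=work.train_imputed;
   model target_b(event='1') = log_file_card_gift log_lifetime_gift_count
                               log_median_home_value imp_new_donor_age;
   selection method=stepwise(select=sbc stop=sbc);
run;

/* Random forest with up to 100 trees on the untransformed inputs; */
/* missing values are left in place.                               */
proc hpforest data=work.train maxtrees=100 seed=12345;
   target target_b / level=binary;
   input file_card_gift lifetime_gift_count median_home_value
         new_donor_age / level=interval;
run;
```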

Model Comparison

The last step of this exercise is to compare the models and pick the champion with the Model Comparison node. To pick the best model, the Grid Selection Statistic and Selection Table properties under Model Selection were changed to Misclassification Rate and Validation, respectively.
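Misclassification rate is the share of validation rows whose predicted class differs from the actual class. The sketch below computes it for eight made-up scored rows; the 0.5 cutoff and the P_TARGET_B1 column name (modeled on Enterprise Miner's P_ prefix for predicted probabilities) are assumptions.

```sas
/* Made-up scored validation rows: actual TARGET_B and the */
/* predicted event probability P_TARGET_B1.                */
data work.valid_scored;
   input target_b p_target_b1;
   datalines;
1 0.81
0 0.35
1 0.42
0 0.58
0 0.10
1 0.66
0 0.47
0 0.22
;

/* Classify at an assumed 0.5 cutoff and flag errors. */
data work.valid_class;
   set work.valid_scored;
   i_target_b = (p_target_b1 >= 0.5);
   misclassified = (i_target_b ne target_b);
run;

/* The mean of the 0/1 flag is the misclassification rate. */
proc means data=work.valid_class mean maxdec=3;
   var misclassified;
run;
```

Rows 3 and 4 are misclassified, so the rate is 2/8 = 0.25.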

Right-click the Model Comparison node and select Run. This runs all the preceding modeling nodes as well as the Model Comparison node itself. The HPDM Assess window under Results includes many charts based on the entire data; select Sensitivity in the drop-down list in the top left corner to view the ROC curves for comparison.
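An ROC curve plots sensitivity (true positive rate) against 1 minus specificity (false positive rate) as the classification cutoff moves. The sketch below computes a few such points for made-up scores; the cutoffs and values are illustrative, and the HPDM Assess charts themselves are produced by Enterprise Miner.

```sas
/* Made-up scored validation rows (column name P_TARGET_B1 assumed). */
data work.scores;
   input target_b p_target_b1;
   datalines;
1 0.81
0 0.35
1 0.42
0 0.58
0 0.10
1 0.66
0 0.47
0 0.22
;

/* Cutoffs 0.2, 0.4, 0.6, 0.8; k/5 avoids drift from adding 0.2. */
data work.cutoffs;
   do k = 1 to 4;
      cutoff = k / 5;
      output;
   end;
   drop k;
run;

/* Sensitivity = TP / all events; 1 - specificity = FP / all non-events. */
proc sql;
   create table work.roc as
   select c.cutoff,
          sum(s.target_b = 1 and s.p_target_b1 >= c.cutoff)
             / sum(s.target_b = 1) as sensitivity,
          sum(s.target_b = 0 and s.p_target_b1 >= c.cutoff)
             / sum(s.target_b = 0) as one_minus_specificity
   from work.cutoffs as c, work.scores as s
   group by c.cutoff;
quit;

proc print data=work.roc noobs;
run;
```

At a cutoff of 0.4, for example, all three events and two of the five non-events are classified as events, giving the ROC point (0.4, 1.0).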

Conclusion

With big data, it is imperative that data manipulation and modeling algorithms exploit the distributed architecture of the underlying hardware to speed up the work. This example highlights the capabilities that HPDM nodes provide to manipulate, partition, transform, impute, and model data. SAS Enterprise Miner supports an extensive set of data mining techniques in the distributed environment, including HP Cluster, HP GLM, HP Neural, HP Principal Components, HP SVM, HP Tree, HP Variable Selection, and HP Bayesian Network Classifier, which makes the transition to big data easier.

The next tip will focus on scoring new data, so stay tuned!

Earlier tips in this series are available at:
