SAS ESP Connector and Adapter Blocksize - Basic Analysis

SAS Event Stream Processing uses adapters and connectors to inject events into source windows of a model as well as subscribe to windows to retrieve events from a model. Each adapter and connector provides numerous parameters to configure how this is accomplished. Depending on the adapter or connector the number and role of parameters can vary significantly, but one parameter, blocksize, is common to most adapters and connectors.

The blocksize parameter, as one might imagine, allows the administrator to configure how many events are transferred as part of a publish or subscribe action. The default setting for this parameter is one, which means that one event per block is published to or subscribed from a window. As with other data transfer mechanisms, efficiencies may be gained by "packaging" multiple events per block. In this blog we will take a quick look at two related metrics, CPU time and elapsed time, and how modifying the block size impacts those metrics.

Determining the impact

The method used to assess the impact of modifying the block size is fairly simple. Run multiple iterations of a model and adjust the block size for each iteration. Capture basic performance metrics for each iteration using the Linux time command.
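For readers who prefer to script the measurement, here is a minimal Python sketch of what the Linux time command reports for a single run. It is not the procedure used in these tests, which relied on time itself. The function name `time_command` is made up for illustration, the sketch assumes a Unix-like system (the `resource` module is not available on Windows), and the command timed in the usage line is a trivial stand-in, not an ESP process.

```python
import resource
import subprocess
import sys
import time


def time_command(argv):
    """Run argv to completion and return (real, user, sys) in seconds.

    Mirrors the three figures printed by the Linux `time` command.
    CPU times are read as the change in RUSAGE_CHILDREN, which only
    counts child processes that have terminated and been waited for.
    """
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.perf_counter()
    subprocess.run(argv, check=True)  # blocks until the child exits
    real = time.perf_counter() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    user_cpu = after.ru_utime - before.ru_utime
    sys_cpu = after.ru_stime - before.ru_stime
    return real, user_cpu, sys_cpu


if __name__ == "__main__":
    # Stand-in workload: a short Python one-liner, not an ESP command.
    real, user_cpu, sys_cpu = time_command(
        [sys.executable, "-c", "sum(range(10**6))"]
    )
    print(f"real {real:.3f}s  user {user_cpu:.3f}s  sys {sys_cpu:.3f}s")
```

Because the CPU figures are deltas of the children's accumulated usage, the function should be called from a script that is not reaping other child processes at the same time.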

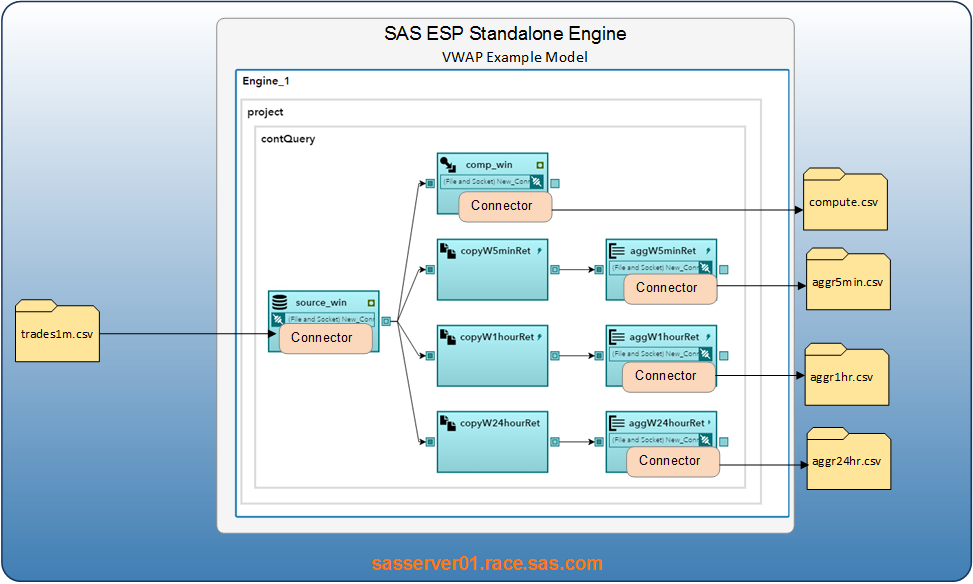

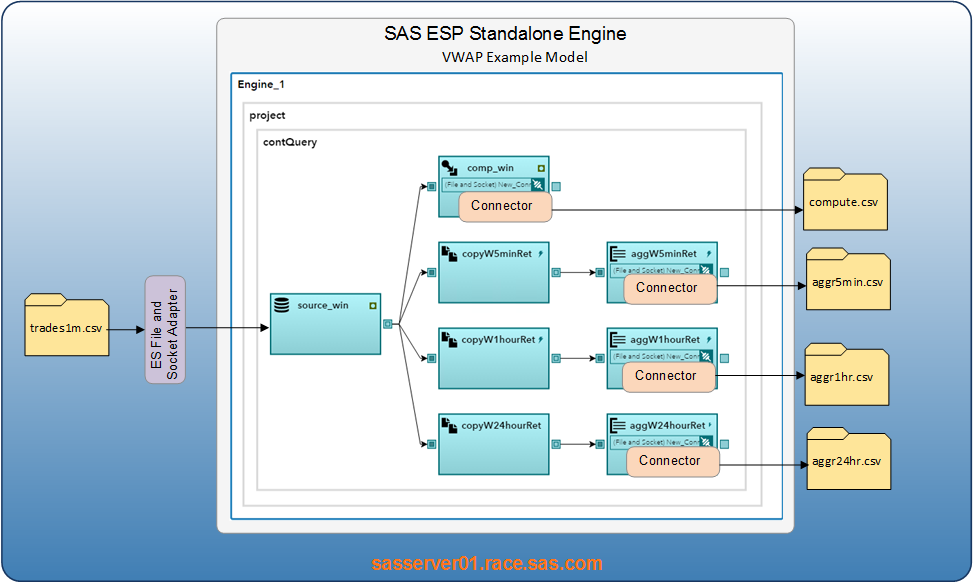

The volume weighted average pricing (VWAP) XML example included with the deployment was used for testing. Two sets of tests were undertaken. The first used the file and socket connector and the second used the file and socket adapter. The default sample model uses a connector to inject the events. Once a series of block size tests was completed with the connector, the connector was removed from the model and the adapter was used to publish events. The sample event file in the vwap_xml directory, which contains one million events, was used for all tests. The value of project threads was left unchanged at eight.

The objective of testing was not only to determine the impact of block size, but also verify whether there is a significant difference in the performance characteristics between connectors and adapters.

Images 1 and 2 above depict the two publish methods and event flows.

Capturing metrics

It is important to note that, since testing was done in a virtual environment, some variance can be expected.

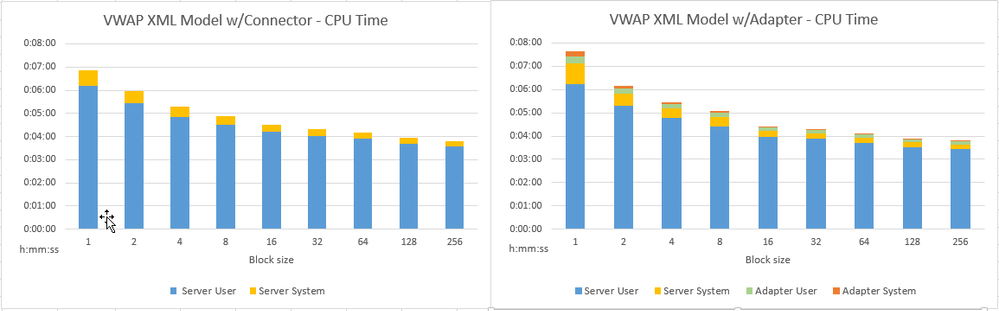

The block size was varied from 1 to 256, doubling at each step (1, 2, 4, and so on up to 256). Since connectors are part of an engine, only one process required monitoring for the connector test. When the adapter was used, there were metrics for two processes, the XML server and the adapter.
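The sweep described above can be sketched in a few lines of Python. This is an illustrative outline only: `doubling_block_sizes` and `sweep` are hypothetical helper names, and `run_once` stands for whatever you use to start the model with a given block size and collect its timings, which is not shown here.

```python
def doubling_block_sizes(start=1, stop=256):
    """Return block sizes from start to stop, doubling at each step."""
    if start < 1:
        raise ValueError("start must be at least 1")
    sizes = []
    size = start
    while size <= stop:
        sizes.append(size)
        size *= 2
    return sizes


def sweep(run_once, sizes):
    """Call run_once(blocksize) for each size and collect the results.

    run_once is supplied by the caller (hypothetical here); it should
    run one iteration of the model and return its measurements.
    """
    return {size: run_once(size) for size in sizes}


if __name__ == "__main__":
    print(doubling_block_sizes())
```

With the defaults this yields nine test points per publish method, from one event per block up to 256.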

The Linux time command provides an easy way to capture elapsed and CPU times for a process. Simply specify the time command followed by the command you want to measure. Shown below is an example of usage with the output.

real 5m6.814s

user 0m17.925s

sys 0m14.736s

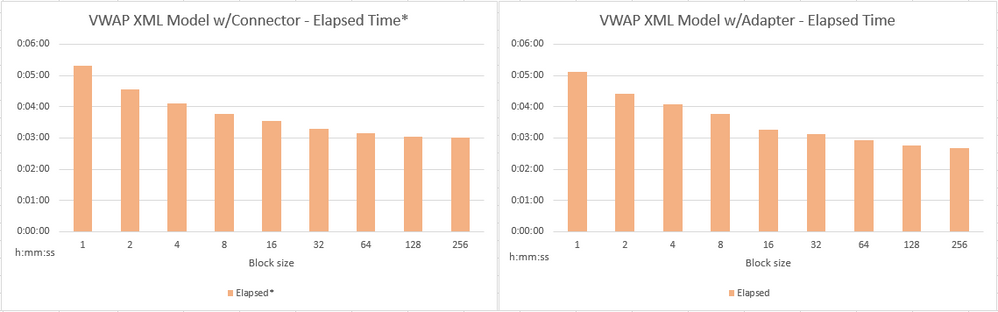

The “real” time shown is the elapsed time of the process (5 minutes and 6.8 seconds) and the “user” and “sys” values are user and system CPU time (17.9 and 14.7 seconds respectively). When using an adapter to publish events into a model, a discrete elapsed time is given because the adapter terminates when it encounters the end-of-file. When using the publish connector, however, it was necessary to terminate the XML server process manually after verifying that all events had been processed. As a result, the elapsed time for the publish connector is slightly less accurate.
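When collecting many runs, it helps to convert the time output into plain seconds. The following is a small sketch, assuming the default bash-style format shown above (for example `5m6.814s`); the function names are made up for illustration and other shells or a custom TIMEFORMAT would need a different pattern.

```python
import re

# Matches values such as "5m6.814s" or "0m17.925s" (minutes optional).
_TIME_VALUE = re.compile(r"^(?:(\d+)m)?(\d+(?:\.\d+)?)s$")


def to_seconds(value):
    """Convert a single time value like '5m6.814s' to seconds."""
    match = _TIME_VALUE.match(value.strip())
    if match is None:
        raise ValueError(f"unrecognized time value: {value!r}")
    minutes = int(match.group(1) or 0)
    return minutes * 60 + float(match.group(2))


def parse_time_output(text):
    """Extract the real, user and sys lines from `time` output."""
    result = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0] in ("real", "user", "sys"):
            result[parts[0]] = to_seconds(parts[1])
    return result


if __name__ == "__main__":
    sample = "real 5m6.814s\nuser 0m17.925s\nsys 0m14.736s\n"
    times = parse_time_output(sample)
    cpu = times["user"] + times["sys"]
    print(f"elapsed {times['real']:.3f}s, total CPU {cpu:.3f}s")
```

For the sample output above, the elapsed time works out to 306.814 seconds and the total CPU time (user plus sys) to 32.661 seconds.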

Evaluating CPU Time

Let's first look at CPU time. The charts below show the trend of CPU consumption by the model for a connector and an adapter as the block size is modified. It should be noted that the VWAP model is configured with four file and socket subscriber connectors that each write to a CSV file. As a result, a significant portion of the CPU time is expected to be consumed downstream of the source window, by the aggregate and compute windows and those subscribers. Nevertheless, the CPU time consumed by the model when using a source window connector fell noticeably, by 37%, when the block size was increased from 1 to 16. With the block size set to 256, the decrease was 49%.

To have a proper comparison with the model using the adapter, it was necessary to combine the CPU consumed by the adapter and the engine. With the block size set to 16, the total CPU time (adapter + engine) fell by 42% when compared to measurements taken at one event per block. The improvement continued as the block size was increased, but with diminishing returns.
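The arithmetic behind these comparisons is simple enough to sketch. The helper names below are hypothetical, and the numbers in the usage lines are invented placeholders chosen only to produce a round result; they are not the measurements from these tests.

```python
def total_cpu(*processes):
    """Sum user + sys CPU seconds over one or more processes.

    Each process is a (user_seconds, sys_seconds) pair, so an adapter
    run passes two pairs (adapter and engine) and a connector run one.
    """
    return sum(user + system for user, system in processes)


def percent_reduction(baseline, current):
    """Percentage by which current is lower than baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - current) / baseline


if __name__ == "__main__":
    # Placeholder figures for illustration only, not test results.
    at_blocksize_1 = total_cpu((30.0, 20.0), (35.0, 15.0))   # adapter, engine
    at_blocksize_16 = total_cpu((18.0, 11.0), (20.0, 9.0))   # adapter, engine
    print(f"{percent_reduction(at_blocksize_1, at_blocksize_16):.0f}% less CPU")
```

Summing both processes before comparing is what keeps the adapter figures on the same footing as the single-process connector figures.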

As for the comparison of CPU time between a connector and an adapter, the greatest variance occurred with a block size of one. At that setting, the model using an adapter to publish events used 10% more CPU than the model using a connector. However, as the block size grew, the variance diminished and was negligible at a block size of 16 or greater. This is not too surprising, as the two tests complete the same amount of work. The primary difference is that an adapter runs as a separate process while the connector is part of the engine (i.e. two processes vs. one process).

Reviewing Elapsed Time

As noted earlier, a measure of elapsed time was captured for each of the tests. The elapsed time in this case is a measure of how long it takes to process one million events in the VWAP model on a virtual machine with two cores. In a live production environment this measurement would not be useful. Instead, measures of throughput (events per second) and latency would be more useful.
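Elapsed time for a fixed number of events converts directly into an average throughput figure. A minimal sketch follows; the function name is made up, and the elapsed value in the usage line is a placeholder rather than a result from these tests.

```python
def events_per_second(event_count, elapsed_seconds):
    """Average throughput over a whole run."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return event_count / elapsed_seconds


if __name__ == "__main__":
    # One million events, as in the sample file; 250 s is a placeholder.
    rate = events_per_second(1_000_000, 250.0)
    print(f"{rate:.0f} events per second")
```

Note that this is an average over the run and says nothing about per-event latency, which has to be measured separately.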

To get a sense of how block size impacts the time required to process one million events, the elapsed time was captured. Since CPU time is a major component of elapsed time, it is no surprise that the graphs are similar. When running the model with a source window connector and a block size of 16, the elapsed time fell 33% when compared to a block size of one. Similarly, when run with an adapter instead of a connector, the elapsed time fell 36%. When executed with a block size of 256, the elapsed times fell 43% and 48% respectively.

As can be seen in the graphs, there is minimal difference between running the model with an adapter or a connector. The adapter was typically slightly faster, but it is suspected that most of the difference results from having to monitor the connector tests, which required manual termination of the server process to capture the timings.

Although no tests were run with a block size greater than 256, given the shape of the curve one can expect only small marginal gains when using a larger block size. In addition, large block sizes may increase latency, as the adapter or connector will have to "wait" for enough events to fill the block before it continues processing.
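To get a rough feel for the latency effect, consider a deliberately simplified model: events arrive at a steady rate, and a block is only sent once it is full. Under those assumptions (which are not a description of how any particular adapter or connector actually buffers or flushes), the first event in a block waits for the remaining events to arrive. The function name below is hypothetical.

```python
def worst_case_fill_wait(blocksize, arrival_rate):
    """Seconds the first event in a block waits for the block to fill.

    Assumes a steady arrival_rate (events per second) and that a block
    is dispatched only when it holds blocksize events. Both are
    simplifying assumptions made for illustration.
    """
    if blocksize < 1:
        raise ValueError("blocksize must be at least 1")
    if arrival_rate <= 0:
        raise ValueError("arrival_rate must be positive")
    return (blocksize - 1) / arrival_rate


if __name__ == "__main__":
    # 1000 events per second is an arbitrary example rate.
    for size in (1, 16, 256):
        wait_ms = worst_case_fill_wait(size, 1000.0) * 1000
        print(f"blocksize {size}: up to {wait_ms:.0f} ms added")
```

The added wait grows linearly with block size and shrinks as the arrival rate rises, so large blocks cost the most latency on slow or bursty sources.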

Final Thoughts

A review of these measurements for a file and socket adapter/connector shows that increasing the block size can substantially improve the throughput and efficiency of a model. Packing more events into a block reduces the overhead of managing and processing events. These measurements are specific to a file and socket adapter/connector, but one can expect that other adapters and connectors would see a measurable improvement as well. The key takeaway is to test a variety of block sizes when building and testing models. As these simple tests show, a single parameter can make a significant difference.
