- Home

- /

- SAS Communities Library

- /

- Loading Data from Python into CAS

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Loading Data from Python into CAS

- Article History

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Loading Data from Python into CAS

There are many ways of loading data into CAS. Some methods simply invoke actions in CAS that load data from files on the server. Other methods of loading data involve connections to data sources such as databases in CASLibs. But the last method is to load data from the client side using a language such as Python.

There are even multiple methods of loading data from Python into CAS. We will be introducing the different ways of getting your data into CAS from the Python CAS client here.

As usual, the first thing we need to do is to import SWAT and create a connection to CAS.

In[1]: import swat

In[2]: conn = swat.CAS(host, port, username, password)Now that we have our connection, let's start loading some data.

Loading Data using Pandas-like Parser Methods

It's possible to load data directly from the connection object that we just created. CAS connection objects support the same data readers that the Pandas package does. This includes read_csv, read_excel, read_json, read_html, etc. The CAS connection versions of these methods are merely thin wrappers around the Pandas versions. The data is read into a DataFrame and then uploaded to a CAS table behind the scenes. The result is a Python CASTable object. Let's look at reading a CSV file first.

In[3]: tbl = conn.read_csv('https://raw.githubusercontent.com/sassoftware/sas-viya-programming/master/data/cars.csv')

In[4]: tbl

Out[4]: CASTable('_PY_66d07017_1af4_4bfc_890a_4f102b294fd3_', caslib='CASUSERHDFS(kesmit)')

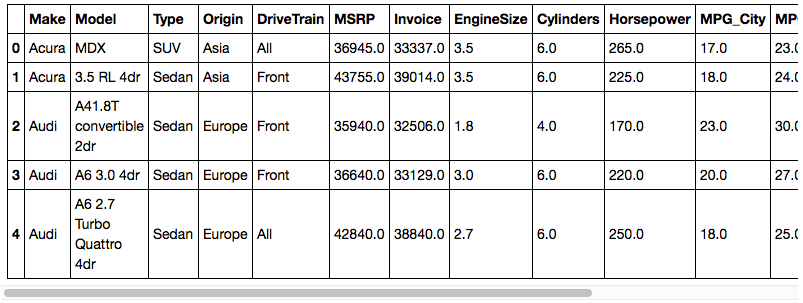

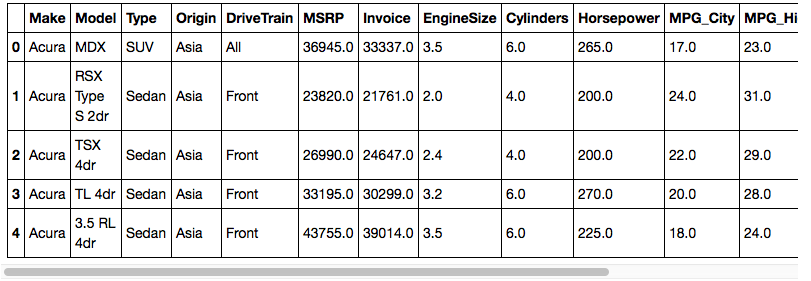

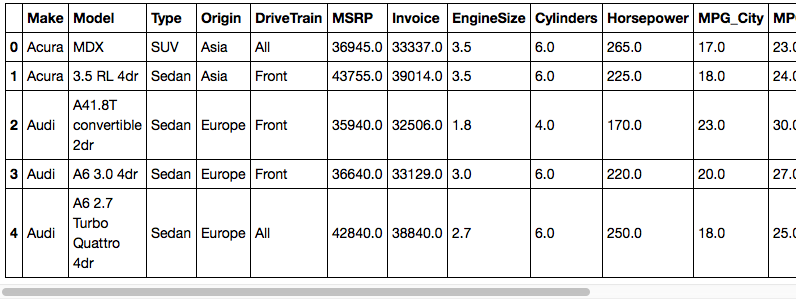

In[5]: tbl.head()

As you can see, getting CSV data into CAS from Python is pretty simple. Of course, if you have a large amount of data in CSV format, you may want to load it from a file on the server, but this method works well for small to medium size data.

To read data from an HTML file, we'll use the read_html method. In this case, we'll point it at a URL. Keep in mind, that this will download the URL to a local file then upload it to CAS. We also have to add the [0] to the end because the read_html will return a CASTable object for each table in the HTML file. We just want the first one.

In[6]: htmltbl = conn.read_html('https://www.fdic.gov/bank/individual/failed/banklist.html')[0]

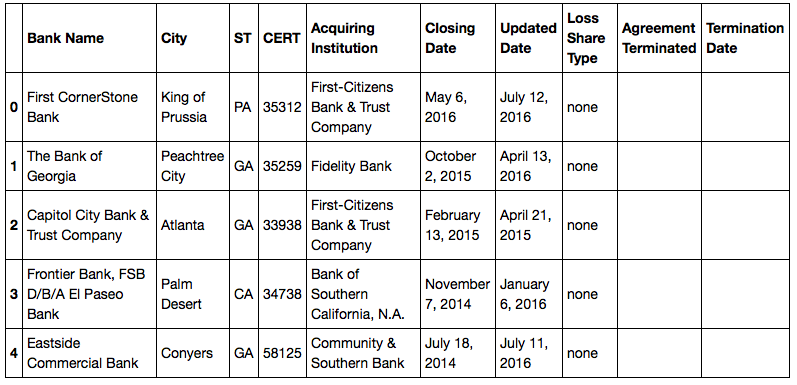

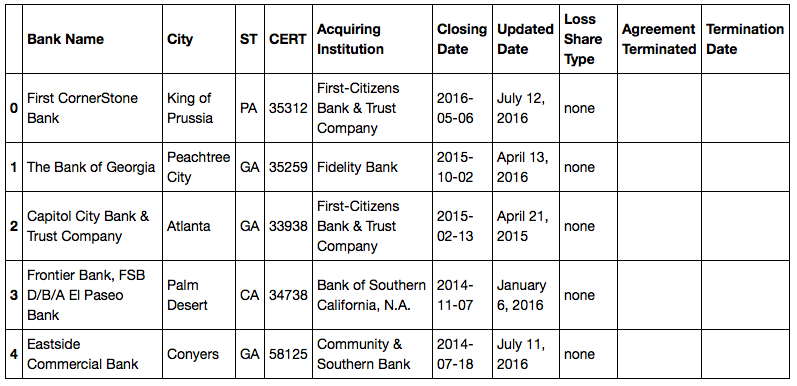

In[7]: htmltbl.head()

You'll notice that the 6th and 7th columns contain dates. We can use the parse_dates= parameter of read_html to convert those columns into CAS datetime values.

In[8]: htmltbl = conn.read_html('https://www.fdic.gov/bank/individual/failed/banklist.html',

parse_dates=[5, 6])[0]

In[9]: htmltbl.head()

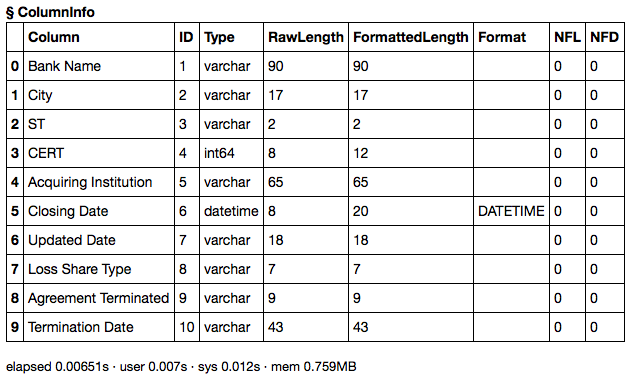

Here is the result of the table.columninfo action showing the data types of each column.

In[10]: htmltbl.columninfo()

All of the other read_XXX methods in Pandas work from CAS connection objects and support the same options. See the Pandas documentation for the other data reader methods and options.

Let's move on to the next method of loading data: the upload method.

Loading Data Using the upload Method

The next way of loading data is by sending a data file to the server using the upload CAS object method. In this style of loading data, the parsing of the file is done on the server-side; the file is simply uploaded as binary data and the table.loadtable action is run in the background. This has the benefit that the parsing can be done with much faster parsers than what can be done in Python. Some parsers can even execute in parallel so the parsing load is split across nodes in the CAS grid. The downside is that there aren't as many parsing options as what the Pandas data readers offer, so both methods still have their place. Let's look at code that uploads data files into CAS tables.

We'll start off with CSV files since they are ubiquitous.

In[11]: out = conn.upload('https://raw.githubusercontent.com/sassoftware/sas-viya-programming/master/data/cars.csv')

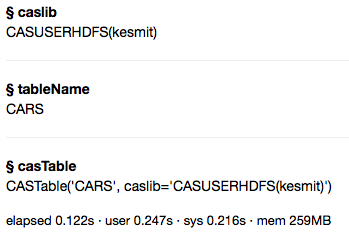

In[12]: out

When using the upload method on the CAS object, you'll get the result of the underlying table.upload action call. To get a reference to the CASTable object in that result, you just grab the casTable key.

In[13]: tbl = out['casTable']

In[14]: tbl.head()

The upload method works with any file type that the table.loadtable action supports, but you can also upload a Pandas DataFrame. One thing to note about doing it this way is that the DataFrame data is exported to CSV and then uploaded to CAS, so the resulting table will follow the same rules and limitations as uploading a CSV file.

In[15]: import pandas as pd

In[16]: import numpy as np

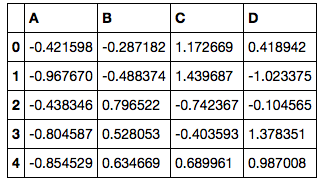

In[17]: df = pd.DataFrame(np.random.randn(50, 4), columns=list('ABCD'))

In[18]: df.head()

In[19]: dftbl = conn.upload(df)['casTable']

In[20]: dftbl

Out[20]: CASTable('TMPE50H5BF4', caslib='CASUSERHDFS(kesmit)')

In[21]: dftbl.head()

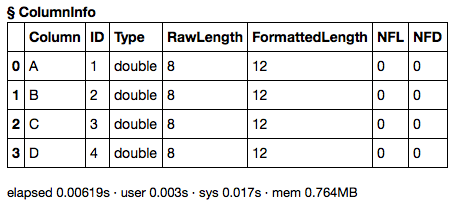

In[22]: dftbl.columninfo()

Using Data Message Handlers

The last method of loading data into CAS from Python is using something called "data message handlers" and the addtable action. The addtable action is a bit different than other actions in that it sets up a two-way communication from the server back to the client. Once the action is invoked, the server asks for chunks of data until there isn't any more data to upload. Doing this communication can be a bit tricky, so the SWAT package has some pre-defined data message handlers that can be used directly or subclassed to create your own.

You may not have realized it, but you have already used data message handlers in the first example. The read_XXX methods all use data message handlers to load the data into CAS. You can see all of the supplied data messsage handlers by looking at the subclasses of swat.cas.datamsghandlers.CASDataMsgHandler.

In[23]: from swat.cas import datamsghandlers as dmh

In[24]: print(dmh.CASDataMsgHandler.__subclasses__())

Out[24]: [<class 'swat.cas.datamsghandlers.PandasDataFrame'>, <class 'swat.cas.datamsghandlers.DBAPI'>]

In[25]: print(dmh.PandasDataFrame.__subclasses__())

Out[25]: [<class 'swat.cas.datamsghandlers.CSV'>, <class 'swat.cas.datamsghandlers.Text'>, <class 'swat.cas.datamsghandlers.HTML'>, <class 'swat.cas.datamsghandlers.SQLTable'>, <class 'swat.cas.datamsghandlers.Clipboard'>, <class 'swat.cas.datamsghandlers.SAS7BDAT'>, <class 'swat.cas.datamsghandlers.Excel'>, <class 'swat.cas.datamsghandlers.JSON'>, <class 'swat.cas.datamsghandlers.SQLQuery'>, <class 'swat.cas.datamsghandlers.FWF'>]You may notice that most of the data message handlers are just subclasses of the PandasDataFrame message handler. This is because they all convert their data into a Pandas DataFrame before uploading the data. This also means that the data must fit into memory to use them. There is one data message handler that doesn't work that way: swat.cas.datamsghandlers.DBAPI. The DBAPI message handler uses a Python DB-API 2.0 compliant database connection to retrieve data from a database and loads that into CAS. It only loads as much data as is needed for a single chunk. We'll just be looking at the CSV data message handler here. The rest follow the same pattern of usage.

To use the CSV data message handler, you just pass it the path to a CSV file. This creates an instance of the data message handler that can be used to generate the arguments to the table.addtable action and also handles the request from the server.

In[26]: cvs_dmh = dmh.CSV('https://raw.githubusercontent.com/sassoftware/sas-viya-programming/master/data/cars.csv')

In[27]: out = conn.addtable('cars', replace=True, **cvs_dmh.args.addtable)The action call arguments may look a little odd if you haven't used Python a lot. The args.addtable property of cvs_dmh contains a dictionary of parameter arguments. When you use the double-asterisk before it, it tells Python to apply the dictionary as keyword arguments to the function. The arguments generated by the data message handler are shown below.

In[28]: cvs_dmh.args.addtable

Out[28]: {'datamsghandler': <swat.cas.datamsghandlers.CSV at 0x7f4af1a70f98>,

'reclen': 160,

'vars': [{'length': 16,

'name': 'Make',

'offset': 0,

'rtype': 'CHAR',

'type': 'VARCHAR'},

{'length': 16,

'name': 'Model',

'offset': 16,

'rtype': 'CHAR',

'type': 'VARCHAR'},

{'length': 16,

'name': 'Type',

'offset': 32,

'rtype': 'CHAR',

'type': 'VARCHAR'},

{'length': 16,

'name': 'Origin',

'offset': 48,

'rtype': 'CHAR',

'type': 'VARCHAR'},

{'length': 16,

'name': 'DriveTrain',

'offset': 64,

'rtype': 'CHAR',

'type': 'VARCHAR'},

{'length': 8,

'name': 'MSRP',

'offset': 80,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'Invoice',

'offset': 88,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'EngineSize',

'offset': 96,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'Cylinders',

'offset': 104,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'Horsepower',

'offset': 112,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'MPG_City',

'offset': 120,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'MPG_Highway',

'offset': 128,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'Weight',

'offset': 136,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'Wheelbase',

'offset': 144,

'rtype': 'NUMERIC',

'type': 'SAS'},

{'length': 8,

'name': 'Length',

'offset': 152,

'rtype': 'NUMERIC',

'type': 'SAS'}]}The output of the addtable action includes a casTable key just like the upload method.

In[29]: tbl = out['casTable']

In[30]: tbl.head()

Just as with the read_XXX methods on the connection, the PandasDataFrame-based data message handlers take the same arguments as the read_XXX methods to customize their behavior. Using Pandas' HTML reader as an example again, we can upload data with dates in them. Note that in this case, we can specify the index of the HTML table that we want in the index= parameter.

In[31]: html_dmh = dmh.HTML('https://www.fdic.gov/bank/individual/failed/banklist.html', index=0, parse_dates=[5, 6])

In[32]: out = conn.addtable('banklist', replace=True, **html_dmh.args.addtable)

In[33]: tbl = out['casTable']

In[34]: tbl.head()

In[41]: conn.close()

Conclusion

In this article, we have demonstrated three ways of getting data from Python into CAS. The first was using the read_XXX methods that emulate Pandas' data readers. The second was using the upload method of the CAS connection to upload a file and have the server read it. The third was using data message handlers to add data using the addtable action. Each method has its strengths and weaknesses. The upload method is generally the fastest, but has the most limited parsing capabilities. The read_XXX methods are quick and convenient and give you the power of Pandas data readers. While we didn't get deep into data message handlers here, they allow the most power by allowing you to subclass them and write custom data loaders from any data source.

Resources

You can download the Jupyter notebook version of this article at https://github.com/sassoftware/sas-viya-programming/tree/master/communities.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

hello Kevin,

I have followed the steps you described and also tried to get cars.csv to Viya via Python. I have saved it as comma separated csv. In CAS everything has been loaded to first column. Pls advise what went wrong.

regards

Karolina

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and lock in 2025 pricing—just $495!

SAS AI and Machine Learning Courses

The rapid growth of AI technologies is driving an AI skills gap and demand for AI talent. Ready to grow your AI literacy? SAS offers free ways to get started for beginners, business leaders, and analytics professionals of all skill levels. Your future self will thank you.