LASR Memory – The Round Up

Numerous articles and blogs have been written over the past couple of years explaining LASR memory and the control knobs available to the administrator for managing it. In many cases the articles focused on a specific type of LASR server, distributed versus non-distributed; in others they were written from a platform perspective, Windows versus Linux. You may be aware that not all memory controls apply to all configurations, but it may not be obvious how the controls align with the platform and type of LASR server. In this blog we will round up the controls and introduce a view of the available controls in one location.

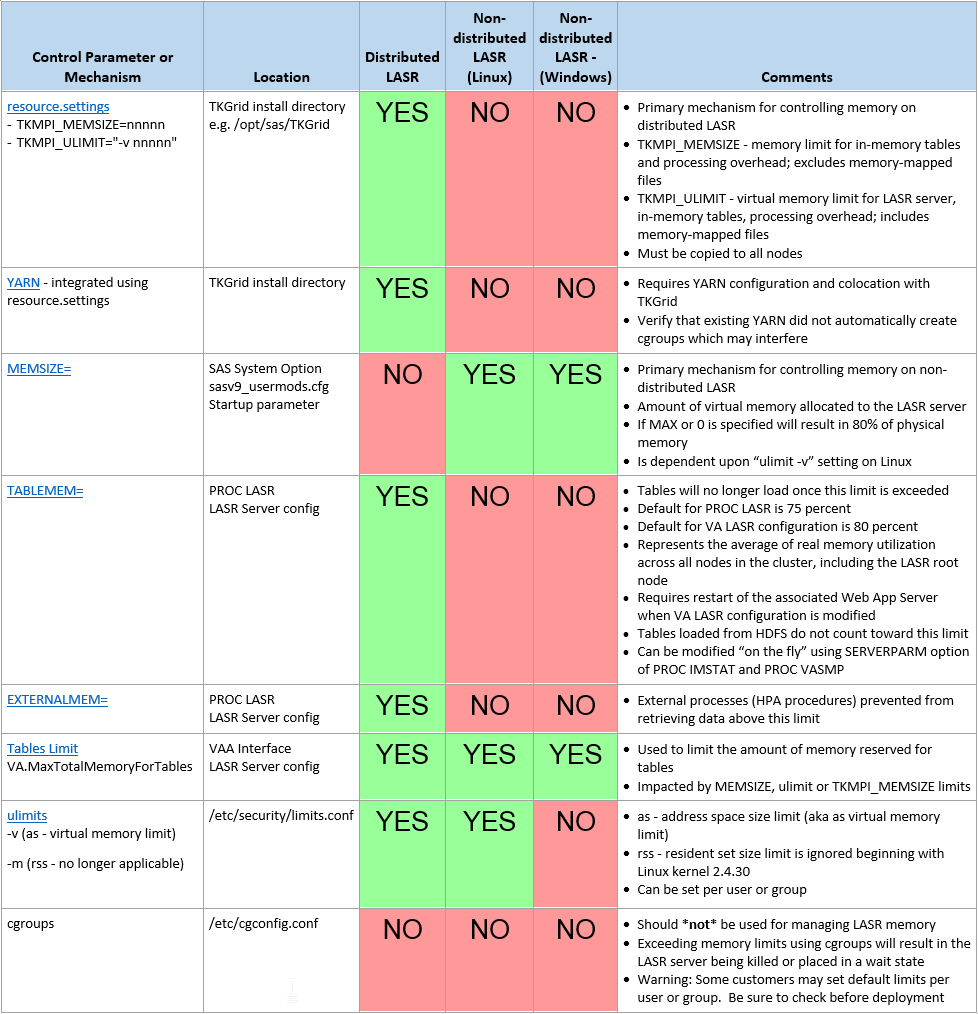

Creating a Controls Reference

While information on each of the memory controls for different configurations is available in our published documentation, it is spread across multiple documents, which requires a little extra time to research. After reflecting on this minor inconvenience, it seemed the next logical step was to assemble the topics into a cohesive view that enables the user to quickly identify the control knobs available for various configurations.

With this in mind, the following reference table has been generated to identify the various flavors of the LASR server and which controls may be used to manage memory. The first two columns identify the control parameter or mechanism and where it can be found. The following three columns show the variety of LASR servers and whether the control is applicable for each combination. The final column provides comments for each control parameter or mechanism. If you click on the image it will open a PDF that contains links to the appropriate documentation for each control.

As you can see there are many options and combinations. So let's walk through a brief review of each control beyond what is noted in the table.

resource.settings (distributed only)

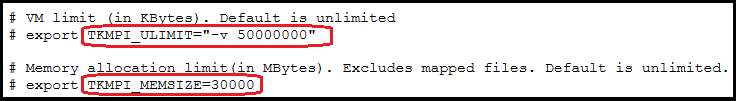

The TKMPI_MEMSIZE and TKMPI_ULIMIT parameters of the resource.settings script are picked up when a distributed LASR server starts and are the preferred method of controlling LASR memory allocation. There is a resource.settings file on each node of the cluster within the TKGrid deployment, so if it is changed, the change must be propagated to all of the nodes in the cluster. These settings are on a per-node basis, so setting TKMPI_MEMSIZE=30000 implies that the maximum amount of real memory used by LASR for data will be 30GB times however many worker nodes are configured. TKMPI_ULIMIT can be used to limit the virtual memory for a LASR process. This limit includes SASHDAT tables loaded from HDFS, whereas TKMPI_MEMSIZE does not.
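To make the per-node arithmetic concrete, here is a minimal Python sketch. The function name is hypothetical, and it assumes the value is in megabytes with 1000 MB per GB, which is how the 30000 = 30GB example above reads.

```python
def cluster_memory_limit_gb(tkmpi_memsize_mb: int, worker_nodes: int) -> float:
    """Aggregate real-memory ceiling for LASR data across all worker nodes, in GB.

    Assumption: TKMPI_MEMSIZE is expressed in MB and 1 GB = 1000 MB,
    matching the article's "30000 implies 30GB" example.
    """
    if tkmpi_memsize_mb < 0:
        raise ValueError("tkmpi_memsize_mb must be non-negative")
    if worker_nodes < 1:
        raise ValueError("worker_nodes must be at least 1")
    # The setting applies per node, so the cluster-wide ceiling scales with workers.
    return tkmpi_memsize_mb * worker_nodes / 1000


if __name__ == "__main__":
    # Four worker nodes, each capped at TKMPI_MEMSIZE=30000
    print(cluster_memory_limit_gb(30000, 4))
```

With four worker nodes the ceiling works out to 120 GB, which is why the same value can mean very different totals on clusters of different sizes.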

A sample resource.settings script is available after TKGrid installation in the root directory. It can easily be tailored to configure memory settings by application name or user. LASR servers must be restarted for changes to take effect. Here is a snippet from the resource.settings file.

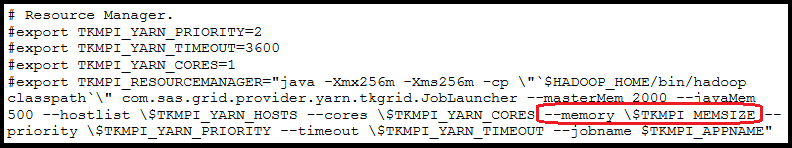

YARN (distributed only)

Integration with YARN is accomplished via configuration of the resource.settings script. Sample variables and settings for YARN are defined in this file but commented out. When configured to interact with YARN, TKGrid requests a reservation for a container on each node but will not proceed until all containers obtain a reservation. Since configuration is done via resource.settings, this file must be propagated across all nodes of the cluster.

The following options are available in the resource.settings script but are commented out by default.

MEMSIZE (non-distributed only)

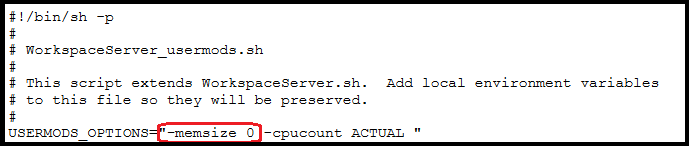

There is a good chance you are familiar with this parameter. Yes, this is the standard parameter used to manage memory for a non-LASR SAS session. But since a non-distributed LASR server is really a SAS session, it is also the primary parameter to manage the amount of memory allocated to a LASR server. There are multiple locations where this parameter can be specified, but most likely it is defined in a workspace server usermods script or configuration file. Typically this is set to 0 (zero) or MAX by default. When set to MAX, the limit is essentially set to 80% of physical memory. But keep in mind this setting is a limit on the amount of virtual memory, not real memory. Therefore, if multiple LASR servers are started with -memsize 0 and no other controls are defined, there is a risk of over-committing memory.
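The over-commit risk can be illustrated with a small Python sketch. It assumes, purely for illustration, that every non-distributed LASR server on the host is allowed the same virtual memory limit of 80% of physical memory; the function name is hypothetical.

```python
def virtual_commit_ratio(server_count: int, limit_percent: float = 80) -> float:
    """Sum of per-server virtual memory limits as a multiple of physical memory.

    Assumption: each server's limit is limit_percent of physical memory
    (the article's description of MAX) and no other control is in place.
    """
    if server_count < 0:
        raise ValueError("server_count must be non-negative")
    return server_count * limit_percent / 100


if __name__ == "__main__":
    # Three servers, each allowed 80% of physical memory
    print(virtual_commit_ratio(3))
```

Any ratio above 1.0 means the servers are collectively permitted to request more memory than the machine physically has.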

Screen shot of WorkspaceServer_usermods.sh.

Although it may seem logical that the REALMEMSIZE option would be a meaningful control of real memory, it appears it is only useful for specific PROCs. Brief testing indicated that modifying this setting had no impact on LASR server memory.

TABLEMEM (distributed only)

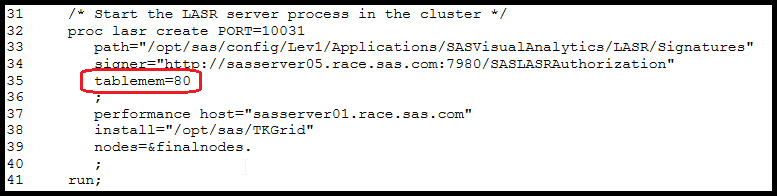

The TABLEMEM parameter specifies a memory utilization limit above which no further tables, either permanent or temporary, can be loaded. In this context memory utilization is measured across all nodes of a cluster. If a LASR server is started via Visual Analytics Administrator using the default configuration, the TABLEMEM value will be set to 80 percent. If the LASR server is started manually and TABLEMEM is not set in the code using PROC LASR, the default will be 75 percent. An existing value for a running LASR server can be modified using PROC VASMP and PROC IMSTAT.

Here is a snippet from the Last Action Log when starting a distributed LASR server. Notice the TABLEMEM option is set to 80. This is configured in metadata.

Careful consideration should be given to choosing a value in small configurations. For example, in a four-node cluster where root node memory utilization is 20% and worker node utilization is 95%, overall memory utilization will be (20+95+95+95) / 4 = 76.25 percent. Since this is below the 80 percent default set by VAA, it is still possible to load a large table, as the limit does not "kick in" until the threshold is exceeded. In this scenario the worker nodes are at risk of over-committing memory even though overall memory utilization is lower than the default value set when started by VAA.
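The four-node example can be reproduced with a short Python sketch. It assumes overall utilization is a simple average across nodes, as in the arithmetic above; how the server actually measures utilization may differ, and the function names are hypothetical.

```python
def overall_utilization(node_percentages):
    """Simple average of per-node memory utilization, in percent."""
    if not node_percentages:
        raise ValueError("at least one node is required")
    return sum(node_percentages) / len(node_percentages)


def can_load_table(node_percentages, tablemem=80.0):
    """True while overall utilization has not exceeded the TABLEMEM threshold."""
    return overall_utilization(node_percentages) <= tablemem


if __name__ == "__main__":
    nodes = [20, 95, 95, 95]  # one root node and three worker nodes
    print(overall_utilization(nodes))
    print(can_load_table(nodes, tablemem=80.0))  # VAA default
    print(can_load_table(nodes, tablemem=75.0))  # PROC LASR default
```

The same cluster passes the check at 80 percent but fails it at 75 percent, which shows how much a lightly used root node can mask heavily loaded workers.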

Finally, tables loaded from HDFS do not count toward memory utilization. Since they are memory mapped, they are not included in this limit.

EXTERNALMEM (distributed only)

The EXTERNALMEM parameter specifies a memory utilization value above which the server stops transferring data to external processes such as external actions and SAS High Performance Analytics procedures. So this is only used when working with HPA procedures.

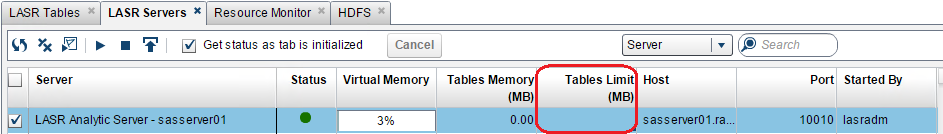

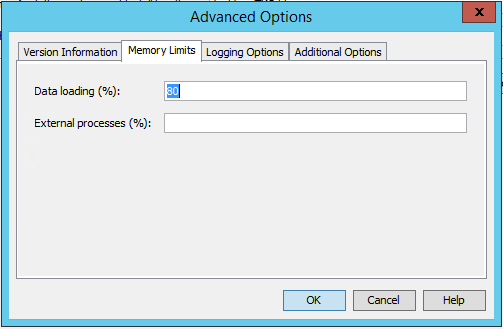

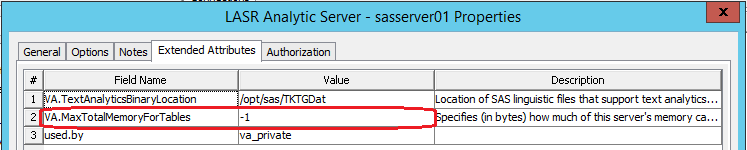

Tables Limit (distributed and non-distributed)

Introduced in Visual Analytics 7.1, this parameter allows the administrator to limit the amount of space for tables within a LASR server. This is the only parameter that applies to all flavors of LASR servers. It can be set within the Visual Analytics Administrator or using the "Extended Attributes" tab of the LASR server properties (screen shots below). Of course, the effective limit is still bounded by any ulimit, MEMSIZE or TKMPI_MEMSIZE setting that is in place.

ulimits (distributed and non-distributed on Linux)

Virtual memory limits can be imposed by the Linux operating system using ulimits, but this method is one that should be used sparingly. Since the goal is to manage resident (real) memory, this limit would not prevent a LASR server from exceeding the resident memory limit and could result in paging. This limit would likely be used in conjunction with another limit. For example, it could be used to set an upper bound on virtual memory, and then TKMPI_ULIMIT could be used to refine settings for distinct LASR servers. This setting usually defaults to unlimited.

Although the man page for ulimit indicates it is possible to set a resident set size limit (-m), this limit was disabled beginning with Linux kernel 2.4.30.

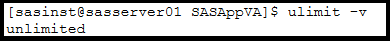

To check the current ulimit for virtual memory, issue the "ulimit -v" command.
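The same value can also be read programmatically. The following is a minimal sketch using Python's standard resource module on Linux; the function name is hypothetical. The kernel reports the address-space limit in bytes, so the sketch converts to the kilobyte units that "ulimit -v" prints.

```python
import resource


def virtual_memory_limit_kb():
    """Return (soft, hard) virtual memory limits for the current process.

    Values are in KB to match "ulimit -v"; "unlimited" is returned when no
    limit is set. RLIMIT_AS is the address-space limit that "ulimit -v" shows.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_AS)

    def fmt(value):
        # getrlimit reports bytes; RLIM_INFINITY means no limit is imposed.
        return "unlimited" if value == resource.RLIM_INFINITY else value // 1024

    return fmt(soft), fmt(hard)


if __name__ == "__main__":
    soft, hard = virtual_memory_limit_kb()
    print(f"soft: {soft}  hard: {hard}")
```

Note that this reports the limit of the process running the script, so it needs to run under the same account and shell environment that starts the LASR server to be meaningful.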

cgroups

It is possible to set a memory limit using cgroups, but this control should never be used. Unlike all of the other controls, which simply warn you that you have reached a limit and prevent an action, the cgroups out-of-memory controller will either place the LASR server in a wait state or kill it, depending on how it is configured. Therefore this control should be avoided for memory management. For new deployments it is worth checking that no cgroup is already configured, to avoid potential issues.
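One way to run that check is to look at the cgroup membership Linux exposes for a process in /proc/&lt;pid&gt;/cgroup. The sketch below parses that text under a few assumptions: the function name is hypothetical, cgroup v1 lines have the form "id:controllers:path", and cgroup v2 uses a single line with an empty controller field. A v2 entry is only a hint, since the memory controller may not be enabled for that group.

```python
def memory_cgroup_paths(proc_cgroup_text: str):
    """Return cgroup paths that may carry a memory limit for a process.

    Input is the content of /proc/<pid>/cgroup. A v1 line lists "memory"
    among its controllers; a v2 line has an empty controller field.
    """
    paths = []
    for line in proc_cgroup_text.splitlines():
        parts = line.strip().split(":", 2)
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        _hierarchy_id, controllers, path = parts
        if "memory" in controllers.split(",") or controllers == "":
            paths.append(path)
    return paths


if __name__ == "__main__":
    # Inspect the current process; substitute a LASR server PID as needed.
    with open("/proc/self/cgroup") as handle:
        print(memory_cgroup_paths(handle.read()))
```

A path other than "/" suggests the process sits in a non-root cgroup, in which case that group's memory limit (memory.limit_in_bytes on cgroup v1, memory.max on v2) is worth inspecting.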

Final Thoughts

Each customer will likely have unique requirements when configuring LASR servers. Sifting through the various methods of managing LASR memory during configuration can be challenging, but once you have a basic understanding of each of the controls it will be easier to spot and resolve issues. Remember that depending on the platform or type of LASR server, there may be more than one control configured.

Resources

Public doc

SAS LASR Analytic Server 2.8: Reference Guide

SAS® Visual Analytics 7.4: Administration Guide

SAS® High-Performance Analytics Infrastructure 3.6: Installation and Configuration Guide

SAS Note

Loading a table into a non-distributed version of the SAS LASR Analytic Server might fail with an "i...
