- Home

- /

- SAS Communities Library

- /

- How to enable Performance Logging for Data Management Studio / Server

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

How to enable Performance Logging for Data Management Studio / Server

- Article History

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Sometimes, your Data Management jobs will not run in the expected time, or you need to understand where the time is spent in your job. Performance Profiling exists for this.

How the log works

There are two modes of operation:

- Profile by node type – This will give you each node type (Cluster, Expression Matchcode, etc.) along with how many milliseconds were spent on each of three operations (prepare, pre-execute, execute), and how many times each was entered.

- Profile by node instance – This will give you each node instance and how many milliseconds were spent doing the same 3 operations for each, and how many times each was entered. The ID will correspond to the ID property of your job (as displayed in the node's Basic Settings), and will include the job name so you can see embedded jobs.

The output will be comma delimited and the columns are as follows for type 1 above:

- Operation - Can be one of NX (cumulative time spent processing rows), PR (time spent preparing), PX (time spent pre-executing)

- Node type - The type of node

- Time - Time in milliseconds

- Entries (v 2.2 and later) - Number of times we entered that code

Example output for 1 (action type, node type, milliseconds, entries):

Profiler dump node types NX,CASE,49698,1000001

NX,CLUSTER,63895,910332

NX,DEST_DELIMTEXT,131,6871

NX,EXPRESSION,11860,917203

NX,MATCHCODE,6105360,1000001

NX,MATCHPARSED,530428,3000003

NX,SORT,10851,910332

NX,SOURCE_DELIMTEXT,30970,1000001

NX,STANDARDIZE,1332685,1000001

PR,CASE,0,1

PR,CLUSTER,0,1

PR,DEST_DELIMTEXT,0,1

PR,EXPRESSION,52,2

PR,MATCHCODE,3883,1

PR,MATCHPARSED,0,3

PR,SORT,0,1

PR,SOURCE_DELIMTEXT,27,1

PR,STANDARDIZE,0,1

PX,CASE,0,1

PX,CLUSTER,253,1

PX,DEST_DELIMTEXT,17,1

PX,EXPRESSION,10,2

PX,MATCHCODE,0,1

PX,MATCHPARSED,0,3

PX,SORT,1,1

PX,SOURCE_DELIMTEXT,0,1

PX,STANDARDIZE,20,1

For type 2:

- Operation - Can be one of NX (cumulative time spent processing rows), PR (time spent preparing), PX (time spent pre-executing)

- Filename - The job filename

- Node instance - This corresponds to the id of that node in the job (iid under "step" tag in job xml file)

- Time - Time in milliseconds

- Entries (v 2.2 and later) - Number of times we entered that code

Example output for 2 (action type, job name, instance, milliseconds, entries):

Profiler dump node instances

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,15,1331014,1000001

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,17,207743,1000001

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,18,155413,1000001

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,19,171002,1000001

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,33,131,6871

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,34,10789,910332

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,35,4103,6871

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,36,8063,910332

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,38,30698,1000001

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,4,6102637,1000001

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,5,62961,910332

NX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,9,51321,1000001

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,15,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,17,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,18,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,19,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,33,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,34,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,35,3,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,36,48,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,38,26,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,4,3883,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,5,0,1

PR,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,9,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,15,20,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,17,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,18,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,19,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,33,17,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,34,1,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,35,10,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,36,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,38,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,4,0,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,5,252,1

PX,HDP_Duplicates_1.7_ServerTestProd27_1M.ddf,9,0,1

Enabling the log

To turn on the functionality, go into your app.cfg and add one or both of the following two lines, depending on which log you want to enable:

STEPENG/PROFILEBYNODE=true

STEPENG/PROFILEBYTYPE=true

On Windows and versions superior to 2.4 only, also set the following:

BASE/HIGH_PRECISION_TIMER=1

If not set, the timings might be less accurate. However, if this IS set, all threads will run on CPU core 1 which will decrease the performance of the program if it's using multiple threads:

Then you’ll need to make sure you are seeing the log output. So in your log xml file, add something like this:

<appender class="RollingFileAppender" name="ProfFile">

<param name="Append" value="false"/>

<param name="ImmediateFlush" value="true"/>

<rollingPolicy class="TimeBasedRollingPolicy">

<param name="fileNamePattern"

value="%S{OSENV.DIS_LOG_DIR}/profiler_service_%d_%S{pid}.log"/>

</rollingPolicy>

<layout>

<param name="ConversionPattern" value="%m"/>

</layout>

</appender>

<logger name="DF.RTProfiler">

<level value="Trace"/>

<appender-ref ref="ProfFile"/>

</logger>

The logs are pretty low volume so they shouldn’t affect performance or disk space.

Analyzing the log results

The output is comma delimited so you can pull it into excel. In order to analyse it, you can use Excel Pivot Table, which requires to have all fields splitted.

| Input | Operation | Node Type | Time | Entries |

| NX,CASE,49698,1000001 | NX | CASE | 49698 | 1000001 |

| NX,CLUSTER,63895,910332 | NX | CLUSTER | 63895 | 910332 |

| NX,DEST_DELIMTEXT,131,6871 | NX | DEST_DELIMTEXT | 131 | 6871 |

| NX,EXPRESSION,11860,917203 | NX | EXPRESSION | 11860 | 917203 |

| NX,MATCHCODE,6105360,1000001 | NX | MATCHCODE | 6105360 | 1000001 |

| NX,MATCHPARSED,530428,3000003 | NX | MATCHPARSED | 530428 | 3000003 |

| NX,SORT,10851,910332 | NX | SORT | 10851 | 910332 |

| NX,SOURCE_DELIMTEXT,30970,1000001 | NX | SOURCE_DELIMTEXT | 30970 | 1000001 |

| NX,STANDARDIZE,1332685,1000001 | NX | STANDARDIZE | 1332685 | 1000001 |

| PR,CASE,0,1 | PR | CASE | 0 | 1 |

| PR,CLUSTER,0,1 | PR | CLUSTER | 0 | 1 |

| PR,DEST_DELIMTEXT,0,1 | PR | DEST_DELIMTEXT | 0 | 1 |

| PR,EXPRESSION,52,2 | PR | EXPRESSION | 52 | 2 |

| PR,MATCHCODE,3883,1 | PR | MATCHCODE | 3883 | 1 |

| PR,MATCHPARSED,0,3 | PR | MATCHPARSED | 0 | 3 |

| PR,SORT,0,1 | PR | SORT | 0 | 1 |

| PR,SOURCE_DELIMTEXT,27,1 | PR | SOURCE_DELIMTEXT | 27 | 1 |

| PR,STANDARDIZE,0,1 | PR | STANDARDIZE | 0 | 1 |

| PX,CASE,0,1 | PX | CASE | 0 | 1 |

| PX,CLUSTER,253,1 | PX | CLUSTER | 253 | 1 |

| PX,DEST_DELIMTEXT,17,1 | PX | DEST_DELIMTEXT | 17 | 1 |

| PX,EXPRESSION,10,2 | PX | EXPRESSION | 10 | 2 |

| PX,MATCHCODE,0,1 | PX | MATCHCODE | 0 | 1 |

| PX,MATCHPARSED,0,3 | PX | MATCHPARSED | 0 | 3 |

| PX,SORT,1,1 | PX | SORT | 1 | 1 |

| PX,SOURCE_DELIMTEXT,0,1 | PX | SOURCE_DELIMTEXT | 0 | 1 |

| PX,STANDARDIZE,20,1 | PX | STANDARDIZE | 20 | 1 |

You can then pull out information of this type:

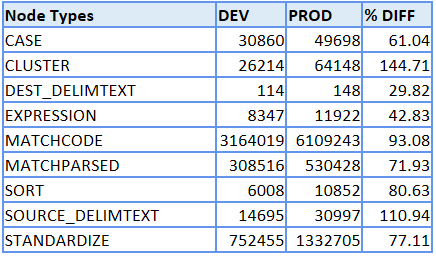

Total Time Spent on DEV, PROD and difference, by node type

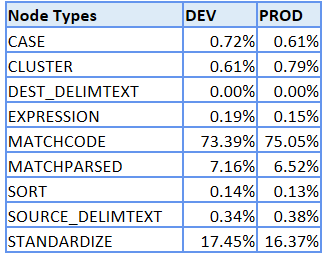

Percentage of Job's Time Spent on DEV, PROD, by Node Type

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and save with the early bird rate—just $795!

SAS AI and Machine Learning Courses

The rapid growth of AI technologies is driving an AI skills gap and demand for AI talent. Ready to grow your AI literacy? SAS offers free ways to get started for beginners, business leaders, and analytics professionals of all skill levels. Your future self will thank you.