ESP 4.3 Analytics Windows – First Look

What's new in ESP 4.3? Lots of things. What's most interesting to SAS' core analytics users? The analytics windows, of course.

Analytics Windows Overview

In ESP 4.3, there are five analytics windows. They are:

- Score

- Train

- Calculate

- Model Reader

- Model Supervisor

Score Window

As the name suggests, the Score window applies scoring and clustering algorithms to incoming events, generating output score and clustering events in real time. For example, the window can apply an Enterprise Miner-generated Random Forest scoring algorithm to incoming trade data to predict real-time asset prices.

In the 4.3 release, the ESP Score window can perform DBSCAN and KMEANS clustering as well as utilize ASTORE scoring algorithms.

Train Window

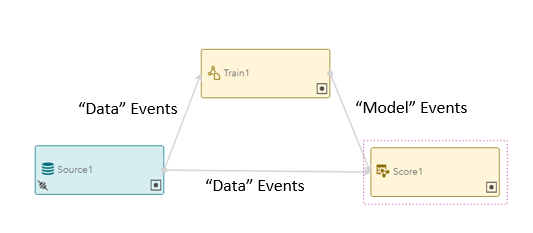

The Train window utilizes incoming events to develop/adjust scoring and clustering algorithms in real time. The Train window works together with the Score window. Once the Train window has trained or adjusted an algorithm, it outputs that algorithm to a Score window for real-time scoring/clustering. The frequency of model output and adjustments is subject to the Train window's parameters.

In the 4.3 release, the ESP Train window can train DBSCAN and KMEANS clustering algorithms (matching the Score window). When paired with the Score window, Train ESP models are referred to as "Online Training" models because the Train window is constantly adjusting the clustering algorithm and sending updates to the Score window "online."
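To make the train-then-score loop concrete, here is a minimal Python sketch of sequential (online) k-means. It is only an illustration of the idea, not ESP's KMEANS implementation: the class name, the method names, and the per-centroid learning rate are all assumptions, and a real Train window hands its model to a separate Score window at intervals set by its parameters rather than sharing one object.

```python
from typing import List, Sequence


class OnlineKMeans:
    """Sequential k-means: each incoming event nudges its nearest centroid."""

    def __init__(self, initial_centroids: Sequence[Sequence[float]]) -> None:
        self.centroids: List[List[float]] = [list(c) for c in initial_centroids]
        self.counts: List[int] = [0] * len(self.centroids)

    def score(self, point: Sequence[float]) -> int:
        """Return the index of the nearest centroid (the scoring step)."""
        def sq_dist(centroid: Sequence[float]) -> float:
            return sum((p - c) ** 2 for p, c in zip(point, centroid))
        return min(range(len(self.centroids)),
                   key=lambda i: sq_dist(self.centroids[i]))

    def train(self, point: Sequence[float]) -> None:
        """Move the nearest centroid toward the point (the training step)."""
        i = self.score(point)
        self.counts[i] += 1
        rate = 1.0 / self.counts[i]  # running-mean update for that cluster
        self.centroids[i] = [c + rate * (p - c)
                             for c, p in zip(self.centroids[i], point)]


# Hypothetical two-dimensional events, invented for this sketch.
model = OnlineKMeans([[0.0, 0.0], [10.0, 10.0]])
for event in [(1.0, 1.0), (9.0, 11.0), (0.5, 1.5)]:
    model.train(event)
label = model.score((8.0, 9.0))
```

The point of the sketch is that the model never stops changing: every event adjusts a centroid, and whatever scores the next event sees the adjusted version.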

Online Training

Model Reader Window

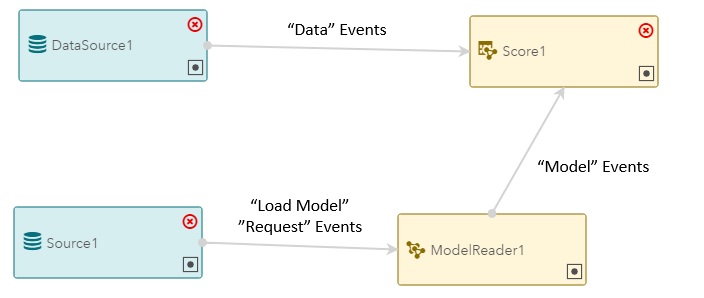

The Model Reader window receives external ASTORE scoring algorithm information in the form of "request" events and passes the ASTORE scoring algorithm information to Score windows in the form of "model" events.

When paired with the Score window, Model-Reader ESP models are referred to as "Offline Training" models because the ASTORE models are developed and updated offline. When a new/updated ASTORE model is ready, it is sent into the Score node via a Model Reader node. This is done as the ESP model is running ("on the fly").

Offline Training

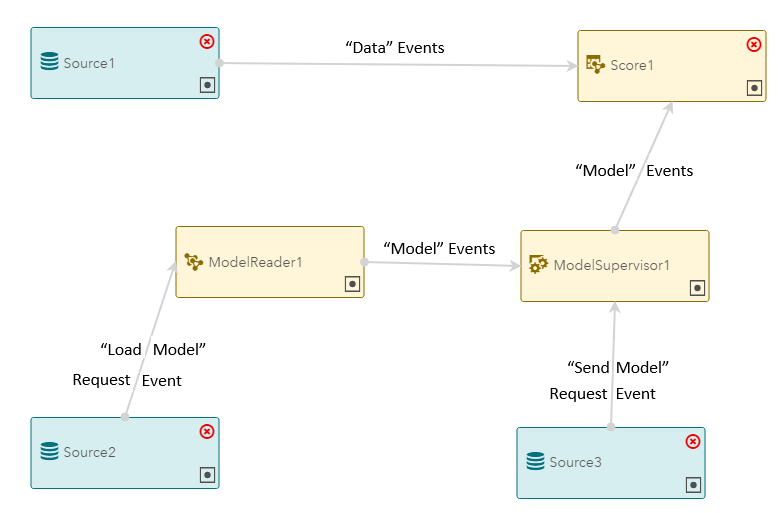

Model Supervisor Window

The Model Supervisor window stores multiple ASTORE algorithms and passes those algorithms to Score windows on demand. This way, scoring rules can be changed on the fly.

For example, a particular ESP scoring model might have numerous candidate scoring algorithms (say 10). As conditions change throughout the day, one algorithm might fit the situation better than the others, so a Send request would be sent to the Model Supervisor window to send that algorithm to the Score window. Later in the day, a different algorithm might fit better so a Send request would be sent for that one....
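The load-then-send pattern described here can be sketched in a few lines of Python. This is a conceptual illustration only: `ModelSupervisor`, `ScoreWindow`, and their methods are hypothetical names made up for the sketch, and plain Python functions stand in for ASTORE scoring algorithms.

```python
from typing import Callable, Dict, Optional

Model = Callable[[dict], float]  # stand-in for an ASTORE scoring algorithm


class ScoreWindow:
    """Scores events with whichever model was sent to it most recently."""

    def __init__(self) -> None:
        self.active: Optional[Model] = None

    def receive_model(self, model: Model) -> None:
        self.active = model

    def score(self, event: dict) -> float:
        if self.active is None:
            raise RuntimeError("no model has been sent to the scorer yet")
        return self.active(event)


class ModelSupervisor:
    """Holds several candidate models and sends one to the scorer on demand."""

    def __init__(self, scorer: ScoreWindow) -> None:
        self.scorer = scorer
        self.models: Dict[str, Model] = {}

    def load(self, name: str, model: Model) -> None:
        self.models[name] = model

    def send(self, name: str) -> None:
        self.scorer.receive_model(self.models[name])


# Two toy "models" with invented logic, swapped while events keep flowing.
scorer = ScoreWindow()
supervisor = ModelSupervisor(scorer)
supervisor.load("morning", lambda event: event["x"] * 2.0)
supervisor.load("afternoon", lambda event: event["x"] + 100.0)

supervisor.send("morning")
first = scorer.score({"x": 3.0})
supervisor.send("afternoon")
second = scorer.score({"x": 3.0})
```

The scorer itself never needs to know how many candidates exist. It only ever holds the one that was sent last.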

Model Supervisor

Calculate Window

The Calculate window creates real-time, running statistics based on a number of statistical/analytical techniques.

In the 4.3 release, the ESP Calculate window supports the following analytic algorithms:

- Correlation

- Distribution Fitting

- Segmented Correlation

- STFT

- Summary - Min, Max, Mean, Sum, Std, USS, ...

- Tokenization - String parsing

- Tutorial
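As a rough picture of what "running statistics" means for the Summary algorithm, the Python sketch below keeps min, max, mean, sum, standard deviation, and USS (uncorrected sum of squares) up to date one event at a time. It uses Welford's method for the variance, which is my choice for the sketch and not necessarily what ESP does internally. The class and attribute names are hypothetical, and the sketch ignores windowing parameters entirely.

```python
import math


class RunningSummary:
    """Updates summary statistics incrementally, one value per event."""

    def __init__(self) -> None:
        self.n = 0
        self.minimum = math.inf
        self.maximum = -math.inf
        self.total = 0.0
        self.uss = 0.0      # uncorrected sum of squares
        self.mean = 0.0
        self._m2 = 0.0      # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.n += 1
        self.minimum = min(self.minimum, x)
        self.maximum = max(self.maximum, x)
        self.total += x
        self.uss += x * x
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        """Sample standard deviation (n - 1 denominator)."""
        if self.n < 2:
            return math.nan
        return math.sqrt(self._m2 / (self.n - 1))


# Hypothetical stream of values, invented for this sketch.
summary = RunningSummary()
for value in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:
    summary.update(value)
```

Nothing is stored except a handful of accumulators, which is what makes this kind of calculation practical on an unbounded event stream.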

Example -- Offline Training, Model Supervisor

While I'd like to provide examples for each of the above, I need to keep this post to a manageable size. So I think a model supervisor example with offline ASTORE files probably hits most of the points people need to get any of this working. So here we go...

The astoreScoreRepository Model

The astoreScoreRepository model looks identical to the "Model Supervisor Window" picture above except that the window names are more descriptive.

The model xml is below. It contains all of the Source window schema definitions you'll need to send requests into the Model Reader and Model Supervisor windows.

The astoreScoreRepository Inputs

The model reads the trades1M.csv file from the ESP Examples directory. On my system, this is located at /opt/sas/viya/home/SASEventStreamProcessingEngine/4.3.0/examples/xml/vwap_xml. It will be somewhere similar on yours.

Beyond the input trades event stream, two requests are sent into the model. A "Load Model" request is sent via the modeRequestSource window to the ModelReader1 window. It registers two different ASTORE scoring algorithms. Additionally, a "Send Model" request is sent via the modelSupRequestSource window to the ModelSupervisor1 window. It sends one of the two scoring algorithms to the Score window.

The Load Model Request

The "Load Model" request is below. It loads two different ASTORE models into the Model Reader node -- score.sasast and score2.sasast. As the model XML shows, the message is published into the ESP model via a connector. In the model, it is located at /opt/sas/espcoursefiles/modelRequest2.csv.

(Note: You need the blank line at the end or the source window will not read the final message row.)
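If you generate request files from a script, a small guard can save some debugging. The Python sketch below assumes that "the blank line at the end" means the last row must be terminated by a newline character. That is my reading of the note, not something confirmed here. The function name and the demo file contents are hypothetical and do not reflect the real request schema.

```python
import tempfile
from pathlib import Path


def ensure_trailing_newline(path: Path) -> bool:
    """Append a newline if the last row lacks one. Return True if changed."""
    data = path.read_bytes()
    if not data or data.endswith(b"\n"):
        return False  # empty file, or the final row is already terminated
    path.write_bytes(data + b"\n")
    return True


# Demo on a throwaway file with placeholder rows (not a real request).
with tempfile.TemporaryDirectory() as tmp:
    request_file = Path(tmp) / "request.csv"
    request_file.write_bytes(b"row1\nrow2")
    changed = ensure_trailing_newline(request_file)
    changed_again = ensure_trailing_newline(request_file)
    fixed = request_file.read_bytes()
```

The second call returns False, so the helper is safe to run on files that are already correct.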

The Send Model Request

The "Send Model" request is below. It directs the Model Supervisor window to send the score.sasast ASTORE scoring algorithm to the Score node.

(Note: As before, you need the blank line at the end or the source window will not read the final message row.)

Unlike the load request, the send request is not delivered to its Source window via a connector. Instead, the ESP XML client is used. This allows time for the Model Supervisor window to register the two ASTORE algorithms. It also makes for a more realistic scenario: requests to change the scoring algorithm will come in after the model is up and running, via the REST API (e.g. the ESP XML client). The command used is shown below.

The ASTORE file

The ASTORE file used in this model was generated in Enterprise Miner. It uses the other variables in the trades1M.csv file to predict the price variable. The file uses a random forest methodology.

Since the example ESP model uses 2 ASTORE files, score.sasast and score2.sasast, simply copy and rename the ASTORE file linked above to make both files.
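The copy-and-rename step is easy to do by hand, but here is a minimal Python sketch in case you want to script it. The function name is made up, and the source file name in the demo is a placeholder because it depends on what you downloaded. Only score.sasast and score2.sasast come from the example.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List, Sequence


def make_astore_copies(source: Path, target_dir: Path,
                       names: Sequence[str] = ("score.sasast", "score2.sasast")
                       ) -> List[Path]:
    """Copy one ASTORE file to each of the names the example model expects."""
    target_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for name in names:
        destination = target_dir / name
        shutil.copyfile(source, destination)
        created.append(destination)
    return created


# Demo with a stand-in file; replace it with your downloaded ASTORE file.
with tempfile.TemporaryDirectory() as tmp:
    downloaded = Path(tmp) / "downloaded_model.bin"  # placeholder name
    downloaded.write_bytes(b"placeholder bytes, not a real ASTORE")
    copies = make_astore_copies(downloaded, Path(tmp) / "models")
    copy_names = [p.name for p in copies]
    identical = all(p.read_bytes() == downloaded.read_bytes() for p in copies)
```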

Odds and Ends

In addition to the overview and the example, here is some other information you'll need to make this all work.

The ESP XML Analytic Client

When integrating ASTORE files into ESP, you need to know two sets of values:

- The ASTORE Input Map - The list of input variables

- The ASTORE Output Map - The list of output variables

You can get these lists by registering the ASTORE file in ESP Studio (Score node). You can also get them using the ESP XML Analytics client. The following command reveals the input and output maps of the ASTORE used in the example above.

You'll get an output that looks like this:

When using an ASTORE, the Score node expects the input event stream to contain each field required by the ASTORE. It maps the input event stream to the ASTORE input map by field name.
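Mapping by field name is worth picturing because it explains the most common failure: a field the ASTORE needs is missing or misspelled in the event stream. The Python sketch below illustrates the idea only. The function name and the field names are hypothetical and are not taken from the example's actual input map.

```python
from typing import Any, Dict, List


def build_model_input(event: Dict[str, Any], input_map: List[str]) -> Dict[str, Any]:
    """Select, by name, the event fields that the model's input map requires."""
    missing = [name for name in input_map if name not in event]
    if missing:
        raise KeyError(f"event is missing fields required by the model: {missing}")
    # Extra event fields are simply ignored; only mapped names are passed on.
    return {name: event[name] for name in input_map}


# Hypothetical input map and events, invented for this sketch.
input_map = ["x1", "x2"]
good_event = {"id": 7, "x1": 1.5, "x2": 2.5, "note": "extra field"}
model_input = build_model_input(good_event, input_map)

try:
    build_model_input({"id": 8, "x1": 1.5}, input_map)
    error_message = ""
except KeyError as exc:
    error_message = str(exc)
```

Field order in the event does not matter in this scheme. Only the names have to line up.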

The ASTORE output fields are mapped to the Score node's output event stream using the output-map XML tag. You can see this in the example XML above. ESP Studio populates this map for you using drop-down boxes.

Reconfig Requests

In addition to all of the functionality above, users can also issue requests to reconfigure ESP Analytics window parameters while models are running. Both the Train and Calculate windows accept reconfig requests. As an example, a user might wish to adjust the WindowLength parameter of a Correlation analysis. That user would send a reconfig request into that window (via a Source window).

A reconfig request has the same schema as any other request. An example looks like this:

Edge Roles

To facilitate both "data" events and "request" events (as well as "model" events), ESP 4.3 is enhanced with a new Edge parameter, Role. The role identifies the incoming event stream as either "data," "request," or "model." ESP Studio takes care of adding the correct role for you, but when you're building your own XML or debugging your ESP Studio models, be sure to check that the role assigned matches the event stream. An example is shown below.
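Conceptually, the role is a label on the edge that tells the receiving window how to treat what arrives. The Python sketch below illustrates that idea with hypothetical `Edge` and `Window` classes and an invented window name. It is not ESP XML and says nothing about the real edge syntax.

```python
from typing import Any, Dict, List

VALID_ROLES = ("data", "request", "model")


class Window:
    """Collects incoming events in separate buckets, one per edge role."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.received: Dict[str, List[Any]] = {role: [] for role in VALID_ROLES}

    def on_event(self, role: str, event: Any) -> None:
        self.received[role].append(event)


class Edge:
    """Connects to a target window and tags everything it carries with a role."""

    def __init__(self, target: Window, role: str) -> None:
        if role not in VALID_ROLES:
            raise ValueError(f"unknown role {role!r}; expected one of {VALID_ROLES}")
        self.target = target
        self.role = role

    def publish(self, event: Any) -> None:
        self.target.on_event(self.role, event)


# A scoring window fed by a data edge and a model edge (names are invented).
score_window = Window("Score1")
data_edge = Edge(score_window, "data")
model_edge = Edge(score_window, "model")

data_edge.publish({"x": 1.0})
model_edge.publish("model payload placeholder")

try:
    Edge(score_window, "modle")  # a typo in the role is rejected up front
    bad_role_rejected = False
except ValueError:
    bad_role_rejected = True
```

A mismatched role is the kind of mistake this check is meant to catch: the events arrive, but the window treats them as the wrong kind of input.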

ESP Analytics Software Package

SAS ESP Analytics is a separate package that must be licensed in addition to the base ESP engine. In order to run the analytics package, the ESP server needs access to the SAS TK shared objects. This is done using the plugindir command line option. See below for an example.

Hi, thank you very much for this article! It's very helpful!

I'm working on a project that requires scoring a data stream with a model developed in Viya Model Studio. I tried to follow the steps in your article, but I have a few questions and hope you can give me some advice.

For your information, our current ESP server (version 6.2) is not connected to our Viya environment. Therefore, I have downloaded the ASTORE file using the code below in SAS Studio:

    libname mrstore cas caslib=ModelStore;

    proc cas;
       table.loadTable
          caslib="ModelStore"
          casOut={caslib="ModelStore" name="<model name>"}
          path="<model name>.sashdat";
    run;

    proc astore;
       download rstore=mrstore.<model name>
          store=" <path>/<model name>.astore";
    run;

My questions are:

1. Does the modelRequest2.csv described in your example need to be created manually? I don't have that file at the moment. Should I create a CSV in a similar format?

2. I noticed that your astore file has an extension of ".sasast", but mine is ".astore" (the code is provided by our SAS consultant). Does the file extension matter?

I'm a new user of ESP Studio, so any help would be much appreciated!

Thanks,

Yiping

It looks like you are on a newer version than I wrote about here. The following two posts should provide you with more applicable information on your particular version:

https://communities.sas.com/t5/SAS-Communities-Library/Demystifying-ESP-5-2-Streaming-Analytics/ta-p...

http://sww.sas.com/blogs/wp/gate/28158/esp-5-2-model-manager-integration/franir/2019/03/13

I realize you said your Viya and ESP envs were not integrated but wanted to point out the 2nd post in case you ever get there.

As for your questions,

1. Yes, you’ll need to inject a “Load Model Request” event into your ESP server somehow. This could come from a message broker or just a manually created static CSV file as shown in the example. Either way, you’ll need to create it. I realize not every project is using sophisticated components like message brokers so I chose the “lowest common denominator” (a static CSV file) to illustrate the concept.

2. The extension shouldn’t matter.

Steve

Hi Steve,

Thank you very much for your replies!

I have come across the first post when I was searching for suggestions online, but I'll check out the second post.

For the "Load Model Request" event, we don't have a message broker, so I'll try to create a CSV file as you suggested.

May I ask you one more question about models like Decision Trees if you don't mind? I need to import two models into my ESP project at the moment, one is a Random Forest model and the other one is a Decision Tree. For Random Forest, I have downloaded its ASTORE file using the SAS code I mentioned previously. However, I realized that there isn't an ASTORE file for Decision Tree as ASTORE files are only available for a few algorithms. In this case, do you have any suggestions on how to import the Decision Tree model into ESP Studio? Have you come across this before?

Thank you very much! Have a nice day ahead!

Thanks,

Yiping

You can integrate DS2 code using an ESP Calculate window. An example in the documentation is here, https://go.documentation.sas.com/?cdcId=espcdc&cdcVersion=6.2&docsetId=espan&docsetTarget=p0wtq76jm9....

If the code is DATA Step, you’ll need to convert it to DS2. You can see about that here, https://go.documentation.sas.com/?docsetId=ds2ref&docsetTarget=n1cqq0sufrlezjn168xd4l5wuhuc.htm&docs....

You can also integrate DATA Step (without converting to DS2) using the Procedural window. However using DS2 will give you better performance and scaling as it is thread capable. Info on DATA Step in the Procedural window is here, https://go.documentation.sas.com/?cdcId=espcdc&cdcVersion=6.2&docsetId=espcreatewindows&docsetTarget....

Hi Stephen,

Thank you very much for the links!

I developed the Decision Tree using Model Studio, and I'll check out that documentation.

Have a nice day ahead!

Thanks,

Yiping
