
Creating custom SAS Viya topologies – realizing the workload placement plan


In this article, we look at creating custom SAS Viya deployment topologies that realize your workload placement plan. We work through a couple of examples to share some configuration specifics of using custom node labels and taints.

The first scenario is an organization that wants to dedicate nodes to functions such as SAS Micro Analytic Service (MAS), SAS Event Stream Processing (ESP) and/or SAS Container Runtime (SCR) pods. To illustrate this, we will examine the MAS configuration. The second scenario is a shared cluster (one running multiple SAS Viya environments) where you want to dedicate nodes to an environment, for example, dedicating a different set of nodes to CAS for each environment.

Let’s look at the details…

Create your workload placement plan

Part of planning the target topology is to create (design) the workload placement plan. So, before we get into the details of the SAS Viya configuration, we should first take a look at my test environment.

My desired topology is to have two SAS Viya environments, production and discovery, running on my Kubernetes (K8s) cluster.

I wanted to isolate and dedicate nodes to the CAS Server in each environment. To implement the plan, I needed to use different node instance types for the two CAS node pools. My production environment is to run an SMP CAS Server, so I wanted a larger node instance type. The discovery environment is to run an MPP CAS Server, so I used a smaller node instance type, as the plan is to scale out by running multiple CAS Workers. Another reason you might use different node pools is if one CAS Server needed GPUs and the other didn’t.

In this example we have the “CAS (prod)” node pool for production and the “CAS (nonprod)” node pool for discovery.

In addition to dedicated CAS nodes, I also wanted to dedicate nodes to running the SAS Micro Analytic Service (sas-microanalytic-score) pods. This requires an additional node pool for these pods, which I called “realtime”. You might do this to protect and dedicate resources to running the MAS pods.

The default configuration declares SAS Micro Analytic Service pods as a member of the “stateless” workload class, so the MAS pods would be scheduled to run on the same nodes as all the other stateless pods in SAS Viya. This is not desirable when the models and decision flows have been embedded into real-time transactions supporting business processes.

The rest of the node pools (nodes) are shared by both SAS Viya environments.

Figure 1 illustrates my workload placement plan.


Figure 1. Target topology running two SAS Viya environments

Now that we have looked at the drivers for creating this topology, let’s discuss how to implement it.

To achieve this strategy, we coordinate Kubernetes node labels and node taints with the pod fields for node affinity and tolerations. At the node level, the two key tools are node labels and taints. Remember that node labels are used to attract pods, but a node label doesn’t stop Kubernetes from scheduling other pods to that node; taints are what keep pods away from a node. Once you have applied a node taint, pods need a toleration for that taint to be able to run on the tainted node(s). Without the toleration, a pod cannot be scheduled on a node with a NoSchedule taint.
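To make the pairing concrete, here is a minimal sketch of both sides using the realtime label and taint from my plan. These are abridged, hand-written fragments rather than output from my cluster, and the node name is hypothetical. Note that the toleration on its own only permits the pod to land on the tainted node. It is node affinity that attracts the pod there.

```yaml
# Node side (abridged; the node name is hypothetical).
# The label attracts pods that select it; the NoSchedule taint repels
# every pod that does not tolerate it.
apiVersion: v1
kind: Node
metadata:
  name: aks-realtime-00000000-vmss000000
  labels:
    workload/mas: realtime
spec:
  taints:
    - key: workload/class
      value: realtime
      effect: NoSchedule
---
# Pod side (abridged pod template spec).
# The toleration allows scheduling onto the tainted node but does not
# by itself attract the pod to it.
spec:
  tolerations:
    - key: workload/class
      operator: Equal
      value: realtime
      effect: NoSchedule
```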

Node affinity can be implemented in a strict or a preferred way. Enforcing strict placement rules with the requiredDuringSchedulingIgnoredDuringExecution field means that a pod will only be scheduled if a node is available whose label(s) match the pod’s node affinity definition. These could be the existing SAS labels or any label(s) that have been added to the pod’s node affinity via transformers. If no such node is available, the pod stays in a Pending state, with an event message saying that no nodes are available that match the scheduling criteria.
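Here is a minimal sketch of strict placement. It assumes the realtime label from my plan and is an abridged pod template spec, not the SAS default manifest. One detail is worth knowing. All matchExpressions inside a single nodeSelectorTerms entry must match (logical AND), while separate nodeSelectorTerms entries are alternatives (logical OR). That is why appending an expression to nodeSelectorTerms/0/matchExpressions adds a requirement on top of the existing ones.

```yaml
# Abridged pod template spec: strict placement.
# The pod stays Pending unless some node carries workload/mas=realtime.
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:            # expressions within one term are ANDed
              - key: workload/mas
                operator: In
                values:
                  - realtime
```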

With the preferred method, via the preferredDuringSchedulingIgnoredDuringExecution field, the pods might run on a node outside of the target node pool. The plus side of preferred scheduling is that the pod will run somewhere if resources (nodes) are available. The downside is that the pod may run on a node that was not the first (preferred) choice.
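For comparison, here is a sketch of the preferred form, again abridged and illustrative. Each preference carries a weight from 1 to 100. For every node that satisfies a preference, the scheduler adds that weight to the node's score. Matching nodes are therefore favored, but non-matching nodes remain eligible.

```yaml
# Abridged pod template spec: preferred placement.
# Nodes labeled workload/mas=realtime score higher, but if none has room
# the pod can still be scheduled on any other node it tolerates.
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100                    # valid range is 1-100
          preference:
            matchExpressions:
              - key: workload/mas
                operator: In
                values:
                  - realtime
```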

There isn’t a right or wrong way to implement the workload placement plan, but you can definitely get “unexpected” results!

Creating the Kubernetes cluster

The first step was to create my Kubernetes cluster. For this I used the Microsoft Azure Kubernetes Service (AKS) and the SAS Viya 4 Infrastructure as Code (IaC) for Microsoft Azure GitHub project. There are also IaC projects available for Amazon Web Services (AWS) and Google Cloud Platform (GCP).

As previously discussed, as part of creating the workload placement plan you need to define the node pools and the labels and taints to be used.

When creating the custom labels and taints you need to define the naming. While it would be possible to extend the SAS definitions (workload.sas.com/class), I do not recommend this for the following reasons:

- Defining new classes under workload.sas.com could lead to confusion when working with SAS Technical Support, and

- There is no guarantee that SAS won’t use a key that you have defined in the future.

Therefore, I recommend using a new naming scheme.

You should create your labels so that they can be “stacked”, that is, so that you can have more than one label on a node. The problem with following a schema like ‘workload.sas.com/class’ is that the label key ‘workload.sas.com/class’ can only have one value on a given node or node pool. For example, a node can have ‘workload.sas.com/class=stateful’ or ‘workload.sas.com/class=stateless’, but not both at the same time.

Let me explain a little more by extending my example. Perhaps I had a second non-production environment called ‘UAT’ for testing and I wanted to share the “CAS (nonprod)” node pool for both discovery and UAT. Then I could create a set of environment labels. For example, I could create the following labels:

- ‘environment/prod’

- ‘environment/discovery’

- ‘environment/uat’

I could then apply both non-production environment labels (environment/discovery and environment/uat) to the nodes in the “CAS (nonprod)” node pool. The labels can be “stacked” because there is no collision between the two labels (their keys are different). In fact, in this example all three labels could be applied to a node.

This is not possible with the ‘workload.sas.com/class’ label. You cannot put workload.sas.com/class=stateful AND workload.sas.com/class=stateless on the same node, because both use the same key and a label key can only have a single value per node.
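As an illustration of stacking, here are the labels a node in the “CAS (nonprod)” pool might carry if discovery and UAT shared it. This is an abridged sketch. The UAT environment and the ‘shared’ values are hypothetical.

```yaml
# Abridged node metadata for a hypothetical "CAS (nonprod)" node shared by
# the discovery and UAT environments.
metadata:
  labels:
    workload.sas.com/class: cas      # a single key holds a single value
    environment/discovery: shared    # distinct keys, so these labels stack
    environment/uat: shared
    # workload.sas.com/class: stateless   # not possible: duplicate key on the same node
```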

Finally, I would avoid giving labels values like: “true” or “false”, “yes” or “no”, “1” or “0”, or any reserved keyword, as this can cause errors when you build the site.yaml (kustomize build) or at deployment time.
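Here is a short sketch of why these values are risky. Kubernetes label values must be strings. YAML, however, reads unquoted true and false as booleans (and, with some YAML 1.1 parsers, yes and no as well), and 1 or 0 as integers. The keys and values below are illustrative only.

```yaml
# Abridged node metadata; example/flag is a hypothetical key.
metadata:
  labels:
    environment/prod: only          # plain string: safe
    # environment/prod: true        # parsed as a boolean, not valid for a string label value
    # environment/prod: 1           # parsed as an integer, same problem
    example/flag: "true"            # quoting keeps it a string, but a descriptive value is clearer
```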

In my example deployment, I include the custom labels that indicate which nodes are for the production and non-production (discovery) environments, and the realtime (MAS) nodes. As can be seen from the following table I have “stacked” my labels to use both approaches (the original workload.sas.com/class schema and an additional environment schema) for the CAS node pools.

For my testing I used the following:

| Node pool name | Labels | Taints |
|---|---|---|
| cas | workload.sas.com/class=cas, environment/prod=only | workload.sas.com/class=cas |
| casnonprod | workload.sas.com/class=cas, environment/nonprod=shared | workload.sas.com/class=cas |
| realtime | workload/mas=realtime | workload/class=realtime |

For the environments, I created the ‘environment/prod’ and ‘environment/nonprod’ labels. As discussed above, I could have also used ‘environment/discovery’. If I had multiple non-production environments, using environment/<name> provides a very flexible approach, and allows the labels to be “stacked” on the nodes.

You might notice from the table above that I haven’t added an environment taint on the cas node pools. While this is possible, it brings me back to preferred scheduling rules which I will discuss a bit later.

It is also important that you don’t over-taint your nodes, as this can cause additional administration and maintenance work. For example, if you are using a monitoring application that needs a pod running on each node in the cluster, you would have to add tolerations for ALL the taints applied to the nodes so that the monitoring application can be scheduled and run successfully.

So, this is definitely a case of “less is more”. That is, the fewer taints you have the less additional work you will have to do. In my example, you will notice that I defined a new label and taint for realtime, but leveraged the existing workload.sas.com/class=cas taint for the isolated cas pools. This was possible because CAS already tolerates that node taint out of the box.
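To illustrate the monitoring example, here is a sketch of the tolerations a node-level monitoring DaemonSet would need for the taints in my plan. It is abridged, and the DaemonSet itself is hypothetical. Kubernetes also accepts a toleration with operator Exists and no key, which matches every taint. That is convenient, but it also lets the pod onto every tainted node, so use it deliberately.

```yaml
# Abridged pod template of a hypothetical node-level monitoring DaemonSet.
spec:
  template:
    spec:
      tolerations:
        # Option 1: one toleration per custom taint in the cluster.
        - key: workload/class
          operator: Equal
          value: realtime
          effect: NoSchedule
        - key: workload.sas.com/class    # Exists with a key: any value of this key
          operator: Exists
          effect: NoSchedule
        # Option 2 (instead of the list above): tolerate every taint.
        # - operator: Exists
```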

Finally, I would like to explain my approach to the realtime label. I could have created a label called ‘workload/class’, but then I wouldn’t be able to put ‘workload/class=realtime’ and ‘workload/class=batch’ on the same node. Therefore, thinking about the types of real-time processing, I decided on the following naming:

- workload/mas=realtime

- workload/scr=realtime

- workload/esp=realtime

You could use the label value to indicate the type of workload, for example, realtime, batch, critical, etc. Hence the ‘workload/mas=realtime’ label. The realtime configuration also demonstrates that labels and taints are independent and can be different: the realtime nodes are labeled ‘workload/mas=realtime’ but tainted ‘workload/class=realtime’.

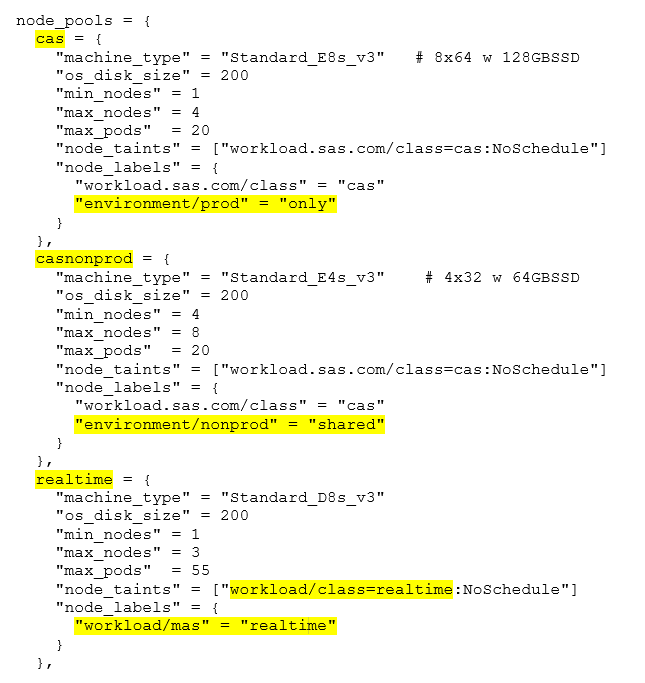

Creating the node pools (using the IaC)

The good news is that the IaC projects allow you to create additional node pools; you are not limited to the five standard SAS node pools. You can also add your own labels and taints.

The only restriction that I found is that the node pool names must use lowercase (a-z) and 0-9 characters. Punctuation and special characters are not allowed. My original idea was to call the second cas node pool ‘cas-nonprod’, but this is not possible. There is also a limit of 12 characters for the node pool names.

The configuration is shown in the following extract from the Terraform configuration.

Figure 2. Terraform IaC configuration snippet

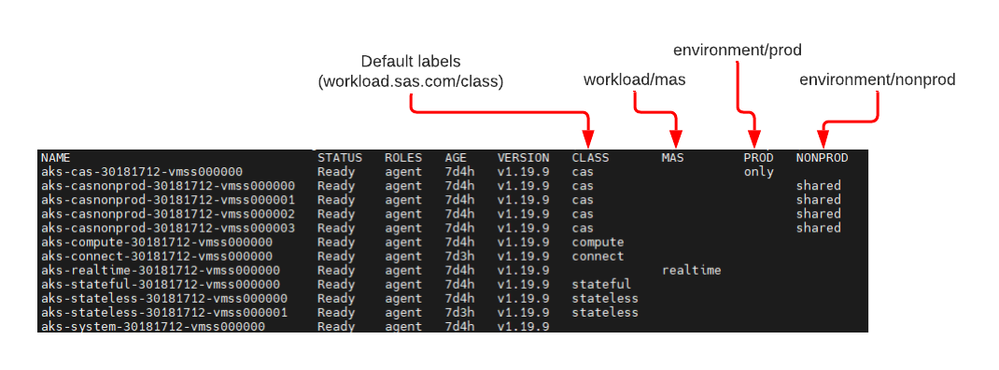

To confirm the labels that have been assigned to the nodes, I used the following command:

```shell
kubectl get nodes -L workload.sas.com/class,workload/mas,environment/prod,environment/nonprod
```

The sample output is shown in Figure 3.

Figure 3. Displaying node labels

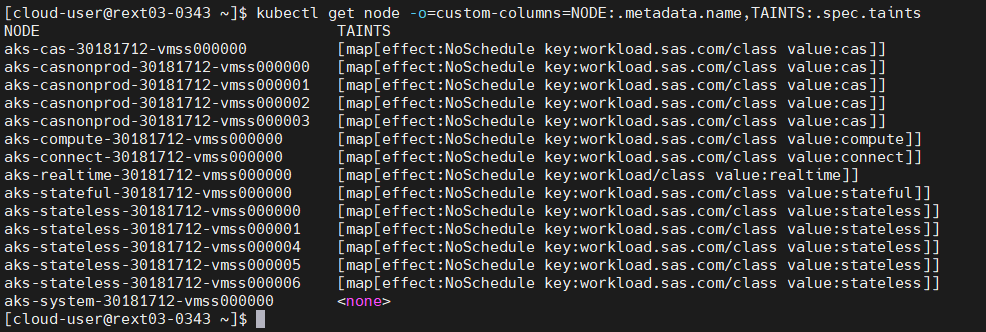

To confirm the taints that have been applied, use the following command:

```shell
kubectl get node -o=custom-columns=NODE:.metadata.name,TAINTS:.spec.taints
```

This gave the following output for my AKS cluster.

Once I had my Azure Kubernetes Service (AKS) cluster the next step was to create the SAS Viya configuration for the target state.

Updating the SAS Viya Configuration

The default SAS Viya configuration uses preferred scheduling, which is specified using the nodeAffinity ‘preferredDuringSchedulingIgnoredDuringExecution’ field. This, in part, drives the need for having multiple node taints.

In contrast, the ‘requiredDuringSchedulingIgnoredDuringExecution’ node affinity field specifies rules that must be met for a pod to be scheduled onto a node.

To demonstrate this, I will show examples of using both preferred and required affinity types. While I tested both options, the images are from a deployment using the ‘requiredDuringScheduling’ option.

While I’m explaining this, the other important thing to note is the second part of the field name (IgnoredDuringExecution). This does what it says: node labels are only evaluated at scheduling time and are ignored while the pod is running. Changing the node labels will not impact (evict) the running pods.

So, I hear you ask, what about “requiredDuringSchedulingRequiredDuringExecution”? The Kubernetes documentation states the following:

In the future we plan to offer requiredDuringSchedulingRequiredDuringExecution which will be identical to requiredDuringSchedulingIgnoredDuringExecution except that it will evict pods from nodes that cease to satisfy the pods' node affinity requirements.

See the Kubernetes documentation: Assigning Pods to Nodes.

Configuring MAS (sas-microanalytic-score)

By default, the sas-microanalytic-score pods are defined as a member of the “stateless” workload class. This means that the sas-microanalytic-score pods are scheduled on the same nodes as the rest of the stateless services in SAS Viya.

The objective is to cause the MAS pods to use the nodes in the realtime node pool. The information below describes the ways to achieve this using the “preferred” and “required” fields.

The following example is a patch transformer that can be used to update the existing node affinity to use the ‘workload/mas’ label and to add the toleration for the ‘workload/class=realtime’ taint. You will note that the target for the patch is specified as the sas-microanalytic-score Deployment object so that no other components of SAS Viya are modified this way.

# Patch to update the sas-microanalytic-score configuration, using preferred scheduling

---

apiVersion: builtin

kind: PatchTransformer

metadata:

name: set-mas-tolerations

patch: |-

- op: replace

path: /spec/template/spec/affinity/nodeAffinity/preferredDuringSchedulingIgnoredDuringExecution

value:

- preference:

matchExpressions:

- key: workload/mas

operator: In

values:

- realtime

matchFields: []

weight: 100

- preference:

matchExpressions:

- key: workload.sas.com/class

operator: NotIn

values:

- compute

- cas

- stateful

- stateless

- connect

matchFields: []

weight: 50

- op: replace

path: /spec/template/spec/tolerations

value:

- effect: NoSchedule

key: workload/class

operator: Equal

value: realtime

target:

group: apps

kind: Deployment

name: sas-microanalytic-score

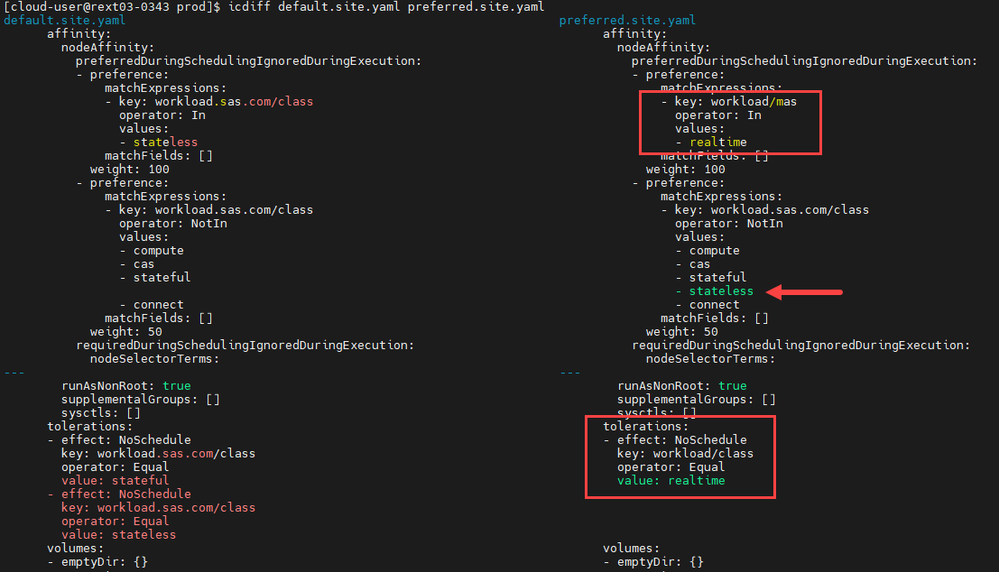

version: v1To make it easier to see the changes I used ‘icdiff’ to compare the default configuration (default.site.yaml) and the new configuration that was produced (preferred.site.yaml). This is shown in Figure 4.

Figure 4. Preferred scheduling compare

If you look closely at the image, you will see that the patch transformer has made the following changes:

- Updated the node affinity preference to schedule to nodes with the ‘workload/mas=realtime’ label,

- Added the stateless class to the ‘NotIn’ nodeAffinity section, and

- Removed the existing tolerations (by default, tolerations for the stateless and stateful taints) and added a new toleration for the realtime taint.

Remember, using preferred scheduling does NOT guarantee that the pods will only be scheduled to the nodes in the realtime node pool. The node affinity is a preference, not a requirement. For example, if the K8s cluster includes nodes without any taints, the pods could be scheduled to run on these nodes. The pods could “drift” to any untainted nodes.

The second example is a patch transformer to use the ‘requiredDuringSchedulingIgnoredDuringExecution’ scheduling.

```yaml
# Patch to update the sas-microanalytic-score configuration to use required scheduling
---
apiVersion: builtin
kind: PatchTransformer
metadata:
  name: mas-set-tolerations
patch: |-
  # JSON Patch 'remove' only uses 'path'; the 'value' below is ignored and
  # just documents the default preferred rules that are being removed.
  - op: remove
    path: /spec/template/spec/affinity/nodeAffinity/preferredDuringSchedulingIgnoredDuringExecution
    value:
      - preference:
          matchExpressions:
            - key: workload.sas.com/class
              operator: In
              values:
                - stateless
          matchFields: []
        weight: 100
      - preference:
          matchExpressions:
            - key: workload.sas.com/class
              operator: NotIn
              values:
                - compute
                - cas
                - stateful
                - connect
          matchFields: []
        weight: 50
  # Append (AND) the realtime label to the existing required node selector term.
  - op: add
    path: /spec/template/spec/affinity/nodeAffinity/requiredDuringSchedulingIgnoredDuringExecution/nodeSelectorTerms/0/matchExpressions/-
    value:
      key: workload/mas
      operator: In
      values:
        - realtime
  - op: replace
    path: /spec/template/spec/tolerations
    value:
      - effect: NoSchedule
        key: workload/class
        operator: Equal
        value: realtime
target:
  group: apps
  kind: Deployment
  name: sas-microanalytic-score
  version: v1
```

Again, to make it easier to see the changes I used ‘icdiff’ to compare the default configuration (default.site.yaml) and the new configuration that was produced (required.site.yaml).

This is shown in Figure 5.

Figure 5. Required scheduling compare

Looking at the image you will see that the patch transformer has made the following changes:

- Removed the preferred scheduling to simplify the manifest,

- Added the definition in the required scheduling section, and

- Removed the existing tolerations and added a new toleration for the realtime taint.

This is the configuration that I used in my deployments because it provides the simplest approach and ensures the MAS pods will not drift and only land on the explicitly labeled nodes.

To use the transformer, an update to the kustomization.yaml is required to refer to the patch transformer. The entry is added after the required transformers. For my configuration the update was as follows:

```yaml
transformers:
...
- site-config/sas-microanalytic-score/add-required-realtime-label.yaml
...
```

Configuring the SAS Cloud Analytic Services (CAS) Server

We know that the CAS pods are scheduled onto the nodes in the ‘cas node pool’ by default. The target state is to dedicate nodes to each SAS Viya environment. This allowed me to use different node instance types for each environment.

To recap, in this fictitious example, the Production environment is to run an SMP CAS Server, and the Discovery environment is to run an MPP CAS Server.

In the discussion above, you can see that the CAS node pools only have the ‘workload.sas.com/class=cas’ taint. We will drive the pods to the desired CAS node pool (desired nodes) using the node labels and ‘requiredDuringSchedulingIgnoredDuringExecution’ scheduling.

The following patch transformer makes the required changes for the production environment.

```yaml
# This transformer will add a required label to the CASDeployment
apiVersion: builtin
kind: PatchTransformer
metadata:
  name: add-required-prod-label
patch: |-
  - op: add
    path: /spec/controllerTemplate/spec/affinity/nodeAffinity/requiredDuringSchedulingIgnoredDuringExecution/nodeSelectorTerms/0/matchExpressions/-
    value:
      key: environment/prod
      operator: Exists
target:
  kind: CASDeployment
  name: .*
```

Examining the patch transformer in more detail, you will see that I’m only checking that the ‘environment/prod’ label exists; the patch doesn’t evaluate the value of the label. The label could have an empty value or some other string value like “foo” and the patch will still work.

The reason I gave the environment labels a value was to make it easier to display the labels, as shown in Figure 3 (using the kubectl get nodes -L command).

To loop back to the MAS patch transformer for a moment, it would have been possible to take a similar approach and just test for the existence of the ‘workload/mas’ label. This would look like the following:

```yaml
- op: add
  path: /spec/template/spec/affinity/nodeAffinity/requiredDuringSchedulingIgnoredDuringExecution/nodeSelectorTerms/0/matchExpressions/-
  value:
    key: workload/mas
    operator: Exists
```

Again, to use the transformer, an update to the kustomization.yaml is required to refer to the patch transformer. The entry is added after the required transformers. For my production configuration the update was as follows:

```yaml
transformers:
...
- site-config/cas/add-required-prod-label.yaml
...
```
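The discovery environment needs the mirror-image transformer, which points its CASDeployment at the nodes labeled ‘environment/nonprod’. Below is a sketch that assumes the same approach as the production transformer. The file name and metadata name are hypothetical.

```yaml
# Hypothetical site-config/cas/add-required-nonprod-label.yaml for the discovery environment.
# Same pattern as the production transformer: require the environment/nonprod
# label to exist on the node, regardless of its value.
apiVersion: builtin
kind: PatchTransformer
metadata:
  name: add-required-nonprod-label
patch: |-
  - op: add
    path: /spec/controllerTemplate/spec/affinity/nodeAffinity/requiredDuringSchedulingIgnoredDuringExecution/nodeSelectorTerms/0/matchExpressions/-
    value:
      key: environment/nonprod
      operator: Exists
target:
  kind: CASDeployment
  name: .*
```

It would be referenced from the transformers section of the discovery environment's kustomization.yaml, in the same way as the production transformer.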

The results, verifying the deployments

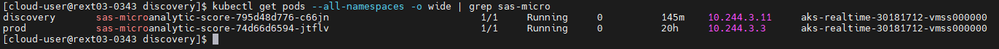

After both environments were running, I used the following commands to verify the deployment. To confirm the location of the running sas-microanalytic-score pods I used the following command:

```shell
kubectl get pods --all-namespaces -o wide | grep sas-micro
```

As you can see, the realtime node is being shared by both SAS Viya environments. To confirm the location of the running cas pods I used the following command:

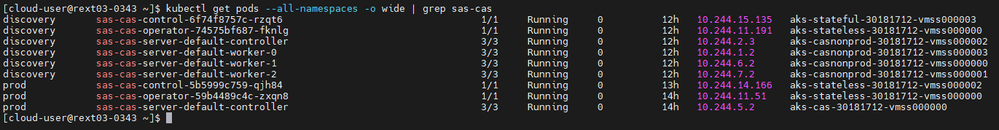

```shell
kubectl get pods --all-namespaces -o wide | grep sas-cas
```

As you can see, the production and discovery CAS pods are running on the desired nodes. The SMP CAS server (sas-cas-server-default-controller pod) in the “prod” namespace is running on the aks-cas-30181712-####### node and MPP CAS pods (controller and worker pods) in the “discovery” namespace are running on the aks-casnonprod-30181712-####### nodes.

Finally, another useful command to list all the pods running on a node is the following:

```shell
kubectl get pods --all-namespaces --field-selector spec.nodeName=<node_name>
```

Conclusion

It’s probably time to wrap this up, so some final thoughts…

It is possible to create custom deployment topologies and we have looked at a couple of examples and the drivers for doing so.

Avoid extending the default workload definitions ('workload.sas.com') as the basis for your custom labels and taints to prevent unexpected conflicts with the SAS software. Instead define your own labels, using a schema so that the labels can be stacked to provide a flexible solution.

I would use dedicated node pools carefully; you don’t want to create an administration nightmare by splitting out too many services into their own node pool and/or over tainting the nodes. For example, if you were trying to split out the stateless services for each SAS Viya environment it may be better to use a dedicated Kubernetes cluster for each SAS Viya deployment.

I describe this as “effort vs reward”, as you would have to create a PatchTransformer for each stateless microservice. The effort for this is very high, and it makes the deployment susceptible to changes in the SAS Viya packaging.

When using a cluster for multiple SAS Viya environments, extend where it makes sense and re-use nodes where it's appropriate. For some services, it could be better to “keep it simple” and size the node pools appropriately to run the workload for multiple environments, rather than splitting things out. It all comes back to the customer’s requirements and what they are trying to achieve.

Finally, using a cluster for multiple SAS Viya environments (using a shared cluster), if you are deploying different SAS Viya cadence versions always confirm that the different versions can coexist.

I hope this is useful and thanks for reading.

References

- Kubernetes node affinity

- The SAS Viya Operations Guide describes the workload classes, see here.

Related GEL Article

- Workload placement in SAS Viya 4 for the installation engineer – part 1 by Raphaël Poumarede.

Find more articles from SAS Global Enablement and Learning here.


Thanks for this well explained blog!

Can you please clarify this sentence you wrote below?

Finally, using a cluster for multiple SAS Viya environments (using a shared cluster), if you are deploying different SAS Viya cadence versions always confirm that the different versions can coexist.


Hi @EyalGonen

The key reasons for possible incompatibilities are around the system requirements and there could be changes introduced to the cluster-wide resources that would break the older Viya deployments.

Therefore, when sharing a cluster for multiple SAS Viya deployments, it is important to ensure that they can coexist. For those reasons, I also wouldn't recommend colocating Stable cadence and LTS cadence versions on the same cluster, as that may increase the risk of incompatibilities.

I hope that helps.


Thanks @MichaelGoddard for replying!

What is your opinion about sharing two different SAS solutions (in two different namespaces) but both with same cadence on the same cluster?

In my example we are talking about SAS VA and SAS VTA to be deployed in two namespaces on the same cluster. Both will use now and in the future the same cadence to avoid the issues you mentioned in your previous reply. Do you see a possible pitfall with this?
