CAS Cross Loading

Have you heard the term "cross loading"? It's an important concept in CAS.

The Dual Nature of CASLibs

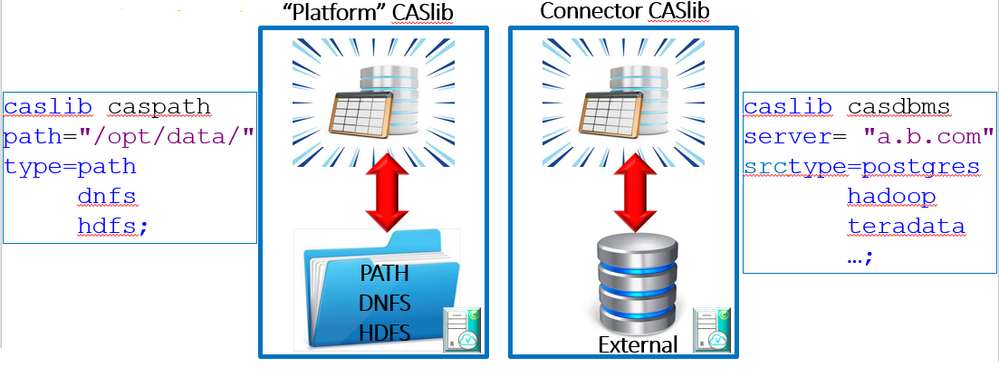

CAS Libraries (CASLibs) utilize two separate areas:

- A temporary in-memory space to hold CAS tables.

- A permanent "dataSource" to source and/or backup those CAS tables.

Because CAS is a temporary (in-memory) data server, it uses the dataSource (as it's designated in the CASLib statement) as a permanent, enduring copy of the data. When the CAS server stops, the in-memory data disappears but the dataSource copy remains. The dataSource can be any of a number of physical data servers, including connector sources like SQL Server, Oracle, and Redshift as well as "platform" sources like Linux PATH files, DNFS, and HDFS.

The graphic below depicts this dual nature showing both the temporary, in-memory location as well as the permanent "dataSource" location together in a single construct with data moving back and forth (loading and saving) between the two locations:

| Platform and Connector CASLibs |
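As a rough sketch of this dual nature, the SAS code below defines a path-based CASLib, loads a file from its dataSource into memory, and saves the in-memory table back to the dataSource. The session name `mysess`, the CASLib name `martlib`, the directory `/data/mart`, and the file and table names are all hypothetical, and the code assumes a running CAS server that you can connect to.

```sas
/* Start a CAS session (the session name is hypothetical) */
cas mysess;

/* Define a CASLib whose dataSource is a directory visible to the CAS server */
caslib martlib datasource=(srctype="path") path="/data/mart";

proc casutil;
   /* Load: file in the dataSource -> in-memory table in the same CASLib */
   load casdata="sales.csv" incaslib="martlib"
        casout="sales" outcaslib="martlib" replace;

   /* Save: in-memory table -> SASHDAT file in the same CASLib's dataSource */
   save casdata="sales" incaslib="martlib"
        casout="sales.sashdat" outcaslib="martlib" replace;
quit;
```

Here INCASLIB and OUTCASLIB name the same CASLib, so the data never leaves the single construct described above.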

When the Data You Need isn't in the DataSource

This setup works as designed when you use tables from the CASLib's own dataSource. For example, when the CAS table needed in SAS Visual Analytics (VA) is identical to a Teradata table in the Teradata CASLib's dataSource, you simply load the Teradata table into CAS. You can even do it with the push of a button in Environment Manager.

What if, however, you want to load tables from an Oracle database to your Teradata CASLib (cross load)? Well, CAS can do that too. The graphic below depicts cross-loading from a platform CASLib to a connector CASLib and vice-versa:

| Cross Loading |

But why would you do this? Why not just create an Oracle CASLib and load your Oracle data there? Let's look at some scenarios to better understand cross-loading.

Scenario 1: Multi-Source Data Mart

In this scenario, you need to load data from numerous, disparate data sources (say Oracle, Hadoop, and an ODBC source) and use CAS' massively parallel data processing capabilities to combine and transform the data into a single-table CAS data mart. We've decided to back our data mart with SASHDAT on DNFS, so the data mart will live in a DNFS CASLib.

To access the remote data, we create a connector CASLib for each data source. Each CASLib contains not only the connection to the remote data but also an in-memory logical location (even though we don't plan on using the connector CASLibs for in-memory data).

We could, of course, load each source into its own CASLib's in-memory space, but this creates an extra step and extra I/O since we still need to get the data into the target DNFS CASLib. It's more efficient to load the data from the source directly into its final location, transforming it on the way. As the graphic below illustrates, this scenario results in three separate cross loads.

| Multi-Source Data Mart Load |

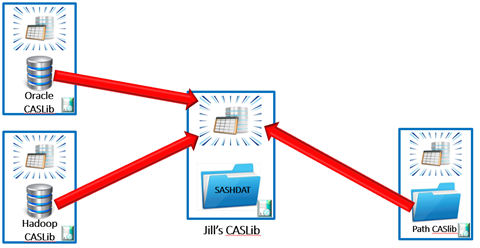
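The three cross loads in this scenario might look something like the sketch below. It assumes the connector CASLibs (`oralib`, `hdplib`, `odbclib`) and the DNFS CASLib (`martlib`) have already been defined in an active CAS session. All CASLib and table names are hypothetical, and the combine-and-transform step that builds the single-table mart is not shown.

```sas
proc casutil;
   /* Oracle dataSource -> in-memory space of the DNFS CASLib */
   load casdata="ORDERS" incaslib="oralib"
        casout="orders" outcaslib="martlib" replace;

   /* Hadoop dataSource -> in-memory space of the DNFS CASLib */
   load casdata="clickstream" incaslib="hdplib"
        casout="clickstream" outcaslib="martlib" replace;

   /* ODBC dataSource -> in-memory space of the DNFS CASLib */
   load casdata="customers" incaslib="odbclib"
        casout="customers" outcaslib="martlib" replace;
quit;
```

In each LOAD statement only the INCASLIB value changes. Nothing is written to the in-memory spaces of the connector CASLibs.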

Scenario 2: Power-User CASLib

In this scenario, we are motivated not by a desired data model but by end-user privileges. Here we have a power user who has access to every data source in the organization. Our power user, Jill, creates ad-hoc reports and analyses and examines data to identify issues. To facilitate her initiatives, Jill has her own CASLib. Currently her company has built an Oracle CASLib to access their ERP system, a Hadoop CASLib to access their data lake, and a PATH CASLib to access their SAS Data Warehouse. Jill has rights to read from each of the data sources but does not have rights to write into any of those CASLibs' in-memory spaces, as they are used for production reporting. Given these restrictions, she loads data from the other CASLibs' dataSources directly into her own CASLib as shown below.

| Power User CASLib |

These two examples are certainly not meant to be exhaustive. They are just meant to give you an idea of why cross-loading is useful.

How to Code a Cross Load

Motivations aside, coding a cross load is quite easy. The CASUTIL LOAD statement has two CASLib parameters, INCASLIB and OUTCASLIB, which let you read from one CASLib's dataSource into another CASLib's in-memory space. The graphic below shows a cross load running in SAS Studio.

| Cross Load Code |
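In text form, a cross load along these lines could be sketched as follows. The example assumes an Oracle CASLib named `oralib` and a Teradata CASLib named `tdlib` already exist in an active CAS session. Both CASLib names and the table name are hypothetical.

```sas
proc casutil;
   /* INCASLIB: the CASLib whose dataSource is read (Oracle)          */
   /* OUTCASLIB: the CASLib whose in-memory space receives the table  */
   load casdata="CUSTOMERS" incaslib="oralib"
        casout="customers" outcaslib="tdlib" replace;

   /* List the in-memory tables of the target CASLib to confirm the load */
   list tables incaslib="tdlib";
quit;
```

The only difference from an ordinary load is that INCASLIB and OUTCASLIB name different CASLibs.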

Concluding Thoughts

The dataSource concept in CAS serves two related but separate purposes: data backup for in-memory data, and data access. Because CAS was built to use almost any data server as its permanent data store, these two purposes are so interdependent and intertwined that they were implemented together. They manifest in exactly the same way, after all: both are simply connection information for some data or file server.

With these two concepts both implemented in the CASLib dataSource, however, we must be prepared to accept and understand cross loading.

Interesting. What happens if you have two tables of the same name in disparate data sources? Can they both be loaded into memory in the same CASLib?
