- Home

- /

- SAS Communities Library

- /

- CAS Accessing Azure DataBricks SPARK cluster

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

CAS Accessing Azure DataBricks SPARK cluster

- Article History

- RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

With SAS Viya 2021.2 LTS release(Stable 2021.1.5/6), Viya users can access the Azure Databricks SPARK (Ver 3 onwards) cluster using the SPARK data connector. The SPARK Data connector enables the user to read and write data tables to the Azure Databricks database. The SPARK Data connector supports serial data transfer between CAS and Azure Databricks database.

Azure Databricks is optimized for Azure data lakes and provides an interactive workspace to set up the environment and collaborate amongst the data scientists. Azure Databricks uses the SPARK engine to process the data.

This article is about CAS accessing the Azure Databricks SPARK (Ver 3) database table using the SPARK data connector.

Pre-requisites

- SAS SPARK Data Connector at CAS controller (comes with Viya deployment).

- Access token from Azure Databricks Workspace.

- Azure Databricks with SPARK (Ver 3..) cluster.

- Databricks JDBC Jar file available at CAS controller.

- SPARK (Ver 3) cluster and JDBC URL information.

Data access path

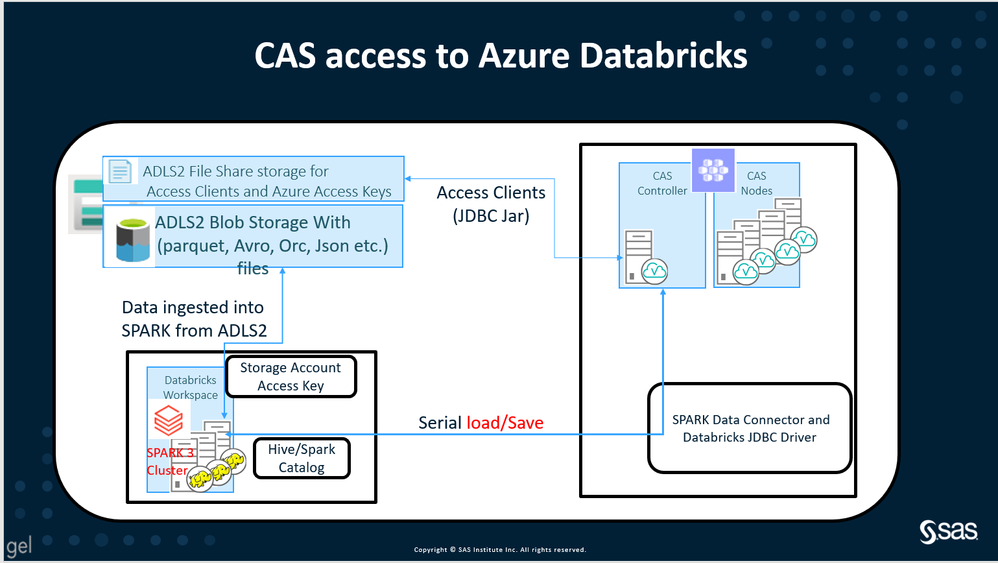

The following picture describes the SAS Viya(CAS) environment access to the Azure Databricks database table.

Select any image to see a larger version.

Mobile users: To view the images, select the "Full" version at the bottom of the page.

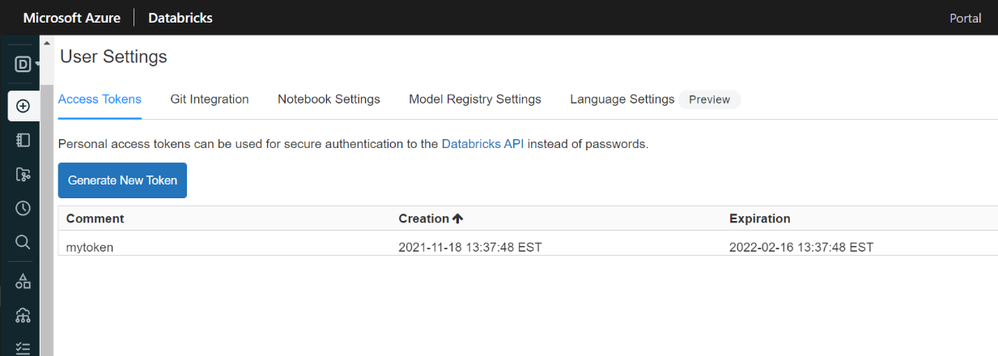

Azure Databricks workspace Token

The Databricks workspace is the entry point for external applications to access the objects and data from the Databricks SPARK cluster. The Databricks workspace user credential is required to connect to the SPARK cluster from an external application.

The following screen describes the user credential (Token) to access the SPARK cluster.

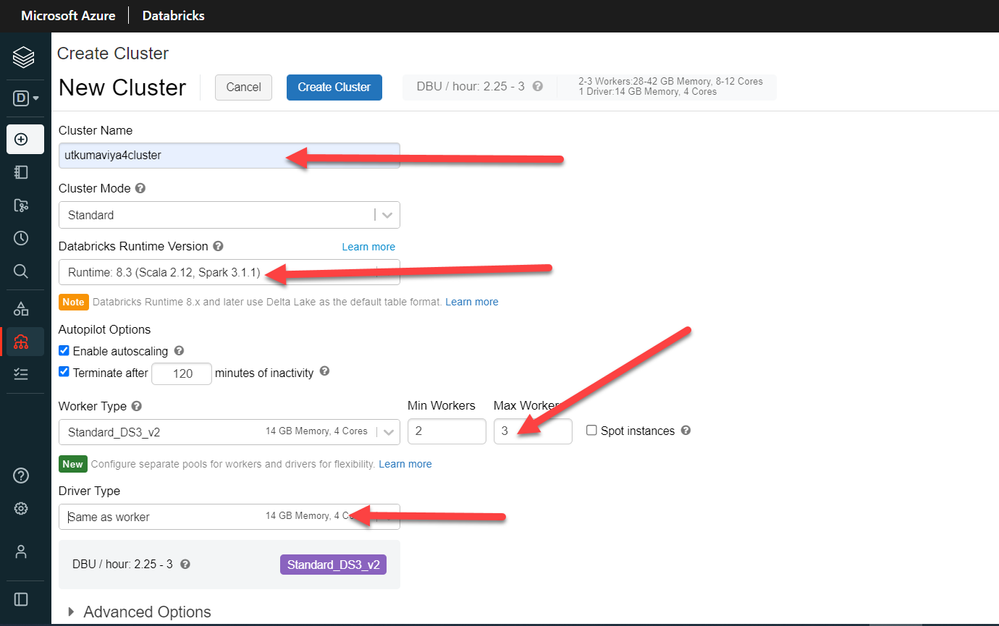

Azure Databricks SPARK 3 cluster

The SPARK data connector supports the data access from a cluster started with SPARK Ver 3 and up. The following screen describes the creation of the SPARK 3 cluster under Azure Databricks Workspace.

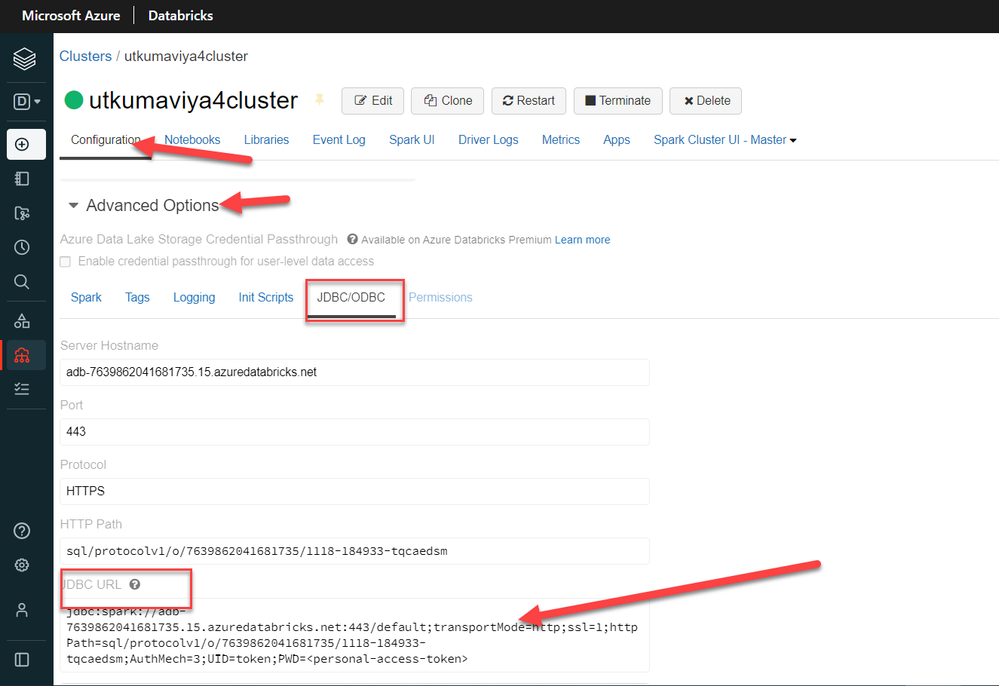

Azure Databricks SPARK cluster connection information is available at the cluster configuration tab.

Azure Databricks JDBC driver

The third-party application can access to Databricks table using the JDBC driver. The JDBC Driver is available at the following link.

Databricks JDBC Driver download

CAS Access to Azure Databricks SPARK cluster

With Azure Databricks Workspace Token, SPARK-3 Cluster, and Databricks JDBC driver in place, you can use the following code for serial load/save to CAS from the Azure Databricks table. The Azure Databricks Workspace token (key) is used as the password to authenticate to the Databricks environment.

Code:

/* Note : variable value in quotes generate errors, So keep it without quotes. */

%let MYDBRICKS=adb-7060859955656306.6.azuredatabricks.net;

%let MYPWD=dapiaa66843abadb51775a9dd7858d6980aa-2;

%let MYHTTPPATH=sql/protocolv1/o/7060859955656306/0210-155120-shop163;

%let MYUID=token;

CAS mySession SESSOPTS=( CASLIB=casuser TIMEOUT=99 LOCALE="en_US" metrics=true);

caslib spkcaslib dataSource=(srctype='spark',

url="jdbc:spark://&MYDBRICKS:443/default;transportMode=http;ssl=1;httpPath=&MYHTTPPATH;AuthMech=3;UID=&MYUID;PWD=&MYPWD"

driverclass="com.simba.spark.jdbc.Driver",

classpath="/mnt/myazurevol/config/access-clients/JDBC",

BULKLOAD=NO,

schema="default" );

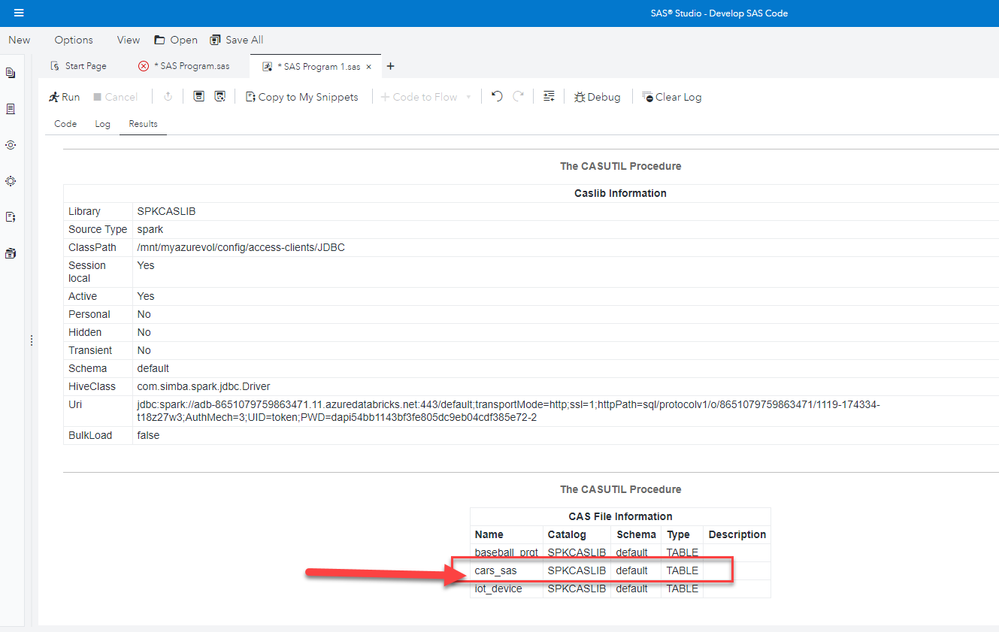

proc casutil outcaslib="spkcaslib" incaslib="spkcaslib" ;

list files ;

quit;

/* Load CAS from DataBricks database table */

proc casutil outcaslib="spkcaslib" incaslib="spkcaslib" ;

load casdata="iot_device" casout="iot_device" replace;

list tables;

quit;

/* Save CAS table to DataBricks database */

proc casutil outcaslib="spkcaslib" incaslib="spkcaslib";

load data=sashelp.cars casout="cars" replace;

save casdata="cars" casout="cars_sas" replace;

list files;

quit;

CAS mySession TERMINATE;

Log extract :

....

..............

88 caslib spkcaslib dataSource=(srctype='spark',

89 url="jdbc:spark://&MYDBRICKS:443/default;transportMode=http;ssl=1;httpPath=&MYHTTPPATH;AuthMech=3;UID=&MYUID;

89 ! PWD=&MYPWD"

90 driverclass="com.simba.spark.jdbc.Driver",

91 classpath="/mnt/myazurevol/config/access-clients/JDBC",

92 BULKLOAD=NO,

93 schema="default" );

NOTE: Executing action 'table.addCaslib'.

NOTE: 'SPKCASLIB' is now the active caslib.

NOTE: Cloud Analytic Services added the caslib 'SPKCASLIB'.

....

..............

99 /* Load CAS from DataBricks database table */

100 proc casutil outcaslib="spkcaslib" incaslib="spkcaslib" ;

NOTE: The UUID '4eaf8859-dc77-ee40-8259-41e1b897e44f' is connected using session MYSESSION.

101

101! load casdata="iot_device" casout="iot_device" replace;

NOTE: Executing action 'table.loadTable'.

NOTE: Performing serial LoadTable action using SAS Data Connector to Spark.

NOTE: Cloud Analytic Services made the external data from iot_device available as table IOT_DEVICE in caslib spkcaslib.

....

..............

105 /* Save CAS data to DataBricks database */

106 proc casutil outcaslib="spkcaslib" incaslib="spkcaslib";

NOTE: The UUID '4eaf8859-dc77-ee40-8259-41e1b897e44f' is connected using session MYSESSION.

107 load data=sashelp.cars casout="cars" replace;

NOTE: The INCASLIB= option is ignored when using the DATA= option in the LOAD statement.

NOTE: Executing action 'table.addTable'.

NOTE: SASHELP.CARS was successfully added to the "SPKCASLIB" caslib as "CARS".

108 save casdata="cars" casout="cars_sas" replace;

NOTE: Executing action 'table.save'.

NOTE: Performing serial SaveTable action using SAS Data Connector to Spark.

NOTE: Cloud Analytic Services saved the file cars_sas in caslib SPKCASLIB.

....

..............

.................

Result Output:

CAS table saved to Azure Databricks database.

Important Links:

Accessing Azure Databricks from SAS Viya (CAS)

Accessing Azure Databricks from SAS 9.4

Find more articles from SAS Global Enablement and Learning here.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi

I'm not sure if Databricks driver has been changed but when tested I got error when trying to list files from the first code. I'm using JDBC42-2.6.32 driver.

The working CASLIB statement in my case is as follows:

CASLIB dbspark dataSource=(srctype='spark',

url="jdbc:databricks://ServerHostname:443/default;transportMode=http;ssl=1;httpPath=sql/protocolv1/o/8982188031797693/0201-012252-gaq83b6a;AuthMech=3;UID=token;PWD=AccessToken",

driverclass="com.databricks.client.jdbc.Driver",

classpath="/data-drivers/jdbc/databricks",BULKLOAD=NO,schema="default" );

Regards

Zbyszek

April 27 – 30 | Gaylord Texan | Grapevine, Texas

Registration is open

Walk in ready to learn. Walk out ready to deliver. This is the data and AI conference you can't afford to miss.

Register now and lock in 2025 pricing—just $495!

SAS AI and Machine Learning Courses

The rapid growth of AI technologies is driving an AI skills gap and demand for AI talent. Ready to grow your AI literacy? SAS offers free ways to get started for beginners, business leaders, and analytics professionals of all skill levels. Your future self will thank you.

- Find more articles tagged with:

- GEL