
Assessing Models by using k-fold Cross Validation in SAS® Enterprise Miner™


My previous tip on cross validation shows how to compare three trained models (regression, random forest, and gradient boosting) based on their 5-fold cross validation training errors in SAS Enterprise Miner. This tip is the second installment about using cross validation in SAS Enterprise Miner and builds on the diagram that is used in the first tip.

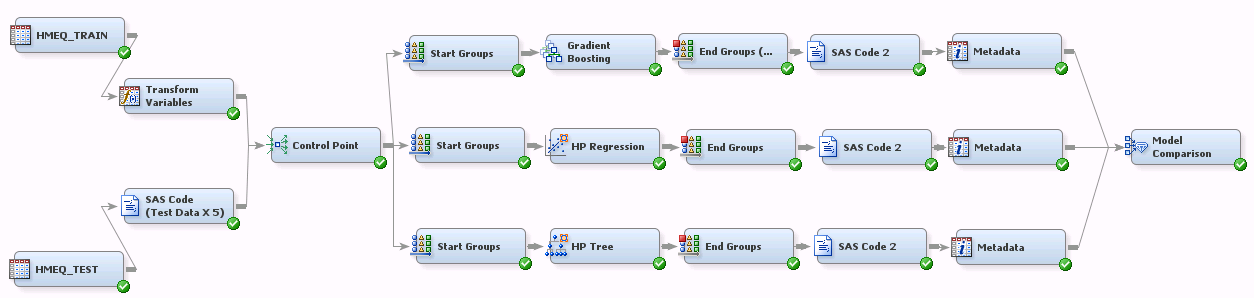

In addition to comparing models based on their 5-fold cross validation training errors, this tip shows how to obtain a 5-fold cross validation testing error, so it provides a more complete SAS Enterprise Miner flow (shown in the flow diagram).

First, a quick note about how k-fold cross validation training and testing errors are calculated:

- The k-fold cross validation training error is calculated from the predictions on the whole training set, which are obtained by combining the k sets of cross validation holdout predictions. Each training observation is therefore scored exactly once, by the model that was trained without its fold.

- The k-fold cross validation testing error is calculated from the average of the k sets of test predictions that come from the k models trained during cross validation.
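To make the difference between the two calculations concrete, here is a minimal sketch in Python. It is a language-neutral illustration, not Enterprise Miner or SAS code. The toy per-category rate model, the helper names `fit`, `ase`, and `kfold_errors`, and the use of average squared error as the error measure are all hypothetical choices made for this example.

```python
import random

def fit(rows):
    """Hypothetical toy model: P(target=1) for each category of x,
    falling back to the overall event rate for unseen categories."""
    overall = sum(y for _, y in rows) / len(rows)
    totals = {}
    for x, y in rows:
        n, s = totals.get(x, (0, 0))
        totals[x] = (n + 1, s + y)
    rates = {x: s / n for x, (n, s) in totals.items()}
    return lambda x: rates.get(x, overall)

def ase(preds, rows):
    """Average squared error between predicted probabilities and 0/1 targets."""
    return sum((p - y) ** 2 for p, (_, y) in zip(preds, rows)) / len(rows)

def kfold_errors(train, test, k=5, seed=1):
    # Randomly assign each training row to one of k folds (the role of _fold_).
    order = list(range(len(train)))
    random.Random(seed).shuffle(order)
    fold = [0] * len(train)
    for pos, i in enumerate(order):
        fold[i] = pos % k + 1

    holdout = [None] * len(train)  # one holdout prediction per training row
    test_preds = []                # k lists of test predictions, one per fold model
    for f in range(1, k + 1):
        model = fit([r for r, g in zip(train, fold) if g != f])  # omit fold f
        for i, g in enumerate(fold):
            if g == f:
                holdout[i] = model(train[i][0])
        test_preds.append([model(x) for x, _ in test])

    # Training error: the k holdout sets together cover the whole training set.
    train_err = ase(holdout, train)
    # Testing error: average the k test predictions for each row, then score.
    avg_test = [sum(ps) / k for ps in zip(*test_preds)]
    test_err = ase(avg_test, test)
    return train_err, test_err

# Usage with made-up (x, target) rows; needs at least k training rows.
train = [("a", 1), ("a", 1), ("a", 0), ("b", 0), ("b", 0),
         ("b", 1), ("a", 1), ("b", 0), ("a", 0), ("b", 0)]
test = [("a", 1), ("b", 0), ("a", 0)]
print(kfold_errors(train, test))
```

The key design point mirrors the two bullets: holdout predictions are concatenated before scoring, whereas test predictions are averaged across the k models before scoring.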

The following is a step-by-step explanation of the preceding Enterprise Miner flow. You can run this process flow by using the attached XML file.

- The Data Source node HMEQ_TRAIN includes about two-thirds of the HMEQ data, in which the Role property is specified as Train. The HMEQ_TEST node includes the remaining one-third of the data, in which the Role is specified as Test. In both nodes, the target variable is BAD, whose level is binary.

- As in the first tip, a few simple tricks are needed to make sure that looping through the Start Groups and End Groups nodes performs k-fold cross validation on the training and test sets properly. For the training set, this involves the Transform Variables node, which is described below. For the test set, you need to create five replicates of the test set: in the first replicate the _fold_ variable takes the value 1, in the second it takes the value 2, and so on. The following statements in the SAS Code node (Test Data X 5) create these five replicates. Note that the code first defines a unique ID for each row of the original test set. The SAS Code nodes that come after the End Groups nodes use these IDs to average the five test set predictions for each row.

```sas
/* Give each row of the original test set a unique ID */
data temptest;
   set &EM_import_TEST;
   ID = _N_;
run;

/* Stack five copies of the test set, tagging copy f with _fold_ = f */
data &EM_EXPORT_TEST;
   set temptest(in=in1) temptest(in=in2) temptest(in=in3)
       temptest(in=in4) temptest(in=in5);
   if in1 then _fold_ = 1;
   else if in2 then _fold_ = 2;
   else if in3 then _fold_ = 3;
   else if in4 then _fold_ = 4;
   else if in5 then _fold_ = 5;
run;
```

- The Transform Variables node (which is connected to the training set) creates a k-fold cross validation indicator, _fold_, as a new input variable that randomly divides the training set into k folds, and saves this new indicator as a segment variable. More information about this node can be found in the first tip.

- The Control Point node establishes a control point within the process flow diagram, which reduces the number of connections that the diagram needs.

- The next three nodes (a Start Groups node, a modeling node, and an End Groups node) train a model on each of the five training sets of 5-fold cross validation (each set omits one fold) and obtain predictions on the holdout (omitted) fold. They also obtain predictions on the test set from each of the five trained models.

- For each modeling algorithm, the SAS Code 2 node averages the test set predictions from the five models trained during 5-fold cross validation by executing the following statements. The stacked test predictions are sorted by ID before they are split by fold, because the MERGE statement with a BY statement requires each input data set to be sorted by ID:

```sas
/* Sort the stacked test predictions by ID */
proc sort data=&EM_IMPORT_TEST out=sorted_test;
   by ID;
run;

/* Pass the training data through unchanged */
data &EM_EXPORT_TRAIN;
   set &EM_IMPORT_DATA;
run;

/* Split the sorted test predictions by the fold whose model produced them */
data test1 test2 test3 test4 test5;
   set sorted_test;
   if _fold_ = 1 then output test1;
   else if _fold_ = 2 then output test2;
   else if _fold_ = 3 then output test3;
   else if _fold_ = 4 then output test4;
   else if _fold_ = 5 then output test5;
run;

/* One row per ID: keep each fold's P_BAD1 and average the five values */
data &EM_EXPORT_TEST;
   merge test1(rename=(P_BAD1 = P_BAD_1)) test2(rename=(P_BAD1 = P_BAD_2))
         test3(rename=(P_BAD1 = P_BAD_3)) test4(rename=(P_BAD1 = P_BAD_4))
         test5(rename=(P_BAD1 = P_BAD_5));
   by ID;
   P_BAD1 = (P_BAD_1 + P_BAD_2 + P_BAD_3 + P_BAD_4 + P_BAD_5) / 5;
run;
```

- The Metadata node assigns the “Prediction” role to the averaged test set prediction, P_BAD1. The Model Comparison node uses this role to calculate performance metrics. The Metadata node also rejects the other test set prediction variables that have the “Prediction” role (P_BAD_1, …, P_BAD_5), so that the Model Comparison node uses only the averaged prediction to calculate test set errors.

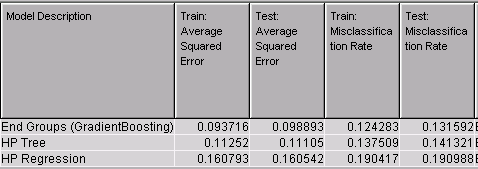

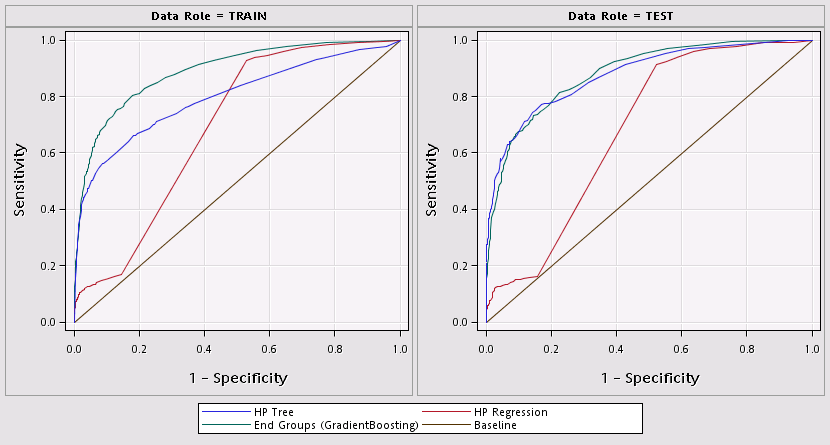

- The last node, Model Comparison, compares the gradient boosting, regression, and decision tree models based on their 5-fold cross validation training and testing errors. In its output table, the reported training and test errors are the 5-fold cross validation training error and the 5-fold cross validation testing error, respectively.
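The data-shaping trick behind the Test Data X 5 and SAS Code 2 nodes can also be sketched outside Enterprise Miner. The following Python sketch is illustrative only: the function names `replicate_test` and `average_by_id`, the made-up rows, and the stand-in scores are hypothetical, and the check that every ID has exactly k fold predictions is a defensive addition that the SAS code does not perform.

```python
from collections import defaultdict

def replicate_test(test_rows, k=5):
    """Mimic 'Test Data X 5': add an ID (like _N_), then stack k copies,
    tagging copy f with _fold_ = f."""
    with_id = [dict(row, ID=i) for i, row in enumerate(test_rows, start=1)]
    return [dict(row, _fold_=f) for f in range(1, k + 1) for row in with_id]

def average_by_id(scored_rows, k=5):
    """Mimic 'SAS Code 2': collect the k fold-specific P_BAD1 values per ID,
    keep them as P_BAD_1..P_BAD_k, and store their mean as P_BAD1."""
    by_id = defaultdict(dict)
    for row in scored_rows:
        by_id[row["ID"]][row["_fold_"]] = row["P_BAD1"]
    result = []
    for id_ in sorted(by_id):
        preds = by_id[id_]
        if len(preds) != k:
            raise ValueError(f"ID {id_} has {len(preds)} fold predictions, expected {k}")
        out = {"ID": id_}
        out.update({f"P_BAD_{f}": preds[f] for f in range(1, k + 1)})
        out["P_BAD1"] = sum(preds.values()) / k
        result.append(out)
    return result

# Usage: two made-up test rows; a hypothetical stand-in plays the role of
# the five fold models, each scoring its own copy of the test set.
stacked = replicate_test([{"x": 1}, {"x": 2}])
for row in stacked:
    row["P_BAD1"] = 0.1 * row["_fold_"]
print(average_by_id(stacked))
```

Keying on ID plays the same role as the BY ID merge in SAS Code 2: each original test row ends up with one averaged prediction, no matter which order the scored copies arrive in.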

Note that you can obtain the cross validated predictions of the test set by saving the exported data (&EM_EXPORT_TEST) of the SAS Code 2 node.

If you run this flow diagram or replicate this analysis with your own data, make sure that you run each Start Groups/End Groups block separately, because multiple looping actions cannot run at the same time.

Thanks a lot to Ralph Abbey for his help in putting this together.


Hi @Funda_SAS, thanks for the great article. I've tried implementing the flow diagram but seem to be facing an issue, namely:

The ID variable generated in the code snippet below doesn't seem to be passed to the Start Groups nodes, which results in an error in the SAS Code 2 node when it tries to sort the imported test data set by ID.

```sas
data temptest;
   set &EM_import_TEST;
   ID = _N_;
run;
```

Would appreciate any help on the aforementioned issue. Thanks!


Hi @abelroch ,

If you are using the attached XML file directly, try creating your own data sources. Also, make sure that the Input Data node for the test data has its Role property set to Test; by default, the Role property is set to Raw.

Hope this helps!

Funda


Can this approach be used for HP Forest when optimization is used?

I was reading the Enterprise Miner reference, and noticed the following:


What is stated in the documentation is true. The cross validation mode in the Start Groups and End Groups nodes does not support the HP Forest node, mainly because scoring for HP Forest and HP SVM is done differently from the other HP nodes.
