Regression Methods: Supervised Learning in SAS Visual Data Mining and Machine Learning

In a previous post, I summarized the unsupervised learning category, which currently hosts two tasks: Kmeans and Kmodes Clustering, and Principal Component Analysis. In this post, I'll explore some of the supervised learning models: the regressions.

About Supervised Learning

Supervised learning models are built from training data for which the response (target, dependent) values are known. These models are subsequently used to score (classify or predict) response values for new data.

Ordinary Least Squares Models (PROC REGSELECT)

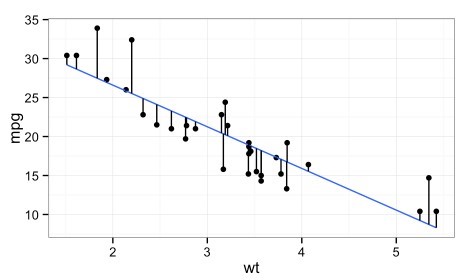

Ordinary Least Squares (OLS) regression is probably one of the first models we learned in school. We simply fit a line that minimizes the sum of squared errors between the observed responses and the model, as illustrated in the graph above. A simple example is predicting gas mileage (Y, the dependent variable, i.e., target) by vehicle weight (X1, the independent variable, i.e., feature). Remembering basic algebra, the formula for a line is Y = β0 + β1X1, where β0 is the intercept and β1 is the slope of the line. So Y = β0 + β1X1 is our simple linear model.
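
To make the line-fitting idea concrete, here is a minimal Python sketch of the closed-form least squares estimates for a single predictor. It is only an illustration, not how PROC REGSELECT is implemented, and the weight and mileage numbers are made up for the example.

```python
def fit_line(x, y):
    """Return (b0, b1) minimizing the sum of squared errors for y = b0 + b1 * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = covariance of x and y divided by the variance of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    b1 = sxy / sxx
    # The fitted line always passes through the point of means.
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Hypothetical data: vehicle weight (1000 lb) and gas mileage (mpg).
weight = [2.0, 2.5, 3.0, 3.5, 4.0]
mpg = [34.0, 30.0, 27.0, 22.0, 19.0]

b0, b1 = fit_line(weight, mpg)
print(f"intercept = {b0:.2f}, slope = {b1:.2f}")
```

The negative slope says that, in this toy data, heavier vehicles get fewer miles per gallon.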

PROC REGSELECT fits and performs model selection for ordinary linear least squares models in SAS Viya. These models are standard iid (independently and identically distributed) general linear models, which can contain main effects that consist of both continuous (numeric) and classification (categorical) variables, as well as the interaction effects of these variables. PROC REGSELECT also provides a variety of regression diagnostics. PROC REGSELECT provides traditional as well as cutting-edge regression selection options via a SELECTION statement:

- FORWARD selection starts with no effects in the model and adds effects.

- BACKWARD elimination starts with all effects in the model and deletes effects.

- STEPWISE regression is a combination of forward selection and backward elimination.

- LAR, least angle regression, like forward selection, starts with no effects in the model and adds effects. The parameter estimates at any step are “shrunk” when compared to the corresponding least squares estimates.

- LASSO, least absolute shrinkage and selection operator, adds and deletes parameters based on a version of ordinary least squares in which the sum of the absolute regression coefficients is constrained.

- HYBRID versions of the LAR and LASSO methods are also supported. They use LAR or LASSO to select the model, but they estimate the regression coefficients by ordinary weighted least squares.
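
The shrinkage behind LASSO can be sketched in a few lines of Python. This is a plain coordinate descent on the penalized form of the problem (squared error plus `lam` times the sum of absolute coefficients), which is equivalent to the constrained form described above. It assumes centered data with no intercept and no all-zero columns, and it is not the algorithm PROC REGSELECT uses internally; the function names and the data are hypothetical.

```python
def soft_threshold(value, lam):
    """Shrink value toward zero by lam, returning exactly 0.0 inside [-lam, lam]."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0


def lasso_cd(X, y, lam, n_sweeps=100):
    """Minimize (1/(2n)) * ||y - X b||^2 + lam * sum(|b_j|) by coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            rho = 0.0  # correlation of column j with the partial residual
            z = 0.0    # sum of squares of column j
            for i in range(n):
                others = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - others)
                z += X[i][j] ** 2
            beta[j] = soft_threshold(rho / n, lam) / (z / n)
    return beta


# Hypothetical centered data: the first input matters a lot, the second barely.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [2.1, -1.9, 1.9, -2.1]

ols = lasso_cd(X, y, lam=0.0)     # no penalty: ordinary least squares
shrunk = lasso_cd(X, y, lam=0.5)  # penalty shrinks both, drops the weak effect
print(ols, shrunk)
```

With the penalty switched on, the strong coefficient is pulled toward zero and the weak one is set to zero, which is how LASSO performs selection and shrinkage at the same time.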

Be aware that PROC REGSELECT does not perform model selection by default. If you do request model selection by using the SELECTION statement, then the default selection method is stepwise selection based on the Schwarz Bayesian information criterion (SBC). This default matches the default method in PROC GLMSELECT.
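
To give a feel for what an SBC-based comparison does, here is a small Python sketch using one common form of the criterion for least squares models, n·ln(SSE/n) + p·ln(n), where smaller is better. Whether PROC REGSELECT computes SBC with exactly this expression is an assumption on my part, and the candidate models and their error sums are invented.

```python
import math


def sbc(sse, n, p):
    """Schwarz Bayesian criterion for a least squares fit (smaller is better).

    sse: error sum of squares, n: number of observations,
    p: number of estimated parameters, including the intercept.
    """
    return n * math.log(sse / n) + p * math.log(n)


# Hypothetical candidates fit to the same 100 observations: (SSE, parameters).
n = 100
candidates = {
    "weight": (420.0, 2),
    "weight + horsepower": (300.0, 3),
    "weight + horsepower + cylinders": (298.0, 4),
}

scores = {name: sbc(sse, n, p) for name, (sse, p) in candidates.items()}
best = min(scores, key=scores.get)
for name, score in scores.items():
    print(f"{name}: SBC = {score:.2f}")
print("selected:", best)
```

The third model has the smallest error, but the tiny improvement does not pay for the extra parameter, so the two-input model wins.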

Unlike PROC REG in SAS/STAT, PROC REGSELECT lets you include classification (categorical) variables and supports the LAR and LASSO selection methods, as well as the ability to use external validation data and cross validation as selection criteria. Because PROC REGSELECT runs on CAS, it exploits all the available cores and concurrent threads to run in parallel.

Nonlinear Regression Models (PROC NLMOD)

Nonlinear regression models are nonlinear in the parameters (the βs in our example). PROC NLMOD uses either nonlinear least squares or maximum likelihood to fit nonlinear regression models in SAS Viya. PROC NLMOD enables you to specify the model by using SAS programming statements. This gives you greater flexibility in modeling the relationship between the dependent (target) variable and independent (input) variables than SAS procedures that use a more structured MODEL statement.
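
As an illustration of nonlinear least squares, the Python sketch below fits y = a·exp(b·x), which is nonlinear in b, using Gauss-Newton steps with step halving. I chose Gauss-Newton because it is easy to read; it is not meant to represent the optimizer that PROC NLMOD uses. The model, the starting values, and the noise-free data are all assumptions made for the example.

```python
import math


def sse(xs, ys, a, b):
    """Sum of squared errors for the model y = a * exp(b * x)."""
    return sum((y - a * math.exp(b * x)) ** 2 for x, y in zip(xs, ys))


def gauss_newton(xs, ys, a, b, max_iter=50, tol=1e-12):
    """Fit y = a * exp(b * x) by Gauss-Newton with step halving."""
    for _ in range(max_iter):
        jaa = jab = jbb = ga = gb = 0.0
        for x, y in zip(xs, ys):
            e = math.exp(b * x)
            r = y - a * e             # residual
            da, db = e, a * x * e     # partial derivatives of the model
            jaa += da * da
            jab += da * db
            jbb += db * db
            ga += da * r
            gb += db * r
        det = jaa * jbb - jab * jab
        if abs(det) < 1e-300:
            break
        # Solve the 2x2 normal equations (J'J) step = J'r.
        step_a = (jbb * ga - jab * gb) / det
        step_b = (jaa * gb - jab * ga) / det
        # Halve the step until the error does not increase.
        current = sse(xs, ys, a, b)
        scale = 1.0
        while scale > 1e-8 and sse(xs, ys, a + scale * step_a, b + scale * step_b) > current:
            scale *= 0.5
        a += scale * step_a
        b += scale * step_b
        if abs(scale * step_a) + abs(scale * step_b) < tol:
            break
    return a, b


# Hypothetical noise-free data generated from a = 2, b = -0.5.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * math.exp(-0.5 * x) for x in xs]

a_hat, b_hat = gauss_newton(xs, ys, a=1.5, b=-0.3)
print(a_hat, b_hat)
```

Unlike OLS, there is no closed-form answer here: the fit depends on an iterative search and on reasonable starting values.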

Like PROC NLIN (but unlike PROC NLMIXED) in SAS/STAT, PROC NLMOD can estimate parameters by using least squares minimization. However, PROC NLMOD can also perform maximum likelihood estimation when information about the response variable’s distribution is available. PROC NLMOD uses different optimization techniques from PROC NLIN, so be aware that you may get different parameter estimates from PROC NLMOD, even if you use the same models and data!

Because it runs on CAS, PROC NLMOD is highly multithreaded. It allocates data to different threads and calculates the likelihood function, gradient, and Hessian matrix by accumulating the values from all threads.
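
The accumulate-across-threads idea can be sketched in Python as follows: each worker computes the objective and gradient contributions for its own slice of the data, and the partial results are summed. This is only a conceptual sketch using a least squares objective for the hypothetical model y = a·exp(b·x). It says nothing about how CAS actually distributes work, and the Hessian is omitted to keep it short.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def partial_sums(chunk, a, b):
    """Return (sse, d sse/da, d sse/db) contributed by one chunk of (x, y) rows."""
    sse = grad_a = grad_b = 0.0
    for x, y in chunk:
        e = math.exp(b * x)
        r = y - a * e
        sse += r * r
        grad_a += -2.0 * r * e
        grad_b += -2.0 * r * a * x * e
    return sse, grad_a, grad_b


def accumulate(rows, a, b, n_chunks=4):
    """Split rows into chunks, evaluate each on a worker thread, and add the parts."""
    size = max(1, -(-len(rows) // n_chunks))  # ceiling division
    chunks = [rows[i:i + size] for i in range(0, len(rows), size)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        parts = list(pool.map(lambda chunk: partial_sums(chunk, a, b), chunks))
    return tuple(sum(part[k] for part in parts) for k in range(3))


# Hypothetical data from y = 2 * exp(-0.5 * x), evaluated at a trial parameter value.
rows = [(0.1 * i, 2.0 * math.exp(-0.5 * 0.1 * i)) for i in range(40)]

total = accumulate(rows, a=1.5, b=-0.3)
single = partial_sums(rows, a=1.5, b=-0.3)
print(total, single)
```

Because the objective and its derivatives are sums over observations, the chunked totals match a single pass over all rows up to floating-point rounding.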

Logistic Regression Models (PROC LOGSELECT)

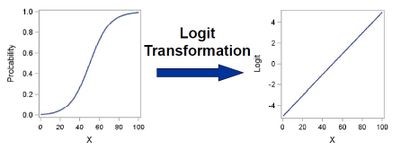

Logistic regression is appropriate where the dependent variable is categorical (for example, the response might be “cheated” or “didn’t cheat”). PROC LOGSELECT provides link functions that are widely used in practice, such as the logit, probit, log-log, and complementary log-log functions. For example, if you have a binary response, the logit link maps the probability of the event onto a continuous, unbounded scale, and, voila, you can model it as a linear function of the inputs. One advantage of the logit function over other link functions is that differences on the logistic scale are interpretable regardless of whether the data are sampled prospectively or retrospectively.

PROC LOGSELECT uses maximum likelihood to estimate the model parameters. PROC LOGSELECT is similar to PROC LOGISTIC in SAS/STAT software in some respects and different in others. See the documentation for details.
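
The point about prospective versus retrospective sampling can be checked with a little arithmetic. The Python sketch below uses an invented 2x2 table and shows that the difference in logits is the same log odds ratio whether you condition on the exposure group or on the outcome group. The counts and variable names are hypothetical.

```python
import math


def logit(p):
    """Log odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def inv_logit(z):
    """Map a value on the logit scale back to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical counts: rows are exposed / unexposed, columns are event / no event.
a, b = 30, 70   # exposed: event, no event
c, d = 10, 90   # unexposed: event, no event

# Prospective view: compare P(event) between the exposure groups.
prospective = logit(a / (a + b)) - logit(c / (c + d))

# Retrospective view: compare P(exposed) between the outcome groups.
retrospective = logit(a / (a + c)) - logit(b / (b + d))

log_odds_ratio = math.log((a * d) / (b * c))
print(prospective, retrospective, log_odds_ratio)
```

Both differences equal the log of the odds ratio, which is why a coefficient on the logit scale keeps its meaning under either sampling scheme.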

Generalized Linear Models (PROC GENSELECT)

PROC GENSELECT uses maximum likelihood to provide model fitting and model building for generalized linear models in SAS Viya. Note that generalized linear models are not to be confused with general linear models.

- A general linear model requires that the response variable follows the normal distribution.

- A generalized linear model is an extension of the general linear model that allows the specification of models whose response variables follow other distributions as well: not only the normal, but also the Poisson, binomial, beta, and negative binomial distributions.

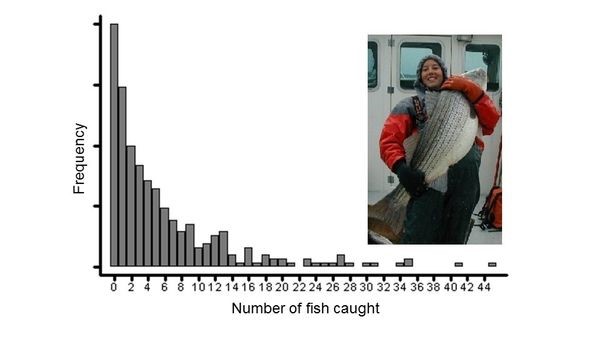

Let’s take the Poisson distribution, for example. A Poisson distribution is appropriate where the dependent variable is a count variable. An example might be the number of fish caught each day. The number of soldiers killed by horse kicks each year in the Prussian army in the late 1800s also follows a Poisson distribution (Ladislaus von Bortkiewicz, 1898).
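
Here is a short Python sketch of what "follows a Poisson distribution" means in practice. It estimates the rate as the sample mean (the maximum likelihood estimate) and compares observed and expected frequencies. The counts are the ones commonly tabulated from the Bortkiewicz data (200 corps-years); they are quoted from memory rather than taken from this post, so treat them as illustrative.

```python
import math


def poisson_pmf(k, lam):
    """Probability of observing exactly k events when the mean rate is lam."""
    return lam ** k * math.exp(-lam) / math.factorial(k)


# Deaths per corps-year -> number of corps-years (commonly quoted table; illustrative).
observed = {0: 109, 1: 65, 2: 22, 3: 3, 4: 1}

n = sum(observed.values())
# For a Poisson model, the maximum likelihood estimate of the rate is the sample mean.
lam = sum(k * count for k, count in observed.items()) / n
expected = {k: n * poisson_pmf(k, lam) for k in observed}

print(f"estimated rate = {lam:.2f} deaths per corps-year")
for k in observed:
    print(f"{k} deaths: observed {observed[k]}, expected {expected[k]:.1f}")
```

The expected frequencies track the observed ones closely, which is what made this data set a textbook example.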

Selection methods for PROC GENSELECT are the same as those for PROC REGSELECT.

Visual Statistics 7.3 uses the GENMOD statement to fit generalized linear models. For details of the differences between PROC GENSELECT and the myriad linear modeling procedures in SAS/STAT, see the documentation. For a nice explanation of SAS’s various procs for linear models in SAS 9 and how they compare to each other, see Rodriguez 2016, Stokes 2015, and Cerrito 2010.

I hope that this has been helpful. In a future post, I'll describe decision trees (PROC TREESPLIT), another supervised learning model.
