Machine Learning and Explainable AI in Forecasting - Part II

Explainability Methods in Forecasting

Introduction

What are Shapley Values?

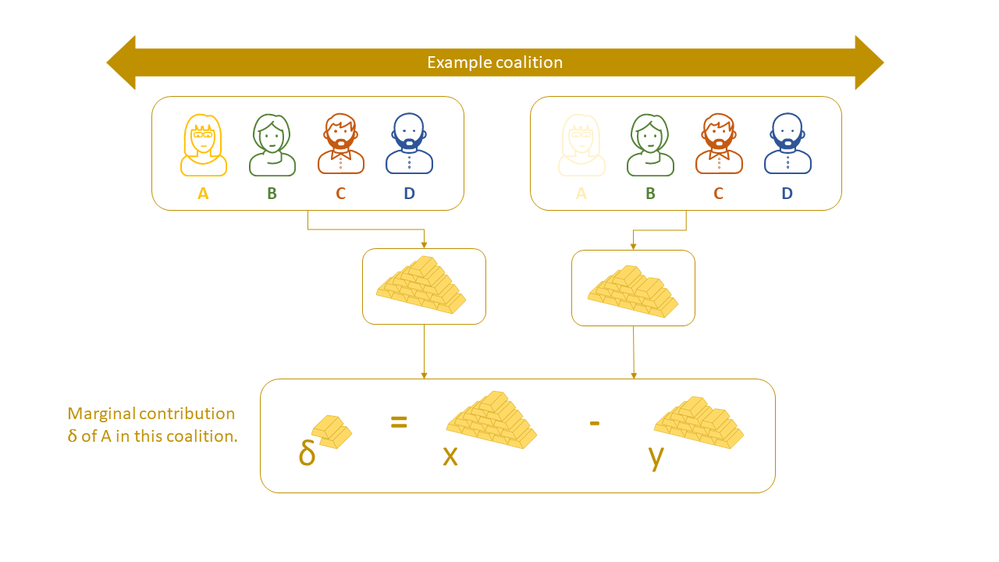

How can each member's contribution be attributed fairly? The solution by Lloyd Shapley satisfies the following properties:

- EFFICIENCY: All individual awards should add up to the total earning

- DUMMY: If including an individual brings no additional earning in any situation, then this individual should receive zero award

- SYMMETRY: If two individuals add the same amount of additional earnings in every situation, then they should receive the same award

- ADDITIVITY: If the total earning is the sum of the earnings from two separate games, then each individual's award should be the sum of their awards from the two games.
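These properties can be checked on a small example. The following is a minimal sketch, not part of the original article: it computes exact Shapley values by averaging each member's marginal contribution over all coalitions. The three-member game (`earning`) and the function name `shapley_values` are hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values for a cooperative game.

    players: list of player names
    value:   function mapping a frozenset of players to the coalition's earning
    """
    p = len(players)
    phi = {}
    for j in players:
        others = [k for k in players if k != j]
        total = 0.0
        for size in range(p):
            # Weight of every coalition with `size` members that excludes j
            weight = factorial(size) * factorial(p - size - 1) / factorial(p)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                # Marginal contribution of j when joining coalition s
                total += weight * (value(s | {j}) - value(s))
        phi[j] = total
    return phi


# Hypothetical game: A and B each add 10, C adds nothing,
# and A and B together earn an extra bonus of 4.
def earning(coalition):
    total = 10 * len(coalition & {"A", "B"})
    if {"A", "B"} <= coalition:
        total += 4
    return total


phi = shapley_values(["A", "B", "C"], earning)
print(phi)
```

In this toy game, A and B contribute identically and receive the same award of about 12 each (symmetry), C never adds anything and receives 0 (dummy), and the awards add up to the total earning of 24 (efficiency).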

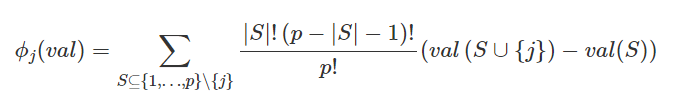

Written as formula:
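Assuming the notation of the reference linked below, where val(S) is the earning of coalition S and φ_j is the award of member j, the standard Shapley value definition is:

```latex
\phi_j(val) = \sum_{S \subseteq \{1,\dots,p\} \setminus \{j\}}
    \frac{|S|!\,(p - |S| - 1)!}{p!}
    \bigl( val(S \cup \{j\}) - val(S) \bigr),
\qquad
\frac{|S|!\,(p - |S| - 1)!}{p!} = \frac{1}{p \binom{p-1}{|S|}}
```

The second identity rewrites the weight in terms of the number of coalitions that have the same size as S.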

See: https://christophm.github.io/interpretable-ml-book/shapley.html

In the formula above, p is the total number of members, S is a coalition that excludes the member of interest, and |S| is the number of members in that coalition.

The weight of a coalition is inversely proportional to the size of its “group”, where each “group” contains all coalitions with the same number of members.

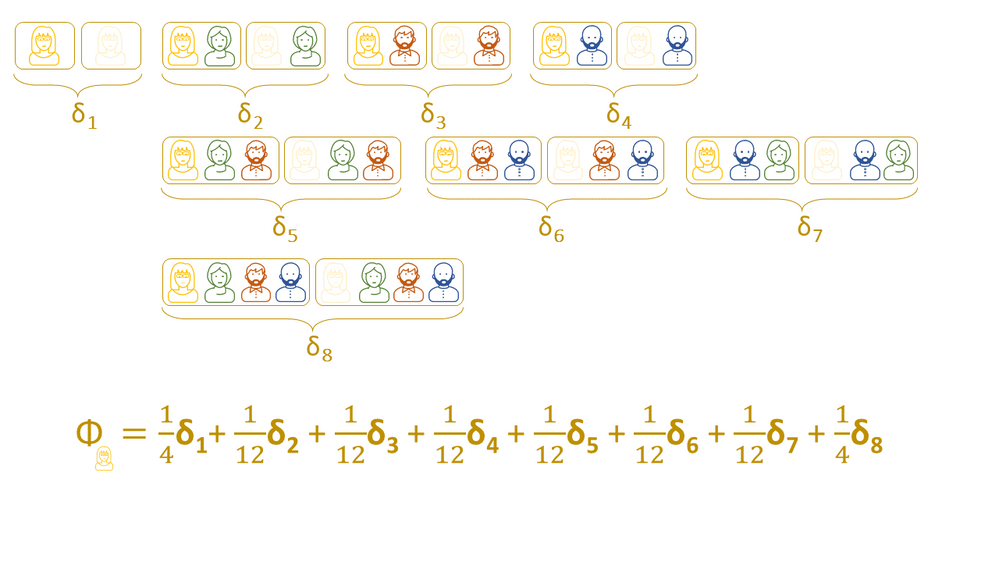

- Group 1: adding 0 other members, 1 coalition

- Group 2: adding 1 other member, 3 coalitions

- Group 3: adding 2 other members, 3 coalitions

- Group 4: adding 3 other members, 1 coalition

Each group ends up with the same total weight of 1/4, and all weights add up to 1.
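The group weights can be verified with a few lines of arithmetic. This is a small sketch, assuming four members in total (p = 4), as implied by the four groups listed above. The variable names are illustrative only.

```python
from fractions import Fraction
from math import comb, factorial

p = 4  # total number of members (assumed from the four groups above)

rows = []
for size in range(p):
    # Number of coalitions of this size that exclude the member of interest
    n_coalitions = comb(p - 1, size)
    # Shapley weight of a single coalition of this size
    weight = Fraction(factorial(size) * factorial(p - size - 1), factorial(p))
    rows.append((size, n_coalitions, weight, n_coalitions * weight))

for size, n, w, total in rows:
    print(f"adding {size} other(s): {n} coalition(s), weight {w} each, group total {total}")

print("sum of all weights:", sum(total for *_, total in rows))
```

Exact fractions are used so that the group totals compare exactly to 1/4. The single coalitions in groups 1 and 4 each carry a weight of 1/4, while each of the three coalitions in groups 2 and 3 carries 1/12.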

Using the linearExplainer Action for Time Series Data

- Pick a single observation (query)

- Generate random observations by sampling from each variable's distribution separately

- Apply the model score code that was generated by a previous step to the new observations

- Weight the observations based on their coalitions

- Run a weighted linear regression on the model's predictions

- Interpret the linear regression model coefficients
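The steps above can be sketched in plain Python. This is an illustration of the general Kernel SHAP idea under simplifying assumptions, not the implementation behind the linearExplainer action: all coalitions are enumerated (feasible only for a few inputs), the two infinite kernel weights are replaced by a large finite value, and a hypothetical linear `model` stands in for the score code. All names here are made up for the example.

```python
import random
from itertools import product
from math import comb


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def kernel_shap(model, query, background):
    """Approximate Shapley values for one query via a weighted linear regression."""
    p = len(query)
    rows, targets, weights = [], [], []
    for z in product([0, 1], repeat=p):
        # Coalition members take the query's value; the rest keep sampled values
        samples = [[q if on else v for q, v, on in zip(query, obs, z)]
                   for obs in background]
        # Apply the model to the new observations and average the predictions
        targets.append(sum(model(s) for s in samples) / len(samples))
        rows.append([1.0, *z])
        size = sum(z)
        if size in (0, p):
            weights.append(1e6)  # large finite stand-in for the infinite kernel weight
        else:
            weights.append((p - 1) / (comb(p, size) * size * (p - size)))
    # Normal equations of weighted least squares: (X'WX) beta = X'Wy
    k = p + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(k)]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]  # intercept (baseline) and one coefficient per input


rng = random.Random(1234)
data = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]  # made-up training data

# Sample each variable separately from its own observed values
columns = list(zip(*data))
background = [[rng.choice(col) for col in columns] for _ in range(100)]

coefs = [1.5, -2.0, 0.5]


def model(x):
    """Hypothetical linear model standing in for the generated score code."""
    return 2.0 + sum(c * v for c, v in zip(coefs, x))


query = [1.0, 0.5, -1.0]
base, phi = kernel_shap(model, query, background)
print("baseline:", base)
print("Shapley value estimates:", phi)
```

For a linear model, each regression coefficient should match the model coefficient times the difference between the query value and the average sampled value of that input, which makes this toy case easy to check by hand. The baseline plus the sum of the estimates recovers the model's prediction for the query.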

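proc cas;
   /* Load the action set in case it is not yet available in the session */
   loadactionset 'explainModel';

   /* Explain the model's prediction for the single observation in QUERY */
   explainModel.linearExplainer result=shapr /
      table           = {name='PRICEDATA_ID', caslib='PUBLIC'},
      query           = {name='QUERY', caslib='CASUSER'},
      modelTable      = {name='GB_PRICEDATA_MODEL_ID', caslib='MODELS'},
      modelTableType  = 'ASTORE',
      predictedTarget = 'P_sale',
      seed            = 1234,
      preset          = 'KERNELSHAP',
      dataGeneration  = {method='None'},   /* no synthetic data generation */
      inputs          = {{name="sale_lag3"},
                         {name="sale_lag2"},
                         {name="sale_lag1"},
                         {name="discount"},
                         {name="price"}};

   /* Display the results returned by the action */
   print shapr;
run;
quit;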

A few caveats apply when interpreting the results:

- The accuracy depends on how well the original data cover the coalitions.

- The Shapley values of highly correlated features may bleed into each other.

- This method can be seen as an approximation of the Shapley coalition/cohort values in [3].

Note: If you are interested in a global explanation of your machine learning model for time series data, set the preset parameter to 'GLOBALREG' to create a surrogate model that explains your model globally.

References
