It’s no secret that Snowflake, after enjoying years of early success with its data platform, has made waves (likely chilly water, though!) by expanding its capabilities into the developer world. Snowpark is its developer playground, letting users write Python, Java, and Scala against what Snowflake describes as “runtimes and libraries that securely deploy and process non-SQL code in Snowflake.”

I was fortunate enough to be among the team of SAS representatives at this year’s Snowflake Summit at the end of June. There, among many other exciting announcements, Snowflake dropped news for the Snowpark community: Snowpark Containers, a solution for deploying containerized applications from an OCI-compliant Snowflake registry and accessing those services directly through the Snowflake UI. While this is exciting in itself, the collaborative solution SAS and Snowflake have developed in tandem with the Snowpark Containers release raises the potential to a new level.

Long story short: our new partner initiative (in private preview as of July 2023) allows you to publish decisions and models from SAS Intelligent Decisioning and SAS Model Manager directly into the Snowflake ecosystem! If your data stream (real-time or batched) is flowing into Snowflake, worry no more – you can now run that data through SAS-powered decisioning without the data ever leaving your Snowflake instance. Bringing the analytics closer to your data is a quick, cost-saving approach for modeling pipelines: it reduces latency, simplifies data management, and cuts redundancy all at once.

So how did this develop, and how does it work, in long form?

The Python Prototype

This project began at the turn of the 2023 calendar year as a Python prototype. The task at hand was initially just a compatibility connector: Snowpark Containers, currently in private preview following its announcement at the 2023 Snowflake Summit, emits data in a JSON format quite different from the one the SAS Container Runtime (SCR) accepts. Additionally, Snowflake, being SQL-based, pushes rows in batches, while SCR has historically been a single-record operator. What does that mean? If you stored your data in Snowflake and wanted to score it using a SAS model, you had to build your own intermediate steps to connect the two, and you were only able to score one row at a time.

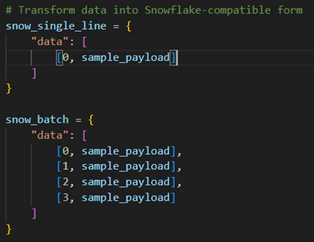

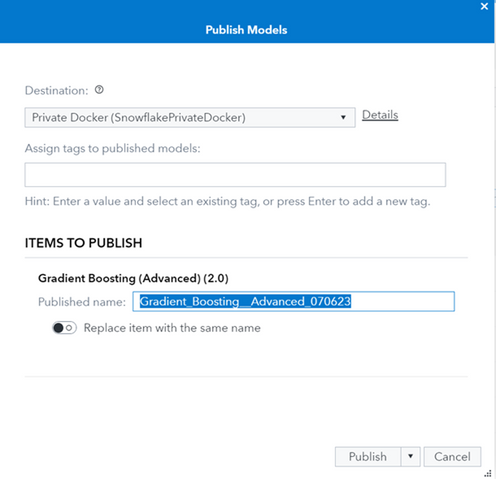

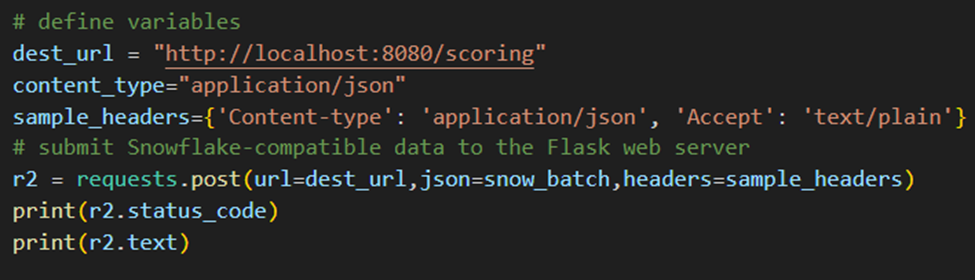

The prototype I developed addressed both these issues, and in doing so, also minimized the number of REST calls necessary to score a given subset of data. Using a Flask-based wrapper, the prototype simply accepted data in Snowflake’s format, parsed and fed it record-by-record to the contents of the SCR container, collated the results, and pushed them back to Snowflake in the original format. Here’s a snippet of sample data and what the formatting looked like:

Figure 1: (a) Sample Payload for SCR, (b) Sample Payloads from Snowflake

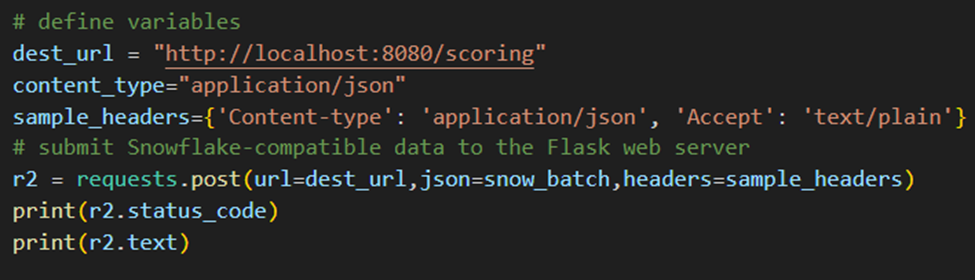

Nothing wild as far as data transformations go. Figure 1(a) showcases a sample record from the all-empowering HMEQ dataset – a commonly used decisioning set designed for home equity loan classification models. The dataset is available for download on Kaggle as HMEQ_Data. Figure 1(b) shows what a single record and a small (4-row) batch look like when output by Snowflake. The Flask app received the latter and parsed it into records matching the form in Figure 1(a), which were then fed to the SCR container around which Flask was wrapped. Figure 2 below shows the simplicity of engaging this containerized solution with local data, thus emulating the Snowflake-to-SCR-to-Snowflake pipeline.
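The parsing step described above can be sketched roughly as follows. This is a minimal illustration, not the prototype's actual code. It assumes Snowflake sends a batch as `{"data": [[row_index, value, ...], ...]}`, the row-oriented layout Snowflake documents for external functions. The column list, the sample values, and the `batch_to_records` name are all hypothetical, and the exact envelope SCR expects around each record (Figure 1(a)) is not reproduced here.

```python
import json

# Hypothetical column order (a subset of HMEQ inputs, for illustration only).
COLUMNS = ["LOAN", "MORTDUE", "VALUE", "REASON", "JOB"]

def batch_to_records(payload, columns=COLUMNS):
    """Split a Snowflake-style batch into (row_index, record) pairs."""
    records = []
    for row in payload["data"]:
        # Assumption: the first element of each row is Snowflake's row index.
        row_index, values = row[0], row[1:]
        if len(values) != len(columns):
            raise ValueError(
                f"row {row_index}: expected {len(columns)} values, got {len(values)}"
            )
        records.append((row_index, dict(zip(columns, values))))
    return records

# Illustrative two-row batch; the values are placeholders, not real results.
batch = json.loads(
    '{"data": [[0, 1100, 25860, 39025, "HomeImp", "Other"],'
    ' [1, 1300, 70053, 68400, "HomeImp", "Other"]]}'
)
for row_index, record in batch_to_records(batch):
    print(row_index, record)
```

Keeping the row index next to each record matters because the response has to hand results back under the same indices.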

Figure 2: Scoring a Batch

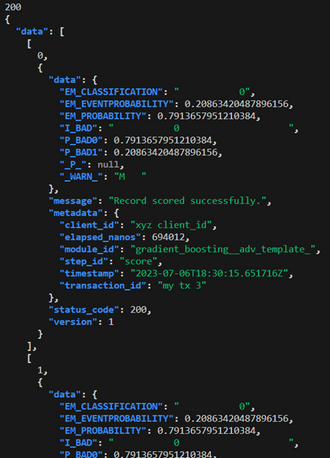

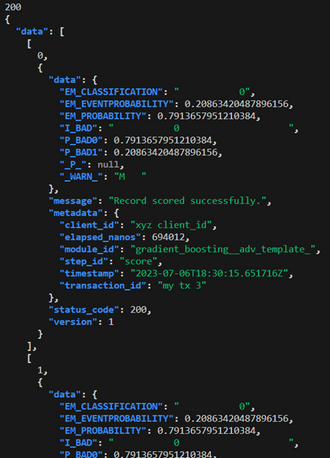

The results follow the exact format of the input, as seen in Figure 3. Extra fields were added to the data dictionary in each record’s iteration to assist Snowflake users in debugging any potential issues when they receive their results in the Snowsight UI.
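A rough sketch of that collation step is shown here, under stated assumptions. The response mirrors the request layout (`{"data": [[row_index, result], ...]}`), and each result dictionary carries a couple of extra fields to help with debugging. The field names `scr_status` and `scr_message`, the `score_batch` helper, and the stand-in scorer are hypothetical; the prototype's actual fields appear in Figure 3.

```python
def score_batch(payload, columns, score_record):
    """Score each row once and return a Snowflake-style response body."""
    results = []
    for row in payload["data"]:
        row_index, values = row[0], row[1:]
        record = dict(zip(columns, values))
        try:
            output = dict(score_record(record))
            output["scr_status"] = "ok"  # hypothetical debug field
        except Exception as exc:
            # Report the failure for this row instead of failing the whole batch.
            output = {"scr_status": "error", "scr_message": str(exc)}
        results.append([row_index, output])
    return {"data": results}

def fake_scorer(record):
    # Stand-in for the call into the SCR container; NOT a real model.
    if record["LOAN"] is None:
        raise ValueError("LOAN is missing")
    return {"score": 0.5}

response = score_batch({"data": [[0, 1100], [1, None]]}, ["LOAN"], fake_scorer)
print(response)
```

Catching errors per row is a design choice in this sketch: one bad record is flagged in its own result rather than causing the whole batch to be rejected.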

Figure 3: Batch Scoring Results

This prototype served as a launching pad from which SAS could build a companion solution for Snowpark Containers directly into its Viya 4 offerings. This solution mimicked the prototype functionality, but also added publishing features that make the entire process seamless for the user. Let’s take a live look at SAS Model Manager to see this in action:

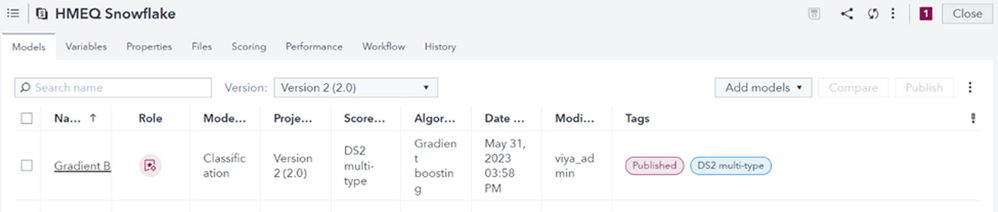

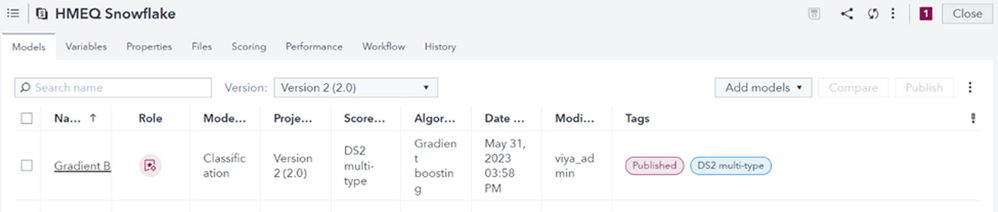

Figure 4: SAS Model Manager Project View

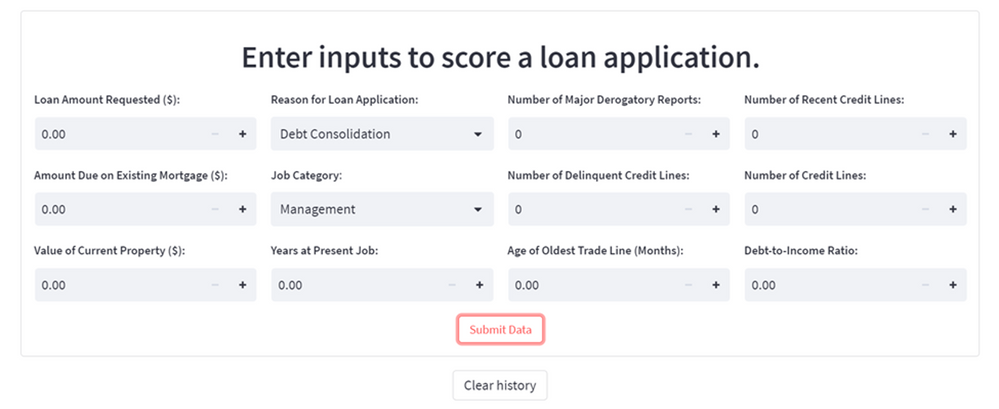

The project above is a one-model competition (and thus, really no competition at all) to accurately predict loan defaults on the aforementioned HMEQ data set. As the only model available, the Gradient Boost developed in SAS Model Studio is the landslide champion. We want to publish this model directly into the Snowflake Registry, but we also want the SCR container to have the ability to intake data batches properly from Snowflake. Fear not – a new configurable publishing destination does just that!

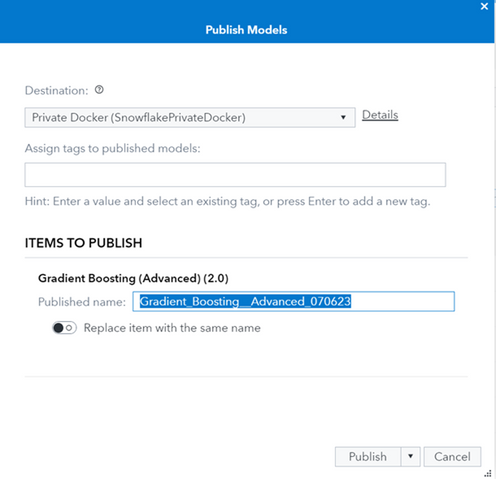

Figure 5: Model Publish View in SAS Model Manager

Another time, I’ll dig deeper and showcase step-by-step how to configure this publishing destination in your own Viya 4 environment. Just keep in mind – the Snowpark functionality to which I’m connecting is not generally available as of July 2023, so stay tuned! In short, once you publish to this new destination, you’ll be able to use the Snowsight UI (or SnowSQL) to work with images published in the ecosystem’s registry and create services, from which you can really bring the power of Snowflake data and SAS analytics to life.

In a simple Snowflake worksheet, you can create and configure the functionality using SQL objects in a manner very familiar to Snowflake users. The ease of setup is just one of the many benefits of leveraging SCR and Snowpark. Try using SAS analytics to build your decisioning pipelines, then automate the scoring of data with Snowflake’s tasks and streams. Just like that, you have an automated pipeline that retrieves data as it arrives in your cloud storage of choice and provides cutting-edge analysis courtesy of SAS.

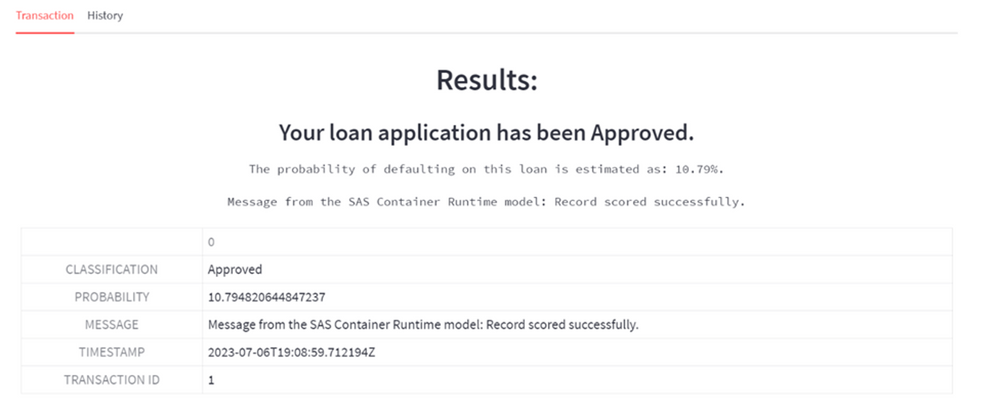

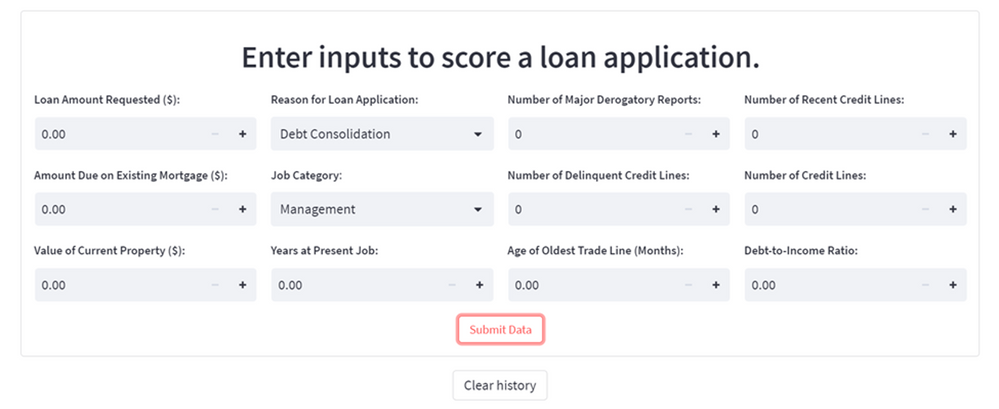

You can even run your own apps leveraging the services you create in Snowpark. I’ve created a simple loan-scoring app using Streamlit to showcase just that:

Figure 6: Inputs to Loan Scoring App

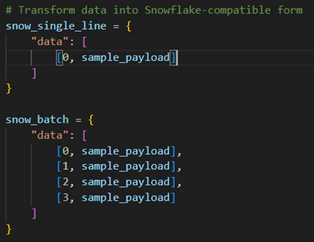

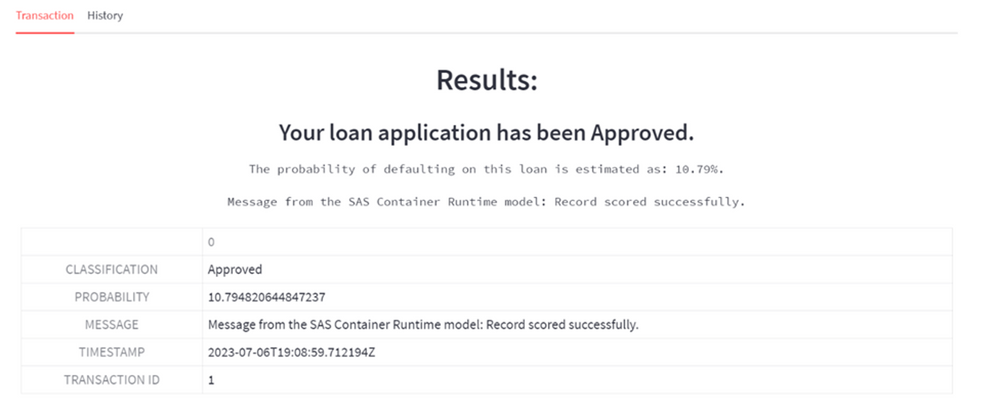

Leveraging the containerized solution in the Snowflake registry, we can push a single record (technically a batch of 1 row) directly to the deployed model, receiving results like the following output:

Figure 7: Loan Scoring App Sample Outputs
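To make the "batch of 1 row" point concrete, here is a small sketch of what the deployed container would see for a single application. It assumes the same row-oriented envelope Snowflake documents for external functions. In practice Snowflake builds this envelope itself when the function is called from SQL, so the helper name, column order, and values below are hypothetical and only for illustration.

```python
import json

# Hypothetical column order; it must match the order the deployed function expects.
COLUMNS = ["LOAN", "MORTDUE", "VALUE"]

def as_single_row_batch(record, columns=COLUMNS):
    """Wrap one record in the same envelope used for larger batches."""
    row = [0] + [record[name] for name in columns]  # row index 0, then values in order
    return {"data": [row]}

form_inputs = {"LOAN": 25000, "MORTDUE": 80000, "VALUE": 120000}  # made-up values
print(json.dumps(as_single_row_batch(form_inputs)))
```

Because a single record is just a one-row batch, the same container endpoint can serve both an interactive app and bulk scoring.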

The possibilities here are limitless. I strongly encourage you to sit tight while the final products mature into general availability. Know that not only is this a huge step forward for integrating top-of-the-market analytics and data warehousing solutions, but it is also just one indicator of great things to come between SAS & Snowflake.

Thanks for reading, and happy modeling!

Questions, comments, ideas for future integrations? Email joe.cabral@sas.com