
SAS Viya Temporary Storage on Red Hat OpenShift – Part 2: CAS DISK CACHE


In the first article of the series, we saw how to leverage the OpenShift Local Storage Operator to make Azure VM ephemeral disks available to the pods running in the OpenShift Container Platform cluster. Now that this is done, the next step is to use them for SAS Viya temporary storage.

In this article we will focus on CAS: how can you configure CAS to use the local storage backed by the Azure ephemeral disk as your CAS DISK CACHE?

A quick summary

Here is the TL;DR recap of where we left off in the previous article:

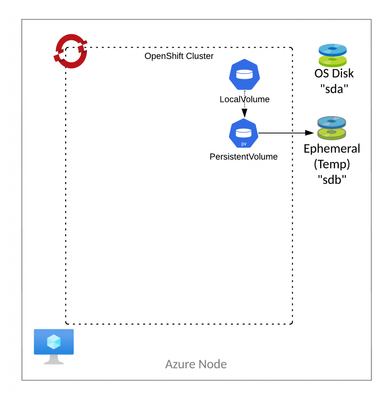

- We are working in a test environment based on OpenShift deployed on Azure virtual machines

- The virtual machines we selected provide a fast local SSD disk that we want to use for temporary storage

- We configured those disks and the cluster so that the OpenShift Local Storage Operator creates a PersistentVolume (PV) on each node to reference them, through a custom Storage Class called `sastmp`.

A PersistentVolume referencing an Azure ephemeral disk as configured in the last article.


The process

Just as with every endeavor, let’s plan our steps before starting the journey. Configuring CAS DISK CACHE is described in the official documentation, so it will be quite simple:

- Verify the current (default) configuration

- Configure CAS DISK CACHE

- Resolve issues

- Verify the new configuration

Since we are in a test environment, we use a “crash test method”: perform the changes, apply, and, if something does not work, fix the issues. In a production environment, steps 2 and 3 should be inverted: you should find and fix issues in your dev/test environments so that you can apply the right sequence in production.

1. Verify the current (default) configuration

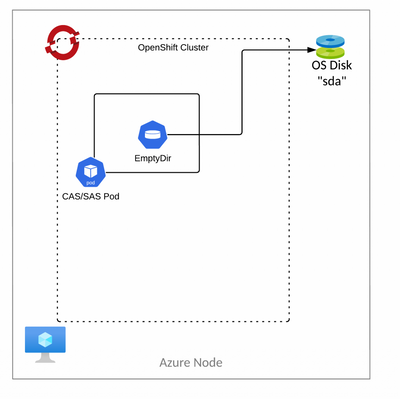

From the official documentation we know that, by default, CAS servers are configured to use a directory that is named `/cas/cache` on each controller and worker pod. This directory is provisioned as a Kubernetes emptyDir and uses disk space from the root volume of the Kubernetes node.

With the default CAS DISK CACHE configuration, a CAS pod uses an emptyDir that points to the node root disk.

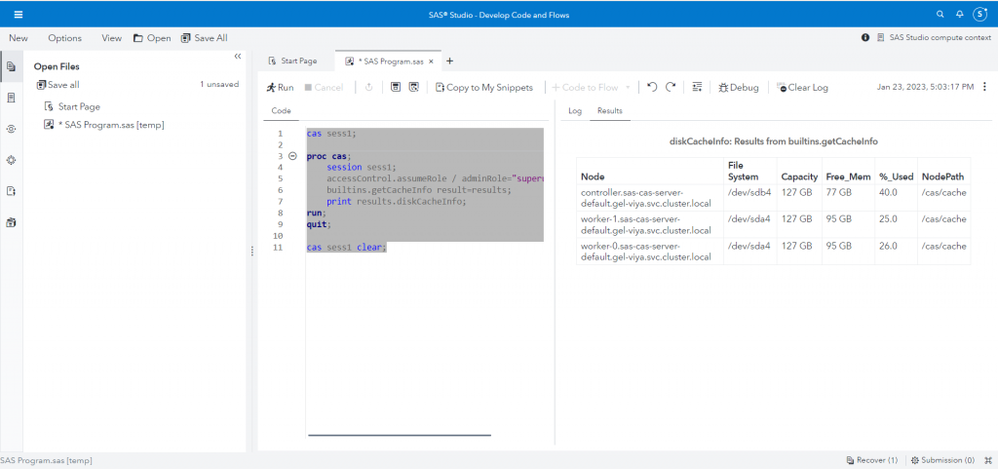

We can run some code in SAS Studio to verify this setup so that we will be able to confirm the changes after applying the new configuration. There is no need to reinvent the wheel: we can take an example from @Rob Collum’s article A New Tool to Monitor CAS_DISK_CACHE Usage. Here is the code:

    cas sess1;

    proc cas;
       session sess1;
       accessControl.assumeRole / adminRole="superuser";
       builtins.getCacheInfo result=results;
       print results.diskCacheInfo;
    run;
    quit;

    cas sess1 clear;

Sample code in SAS Studio to show the default CAS configuration.

The Results page confirms our knowledge:

- The CAS disk cache is located at the path `/cas/cache` (this is the path as seen by the CAS process inside the pods).

- On all nodes of our test environment (controller, workers), that path resides on a disk with ~127GB of capacity, on a device called `sda4` or `sdb4`.

- As we verified in the previous article, these correspond to the OS root disks.

- This indirectly confirms that we are using a default `emptyDir`.
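The same facts can be cross-checked from the command line. The following is a minimal sketch, not taken from the official documentation. It assumes that `NS` holds the name of your SAS Viya namespace (`viya` is only a placeholder default), that the default CAS controller pod is named `sas-cas-server-default-controller`, and that the `df` utility is available inside the CAS container. The function name `cas_cache_df` is hypothetical.

```shell
# Assumptions: NS is a placeholder for your SAS Viya namespace, and the
# default CAS controller pod is named sas-cas-server-default-controller.
NS="${NS:-viya}"
CAS_POD="${CAS_POD:-sas-cas-server-default-controller}"

# Show the filesystem, size, and device backing a path inside the CAS pod.
# $1: path to inspect (defaults to /cas/cache, the default cache location).
cas_cache_df() {
  oc -n "$NS" exec "$CAS_POD" -- df -h "${1:-/cas/cache}"
}
```

Calling `cas_cache_df` with no argument inspects `/cas/cache`. The device and size it reports should be consistent with what `getCacheInfo` shows in SAS Studio.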

2. Configure CAS DISK CACHE

We will follow and adapt the instructions from the official documentation to configure CAS so that the CAS Disk Cache will use the local temporary storage we configured in the previous article.

The example in the "Tune CAS_DISK_CACHE" section shows how to mount a volume of type `hostPath`, but you can choose the type that best suits your needs.

If your environment is based on Kubernetes 1.23 or later, you can leverage the generic ephemeral volumes that became GA in that release. This combines the advantages of using a PersistentVolumeClaim with the advantages of ephemeral storage:

- You can specify, in the pod definition, a template volume claim that mimics a statically provisioned PVC (such as the one we used in the previous article to test the local storage)

- OpenShift will take care of managing the PVC lifecycle: it creates a PVC bound to the pod when the pod starts and deletes the PVC when the pod is terminated. This is perfect for temporary storage, such as CAS DISK CACHE!

Here is a sample yaml transformer, based on the one provided in the official documentation, that defines a generic ephemeral volume that references our `sastmp` Storage Class.

    # # this defines the volume and volumeMount for the CAS DISK CACHE location
    ---
    apiVersion: builtin
    kind: PatchTransformer
    metadata:
      name: cas-cache-custom
    patch: |-
      - op: add
        path: /spec/controllerTemplate/spec/volumes/-
        value:
          name: cas-cache-sastmp
          ephemeral:
            volumeClaimTemplate:
              spec:
                accessModes: [ "ReadWriteOnce" ]
                storageClassName: "sastmp"
                resources:
                  requests:
                    storage: 100Gi
      - op: add
        path: /spec/controllerTemplate/spec/containers/0/volumeMounts/-
        value:
          name: cas-cache-sastmp
          mountPath: /cas/cache-sastmp # # mountPath is the path inside the pod that CAS will reference
      - op: add
        path: /spec/controllerTemplate/spec/containers/0/env/-
        value:
          name: CASENV_CAS_DISK_CACHE
          value: "/cas/cache-sastmp" # # This has to match the mountPath value above
    target:
      version: v1alpha1
      group: viya.sas.com
      kind: CASDeployment
      # # Target filtering: choose/uncomment one of these options:
      # # To target only the default CAS server (cas-shared-default):
      labelSelector: "sas.com/cas-server-default"
      # # To target only a single CAS server (e.g. MyCAS) other than default:
      # name: {{MyCAS}}
      # # To target all CAS servers:
      # name: .*

After creating the cas-cache-custom transformer, the process to apply it is the same as any other customization. Since it depends on the method originally used to deploy SAS Viya, please see the SAS Viya official documentation referenced above for the exact steps.

The next step is to restart the CAS server so that it picks up the new configuration. It is enough to terminate the CAS server pods: the CAS Operator will take care of restarting them. You can use a quick command such as the following, replacing `<namespace>` with the name of your SAS Viya namespace:

    oc -n <namespace> delete pods -l app.kubernetes.io/managed-by=sas-cas-operator

3. Resolve issues

If you have followed along this far, you are now expecting to have a working environment with CAS using the locally attached disk as its temporary storage. And that could be true if we were using any other Kubernetes platform.

Yet, as of February 2023, we may still need a crucial step to get there on OpenShift.

After applying the changes above and terminating CAS pods, you may find that the CAS operator is unable to restart your CAS server. The sas-cas-operator pod log may contain errors such as: "sas-cas-server-default-controller" is forbidden: unable to validate against any security context constraint.
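One way to look for that message is to filter the operator log. This is a minimal sketch under stated assumptions: `NS` is a placeholder for your SAS Viya namespace, and the operator is assumed to run as a Deployment named `sas-cas-operator` (the article only mentions the sas-cas-operator pod). The function name `find_scc_errors` is hypothetical.

```shell
# Assumptions: NS is a placeholder for your SAS Viya namespace, and the CAS
# operator runs as a Deployment named sas-cas-operator.
NS="${NS:-viya}"

# Print recent operator log lines that mention a security context constraint.
# Returns non-zero when no such line is found, as grep does.
find_scc_errors() {
  oc -n "$NS" logs deployment/sas-cas-operator --tail=500 |
    grep -i 'security context constraint'
}
```

If `find_scc_errors` prints nothing, the restart failure, if any, probably has a different cause.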

What’s going on?

Simply put, OpenShift is doing one of its jobs: enforcing a strict security policy.

Before installing SAS Viya, as a preliminary step, the cluster administrator created some security context constraints as instructed by the Preparing for OpenShift documentation page. One of these describes the permissions granted to CAS, including a list of permitted volume types: anything else gets automatically denied.

Assuming you used the sas-cas-server security context constraint, you can check which volume types it permits with the following command:

    oc get scc sas-cas-server -o jsonpath='{.volumes}'

By default, you should get a listing like the following: notice that the "ephemeral" volume we tried to use is not there.

    ["configMap","downwardAPI","emptyDir","nfs","persistentVolumeClaim","projected","secret"]

In summary, the missing step is to add ephemeral volumes to the list of permitted entries in the CAS security context constraint. Here is a simple command to do it:

    oc -n <namespace> get scc sas-cas-server -o json | jq '.volumes += ["ephemeral"]' | oc -n <namespace> apply -f -

This one-liner uses the OpenShift CLI to read the current definition of the sas-cas-server security context constraint and feeds it into the `jq` tool, which appends the entry "ephemeral" to the array of permitted volumes. Then, `jq` pipes the result into a second OpenShift CLI command that loads the updated definition back into the cluster.
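One caveat: the `+=` operator appends unconditionally, so running the one-liner a second time would add a second "ephemeral" entry. The following is a hedged variant that appends only when the entry is missing. It is a sketch, not part of the official instructions, and the function name `add_ephemeral_volume` is hypothetical.

```shell
# Read an SCC definition (JSON) on stdin and append "ephemeral" to .volumes
# only if it is not already listed, so the filter can be re-run safely.
add_ephemeral_volume() {
  jq 'if (.volumes | index("ephemeral")) then . else .volumes += ["ephemeral"] end'
}
```

It would slot into the same pipeline, for example `oc get scc sas-cas-server -o json | add_ephemeral_volume | oc apply -f -`. Security context constraints are cluster-scoped, so no namespace is needed here.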

This should be all. At this point, OpenShift should successfully validate the CAS controller request to allocate ephemeral volumes for the CAS pods, and the CAS server should be automatically started.

The ephemeral disk is now used by the CAS pod
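Once the pods are back, the claim created for each of them can be inspected. Kubernetes names the PVC of a generic ephemeral volume by joining the pod name and the volume name with a hyphen. The sketch below relies on that rule and assumes `NS` is a placeholder for your SAS Viya namespace. The function names are hypothetical.

```shell
# Assumption: NS is a placeholder for your SAS Viya namespace.
NS="${NS:-viya}"

# Kubernetes names the PVC of a generic ephemeral volume "<pod>-<volume>".
ephemeral_pvc_name() {
  printf '%s-%s\n' "$1" "$2"
}

# Show the PVC that backs the cache volume of a given CAS pod.
# $1: pod name; $2: volume name (defaults to cas-cache-sastmp).
show_cache_pvc() {
  oc -n "$NS" get pvc "$(ephemeral_pvc_name "$1" "${2:-cas-cache-sastmp}")"
}
```

For the default controller, `show_cache_pvc sas-cas-server-default-controller` should list a claim that is bound through the `sastmp` Storage Class.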

4. Verify the new configuration

As a last step, we just have to verify that the new setting is actually working (trust but verify!).

We can use the same code in SAS Studio as we did initially. The new result should give us the information we are looking for:

- The CAS disk cache is now located at the new path `/cas/cache-sastmp`, as we specified. This is the path as seen by the CAS process inside the pods.

- On all nodes (controller, workers), that path resides on a disk with ~299GB of capacity, on a different device than in the previous run.

- We can recognize that these correspond to the Azure temporary SSD disks. Bigger, faster.

SUCCESS!

Sample code in SAS Studio to show the updated CAS configuration.
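The setting can also be read back from the pod definition. This is a minimal sketch, assuming `NS` is a placeholder for your SAS Viya namespace, the default controller pod is named `sas-cas-server-default-controller`, and the CAS container is still the first container in the pod, as targeted by the transformer. The function name `cas_cache_env` is hypothetical.

```shell
# Assumptions: NS is a placeholder for your SAS Viya namespace, and the
# default CAS controller pod is named sas-cas-server-default-controller.
NS="${NS:-viya}"
CAS_POD="${CAS_POD:-sas-cas-server-default-controller}"

# Print the value of CASENV_CAS_DISK_CACHE set on the first container of the pod.
cas_cache_env() {
  oc -n "$NS" get pod "$CAS_POD" \
    -o jsonpath='{.spec.containers[0].env[?(@.name=="CASENV_CAS_DISK_CACHE")].value}'
}
```

After the change, the output of `cas_cache_env` should include `/cas/cache-sastmp`, matching the `mountPath` defined in the transformer.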

Conclusion

In this series of articles, we are discussing how to leverage locally attached ephemeral disks for pods running in an OpenShift Container Platform cluster. They are a convenient choice for SAS Viya temporary storage and, in this article, we have seen how to configure CAS_DISK_CACHE to use them as Kubernetes generic ephemeral volumes.


Hello @EdoardoRiva ,

Thanks for sharing these articles. They're mandatory reading for OpenShift practitioners with Viya.

I wanted to know what account privileges are required to apply the scc manifest generated on the fly :

    oc -n <namespace> get scc sas-cas-server -o json | jq '.volumes += ["ephemeral"]' | oc -n <namespace> apply -f -

is it Openshift admin cluster-wide or SAS Viya namespace admin ?

In our on-prem site, we deploy within a shared Openshift cluster therefore administration privileges cluster-wide and ns admin roles are kept strictly separate.

Ronan


Hello @ronan , I'm glad you find these articles useful.

I modify the scc on the fly with the same user account that was used in the pre-installation steps to originally create it as described in the Security Context Constraints and Service Accounts section of the official documentation. Since scc are highly privileged objects, that usually means that a cluster administrator has to do it.


Thanks for your answer @EdoardoRiva and for the link also, this is clear.

