An Autoscaling Experience on SAS Viya


Modernizing an analytics platform requires a focus on cost and efficiency.

Workload Management is the SAS Viya solution for efficiently handling many intensive compute workloads. It has been generally available as an add-on to SAS Viya since November 2021.

Two recent developments bring renewed focus upon Workload Management:

- It’s now more easily accessible! From monthly Stable 2023.08 onwards, Workload Management is included out of the box with most SAS Viya offerings. You only need to enable and configure it.

- Configuration now enables automated scaling of compute nodes to accommodate workloads of varied profiles! This brings multiple benefits. For one, automation can respond quickly to current demand. More significantly, administrators can differentiate resources to suit the type of workload being submitted.

The documentation on administering autoscaling policies is straightforward (see References). This article demonstrates how we configured an example deployment for autoscaling. It also shows how we ran workloads on the right level and type of resource.

Infrastructure

We’ve based our example on Azure cloud resources, but configuration and setup follow a similar pattern across providers. For the infrastructure, we used the Viya 4 Infrastructure-as-Code (IaC) repository for Azure on GitHub. In it, we specify the desired infrastructure, and an automation tool (Terraform) uses that specification to provision the resources with the cloud provider.

Table 1 shows the topology of SAS Compute nodes we planned for. Workload Management is currently concerned with Compute nodes only, so we have omitted information on other node pools, networking and storage. Those also have to be factored in.

Table 1: Topology of Compute Nodes

| Node Pool Name | Purpose | Machine Type | Node Count (Min – Max) | Labels |
|---|---|---|---|---|
| Compute | For interactive users (SAS Studio and similar) | Standard_E4bds_v5 (4 vCPUs, 32 GiB RAM, 150 GiB disk) | 1 – 1 | wlm/nodeType="interactive" |
| Combatsm | Small SAS jobs submitted through batch | Standard_E4bds_v5 (4 vCPUs, 32 GiB RAM, 150 GiB disk) | 0 – 8 | wlm/nodeType="batchsmall" |
| Combatmd | Medium SAS jobs submitted through batch | Standard_E8bds_v5 (8 vCPUs, 64 GiB RAM, 300 GiB disk) | 0 – 2 | wlm/nodeType="batchmed" |
| Combatlg | Large SAS jobs submitted through batch | Standard_E16bds_v5 (16 vCPUs, 128 GiB RAM, 600 GiB disk) | 0 – 1 | wlm/nodeType="batchlarge" |
| All node pools | | | | workload.sas.com/class="compute", launcher.sas.com/prepullImage="sas-programming-environment" |
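The following Python sketch makes the cost argument behind these ranges concrete. It transcribes Table 1 and totals the vCPUs and memory the Compute tier holds with every pool at its minimum and at its maximum node count. The class and function names are illustrative only. This is arithmetic on the table, not part of any SAS or Azure tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodePool:
    name: str
    node_type: str  # value of the wlm/nodeType label
    vcpus: int      # per node
    ram_gib: int    # per node
    min_nodes: int
    max_nodes: int


# Values transcribed from Table 1.
POOLS = [
    NodePool("Compute", "interactive", 4, 32, 1, 1),
    NodePool("Combatsm", "batchsmall", 4, 32, 0, 8),
    NodePool("Combatmd", "batchmed", 8, 64, 0, 2),
    NodePool("Combatlg", "batchlarge", 16, 128, 0, 1),
]


def footprint(pools, at_max):
    """Total (vCPUs, RAM in GiB) with every pool at its min or max node count."""
    vcpus = ram = 0
    for pool in pools:
        count = pool.max_nodes if at_max else pool.min_nodes
        vcpus += count * pool.vcpus
        ram += count * pool.ram_gib
    return vcpus, ram


if __name__ == "__main__":
    print("Idle (all pools at minimum):", footprint(POOLS, at_max=False))  # (4, 32)
    print("Peak (all pools at maximum):", footprint(POOLS, at_max=True))   # (68, 544)
```

With every batch pool at zero, only the warm interactive node (4 vCPUs, 32 GiB) is running. At full scale-out, the tier reaches 68 vCPUs and 544 GiB.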

Some salient points:

- As already stated, this applies only to SAS Compute node pools. These pools handle programs that run in either a compute server or a batch server session. Most are SAS programs that operate on SAS data sets. Some may also call other compute engines such as SAS Cloud Analytic Services (CAS), Python or R (if Python or R has been configured). All nodes in the above node pools need the label workload.sas.com/class="compute". Only nodes with this label are considered for running Compute workloads.

- The above configuration is opinionated: we, as administrators, chose it for the purposes of this example. How do organizations decide which node pools to provision? Some may choose just one, while others may have a wider range at their disposal. The choice depends on their current profile of SAS workloads and many other factors, including cost. One tool that can help your organization make this decision is SAS Ecosystem Diagnostics (see References).

- Notice that most of the planned node pools have a minimum of 0 nodes. Even though we provision a variety of node pools, autoscaling lets each one scale up from zero. As a result, we don’t pay for compute resources unless they are actually used.

- At the same time, notice the outlier: the Compute node pool (for interactive use) has a minimum of 1. This is deliberate, because interactive users are best served by a compute server that is always on. Simply put, in this day and age, you don’t want interactive users staring at spinning wheels while they wait for a node to crank up. You have the flexibility to keep a warm node to serve a subset of users, perhaps on a small machine to keep cloud costs low.

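- Don’t forget to add the additional label launcher.sas.com/prepullImage="sas-programming-environment" to all nodes. It lets SAS Viya pull the sas-programming-environment image onto each node ahead of time. Sessions on a newly scaled-up node then don’t have to wait for the image download. It saves you a lot of angst.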
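The labelling rules above lend themselves to a quick pre-flight check, sketched below in Python. It assumes you have already collected each node's labels into a dictionary, for example from the output of kubectl get nodes --show-labels. The node names and inventory are hypothetical. The sketch reports which nodes are eligible for a given wlm/nodeType and which required labels a Compute node is missing.

```python
# Labels every SAS Compute node should carry, per Table 1.
REQUIRED_LABELS = {
    "workload.sas.com/class": "compute",
    "launcher.sas.com/prepullImage": "sas-programming-environment",
}


def missing_labels(labels):
    """Required label keys that are absent or carry the wrong value."""
    return sorted(key for key, value in REQUIRED_LABELS.items()
                  if labels.get(key) != value)


def eligible_nodes(nodes, node_type):
    """Names of Compute-class nodes whose wlm/nodeType matches node_type."""
    return sorted(
        name for name, labels in nodes.items()
        if labels.get("workload.sas.com/class") == "compute"
        and labels.get("wlm/nodeType") == node_type
    )


# Hypothetical node inventory, for illustration only.
NODES = {
    "node-a": {
        "workload.sas.com/class": "compute",
        "launcher.sas.com/prepullImage": "sas-programming-environment",
        "wlm/nodeType": "interactive",
    },
    "node-b": {  # compute node that is missing the prepull label
        "workload.sas.com/class": "compute",
        "wlm/nodeType": "batchsmall",
    },
    "node-c": {"workload.sas.com/class": "cas"},  # not a Compute node
}

if __name__ == "__main__":
    print(eligible_nodes(NODES, "batchsmall"))  # ['node-b']
    for name, labels in NODES.items():
        # Only Compute-class nodes are expected to carry the Compute labels.
        if labels.get("workload.sas.com/class") == "compute":
            print(name, "missing:", missing_labels(labels))
```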

Configuration

This is the fun part. With the infrastructure provisioned, let’s look at how to configure Workload Management and optimize usage to suit our needs.

You configure Workload Management through a plug-in called Workload Orchestrator (WLO) in the SAS Environment Manager application. Administrators use WLO to decide which resources are appropriate for running a workload. For users, it is a great resource for monitoring the status of jobs.

The configuration process is much easier when you treat the entire configuration as a single JSON document containing all the required details. On the Workload Orchestrator configuration page, simply click “Import”, import the sample configuration provided here, make the required changes, and you are set. Conversely, you can export the configuration at any time to reuse it in a different environment later.
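When you move an exported configuration between environments, it can help to see what differs before importing. The Python sketch below compares two JSON documents, such as two exported Workload Orchestrator configurations, and lists added, removed and changed values. It is schema-agnostic. The keys in the example are hypothetical and do not reflect the actual layout of a Workload Orchestrator export.

```python
import json


def json_diff(old, new, path=""):
    """Yield (kind, path, value) for each difference between two JSON values.

    Objects are compared key by key; lists and scalars are compared as whole values.
    """
    if isinstance(old, dict) and isinstance(new, dict):
        for key in sorted(set(old) | set(new)):
            child = f"{path}/{key}"
            if key not in old:
                yield ("added", child, new[key])
            elif key not in new:
                yield ("removed", child, old[key])
            else:
                yield from json_diff(old[key], new[key], child)
    elif old != new:
        yield ("changed", path, (old, new))


if __name__ == "__main__":
    # Hypothetical keys, for illustration only.
    dev = json.loads('{"queue": "batch-small-queue", "limits": {"maxJobs": 4}}')
    prod = json.loads('{"queue": "batch-small-queue", "limits": {"maxJobs": 8, "autoscale": true}}')
    for diff in json_diff(dev, prod):
        print(diff)
```

To compare two exported files, load each one with json.load and pass both to json_diff.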

Of course, even more fun is actually looking at the individual components making up the configuration. The official documentation on configuring Workload Orchestrator provides more details. Here, we’ll focus on tasks which support the following basic flow of events.

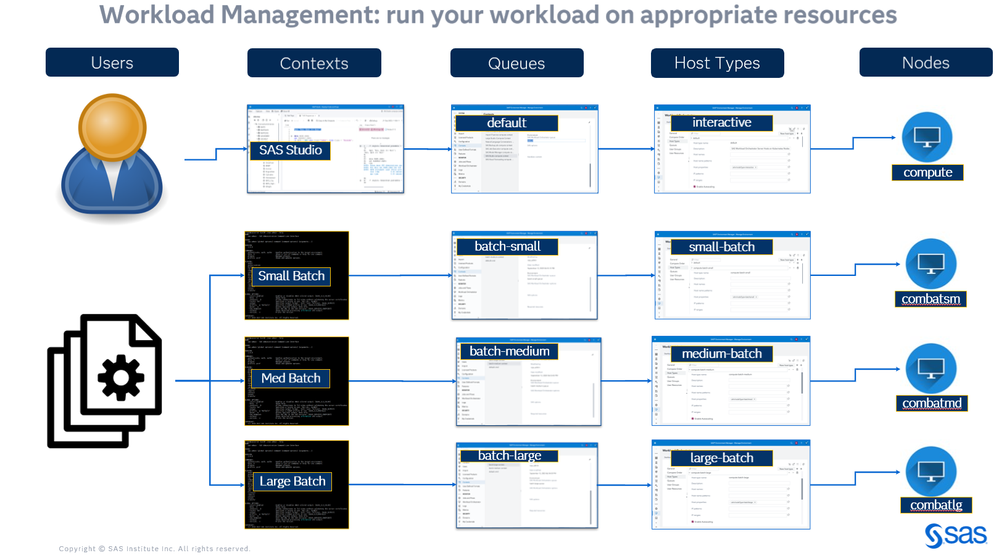

Figure 1: Workload Execution - user flow

There’s a lot going on in the picture above, so let’s summarize:

- Users require execution of their workloads (SAS programs).

- They submit workloads through interfaces like SAS Studio (which contain an element of interactivity) or via batch jobs from the command line.

- Every submission is flagged with a context, which indicates the broad set of parameters under which this job will run. The context could be either a Compute context or a batch context.

- This context is wired to run in a SAS Workload Orchestrator queue. Queues are defined in Workload Orchestrator to govern, among other things, where and when the jobs may be executed.

- The queue is configured to request that the job run on a particular host type, if one is defined for it.

- Host types are configured with host properties that specify the labels (from the table in Infrastructure, above) which identify candidate nodes to run the workload on.

- Each host type is also flagged as either enabled or disabled for autoscaling.

- Given a request for a job to run on a host type, Workload Management requests an available node of that host type. If one is available, the requested session (either sas-compute or sas-batch) is started on that node to execute the job.

- If no node is available but autoscaling is enabled on that host type, Workload Management works with the Kubernetes cluster-autoscaler to signal the need for a node. The cluster-autoscaler responds by adding a node to the matching node pool through the cloud provider. The job runs once that node is ready.

A number of conditions determine whether a node is available. They are explained in detail in the SAS documentation on Workload Management and the cluster autoscaler (see References).
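The Python sketch below models the flow above as a simplified decision procedure. It is a conceptual illustration under stated assumptions, not how SAS Workload Orchestrator is implemented. In particular, it treats a node as available only when it has no running job, whereas the real availability rules are richer (see the documentation referenced above). The mapping of the default context to the batch-small-queue comes from this article. The other context names and all host type names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostType:
    name: str
    node_type: str  # wlm/nodeType label value this host type selects on
    autoscale: bool


@dataclass
class Node:
    name: str
    node_type: str
    busy: bool = False  # simplification: at most one job per node


# context -> queue ("default" -> batch-small-queue is from the article;
# the other context names are hypothetical).
CONTEXT_TO_QUEUE = {
    "default": "batch-small-queue",
    "batch-medium-context": "batch-medium-queue",
    "batch-large-context": "batch-large-queue",
}

# queue -> host type (host type names are hypothetical).
QUEUE_TO_HOST_TYPE = {
    "batch-small-queue": HostType("batch-small-host", "batchsmall", autoscale=True),
    "batch-medium-queue": HostType("batch-medium-host", "batchmed", autoscale=True),
    "batch-large-queue": HostType("batch-large-host", "batchlarge", autoscale=True),
}


def dispatch(context, nodes):
    """Return ("run", node_name), ("scale-up", node_type) or ("wait", None)."""
    host_type = QUEUE_TO_HOST_TYPE[CONTEXT_TO_QUEUE[context]]
    # 1. Look for an idle node whose label matches the host type.
    for node in nodes:
        if node.node_type == host_type.node_type and not node.busy:
            node.busy = True
            return ("run", node.name)
    # 2. None free: job stays pending; with autoscaling, a new node is requested.
    if host_type.autoscale:
        return ("scale-up", host_type.node_type)
    # 3. No autoscaling: job waits for an existing node to free up.
    return ("wait", None)


if __name__ == "__main__":
    # A warm interactive node plus a batch-small node left over from an earlier job.
    nodes = [Node("node-interactive", "interactive"), Node("node-small", "batchsmall")]
    for ctx in ("default", "batch-medium-context", "batch-large-context"):
        print(ctx, dispatch(ctx, nodes))
```

Run as a script, this reproduces the situation described later in this article. The default-context job runs immediately on the warm batch-small node. The medium and large jobs stay pending while new nodes are requested. The interactive node never picks up batch work because its wlm/nodeType label does not match.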

Experience

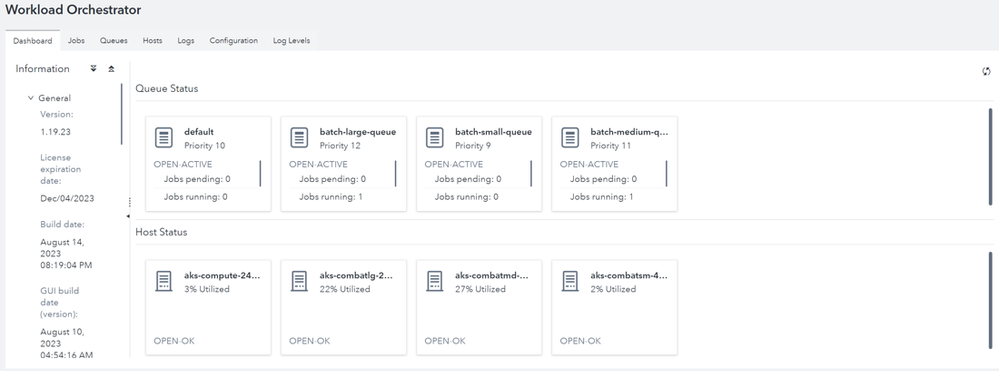

Let’s now watch things in action! The initial state is the one with everybody goofing off (er, let’s just say it’s the start of the day). In that state, SAS Workload Orchestrator represents the system as follows.

Figure 2: Dashboard of SAS Workload Orchestrator

Figure 2 is the dashboard view of Workload Orchestrator. The left-hand side shows the version, license expiration, build date and GUI build date. The top half lists Queue Status. In our case, four queue status tiles represent the configured queues: default, batch-large-queue, batch-small-queue and batch-medium-queue. The queue status shows that all queues are open and active. None is closed, and none has pending jobs. The lower half shows Host Status, with a single host listed as Open and in OK status.

This can also be pictured as follows:

Figure 3: Initial State

“Whoa!” you may exclaim in righteous indignation. “Why is a machine switched on when there’s no work?” Well, that’s the warm node kept alive for interactive users. It’s a small price to pay to have a node ready for users who come back to their desks and start coding. Luckily, thanks to Workload Management, you can keep this lean. Provision only a small machine (few cores and little memory) for this usage pattern to keep cloud costs low.

Now let’s look at what happens when work actually starts.

Figure 4: Screenshot of sas-viya CLI

Figure 4 demonstrates submitting a program with the command-line interface. The RunMe.sas program is submitted using the default (batch) context, which maps to the batch-small-queue. In Figure 5, there is one job pending in the batch-small-queue. Because autoscaling is enabled, the job stays pending until the cluster autoscaler requests a node and that node becomes ready to use. As mentioned above, the cluster autoscaler receives this signal to scale up from Workload Management, based on the configuration provided.

Figure 5: Updated Dashboard View

Figure 5 shows the updated Dashboard, where RunMe.sas is pending in the batch-small-queue. The job stays pending until the new node is available.

Figure 6: Updated Dashboard View

Figure 6 shows a further change in the Dashboard: RunMe.sas is now running in the batch-small-queue, and a new node is up.

Figure 7: Calling README.sas through the Command Line Interface

Figure 7 shows that the job has completed, and Figure 8 shows the current state of the Dashboard. Although the job is done, the new compute node is still active (Open-OK), waiting for other jobs before it scales down. If the node stays idle beyond a certain period (governed by configuration), it is selected for termination and removed from the cluster. The conditions that trigger a scale-down of nodes are described in the documentation on Workload Management and the cluster autoscaler (see References).

Figure 8: View of Workload Orchestrator after program run
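A simplified rule can illustrate the idle-then-terminate behavior described above. Under this rule, a node becomes a scale-down candidate once it has run no jobs for longer than an idle threshold, as long as its node pool stays at or above its minimum size. The Python sketch below assumes a 10-minute threshold, mirroring the upstream Kubernetes cluster-autoscaler default for --scale-down-unneeded-time. The value in your deployment may differ, and the real autoscaler also weighs node utilization and whether pods can be moved. Node names and timestamps are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumption: mirrors the cluster-autoscaler default --scale-down-unneeded-time=10m.
IDLE_THRESHOLD = timedelta(minutes=10)


@dataclass
class NodeState:
    name: str
    pool: str
    running_jobs: int
    idle_since: Optional[datetime]  # None while the node is running work


def scale_down_candidates(nodes, pool_min, now, threshold=IDLE_THRESHOLD):
    """Nodes idle for at least `threshold`, never shrinking a pool below its minimum."""
    remaining = Counter(node.pool for node in nodes)
    idle = [n for n in nodes if n.running_jobs == 0 and n.idle_since is not None]
    candidates = []
    # Consider the longest-idle nodes first.
    for node in sorted(idle, key=lambda n: n.idle_since):
        if now - node.idle_since < threshold:
            continue
        if remaining[node.pool] > pool_min.get(node.pool, 0):
            candidates.append(node.name)
            remaining[node.pool] -= 1
    return candidates


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0)
    pool_min = {"Compute": 1, "Combatsm": 0, "Combatmd": 0, "Combatlg": 0}  # Table 1
    nodes = [
        NodeState("node-interactive", "Compute", 0, now - timedelta(hours=1)),
        NodeState("node-small", "Combatsm", 0, now - timedelta(minutes=15)),
        NodeState("node-med", "Combatmd", 0, now - timedelta(minutes=5)),
        NodeState("node-large", "Combatlg", 1, None),
    ]
    print(scale_down_candidates(nodes, pool_min, now))  # ['node-small']
```

The warm interactive node is never selected, because removing it would take the Compute pool below its minimum of 1.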

Now it’s time to really have fun. In Figure 7, jobs are submitted to the batch-large-queue and the batch-medium-queue. A third job goes through the default context to the batch-small-queue.

Figure 9: View Queues tab

The Queues tab shows 1 job running in the batch-small-queue, 1 job pending in the batch-large-queue and 1 job pending in the batch-medium-queue. Why are they pending? Recall from Figure 8 that we had already run a job from the batch-small-queue, so a node was up and waiting for more requests. Now we are waiting for nodes for the batch-medium and batch-large host types to start and become ready. Once a node is ready, its host shows Open-OK.

Figure 10: New WLO view

Figure 10 shows the dashboard view again. Focusing on Host Status, we can see the interactive host waiting for interactive jobs. The host associated with the batch-small-queue is active because a job is running on it. Figure 11 shows another host that has scaled up to run the job from the batch-medium-queue. Figure 12 shows the batch-large-queue running a job, with its associated host Open and OK.

Figure 11: Dashboard View with batch-medium-host available

Figure 12: Dashboard View with batch-large-host available

Figure 12 shows the state in which all available Compute node types (as detailed in the Infrastructure section) are in use. As workloads increase with business needs, the extent to which each node type is used will vary. This highlights the ability to differentiate resources according to the needs of the workload. Pictorially, Figure 12 can also be represented as follows:

Figure 13: Workload Management in a busy state

Figure 14: Hosts tab

Figure 14 shows the Hosts tab. It presents the same information as the Dashboard, in a different view.

Once all running jobs have completed, the batch hosts scale down. Only the interactive host remains, waiting for interactive jobs (Figure 15).

Figure 15: WLO rests

In summary

As SAS Viya’s move to a cloud-based architecture shows, modernizing analytics platforms means focusing on cost and efficiency. Workload Management, through its recent autoscaling capabilities and other features, facilitates the following:

- Reduced idle capacity

- Differentiated & right-sized resources per workload

- Automated decision making on resources, triggered by user activity

- Reduced pending jobs and higher queue utilization

- Centralized administrative activity and interfaces (less overlap between Kubernetes & SAS Viya administration control)

Drop us an email with any additional questions.

References

- About Azure Virtual Machines: https://learn.microsoft.com/en-us/azure/virtual-machines/

- SAS Ecosystem Diagnostics: https://communities.sas.com/t5/Ask-the-Expert/Why-Do-I-Need-SAS-Enterprise-Session-Monitor-and-Ecosy...

- Documentation related to Workload Management and cluster autoscaler: https://go.documentation.sas.com/doc/en/sasadmincdc/default/wrkldmgmt/n1s5vpyfr4sq3zn1i1dp1aotpzka.h...
