Re: compute 95%CI of r square in proc reg
Hi everyone!

I'm running a weighted linear regression with the following code:

proc reg data=mydata ;

weight variance;

model sur_estim_y= sur_estim_x;

run;

I want to obtain the 95% CI of the R-square estimate (or the standard deviation of the R-square estimate).

In the manual I saw how to compute 95% CIs for the parameter estimates, but not for the R-square.

Does anyone know how to do it?

Thanks a lot

This is what I found: https://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=&cad=rja&uact=8&ved=2ahUKEwjngf_OgKT9A...

R-squared is simply the square of the correlation coefficient (for a regression with a single regressor), which is what this paper gets confidence intervals for.

Paige Miller

Thanks a lot!

I used proc corr and obtained the same R-square as from proc reg (correlation coefficient ** 2).

However, I'm not sure how to use the standard error of the correlation coefficient to compute a 95% CI for the R-square estimate.

You obtain the confidence limits for the correlation coefficient r as shown in the article. Then you square them to get the confidence limits for R-squared.

Paige Miller
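
For reference, the textbook Fisher z interval behind this advice can be written out as below. This is a sketch of the unadjusted large-sample form, where $n$ (the number of observations) and $z_{0.975} \approx 1.96$ are the only symbols not taken from the thread. PROC CORR's FISHER option applies a bias adjustment by default, so its limits may differ slightly from these. Squaring the limits is only straightforward when both limits for $r$ are non-negative.

```latex
\begin{aligned}
z &= \operatorname{artanh}(r) = \tfrac{1}{2}\ln\frac{1+r}{1-r},
  \qquad \operatorname{SE}(z) \approx \frac{1}{\sqrt{n-3}} \\
r_L &= \tanh\!\left(z - \frac{z_{0.975}}{\sqrt{n-3}}\right),
  \qquad
r_U = \tanh\!\left(z + \frac{z_{0.975}}{\sqrt{n-3}}\right) \\
\text{CI}_{95\%}(R^2) &\approx \left[\, r_L^2,\; r_U^2 \,\right]
  \qquad \text{when } 0 \le r_L \le r_U
\end{aligned}
```

Because $\tanh$ is nonlinear, the interval for $r$ (and hence for $R^2$) is generally not symmetric around the point estimate.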

- Mark as New

- Bookmark

- Subscribe

- Mute

- RSS Feed

- Permalink

- Report Inappropriate Content

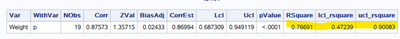

Yes. R-square is just the square of the correlation between Y and Yhat (the predicted value of Y).

You could use the FISHER option of PROC CORR to get its CI.

proc reg data=sashelp.class ;

weight age;

model weight= height;

output out=want predicted=p;

quit;

ods output FisherPearsonCorr=FisherPearsonCorr;

proc corr data=want outp=outp fisher;

var weight;

with p;

weight age;

run;

data FisherPearsonCorr;

set FisherPearsonCorr;

RSquare=Corr**2;

lcl_rsquare=lcl**2;

ucl_rsquare=ucl**2;

run;

proc print noobs;run;
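
One edge case worth guarding against: if the lower confidence limit for the correlation is negative, squaring it directly gives a misleading lower limit for R-square. The following is a minimal sketch of a more defensive DATA step, assuming the FisherPearsonCorr data set and its Corr, lcl and ucl columns from the code above. The output data set name rsq_ci is made up for illustration.

```sas
/* Sketch: square the Fisher limits for r, handling an interval that spans 0. */
/* Assumes FisherPearsonCorr (with Corr, lcl, ucl) was created as shown above. */
data rsq_ci;                      /* rsq_ci is a hypothetical output name */
  set FisherPearsonCorr;
  RSquare = Corr**2;
  if lcl < 0 and ucl > 0 then do;
    /* Interval for r contains 0, so the smallest possible r**2 is 0 */
    lcl_rsquare = 0;
    ucl_rsquare = max(lcl**2, ucl**2);
  end;
  else do;
    /* Both limits have the same sign: squaring preserves the ordering */
    /* only after sorting, so take min and max explicitly              */
    lcl_rsquare = min(lcl**2, ucl**2);
    ucl_rsquare = max(lcl**2, ucl**2);
  end;
run;

proc print data=rsq_ci noobs;
run;
```

When the correlation between Y and its predicted value is comfortably above zero, this gives the same result as squaring the two limits directly.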

I like the idea of calculating a confidence interval via the correlation coefficient, as Ksharp explained. However, after trying it out on a concrete example, I find that it does not agree with another method, which calculates the CI from a standard error for the R2 estimate, as explained here (for example):

https://agleontyev.netlify.app/post/2019-09-05-calculating-r-squared-confidence-intervals/

Notably, this method results in a symmetric CI around the R2 estimate, whereas the method via the correlation coefficient does not yield a symmetric CI. So I am a bit confused as to which method should take precedence.

If anyone can shed light on this it would be greatly appreciated.

KR,

Joakim

Something else that might be of importance with the Proc Corr approach is that weights are treated slightly differently than in Proc Reg.

In Proc Reg:

> Values of the weight variable must be nonnegative. If an observation’s weight is zero, the observation is deleted from the analysis. If a weight is negative or missing, it is set to zero, and the observation is excluded from the analysis.

In Proc Corr:

> The observations with missing weights are excluded from the analysis. By default, for observations with nonpositive weights, weights are set to zero and the observations are included in the analysis. You can use the EXCLNPWGT option to exclude observations with negative or zero weights from the analysis.

If you have non-positive weights, it looks like you need the EXCLNPWGT option to match the R-square from Reg.
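
As a sketch of what that might look like, the PROC CORR step from Ksharp's example can be rerun with EXCLNPWGT added. The data set want, the predicted value p, and the weight variable age are carried over from that example. Whether this reproduces the PROC REG R-square for your data is something to check rather than assume.

```sas
/* Sketch: exclude observations with zero or negative weights, */
/* to mirror how PROC REG drops them from the analysis.        */
ods output FisherPearsonCorr=FisherPearsonCorr;

proc corr data=want fisher exclnpwgt;
  var weight;      /* observed response                          */
  with p;          /* predicted value from the PROC REG OUTPUT   */
  weight age;      /* same weight variable as in the regression  */
run;
```

A quick sanity check is to compare Corr**2 from this step with the R-square reported by PROC REG before using the confidence limits.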

Sorry. I have no idea about it.

If you want a symmetric CI, you could try the option fisher(BIASADJ=no), but that still does not give an exactly symmetric CI.

proc reg data=sashelp.heart(obs=100) ;

weight ageatstart;

model weight= height Diastolic Systolic;

output out=want predicted=p;

quit;

ods output FisherPearsonCorr=FisherPearsonCorr;

proc corr data=want outp=outp fisher(BIASADJ= no);

var weight;

with p;

weight ageatstart;

run;

data FisherPearsonCorr;

set FisherPearsonCorr;

RSquare=Corr**2;

lcl_rsquare=lcl**2;

ucl_rsquare=ucl**2;

_lcl2=lcl_rsquare-RSquare;

_ucl2=ucl_rsquare-RSquare;

run;

proc print noobs;run;

Or you could try the bootstrap method to get a CI for R-square.
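
A minimal sketch of the bootstrap idea, using the sashelp.class model from the earlier example: resample the rows with replacement, refit the regression in each resample, and take percentiles of the resulting R-square values. The data set names boot, est and boot_ci, the seed, the 1000 replicates, and the prefix rsq_p are all arbitrary choices for illustration. This is a simple percentile interval and not necessarily the best bootstrap variant for a small sample.

```sas
/* Step 1: draw 1000 bootstrap resamples (with replacement, same size as the data) */
proc surveyselect data=sashelp.class out=boot seed=12345
     method=urs samprate=1 outhits reps=1000 noprint;
run;

/* Step 2: refit the weighted regression in each resample;          */
/* the RSQUARE option writes the model R-square to OUTEST= as _RSQ_ */
proc reg data=boot outest=est rsquare noprint;
  by replicate;
  weight age;
  model weight = height;
run;
quit;

/* Step 3: the 2.5th and 97.5th percentiles of _RSQ_ give a 95% percentile interval */
proc univariate data=est noprint;
  var _RSQ_;
  output out=boot_ci pctlpts=2.5 97.5 pctlpre=rsq_p;
run;

proc print data=boot_ci noobs;
run;
```

The interval produced this way does not have to be symmetric around the sample R-square, and it stays inside [0, 1] by construction.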

Maybe @Rick_SAS or @StatDave knows something that would help.

> this method will result in a symmetric CI around the R2 estimate, whereas the method via the correlation coefficient will not yield a symmetric CI. So I am a bit confused as to what method should take precedence.

Note that R-squared is always in the interval [0, 1], so you would not expect a symmetric CI for the R-squared statistic. The true sampling distribution of R-squared is not symmetric. The formula you quote (in Cohen's book, but actually from Olkin & Finn, 1995, which I have not read) is a large-sample asymptotic approximation that assumes symmetry for the R-squared distribution. I would not use it unless the sample R-squared is far from 0 and 1, and the sample size is large.

For the case of one regressor, you can use the connection between the regression R-squared value and the (squared) correlation coefficient. I think KSharp has the right idea.
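
To make the contrast concrete, the large-sample approximation discussed above is commonly quoted in the following form, with $n$ the sample size and $k$ the number of regressors. This is written from memory of how it is usually presented, so check it against the cited reference before relying on it.

```latex
\operatorname{SE}\!\left(R^2\right) \approx
\sqrt{\frac{4R^2\,(1-R^2)^2\,(n-k-1)^2}{(n^2-1)(n+3)}},
\qquad
\text{CI}_{95\%}(R^2) \approx R^2 \pm 1.96\,\operatorname{SE}\!\left(R^2\right)
```

The interval is symmetric by construction, which is why its limits can fall below 0 or above 1 when the sample R-square is near either boundary or the sample is small.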

Thank you Rick for a clear and logical explanation. It makes sense to me.

And thanks Ksharp for a neat solution to the problem.

KR,

Joakim
