
Using generic ephemeral volumes for SASWORK storage on Azure managed Kubernetes (AKS)


This blog can be seen as an “extension” to my overview describing common options for SASWORK storage on Azure AKS (see here). While the other text compares various storage options, I’d like to provide more details about a specific new Kubernetes API called “generic ephemeral volumes” in this blog.

Generic ephemeral volumes became generally available in Kubernetes release 1.23. As the name suggests, the lifetime of these volumes is coupled to the lifetime of the pod which requests them. As the Kubernetes documentation puts it, a generic ephemeral volume provides a “per-pod directory for scratch data”, and for that reason it is a prime candidate for SASWORK and CAS disk cache storage. If you are wondering why you should bother about this at all, please refer to the blog I mentioned above. As a short summary: the default configuration for SASWORK (emptyDir) will not meet the requirements of any serious workload, and the alternative hostPath configuration (albeit providing sufficient performance) is often objected to by Kubernetes administrators for security reasons.

As we will see in this blog, generic ephemeral volumes provide a good alternative to both options, but some caution is advised to make sure that the I/O performance meets your expectations. While the Kubernetes API defines how generic ephemeral volumes can be requested and what their lifecycle looks like, it is up to the specific CSI provisioners how the underlying storage is implemented. This blog describes how generic ephemeral volumes can be used in the managed Kubernetes service on Azure (also known as “AKS”). The most straightforward implementation of this API in AKS can be found in provisioners based on the CSI driver named disk.csi.azure.com. The StorageClass managed-csi-premium, which is provided by default with each AKS cluster, is a good example of this. All these provisioners make use of Azure managed disks, which are attached to and detached from the Kubernetes worker nodes as needed.

Introduction to generic ephemeral volumes

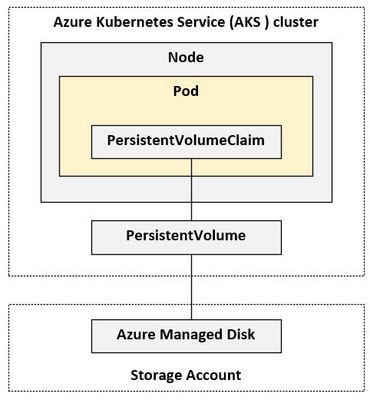

The basic functionality of generic ephemeral volumes is simple to explain. A pod can request a volume by adding a volumeClaimTemplate to its manifest. The template contains all relevant information about the requested storage, such as the StorageClass (which determines the CSI driver to use), the access mode(s) and the volume size. If you pick one of the default storage classes on AKS which are backed by the Azure Disk service, the CSI driver will create a new disk, attach it to the Kubernetes host and mount it into the requesting pod. Once the pod is deleted, the disk is detached and discarded. Take a look at the picture above for a better understanding.
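The lifecycle coupling can be sketched in a few lines of Python. This is a minimal model using plain dicts (no Kubernetes client library), assuming the behavior described in the Kubernetes documentation for generic ephemeral volumes: for each such volume, Kubernetes creates a PersistentVolumeClaim named `<pod name>-<volume name>`, copies the labels and the spec from the template, and makes the pod the owner of the claim so that deleting the pod also deletes the claim. The function name `ephemeral_pvc_for` and the sample values are hypothetical.

```python
import copy
from typing import Any


def ephemeral_pvc_for(pod: dict[str, Any], volume: dict[str, Any]) -> dict[str, Any]:
    """Sketch of the PVC derived from one generic ephemeral volume of a pod."""
    template = volume["ephemeral"]["volumeClaimTemplate"]
    pod_meta = pod["metadata"]
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {
            # Deterministic claim name: <pod name>-<volume name>
            "name": f"{pod_meta['name']}-{volume['name']}",
            "namespace": pod_meta["namespace"],
            # Labels from the template's metadata are copied onto the claim
            "labels": dict(template.get("metadata", {}).get("labels", {})),
            # The pod owns the claim: deleting the pod lets garbage collection delete the PVC
            "ownerReferences": [{
                "apiVersion": "v1",
                "kind": "Pod",
                "name": pod_meta["name"],
                "uid": pod_meta["uid"],
                "controller": True,
            }],
        },
        # The claim spec (StorageClass, access modes, size) is taken over from the template
        "spec": copy.deepcopy(template["spec"]),
    }


# Hypothetical sample pod and volume
pod = {"metadata": {"name": "sas-launcher-demo", "namespace": "viya", "uid": "1234"}}
volume = {
    "name": "viya",
    "ephemeral": {"volumeClaimTemplate": {"spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "managed-csi-premium",
        "resources": {"requests": {"storage": "128Gi"}},
    }}},
}
print(ephemeral_pvc_for(pod, volume)["metadata"]["name"])  # → sas-launcher-demo-viya
```

With reclaimPolicy Delete, which the AKS default storage classes use, removing the claim also removes the underlying managed disk.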

This seems simple enough. However, it gets a little more complicated if we want to use this approach for SASWORK, because a SAS compute pod is based on a PodTemplate, and these PodTemplates are dynamically built using the kustomize tool.

Using generic ephemeral volumes for SASWORK

It might be easier to work backwards and start by looking at the resulting manifest which we want to create. Here’s a super-simplified PodTemplate describing what a SAS compute pod should look like:

```yaml
apiVersion: v1
kind: PodTemplate
metadata:
  name: sas-compute-job-config
template:
  spec:
    containers:
    - name: sas-programming-environment
      volumeMounts:
      - mountPath: /opt/sas/viya/config/var
        name: viya
    volumes:
    - name: viya
      ephemeral:
        volumeClaimTemplate:
          spec:
            accessModes: [ "ReadWriteOnce" ]
            storageClassName: "managed-csi-premium"
            resources:
              requests:
                storage: 128Gi
```

Note the volumes section and the use of the “ephemeral” keyword, which tells Kubernetes that a generic ephemeral volume is requested. This example uses a default StorageClass on AKS (managed-csi-premium), which provisions Premium SSD disks.

Secondly, note the name of the volume (“viya”) and the mount path which is used inside the pod. By default, a SAS session started in SAS Viya on Kubernetes creates its WORK storage in a folder below /opt/sas/viya/config/var. Submit the following SAS commands in a SAS Studio session to see where your SASWORK can be found:

```sas
%let work_path=%sysfunc(pathname(work));
%put "SASWORK: &work_path";
```

```
"SASWORK: /opt/sas/viya/config/var/tmp/compsrv/default/932f131e-...-f0bcce9ded4f/SAS_work1C5C000000B9_sas-launcher-9dfdf749-...-r4ltl"
```

The name of the volume configuration used here is not arbitrary: it turns out that the SAS developers have created a kind of “plugin” mechanism. We can plug in any storage configuration for SASWORK we want (be it emptyDir, hostPath or a generic ephemeral volume) – as long as our configuration uses the name “viya” it will work without problems.

The SAS deployment assets bundle contains a suitable patch which you can easily modify to define your desired storage configuration (check the README at $deploy/sas-bases/examples/sas-programming-environment/storage for a detailed explanation). Here’s what the kustomize patch should look like in order to generate a PodTemplate which matches the one we just saw above:

```yaml
apiVersion: v1
kind: PodTemplate
metadata:
  name: change-viya-volume-storage-class
template:
  spec:
    volumes:
    - $patch: delete
      name: viya
    - name: viya
      ephemeral:
        volumeClaimTemplate:
          metadata:
            labels:
              type: ephemeral-saswork-volume
          spec:
            accessModes: [ "ReadWriteOnce" ]
            storageClassName: "managed-csi-premium"
            resources:
              requests:
                storage: 128Gi
```

In case you’re wondering about the line “$patch: delete”: this is a directive used by Kubernetes strategic merge patches which removes the existing list entry with the same name (here: the “viya” volume) before the new configuration is added. After you’ve created this file (e.g. in the site-config folder), it needs to be added to the kustomization.yaml like this:

```yaml
patches:
- path: site-config/change-viya-volume-storage-class.yaml
  target:
    kind: PodTemplate
    labelSelector: "sas.com/template-intent=sas-launcher"
```

Note how this patch is applied to all PodTemplates used by the launcher service by setting the labelSelector attribute.
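To make the two mechanisms in this step more concrete, here is a much-simplified Python model of what kustomize does: select PodTemplates with an equality-based label selector, then merge the `volumes` list keyed by `name` while honoring the `$patch: delete` directive. This is not kustomize’s implementation. In particular, same-name entries are merged here with a plain shallow field merge (no `retainKeys` handling), which is an assumption of the model. The function names and sample data are hypothetical.

```python
from typing import Any

Volume = dict[str, Any]


def matches_selector(labels: dict[str, str], selector: str) -> bool:
    """Equality-based selector ('k=v', 'k==v', 'k!=v', bare 'k'); set-based syntax is not handled."""
    for term in (t.strip() for t in selector.split(",")):
        if not term:
            continue
        if "!=" in term:
            key, value = (s.strip() for s in term.split("!=", 1))
            if labels.get(key) == value:
                return False
        elif "=" in term:
            key, value = (s.strip() for s in term.replace("==", "=", 1).split("=", 1))
            if labels.get(key) != value:
                return False
        elif term not in labels:  # bare key: the label must exist
            return False
    return True


def merge_volumes(base: list[Volume], patch: list[Volume]) -> list[Volume]:
    """Simplified strategic merge of a volumes list, using 'name' as the merge key."""
    result = [dict(v) for v in base]
    for entry in patch:
        if entry.get("$patch") == "delete":
            # Directive: remove the existing entry with this name entirely
            result = [v for v in result if v["name"] != entry["name"]]
            continue
        for i, current in enumerate(result):
            if current["name"] == entry["name"]:
                # Shallow field merge: keys missing from the patch entry survive
                result[i] = {**current, **entry}
                break
        else:
            result.append(dict(entry))
    return result


def apply_patch(templates: list[dict[str, Any]], selector: str, patch: list[Volume]) -> None:
    """Patch (in place) the volumes of every PodTemplate whose labels match the selector."""
    for tmpl in templates:
        if matches_selector(tmpl["metadata"].get("labels", {}), selector):
            spec = tmpl["template"]["spec"]
            spec["volumes"] = merge_volumes(spec.get("volumes", []), patch)
```

In this model, leaving out the `$patch: delete` entry produces a `viya` volume carrying both an `emptyDir` and an `ephemeral` source, which Kubernetes would reject because a volume may only specify one source. Deleting the entry first avoids depending on how the merge treats leftover fields.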

Done! That’s it? Well, let’s check …

Let’s assume you’ve configured SASWORK to use the generic ephemeral volumes and have deployed your SAS Viya environment on AKS. How can you confirm that the configuration is working as you’d expect? There are several checkpoints to be reviewed:

- The application (SAS): nothing has changed. The SAS code snippet shown above still shows that the same folder is used for SASWORK (/opt/sas/viya/config/var). Not surprising, actually …

- The pod: here a change is visible. The new volume shows up if you exec into the sas-programming-environment container:

```
$ df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay         124G   18G  106G  15% /
/dev/sdc        125G   13G  113G  11% /opt/sas/viya/config/var
```

(output abbreviated) /dev/sdc is the new ephemeral managed disk.

- Finally, the Kubernetes worker node: the new disk will show up here as well. Run this command from a node shell:

```
$ df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/sda1       124G   18G  106G  15% /
/dev/sdb1       464G  156K  440G   1% /mnt
/dev/sdc        125G  2.8G  123G   3% /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-dc2...123/globalmount
```

(output abbreviated) Again, /dev/sdc represents the new ephemeral managed disk. As you can see, on the OS layer the disk is mounted to a folder managed by the kubelet, the Kubernetes node agent (below /var/lib/kubelet), which then bind-mounts it into the pod. As a side note: /dev/sda1 represents the node’s OS disk, which is where your SASWORK would be located if you had stayed with the default emptyDir configuration. The console output above was taken from an E16ds VM instance, which means that the machine comes with an additional local temporary disk by default. This disk is shown as /dev/sdb1. You would want to use this second disk if you configured SASWORK to use a hostPath configuration.
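The checks above can also be scripted. Below is a minimal Python sketch that parses captured `df -h` output (from the pod or from a node shell) and reports which device backs a given mount point and which devices are mounted below the kubelet’s CSI plugin directory. It assumes one row per file system with the mount point in the last column; `df -hP` requests the POSIX output format, which guarantees that. The function names are hypothetical.

```python
from typing import Iterator, Optional

# Assumption: disks staged by the kubelet for CSI volumes are mounted below this prefix
KUBELET_CSI_PREFIX = "/var/lib/kubelet/plugins/kubernetes.io/csi/"


def _rows(df_output: str) -> Iterator[tuple[str, str]]:
    """Yield (file system, mount point) pairs from df output, skipping the header line."""
    for line in df_output.strip().splitlines()[1:]:
        # Six columns: Filesystem Size Used Avail Use% Mounted-on (mount point kept whole)
        parts = line.strip().split(None, 5)
        if len(parts) == 6:
            yield parts[0], parts[5]


def device_for_mount(df_output: str, mount_point: str) -> Optional[str]:
    """Return the device mounted at mount_point, or None if it is not listed."""
    for device, mounted_on in _rows(df_output):
        if mounted_on == mount_point:
            return device
    return None


def csi_disk_mounts(df_output: str) -> list[str]:
    """Return all devices mounted below the kubelet's CSI plugin directory."""
    return [device for device, mounted_on in _rows(df_output)
            if mounted_on.startswith(KUBELET_CSI_PREFIX)]
```

Fed with the pod output shown above, `device_for_mount(output, "/opt/sas/viya/config/var")` returns `/dev/sdc`; fed with the node output, `csi_disk_mounts` lists one device per attached ephemeral disk.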

Quiz question: what would happen if you launched a second SAS session? Obviously, the only difference will be visible on the node level. Here you would see yet another disk appearing (probably /dev/sdd), and this is the recurring pattern for every new SAS session, that is, until you reach the maximum number of data disks which can be attached to the virtual machine (this limit depends on the VM size; it is 32 for the E16ds size used here). Don’t worry too much about this limit: keep in mind that these disks are discarded when the SAS sessions are closed.

Performance considerations

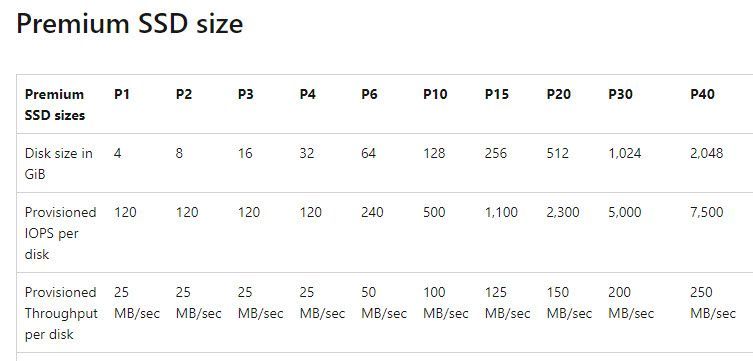

The example I’ve been using so far relies on the managed-csi-premium storage class, which provisions Premium SSD disks. How do these disks compare against alternative configurations, most notably against a hostPath configuration? Unfortunately, this question is difficult to answer. Remember that this storage driver leverages Azure managed disks, whose I/O performance depends on the size of the disk. The screenshot above is an excerpt taken from the Azure documentation.

For Premium SSD disks, the performance tier follows from the disk size: the requested size is rounded up to the next tier. In the example above, 128 GiB were requested for the SASWORK area, which results in a P10 disk being attached to the worker node. Sadly, the P10’s I/O performance (500 IOPS and 100 MB/s throughput) is quite disappointing … What’s the solution for this problem? Requesting tons of gigabytes for SASWORK just to get a more powerful disk tier? Well, this is one option. Another option is to look for disks with better performance. Enter Ultra disks.
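The size-to-tier rule can be illustrated with a small Python lookup. The table holds baseline (non-burst) Premium SSD tiers with their provisioned IOPS and throughput as published in the Azure documentation at the time of writing; treat the numbers as assumptions and verify them against the current table. `tier_for_size` rounds a requested size up to the next tier, and `smallest_tier_for` answers the reverse question of how large a disk you would have to request to reach a performance target. Both names are hypothetical.

```python
from typing import NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    size_gib: int  # provisioned size of the tier
    iops: int      # baseline provisioned IOPS
    mbps: int      # baseline provisioned throughput in MB/s


# Assumed values: Premium SSD tiers as listed in the Azure documentation at the time of writing
PREMIUM_SSD_TIERS = [
    Tier("P1", 4, 120, 25), Tier("P2", 8, 120, 25), Tier("P3", 16, 120, 25),
    Tier("P4", 32, 120, 25), Tier("P6", 64, 240, 50), Tier("P10", 128, 500, 100),
    Tier("P15", 256, 1100, 125), Tier("P20", 512, 2300, 150), Tier("P30", 1024, 5000, 200),
    Tier("P40", 2048, 7500, 250), Tier("P50", 4096, 7500, 250), Tier("P60", 8192, 16000, 500),
    Tier("P70", 16384, 18000, 750), Tier("P80", 32767, 20000, 900),
]


def tier_for_size(requested_gib: int) -> Tier:
    """Round a requested disk size up to the next Premium SSD tier."""
    for tier in PREMIUM_SSD_TIERS:
        if requested_gib <= tier.size_gib:
            return tier
    raise ValueError(f"{requested_gib} GiB exceeds the largest Premium SSD tier")


def smallest_tier_for(iops: int, mbps: int) -> Optional[Tier]:
    """Return the smallest tier meeting both targets, or None if no Premium SSD tier does."""
    for tier in PREMIUM_SSD_TIERS:
        if tier.iops >= iops and tier.mbps >= mbps:
            return tier
    return None


print(tier_for_size(128))                       # → Tier(name='P10', size_gib=128, iops=500, mbps=100)
print(smallest_tier_for(iops=5000, mbps=200))   # → Tier(name='P30', size_gib=1024, iops=5000, mbps=200)
```

Under these assumed numbers, reaching 5,000 IOPS would mean requesting 1 TiB of SASWORK per SAS session, which shows why inflating the requested size quickly becomes wasteful.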

Quoting the Azure documentation, “Azure ultra disks are the highest-performing storage option for Azure virtual machines”. That sounds appealing, doesn’t it? Let’s see what needs to be done.

First of all, Ultra disk support is only available for selected Azure VM types and in selected Azure regions. This page lists the current availability. Luckily, the E*-series VMs, which we prefer to use for the SAS Viya compute and CAS nodepools, belong to the machine types which are eligible for Ultra disk support, and the major regions will be fine to use as well.

Secondly, AKS nodepools do not offer Ultra disk support out of the box. There’s a flag named --enable-ultra-ssd which needs to be set when building the nodepool (or cluster):

```shell
# Create a cluster with Ultra_SSD support
$ az aks create -g myrg -n myaks (...) --enable-ultra-ssd

# Or: add a new nodepool with Ultra_SSD support to an existing cluster
$ az aks nodepool add --cluster-name myaks2 (...) --enable-ultra-ssd
```

Note that this flag is only available when submitting the commands using the Azure CLI (not the portal).

Finally, you’ll notice that there is no StorageClass available which supports Ultra disks out of the box. So we’ll have to create one on our own:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
    kubernetes.io/cluster-service: "true"
  name: sas-ephemeral-ultrassd
parameters:
  skuname: "UltraSSD_LRS"
  cachingMode: "None"
  DiskIOPSReadWrite: "30000"
  DiskMBpsReadWrite: "2000"
allowVolumeExpansion: true
provisioner: disk.csi.azure.com
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
```

Note the parameters section. The skuname parameter makes sure that we’ll be requesting Ultra disks, while DiskIOPSReadWrite and DiskMBpsReadWrite are performance settings specifying the requested IOPS and throughput (in MB/s). These values are configurable within certain limits; check the documentation here and here for the caps and even more performance flags. The cachingMode is set to None because Ultra disks do not support host caching.

Closing remarks

This blog covered some details about a rather new storage option for SASWORK (and CAS disk cache). Generic ephemeral volumes have been generally available since Kubernetes 1.23 and provide an interesting alternative to the often objected-to hostPath configuration. Configuring ephemeral volumes is not a very complicated task per se, because the SAS developers have prepared most of the groundwork so that it’s easy to “plug in” the right storage configuration. However, keeping an eye on the performance is still of prime importance. In my tests, only Ultra SSD disks delivered performance comparable to locally attached disks (hostPath). Preparing the AKS infrastructure for Ultra disk support is a slightly more “involved” task, but still a manageable one that is worth the effort.

I hope that the blog has helped you a bit when planning your Kubernetes infrastructure for SAS Viya on the Azure cloud. Let me know what you think and feel free to ask any question you might have.


Thank you very much for this article; so far there have only been blogs about CAS disk cache, but not about SASWORK. Very important point: in case of ephemeral storage, this number is per session. This was not clear without your article!

If one is going to have many users of SAS Viya, mainly many report viewers, I think it can be advisable to limit the number of SAS Studio, SAS Model Studio and batch job users. I do not know if I forgot something, but this is what I have learnt from your blog.

In our environment we have successfully implemented ephemeral storage for SAS Work, also 100 GB per session. I am not on Azure; I think that we have one provider of ephemeral storage and just use the "standard" storage class, so I do not think we have the possibility to define Ultra SSD. We will see whether it is fast enough.


As of Stable version 2023.02 the change is done through a transformer instead of the patch mentioned in this post.

For information see Deployment Note:

https://go.documentation.sas.com/doc/en/sasadmincdc/v_039/dplynotes/n0wdkggww6pvi5n1lqk0gvonz0au.htm
