
The Relationship between Factor Analysis and Regression


In this post I will talk about the conceptual basis for exploratory factor analysis (EFA). I’ll be describing regression models related to EFA using matrix algebra. You’d benefit from a working knowledge of matrix algebra, but you won’t necessarily need to follow the mathematics to understand the concepts.

In my last post I talked about the relationship between Pearson correlation coefficients and simple linear regression slope coefficients. Specifically, I explained and demonstrated how correlation coefficients are identical to the simple regression coefficients you get after first standardizing both the Y and the X variables. If you missed that discussion, it might be helpful to read it before continuing here. And if you are interested in learning about exploratory factor analysis in detail, you can look at our course, Multivariate Statistics for Understanding Complex Data, on sas.com.

At first glance, factor analysis might seem unrelated to linear regression. However, a simple EFA example using PROC FACTOR will illustrate how factor analysis can be thought of as a series of linear regressions. I’ll use the Baseball data set from the SASHELP library. You can find documentation for PROC FACTOR in the SAS Help Center under "The FACTOR Procedure."

SAS® PROC FACTOR to Perform Exploratory Factor Analysis

PROC FACTOR is the primary tool in SAS for performing exploratory factor analysis. I'll start with some basic options and a single factor.

- I use the ODS OUTPUT statement to save some of the output tables as SAS® data sets.

- The ODS SELECT statement instructs SAS® which output objects (tables or graphs) to display in the results window of the user interface.

- In the PROC FACTOR statement:

  - The N=1 option requests exactly one factor.

  - The METHOD=ML option requests maximum likelihood parameter estimation.

  - The CORR option requests the display of the correlation matrix of the input variables.

  - The RESIDUALS option requests the display of the residual correlations (explained further on).

- The VAR statement names the variables I am using to discover factors. I am starting with the career statistics for the baseball players, which are the only variables whose names begin with the letter C; the name prefix list c: selects all of them.

- The PATHDIAGRAM statement produces a plot showing the factor solution, including parameter estimates.

  - The DECP=4 option requests that statistics in the plot be displayed to four decimal places.

Note: You can start exploratory factor analysis with a correlation matrix instead of the data matrix of observations by variables.

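```sas
ods graphics on;   /* the path diagram is an ODS graphic */

/* Save selected output tables as SAS data sets */
ods output factorpattern=Loadings corr=XCorrs ResCorrUniqueDiag=U;

/* Display only these output objects */
ods select factorpattern pathdiagram corr ResCorrUniqueDiag;

proc factor data=sashelp.baseball
      n=1          /* extract exactly one factor          */
      method=ml    /* maximum likelihood estimation       */
      corr         /* display the correlation matrix      */
      residuals;   /* display the residual correlations   */
   var c:;         /* career statistics: names start with C */
   pathdiagram decp=4;
run;
```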

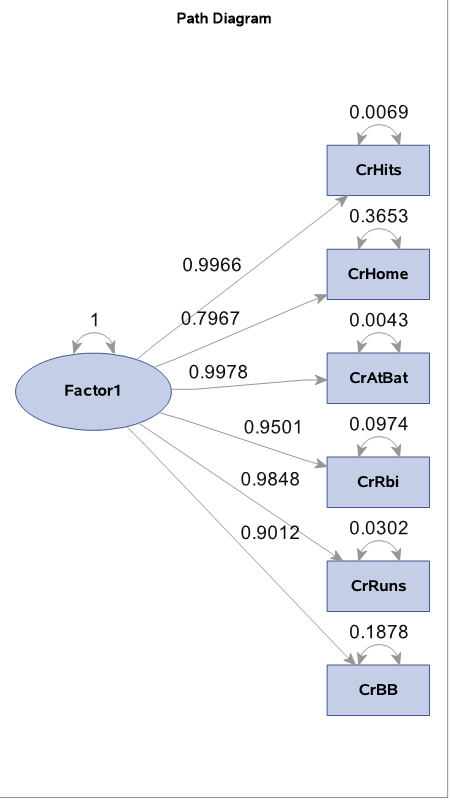

Let me jump to the path diagram in the program output.

The Path Diagram

Baseball statistics factor structure.

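The equation most people recognize as linear regression is $y_i = \beta_0 + \beta_1 x_{1i} + \cdots + \beta_k x_{ki} + \varepsilon_i$. In the equation, $y_i$ is the response variable value for individual $i$, $x_{1i}$ through $x_{ki}$ are the values of the explanatory variables $x_1$ through $x_k$ for individual $i$, $\beta_0$ is the y-intercept, $\beta_1$ through $\beta_k$ are the regression coefficients, and $\varepsilon_i$ is the error associated with individual $i$. The path diagram displays six regression equations, one for each manifest variable, each with Factor1 as its only explanatory variable.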

- The unidirectional arrows show the causal paths of the regression relationships.

- The response variables are the input variables named in the VAR statement of PROC FACTOR. In factor analysis, these measured variables are referred to as manifest variables. The manifest variable names are displayed in rectangles.

- The double-headed arrows show the variance of the factor and the error variances of the manifest variables (referred to as uniquenesses in factor analysis). For example, the equation for Career Walks (CrBB) is CrBB = 0.9012*Factor1 + error, where the variance of the error term (the uniqueness of CrBB) is 0.1878.

"Where are the y-intercepts?" you might ask. The equations are for standardized variables. By default, PROC FACTOR analyzes the correlation matrix, which amounts to standardizing every manifest variable to a mean of zero and a variance of one. We simply presume the factor to be normally distributed with a mean of zero and a variance of one. If you read my previous post, you will recall that when all regression variables are standardized, the y-intercept is zero.

Factors and Latent Variables

At this point, you might be wondering what Factor1 is. A factor in factor analysis represents a latent construct. We use the term latent variable for a variable that is not directly measured but can be inferred indirectly from a group of manifest variables that it causes. The classic example is the concept of “intelligence”. We don’t measure intelligence directly. We might measure it indirectly using any number of intelligence tests. Each test might be composed of items that can be objectively scored. Because we use manifest variables to indicate the presence of latent variables, factor analysts often refer to those manifest variables as indicator variables.

Because we don't actually measure a latent variable, we can set its mean and variance to anything we want. A common practice is to set the mean of a factor to zero and its variance to one. With maximum likelihood estimation, we then also assume that the factor is normally distributed, which makes it a standard normal variable.
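Fixing the factor's mean at zero and its variance at one puts it on the same standardized scale as the manifest variables. The short sketch below is written in Python rather than SAS and uses made-up toy data, not the baseball data. It illustrates the point from my previous post that the path diagram relies on: after both variables in a simple regression are standardized, the least squares intercept is zero and the slope equals the Pearson correlation. The helper names standardize, ols_simple, and pearson_r are hypothetical, written only for this illustration.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

def ols_simple(x, y):
    """Least squares fit of y = b0 + b1*x; returns (b0, b1)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    b1 = sxy / sxx
    return my - b1 * mx, b1

def pearson_r(x, y):
    """Pearson correlation coefficient of x and y."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

# Hypothetical toy data (not from sashelp.baseball)
x = [2.0, 4.0, 5.0, 7.0, 9.0, 12.0]
y = [1.0, 3.5, 3.0, 6.0, 8.5, 10.0]

# Regress standardized y on standardized x
b0, b1 = ols_simple(standardize(x), standardize(y))
print(f"intercept = {b0:.2e}, slope = {b1:.6f}, r = {pearson_r(x, y):.6f}")
```

The intercept prints as zero up to floating-point rounding, and the slope matches the correlation computed from the raw, unstandardized data.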

Another common practice is to name the factor something that represents its apparent latent construct. The naming is usually based on looking at the concept shared by the manifest variables that load highly onto it. In this case, all of the manifest variables have high loadings on Factor1. They all seem to measure career batting statistics. Therefore, I might call this factor "Career Batting".

Hollywood Factor Analysis?

Whenever I think of latent variables and how manifest variables indirectly indicate their presence, I think about the 1990 movie "Ghost", in which the main character, Sam, has been killed. Sam's ghost is trying to communicate with Sam's girlfriend, Molly. Let's think of Sam's ghost as a latent variable. Molly cannot see him or sense him in any way. However, Sam's ghost has learned some tricks, including sliding a penny from the floor up a door. I think of the behavior of the penny as a manifest variable. Eventually, the physical evidence, which Molly can see, convinces her that the ghost does, in fact, exist. The latent variable (the ghost) is the causal agent of the physical behavior (the manifest variables). However, we must start with the manifest variables in order to infer the presence of the latent variable.

So, I guess this is how you ruin a perfectly good classic romantic movie: you interpret it as an exercise in machine learning. I apologize to so many people, my wife among them.

The Table of Manifest Variable Correlations

| Variable | Label | CrAtBat | CrHits | CrHome | CrRuns | CrRbi | CrBB |
|---|---|---|---|---|---|---|---|
| CrAtBat | Career Times at Bat | 1.00000 | 0.99489 | 0.79222 | 0.98069 | 0.94741 | 0.90035 |
| CrHits | Career Hits | 0.99489 | 1.00000 | 0.77573 | 0.98208 | 0.94254 | 0.88452 |
| CrHome | Career Home Runs | 0.79222 | 0.77573 | 1.00000 | 0.82093 | 0.92799 | 0.80619 |
| CrRuns | Career Runs | 0.98069 | 0.98208 | 0.82093 | 1.00000 | 0.94314 | 0.92677 |
| CrRbi | Career RBIs | 0.94741 | 0.94254 | 0.92799 | 0.94314 | 1.00000 | 0.88500 |
| CrBB | Career Walks | 0.90035 | 0.88452 | 0.80619 | 0.92677 | 0.88500 | 1.00000 |

There is a high degree of correlation among these variables. Without at least modest correlations among your variables, exploratory factor analysis would be as fruitless as my backyard peach tree after the birds and squirrels get their beaks and claws on its peaches.

The next table is the Factor Pattern matrix.

The Factor Pattern Matrix

| Variable | Label | Factor1 |
|---|---|---|
| CrAtBat | Career Times at Bat | 0.99782 |
| CrHits | Career Hits | 0.99655 |
| CrHome | Career Home Runs | 0.79667 |
| CrRuns | Career Runs | 0.98476 |
| CrRbi | Career RBIs | 0.95008 |
| CrBB | Career Walks | 0.90125 |

Because this solution has only one factor, the factor pattern matrix also serves as the factor structure matrix. (The two matrices differ only when a solution has two or more correlated factors.) A factor structure matrix contains the simple correlations between the manifest variables and the factors. These loadings are the same as the regression coefficients we saw on the path diagram. I explained why this is true in my previous post: correlations are the same as regression coefficients when all variables are standardized, with a mean of zero and a variance of one.
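To see the "series of regressions" idea from the data-generating side, here is a minimal Python simulation under the assumption that the one-factor model holds exactly. It draws a standard normal factor, builds three hypothetical indicators as loading × factor + error, with error variance equal to one minus the squared loading (three loadings from the table are reused purely as inputs), and then checks that each indicator's correlation with the factor, and its least squares slope on the factor, both come back close to the loading. In real data the factor is never observed, so this check is possible only in a simulation; the seed and sample size are arbitrary choices.

```python
import random

def mean(v):
    return sum(v) / len(v)

def cov(x, y):
    """Population covariance of two equal-length lists."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)

random.seed(2024)          # fixed seed so the run is repeatable
n = 50_000
loadings = {"CrAtBat": 0.99782, "CrHome": 0.79667, "CrBB": 0.90125}

# Latent factor: mean 0, variance 1 (never observed in real data)
factor = [random.gauss(0.0, 1.0) for _ in range(n)]

results = {}
for name, lam in loadings.items():
    error_sd = (1.0 - lam ** 2) ** 0.5   # uniqueness = 1 - lam**2, so Var(y) is about 1
    y = [lam * f + random.gauss(0.0, error_sd) for f in factor]
    r = cov(y, factor) / (cov(y, y) * cov(factor, factor)) ** 0.5
    slope = cov(factor, y) / cov(factor, factor)   # least squares slope of y on the factor
    results[name] = (r, slope)
    print(f"{name}: loading={lam:.4f}  corr={r:.4f}  slope={slope:.4f}")
```

Because every simulated variable has a variance of about one, the correlation and the slope agree with each other as well as with the loading, up to sampling error.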

The final table produced by this program is the Residual Correlations table with uniquenesses on the diagonal.

The Residual Correlations Table (With Uniquenesses on the Diagonal)

| Variable | Label | CrAtBat | CrHits | CrHome | CrRuns | CrRbi | CrBB |
|---|---|---|---|---|---|---|---|
| CrAtBat | Career Times at Bat | 0.00435 | 0.00051 | -0.00271 | -0.00193 | -0.00059 | 0.00106 |
| CrHits | Career Hits | 0.00051 | 0.00688 | -0.01819 | 0.00072 | -0.00426 | -0.01362 |
| CrHome | Career Home Runs | -0.00271 | -0.01819 | 0.36532 | 0.03640 | 0.17109 | 0.08820 |
| CrRuns | Career Runs | -0.00193 | 0.00072 | 0.03640 | 0.03024 | 0.00754 | 0.03926 |
| CrRbi | Career RBIs | -0.00059 | -0.00426 | 0.17109 | 0.00754 | 0.09735 | 0.02875 |
| CrBB | Career Walks | 0.00106 | -0.01362 | 0.08820 | 0.03926 | 0.02875 | 0.18775 |

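If you look closely, the diagonal values in this table match the uniquenesses (the numbers on the double-headed arrows) reported in the path diagram; each one equals one minus the squared loading. Each off-diagonal value is a residual correlation: the observed correlation between two manifest variables minus the correlation implied by the one-factor model, which is the product of their two loadings. For example, for CrAtBat and CrHits, 0.99489 - (0.99782 × 0.99655) ≈ 0.00051.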
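The arithmetic behind this table can be checked directly. The Python sketch below is not part of the SAS program; it copies the loadings and correlations printed in the tables above, forms the one-factor model-implied correlations as the product of each pair of loadings, and subtracts them from the observed correlations. Because the observed matrix has ones on its diagonal, the diagonal of the result is one minus each squared loading, which is the uniqueness. The inputs are rounded to five decimals, so expect agreement with the PROC FACTOR table only to about the fifth decimal place.

```python
names = ["CrAtBat", "CrHits", "CrHome", "CrRuns", "CrRbi", "CrBB"]

# Factor1 loadings, copied from the Factor Pattern table
loadings = [0.99782, 0.99655, 0.79667, 0.98476, 0.95008, 0.90125]

# Observed correlations, copied from the Correlations table
R = [
    [1.00000, 0.99489, 0.79222, 0.98069, 0.94741, 0.90035],
    [0.99489, 1.00000, 0.77573, 0.98208, 0.94254, 0.88452],
    [0.79222, 0.77573, 1.00000, 0.82093, 0.92799, 0.80619],
    [0.98069, 0.98208, 0.82093, 1.00000, 0.94314, 0.92677],
    [0.94741, 0.94254, 0.92799, 0.94314, 1.00000, 0.88500],
    [0.90035, 0.88452, 0.80619, 0.92677, 0.88500, 1.00000],
]

# Residual = observed - model-implied, where the one-factor model
# implies corr(j, k) = loading_j * loading_k for j != k.
p = len(names)
residual = [[R[j][k] - loadings[j] * loadings[k] for k in range(p)]
            for j in range(p)]

for name, row in zip(names, residual):
    print(f"{name:8s}" + "".join(f"{v:10.5f}" for v in row))
```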

Matrix Algebra, Summary, and a Sneak Preview

The matrix form of the regression equations, in both linear regression and EFA, is $Y = XB + E$. Let’s look at what each element of the equation represents in EFA.

In EFA, the Y matrix contains all the measured variables used for factoring (the manifest variables). The B matrix contains all the regression coefficients (the factor loadings). The E matrix contains the errors, whose variances are the uniquenesses. The X matrix contains the factors (the latent variables), which are said to “cause” the manifest variables. This is just a preview of the matrix algebra that I will explain in the next post.
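As a preview of the shapes involved, here is a small Python sketch of Y = XB + E for a one-factor model. Everything in it is an illustrative assumption: four hypothetical individuals, invented factor scores and errors, and three loadings from the factor pattern table arranged as a 1 × 3 B matrix. With n individuals, m factors, and p manifest variables, X is n × m, B is m × p, and E and Y are n × p, so each column of Y is one of the regression equations from the path diagram. The sketch only assembles Y from known pieces; it is not how PROC FACTOR estimates B.

```python
def matmul(A, B):
    """Multiply an (r x s) matrix A by an (s x t) matrix B, both given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matadd(A, B):
    """Elementwise sum of two matrices with the same shape."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

X = [[-1.2], [0.3], [0.8], [0.1]]        # n x m factor scores (hypothetical)
B = [[0.99782, 0.79667, 0.90125]]        # m x p loadings for CrAtBat, CrHome, CrBB
E = [[0.05, -0.40, 0.20],                # n x p errors (hypothetical)
     [-0.02, 0.35, -0.10],
     [0.01, 0.10, 0.30],
     [0.03, -0.25, -0.15]]

Y = matadd(matmul(X, B), E)              # n x p manifest variables
for row in Y:
    print(["%.4f" % v for v in row])
```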

In this post, I have introduced the fundamental concepts of exploratory factor analysis with the aid of linear regression and correlation. I showed a simple example using one factor and described how we can interpret the basic output from PROC FACTOR as a series of regression equations. I have yet to fully explain how the coefficients are estimated. For that, I will need to go into a little more detail about the matrices that I introduced at the end of this post. For those interested in moving to the next level of understanding of exploratory factor analysis, look out for my next post.
