Working with SAS and Hadoop: Part 1 - Base SAS and SAS ACCESS

Is your organization embracing Hadoop technology? Do you need to understand what Hadoop is and figure out how you can use SAS with Hadoop? If so, this three-part article series covers key information to help you start working with Hadoop as a SAS user, gives a brief overview of the SAS technologies available for Hadoop, and points to some of the training courses available for each.

A Quick Hadoop Primer

To begin working with Hadoop, it helps to consider what it has in common with a Microsoft Windows, UNIX, or Linux environment and how it differs. Like those environments, Hadoop has a file system, with various applications that read and write files on that file system and process data in memory. As with other file systems, you can store all kinds of file formats in Hadoop, such as delimited text files, XML files, JSON files, and various binary files designed for specific applications.

Unlike the other environments, though, Hadoop does not run on a single machine. Hadoop consists of software installed on multiple machines that communicate across a network so that they can act as a single unified system. The collection of machines running Hadoop is often referred to as the Hadoop cluster. Any given file you create in Hadoop is distributed: it is split into pieces that are spread across the machines in the cluster, so each machine typically holds only part of the file. When you use applications and run programs to process that distributed data, each machine does part of the work with its share of the data, and data is moved among the machines as needed. Hadoop manages all this automatically so that different parts of the data can be processed simultaneously (parallel processing), which allows you to process large amounts of data in a reasonable amount of time. Because the complexities of distributed storage and parallel processing are handled automatically, programmers and application users are free to keep working in a familiar way: naming input and output files and defining data management and analytical operations with methods and logic similar to those they use in single-machine environments.

Hive is one key Hadoop application that will be of interest to some SAS users. With Hive you can use an SQL-like language to define, manage, and query data in Hadoop. Hive stores its data in the Hadoop distributed file system. Hive is widely adopted by Hadoop users because it lets people use a familiar SQL-based language to manage and query data stored in the Hadoop file system.

SAS and Hadoop

BASE SAS and Hadoop: If you use SAS on Windows, Linux, or UNIX, you may use a DATA step, PROC IMPORT, PROC EXPORT, or other methods to read or write text files on the file system. With the same techniques you can also read or write files in the Hadoop file system (HDFS). Compare the two programs below. The first reads a comma-delimited text file from a Windows file system and writes the output to a fixed-column text file on the same file system. The second program performs the identical read and write operations using files stored in HDFS.
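A minimal sketch of such a pair, not the author's original listings, might look like the following. The file paths, the column layout (id, name, amount), and the sasdemo user are hypothetical. The HDFS version also assumes that the SAS session already knows where to find the Hadoop client JAR and configuration files, for example through the SAS_HADOOP_JAR_PATH and SAS_HADOOP_CONFIG_PATH environment variables.

```sas
/* Program 1: local Windows files (hypothetical paths and columns) */
filename rawin  'C:\data\customers.csv';
filename fixout 'C:\data\customers_fixed.txt';

data _null_;
   infile rawin dsd truncover;          /* DSD: comma-delimited input      */
   input id name :$20. amount;
   file fixout;
   put @1 id 8. @10 name $20. @31 amount 10.2;   /* fixed-column output   */
run;

/* Program 2: the same read and write against HDFS.                       */
/* Only the FILENAME statements change: they use the HADOOP access method. */
/* Assumes the Hadoop JAR and config paths are already set for the session. */
filename rawin  hadoop '/user/sasdemo/customers.csv'       user='sasdemo';
filename fixout hadoop '/user/sasdemo/customers_fixed.txt' user='sasdemo';

data _null_;
   infile rawin dsd truncover;
   input id name :$20. amount;
   file fixout;
   put @1 id 8. @10 name $20. @31 amount 10.2;
run;
```

In this sketch the DATA step itself is unchanged between the two programs; only the file references point somewhere different.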

Very little differs in the program statements when reading and writing HDFS files versus files local to the SAS server. Such read/write operations for files in Hadoop can be a useful technique in certain applications and, as part of BASE SAS, require no extra software licensing. However, these BASE SAS methods have two limitations. First, the data needs to move between Hadoop and SAS because the DATA step executes in SAS. Second, the DATA step does not perform parallel processing. With the large amounts of data in Hadoop, it is often necessary to design applications that allow the data to be processed in parallel within Hadoop. But take heart and read on, as I will describe SAS methods that allow you to do just that.

SAS ACCESS and Hadoop: SAS ACCESS Interface software is available for a number of database management systems (for example, Oracle and Teradata). For each database, the SAS ACCESS technologies provide two methods for SAS users to read and write data in database tables. The first method, SQL pass-through, allows users to embed native database SQL queries in the SAS SQL procedure, send those native SQL statements to the database for execution, and have the results returned to SAS. The second method defines a library (LIBNAME) connection to the database, which allows users to name database tables as SAS datasets in any SAS program step. With this method, SAS automatically generates native SQL in the dialect the database understands to query its tables.

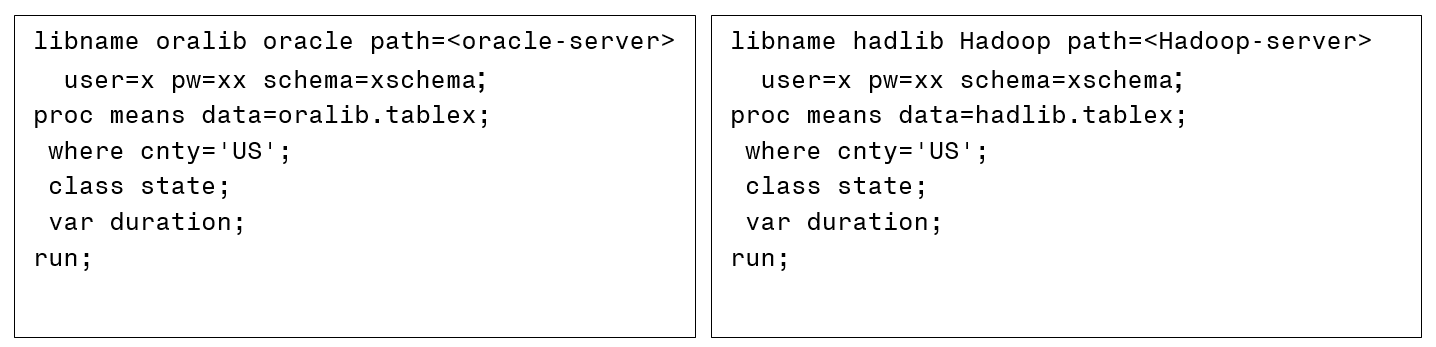

Within Hadoop, Hive is an SQL-based application for managing and querying data, so Hive is the application that the SAS ACCESS Interface to Hadoop interacts with. This lets users apply the same well-established SQL-based SAS ACCESS techniques to data in Hadoop. Compare the two programs below. The first uses SAS ACCESS to Oracle to process an Oracle table. The second uses SAS ACCESS to Hadoop to process a Hive table:
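As an illustrative sketch rather than the author's original listings, such a pair could look like this. The server name, port, credentials, schema, Oracle path alias, and the orders table with its region, product, and amount columns are all hypothetical placeholders.

```sas
/* Program 1: Oracle table through SAS ACCESS Interface to Oracle */
/* (hypothetical connection values)                               */
libname mydb oracle user=sasdemo password="XXXXXXXX"
        path=orapath schema=sales;

proc means data=mydb.orders sum mean;
   where region = 'EAST';
   class product;
   var amount;
run;

libname mydb clear;

/* Program 2: Hive table through SAS ACCESS Interface to Hadoop   */
/* (hypothetical connection values)                               */
libname mydb hadoop server="hive.example.com" port=10000
        user=sasdemo password="XXXXXXXX" schema=sales;

proc means data=mydb.orders sum mean;
   where region = 'EAST';
   class product;
   var amount;
run;

libname mydb clear;
```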

The only difference is the connection options you specify on the LIBNAME statement.

SAS ACCESS Interface to Hadoop, like all SAS ACCESS technologies, is designed to push as much processing as possible into the Hadoop cluster to maximize efficiency. With SQL pass-through, the native query is sent to Hive for execution and thus the data stays in Hadoop and is processed in parallel. When you use the LIBNAME method to process Hive tables, SAS generates Hive SQL (HiveQL) to request data from Hive. For some SAS language elements, SAS automatically converts those language elements into HiveQL equivalents to maximize processing in Hadoop and to limit the volume of data returned to SAS. Examples include WHERE statements for subsetting, summary calculations performed by a handful of procedures including PROC MEANS and PROC FREQ, and KEEP or DROP dataset options that limit the number of columns returned.
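The two methods described above can be sketched as follows. This is a hedged illustration, not a definitive implementation: the connection values and the orders table are hypothetical, and how much of the second step is actually converted to HiveQL depends on the SAS release and configuration. The SASTRACE= system option is used here only as one way to write the SQL that SAS sends to Hive to the log, so you can check what was pushed down.

```sas
/* Method 1: explicit SQL pass-through.                              */
/* The inner query is HiveQL and runs in Hive; only the summarized   */
/* rows come back to SAS. Connection values are hypothetical.        */
proc sql;
   connect to hadoop (server="hive.example.com" port=10000
                      user=sasdemo password="XXXXXXXX" schema=sales);
   create table work.region_totals as
      select * from connection to hadoop
         ( select region,
                  count(*)    as n_orders,
                  sum(amount) as total_amount
             from orders
            group by region );
   disconnect from hadoop;
quit;

/* Method 2: LIBNAME engine. SAS generates the HiveQL itself.        */
/* SASTRACE writes the generated SQL to the SAS log for inspection.  */
options sastrace=',,,d' sastraceloc=saslog nostsuffix;

libname hdp hadoop server="hive.example.com" port=10000
        user=sasdemo password="XXXXXXXX" schema=sales;

proc freq data=hdp.orders(keep=region amount);
   where amount > 100;      /* candidate for conversion to HiveQL    */
   tables region;
run;

options sastrace=off;
libname hdp clear;
```

Reading the log after the second step is a simple way to confirm whether the WHERE clause, the column subsetting, and the frequency counts were handled in Hadoop or in SAS.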

What if you wanted to read and process a Hive table in Hadoop with a DATA step? With the exception of a small set of language elements like WHERE statements and KEEP and DROP dataset options, the DATA step language is not converted into HiveQL equivalent processes by the SAS ACCESS Interface to Hadoop and the data needs to be returned to SAS for further processing. To perform DATA step-like processing on Hive tables in Hadoop we need to go beyond generating HiveQL because of the unique processing capabilities of the DATA step. The solution is DS2 and the Code Accelerator for Hadoop … which we’ll go over in more detail next week in Part 2 on DS2 programming with Hadoop.

Interested in learning more about HiveQL and Hadoop? Check out these resources to dive deeper.

- Introduction to SAS and Hadoop will equip you with the knowledge you need to effectively implement BASE SAS and SAS Access Interface to Hadoop programming methods.

- If you are looking for a course more focused on open source Hadoop technology with a briefer overview of SAS data management programming techniques for Hadoop, you might be interested in taking Hadoop Data Management with Hive, Pig, and SAS.

- SAS 9.4 Supported Hadoop Distributions lists the minimum supported versions of Hadoop distributions and Kerberos. Check out the tables!

Thanks for the article on SAS Communities. I like your brief explanations of some complex topics. One of my tasks is putting together some training materials for our Hadoop environment. Can I post your article (as is) on our corporate website?

I'm looking forward to your other articles on SAS Hadoop.

Hi RedPlanet. Thanks for your comments! Do feel free to post this on your website and I would be pleased if you did so. Please cite the original article when you do post it.

Hello --- Do you know of any users that install\configure Hadoop, Hive, and Pig directly from Apache and then successfully connect the Hadoop SAS\Access engine to these? Can SAS connect to a stand-alone Hadoop cluster using proc hadoop?

PROC HADOOP is part of base SAS and works now from PC. I created a Hadoop Cluster in UBUNTU 16 LTS and ran the latest hadooptracer.py script from SAS. I then copied over the config files to my PC. Note that the output configs and jars are for a single node pseudo distributed mode configuration. The config files contain the JARS and the XMLs SAS needs to talk to the Hadoop cluster. I placed these on my PC and ran the following program (configs are at \\MY NETWORK SHARE\Hadoop). Note that "Configured Hadoop User" has the .bashrc configured for JAVA and HADOOP on the UBUNTU cluster...

    options SET = SAS_HADOOP_JAR_PATH " \\MY NETWORK SHARE\Hadoop\lib";
    options SET = SAS_HADOOP_CONFIG_PATH " \\MY NETWORK SHARE\Hadoop\conf";

    proc hadoop
       username='Configured Hadoop User on UBUNTU' password='user password';
       hdfs mkdir="/user/new";
    run;

If you go to http://YOURCLUSTER FULL ADDRESS:50070/dfshealth.html#tab-overview > Utilities > Browse the file system > look under “user” and you will see the new HDFS directory. So, it appears to be possible to connect sas to a home-brew cluster.

Hi @mich1

SAS only supports the Hadoop distributions listed on this web page:

https://support.sas.com/en/documentation/third-party-software-reference/9-4/support-for-hadoop.html

Customers running a non-supported Hadoop distribution, or non-supported version of a supported distribution, will not be able to obtain assistance from SAS Technical Support.

Feel free to contact me if you, or anyone else, would like further clarification.

Best wishes,

Jeff

Advisory Product Manager

SAS Data Management Product Line
