SAS Modeling Best Practices - Ask the Expert Q&A

UPDATED for the 2/8/19 session

Did you miss the Ask the Expert session on SAS Modeling Best Practices? Not to worry, you can catch it on-demand at your leisure.

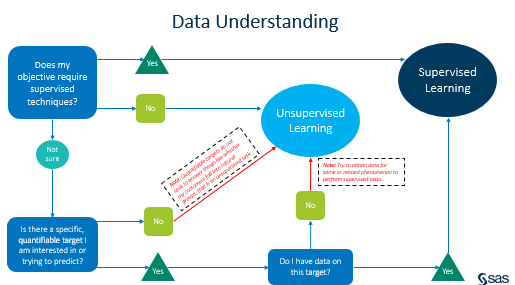

This session provides general guidelines for assessing and determining the best modeling methodology or methodologies for a given business issue. Through demonstrations in SAS® Enterprise Miner™, learn how to:

- Identify and outline your business issue, question or desire, determining whether you want to predict or describe something.

- Determine the type of problem or question at hand.

- Identify the best modeling algorithm or algorithms for the business issue or requirement.

- Measure the effectiveness, performance or accuracy of your model.

- Apply these concepts in SAS Enterprise Miner.

Here are some highlighted questions from the Q&A segment held at the end of the session for ease of reference.

You briefly mentioned there are ways to handle missing values. What are some that you would suggest?

For numeric variables, the most common way to handle missing values is to replace them with the mean or median of the variable in question. Additional methods include the mid-range (the midpoint between the minimum and maximum) and robust estimators such as Tukey’s biweight, Huber, and Andrews’ wave. For categorical variables, the most common method is “count,” wherein you fill the missing values with the most frequent level of the categorical variable. Two additional methods are distribution, wherein the replacement values are calculated from random percentiles of the variable’s distribution, and tree imputation, wherein each input is in turn treated as the target and its replacement values are predicted from the remaining input and rejected variables. These two methods also apply to numeric variables.
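As a language-neutral illustration (not how Enterprise Miner’s Impute node is implemented), here is a minimal Python sketch of the simplest replacement rules mentioned above: mean, median, and mid-range for numeric inputs, and the most frequent level (“count”) for categorical inputs. Missing values are assumed to be represented as `None`, and the function names are hypothetical.

```python
from collections import Counter
from statistics import mean, median

def impute_numeric(values, method="median"):
    """Replace None entries with a statistic computed from the observed values.
    Only an illustrative subset of methods is supported: mean, median, midrange."""
    observed = [v for v in values if v is not None]
    if method == "mean":
        fill = mean(observed)
    elif method == "median":
        fill = median(observed)
    elif method == "midrange":
        # Mid-range: halfway between the smallest and largest observed value.
        fill = (min(observed) + max(observed)) / 2
    else:
        raise ValueError(f"unsupported method: {method}")
    return [fill if v is None else v for v in values]

def impute_categorical(values):
    """Replace None entries with the most frequent level (the 'count' approach)."""
    mode_level = Counter(v for v in values if v is not None).most_common(1)[0][0]
    return [mode_level if v is None else v for v in values]
```

With the sample incomes `[40, None, 55, 1000, 60]`, the median fills in 57.5 while the mean fills in 288.75. This shows why the median (or a robust estimator) is often preferred when a variable has outliers.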

When would you use PCA vs. variable clustering for dimension reduction?

Both methodologies work well for dimension reduction. They both remove multicollinearity and decrease variable redundancy. However, the results of variable clustering are easier to interpret than the results of PCA. Both also become computationally expensive as the size of the data set grows, and neither handles categorical variables well. Both methods convert categorical variables to dummy variables, wherein each level of the categorical variable becomes its own variable. This conversion increases the number of dimensions, which can slow down the process. Another option for categorical variables is weight of evidence (WOE) encoding.
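To make the dimension growth concrete, here is a minimal Python sketch of dummy (one-hot) encoding. The `one_hot` function and the sample column are hypothetical, and this is not how SAS performs the conversion internally. Each distinct level becomes its own 0/1 column, so a 50-level variable such as state alone adds 50 inputs. WOE encoding, by contrast, replaces a categorical variable with a single numeric column.

```python
def one_hot(column):
    """Expand a categorical column into one 0/1 dummy column per level.
    Levels are sorted so that the column order is deterministic."""
    levels = sorted(set(column))
    rows = [[1 if value == level else 0 for level in levels] for value in column]
    return levels, rows

levels, rows = one_hot(["NY", "CA", "TX", "CA"])
# 1 categorical input has become len(levels) == 3 numeric inputs.
```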

When would you use random forests instead of decision trees?

This depends on your priorities. If you need a model built quickly and one that is easy to interpret, you would most likely apply the decision tree algorithm: it handles large data sets well, is fast to compute, and is easier to understand and explain. Decision trees, however, are prone to overfitting the training data and are therefore sensitive to small changes in that data. Random forests, which combine many trees grown on different bootstrap samples of the data and random subsets of the inputs, limit overfitting without substantially increasing the error due to bias. Therefore, you’re more likely to get a better-performing model with random forests. Keep in mind, however, that they are computationally expensive and harder to interpret. Ultimately, it depends on how much time you have, what level of transparency you need to provide, and how well your model needs to perform.
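The ensemble idea behind random forests can be sketched in a few lines of Python. This is a simplified stand-in that assumes a single numeric input and a binary target. It fits one-split trees (“stumps”) on bootstrap samples and combines them by majority vote, a technique known as bagging. A true random forest also samples a random subset of inputs at each split and grows deeper trees, which this sketch omits. All names are hypothetical.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-split decision tree ('stump') on one numeric input: try each
    midpoint between distinct x values and keep the split with the fewest
    training errors (the first one found, on ties)."""
    points = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(points, points[1:])] or [points[0]]
    best = None
    for t in thresholds:
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        # Each side predicts its majority class (0 if the side is empty).
        left_label = Counter(left).most_common(1)[0][0] if left else 0
        right_label = Counter(right).most_common(1)[0][0] if right else 0
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda x: left_label if x <= t else right_label

def fit_bagged_stumps(xs, ys, n_trees=25, seed=0):
    """Fit each stump on a bootstrap sample and combine them by majority vote."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        votes = Counter(stump(x) for stump in stumps)
        return votes.most_common(1)[0][0]

    return predict
```

Each stump sees a slightly different resample, so an individual stump’s quirks tend to be outvoted. That averaging is what limits overfitting. It is also why the ensemble is slower to fit and harder to explain than a single tree.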

What evaluation metrics would you suggest using, outside of the ones mentioned?

I mentioned ASE (average squared error) for regression models and misclassification rate (or accuracy) for classification models. However, there are several other metrics for either type of model that you can use for evaluation and should most likely consider alongside those. For classification, you’ll want to investigate the confusion matrix in addition to the misclassification and accuracy rates. If you have information on profit or loss (benefit or cost), multiply it by the corresponding counts (or rates) in the confusion matrix to obtain the total profit or loss for a specific model. Additionally, precision (TP / (TP + FP)) and recall (TP / (TP + FN)) give you an idea of how many false positives and false negatives, respectively, the model produces. The F1 score, the harmonic mean of precision and recall, may tell a more complete story of your classifier’s predictive performance. Log loss is another classification metric. It measures how well the predicted probabilities match the actual outcomes, and the lower the log loss, the better the predictions.

For regression models, RMSE (root mean squared error) is one of the most common measurements, along with ASE or MSE. The explained variance score (the proportion of variance in the data explained by the model) and the R² score (the proportion of variance in the dependent variable that is predictable from the independent variables) are also used to measure goodness of fit.
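The formulas behind these metrics are easy to compute by hand. The following Python sketch assumes a binary target coded 1 (event) and 0 (non-event), predicted probabilities with a 0.5 cutoff, and a hypothetical per-case profit for each confusion-matrix cell. It is an illustration only, not the way Enterprise Miner’s Model Comparison node calculates its statistics.

```python
import math

def classification_metrics(y_true, y_prob, threshold=0.5):
    """Confusion-matrix counts and common metrics for a binary target (1 = event).
    Assumes at least one predicted event and one actual event; otherwise
    precision or recall would divide by zero."""
    y_pred = [1 if p >= threshold else 0 for p in y_prob]
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp)   # fewer false positives -> higher precision
    recall = tp / (tp + fn)      # fewer false negatives -> higher recall
    f1 = 2 * precision * recall / (precision + recall)
    # Log loss: average negative log-likelihood of the true labels (lower is better).
    eps = 1e-15  # guards against log(0)
    log_loss = -sum(
        t * math.log(max(p, eps)) + (1 - t) * math.log(max(1 - p, eps))
        for t, p in zip(y_true, y_prob)
    ) / len(y_true)
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "accuracy": (tp + tn) / len(y_true),
            "precision": precision, "recall": recall, "f1": f1,
            "log_loss": log_loss}

def total_profit(counts, profit):
    """Weight each confusion-matrix cell by a per-case profit (negative = cost)."""
    return sum(counts[cell] * profit[cell] for cell in ("tp", "fp", "fn", "tn"))

def regression_metrics(y_true, y_pred):
    """ASE (= MSE), RMSE, R-squared, and explained variance for a numeric target."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ase = sum(r * r for r in residuals) / n
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    mean_r = sum(residuals) / n
    var_r = sum((r - mean_r) ** 2 for r in residuals) / n
    return {"ase": ase, "rmse": math.sqrt(ase),
            "r2": 1 - (ase * n) / ss_tot,
            "explained_variance": 1 - var_r / (ss_tot / n)}
```

For example, with labels `[1, 1, 0, 0, 1, 0]` and probabilities `[0.9, 0.4, 0.2, 0.6, 0.8, 0.1]`, the confusion matrix is TP = 2, FP = 1, FN = 1, TN = 2. With a hypothetical profit of 100 per true positive and a cost of 20 per false positive, the total profit is 180.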

Is there a minimum response rate (1s in the target variable) that is necessary to build a classification model? Is 5% too low to build a model?

There is no minimum, but you may find that either oversampling the rare event and fitting the model to the oversampled data set, or using techniques designed for rare events, gives you a better predictive model. The Rule Induction node was created to help with rare target events, so you may want to try it out.
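One common way to build an oversampled training set is to keep every event, take a random sample of the non-events, and then adjust the fitted probabilities back to the true population event rate. The Python sketch below shows both steps under those assumptions. The function names, the 50% target event rate, and the dictionary row format are hypothetical. The adjustment is the standard prior-correction (Bayes) formula, not the code of any specific SAS node.

```python
import random

def oversample_events(rows, target="y", event_rate=0.5, seed=0):
    """Keep every event row and randomly sample non-event rows so that events
    make up roughly `event_rate` of the result."""
    rng = random.Random(seed)
    events = [r for r in rows if r[target] == 1]
    non_events = [r for r in rows if r[target] == 0]
    n_non = round(len(events) * (1 - event_rate) / event_rate)
    return events + rng.sample(non_events, min(n_non, len(non_events)))

def adjust_to_prior(p_sample, prior_event, sample_event):
    """Map a probability fitted on the oversampled data back to the
    population event rate (prior correction)."""
    w1 = prior_event / sample_event                # reweights the event class
    w0 = (1 - prior_event) / (1 - sample_event)    # reweights the non-event class
    return p_sample * w1 / (p_sample * w1 + (1 - p_sample) * w0)
```

For example, a predicted probability of 0.5 from a model trained on a 50% event sample corresponds to 0.05 when the population event rate is 5%.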

Where can I find more information about how to assign importance to the target levels, as well as about the cost matrix and the expected value?

The Getting Started with SAS Enterprise Miner book has an example of using the cost matrix and decisioning. You can find it here. Go to the tab for your current version.
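Decisioning with a profit (or cost) matrix comes down to choosing, for each case, the decision with the highest expected profit. Here is a minimal Python sketch that assumes a binary target and a hypothetical two-decision profit matrix. The function name and profit values are illustrative only.

```python
def best_decision(p_event, profit_matrix):
    """Choose the decision with the highest expected profit.
    profit_matrix maps decision -> (profit if event, profit if non-event)."""
    expected = {
        decision: p_event * if_event + (1 - p_event) * if_non_event
        for decision, (if_event, if_non_event) in profit_matrix.items()
    }
    return max(expected, key=expected.get), expected

# Hypothetical: a responder is worth 10, contacting a non-responder costs 1.
profit = {"solicit": (10.0, -1.0), "ignore": (0.0, 0.0)}
decision, expected = best_decision(0.3, profit)
```

With these hypothetical values, soliciting pays off whenever the event probability exceeds 1/11 (about 0.09). This is why a profit matrix can lead to very different decisions than a default 0.5 cutoff.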

Can you show a demo of scoring a test set?

Here is a video to help you with scoring new data within SAS Enterprise Miner.

What is the good practice for variable selection to start the model development process?

There are many theories around variable selection. The good news is that with tools like SAS Enterprise Miner, you can try several and compare the results. We have an Ask the Expert session on Variable Selection that covers several of these methods.

Can modeling be done without using Enterprise Miner? Can modeling be done using Base SAS & SAS EG?

Yes. The analytics lifecycle presented in this session applies to any tool you use: SAS Enterprise Guide, SAS/STAT and Base SAS, SAS Studio, SAS Visual Statistics, SAS Visual Data Mining and Machine Learning, or any combination of these tools or others.

Does SAS Rapid Predictive Modeler (RPM) automatically transform variables if they are not normally distributed?

Yes. The method used depends on which flow you choose (basic, intermediate, or advanced). Here is a link where you can learn more about what RPM is doing behind the scenes.
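The details of what RPM does are in the linked material. As a generic illustration only, here is a Python sketch of one common rule of thumb: measure a variable’s skewness and apply a log(1 + x) transformation when the variable is strongly right-skewed. The 1.0 threshold, the non-negativity check, and the function names are assumptions, not RPM’s actual settings.

```python
import math

def skewness(values):
    """Population skewness: third central moment divided by the second
    central moment raised to the 1.5 power (undefined for constant data)."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

def maybe_log_transform(values, max_skew=1.0):
    """Apply log(1 + x) to a non-negative variable whose skewness exceeds max_skew;
    otherwise return the values unchanged."""
    if skewness(values) > max_skew and min(values) >= 0:
        return [math.log1p(v) for v in values]
    return list(values)
```

For values spanning several orders of magnitude, such as `[1, 10, 100, 1000, 10000]`, the skewness drops from about 1.5 to close to 0 after the transformation.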

What do you think about using variable information value and weight of evidence in combination with R-square to select variables?

Information value (IV) and weight of evidence (WOE) are a widely used variable selection technique. If you have the Credit Scoring add-on to SAS Enterprise Miner, you can compute them directly in Enterprise Miner. Otherwise, you can use PROC HPBIN to calculate these statistics. These options are covered in another Ask the Expert session on Variable Selection.
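For reference, here is a minimal Python sketch of the WOE and IV calculations for one binned input and a binary target. Sign conventions for WOE differ between sources. This sketch assumes WOE = ln(% of non-events in the bin / % of events in the bin), and the function name and counts are hypothetical. The IV comes out the same under either sign convention, as long as it is applied consistently.

```python
import math

def woe_iv(bins):
    """bins: list of (events, non_events) counts, one pair per bin.
    Returns the WOE of each bin and the total information value.
    A bin with zero events or zero non-events makes its WOE undefined."""
    total_events = sum(e for e, _ in bins)
    total_non = sum(n for _, n in bins)
    woes, iv = [], 0.0
    for events, non_events in bins:
        pct_event = events / total_events      # share of all events in this bin
        pct_non = non_events / total_non       # share of all non-events in this bin
        woe = math.log(pct_non / pct_event)
        woes.append(woe)
        iv += (pct_non - pct_event) * woe
    return woes, iv

# Hypothetical counts for three bins of one input: (events, non-events).
woes, iv = woe_iv([(10, 90), (30, 70), (60, 40)])
```

Here the WOE falls steadily from the first bin to the last (positive, then near zero, then negative), and the IV is about 0.97.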

Are data transformations applied to Train, Validation, and Test sets? Or only to Train set?

The data transformations are applied to all the data sets you have created. So, if you have created training, validation, and test data sets, each will include the transformed variables. The transformations are also included in the SAS score code that the Score node creates for the best model.
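The key point is that the same fitted transformation is applied to every partition and to any new data being scored. Here is a minimal Python sketch of that idea, using standardization as the example transformation. It assumes, as is common practice, that the transformation’s parameters are estimated on the training partition only. `fit_standardizer` is a hypothetical name.

```python
from statistics import mean, pstdev

def fit_standardizer(train_values):
    """Learn the transformation parameters from the training partition only,
    and return a function that applies them unchanged to any data."""
    mu, sigma = mean(train_values), pstdev(train_values)
    return lambda values: [(v - mu) / sigma for v in values]

train, valid, test = [10, 20, 30], [15, 25], [40]
standardize = fit_standardizer(train)
# The same function (mean 20, sd from training) transforms every partition.
scored = [standardize(part) for part in (train, valid, test)]
```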

Recommended Resources

Want more tips? Be sure to subscribe to the Ask the Expert board to receive follow-up Q&A, slides, and recordings from other SAS Ask the Expert webinars. To subscribe, select Subscribe from the Options drop-down button above the articles.

I wouldn't use either PCA or clustering for dimension reduction, because neither of them takes the Y-variable(s) into account. You could get clusters and principal components that are not very predictive of the Y-variables.

Why not use a method that performs dimension reduction by looking for dimensions that ARE predictive of Y (if such dimensions exist)? Makes perfect sense to me, rather than the recommendation to use PCA or clustering. That method is Partial Least Squares Regression.

The answers above were provided by Chelsea Zerrenner, the presenter of this topic. One of the great benefits of using SAS Enterprise Miner is that there are many methods for variable selection or variable reduction. Many of these methods are covered in two separate Ask the Expert sessions on Variable Selection and Model Selection. Click the links to review the on-demand versions or attend the next live session.

SAS Enterprise Miner makes it easy for you to try many of the methods and compare the results to see which method creates the most reliable and/or accurate model. I'm often pleasantly surprised when PCA followed by a modeling technique gives me the best results. And I find Variable Clustering is often the best method when I have data from surveys or other studies with closely related variables.

It's a great discussion, and one that ultimately comes down to everyone's least favorite answer to which method is best: "It depends." The great news is that with SAS Enterprise Miner we can quickly test multiple methods.

Chelsea also provided some additional details...

First, there are a number of other methods you can use for dimension reduction. PCA and variable clustering were mentioned because they are the most common techniques.

Second, it seems you may be confusing dimension reduction with variable selection. A technique like partial least squares is used to identify the most powerful predictors of your target. PCA and similar techniques are used to reduce the dimensions of your data while retaining the information. This way, when you do perform a variable selection technique, you'll be drawing from a smaller dimensional space, which reduces the time that something like variable selection takes.

You would most likely combine the two techniques: PCA or a similar method to reduce your dimensions, then partial least squares to select the best predictors of your target. For example, you may have 100 inputs in your data. You apply PCA to reduce these inputs to new components (let's say 20), and then apply partial least squares regression, which finds that only 5 of the components are good predictors of the target.

The difference between partial least squares and PCA is that partial least squares identifies inputs that are good predictors, whereas PCA reduces the inputs to a smaller number of components while retaining a certain amount of information (generally, 90% of the variance in the data). Partial least squares needs information on the dependent variable to make its selection. PCA and variable clustering don't need the dependent variable, because they try to preserve the information in the inputs while reducing them to new components or clusters.
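The “90% of the variance” rule mentioned above translates into a simple calculation on the eigenvalues produced by PCA: keep the smallest number of components whose cumulative share of the total variance reaches the threshold. Here is a Python sketch with hypothetical eigenvalues. The function name is illustrative.

```python
def components_for_variance(eigenvalues, target=0.90):
    """Smallest number of principal components whose eigenvalues explain
    at least `target` of the total variance."""
    total = sum(eigenvalues)
    cumulative = 0.0
    for k, ev in enumerate(sorted(eigenvalues, reverse=True), start=1):
        cumulative += ev
        if cumulative / total >= target:
            return k
    return len(eigenvalues)
```

For eigenvalues 6, 2, 1.5, 0.3, and 0.2, the first three components explain 95% of the variance, so three are kept.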

PLS can reduce dimensions, it is not just for prediction.

The explanation above ignores the fact that PCA might not find predictive variables/predictive dimensions, because it doesn't use the Y-variable(s), which to me is a fatal drawback of the method. Just because PCA is a "common technique" does not make it a good technique.

In my opinion, PCA followed by PLS on the PCA dimensions is an abomination that gives you the worst features of PCA (it may not be predictive) and loses any benefit of PLS. Why not just use PLS for dimension reduction, since it will find dimensions that are predictive (if such dimensions exist)?
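The disagreement above can be seen in a toy example. In the hypothetical data below, the first input has a large variance but is unrelated to Y, while the second has a small variance and determines Y exactly. The Python sketch computes two directions. The first principal direction is the direction of maximum variance in the inputs, found by power iteration. The first PLS weight vector, for a single response, is proportional to X'Y, the direction of maximum covariance with Y. This illustrates the mechanics only. It is not a claim about which approach wins on real data, and it is not PROC PLS or PROC PRINCOMP code.

```python
import math

def center(rows):
    """Subtract each column's mean from every row."""
    means = [sum(col) / len(rows) for col in zip(*rows)]
    return [[v - m for v, m in zip(row, means)] for row in rows]

def first_pc(rows, iterations=100):
    """First principal direction (max variance of X) via power iteration on X'X."""
    x = center(rows)
    p = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(p)] for i in range(p)]
    v = [1.0] * p
    for _ in range(iterations):
        w = [sum(xtx[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return v

def first_pls_weight(rows, y):
    """First PLS weight vector for one response: proportional to X'y (centered)."""
    x = center(rows)
    y_mean = sum(y) / len(y)
    w = [sum(r[j] * (t - y_mean) for r, t in zip(x, y)) for j in range(len(x[0]))]
    norm = math.sqrt(sum(c * c for c in w))
    return [c / norm for c in w]

rows = [[-3, -1], [3, -1], [-3, 1], [3, 1]]   # input 1: large variance, unrelated to y
y = [-1, -1, 1, 1]                            # y equals input 2
```

Here the first principal direction points almost entirely along the first input, which carries no information about Y, while the first PLS direction points along the second input. On other data sets the two directions can coincide, which is consistent with the advice in this thread to try several methods and compare.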

You are right that PCA is not always a great solution for prediction. I have found exceptions where doing PCA followed by PLS has given me excellent predictions. It's all about the data as to what will work best. I suggest that when you are doing predictive modeling, you try as many options as time allows. This gives you the best chance of finding the best solution to your problem.

If PCA followed by PLS doesn't work for your data and your application, then there are many other methods that do use the Y to help select important variables.

Personally, I never use only one method for variable selection. I like the feature in SAS Enterprise Miner (the Metadata node) that allows me to combine the results of several methods to determine my input variables.

How was the problem of monotonicity resolved? Which node in SAS EM helps in finding WOE and IV (other than Interactive Grouping)?

WOE and IV are available through point-and-click in SAS Enterprise Miner in the Interactive Grouping node. If you do not have access to it, you can use the SAS Code node and the following code to calculate WOE and IV.

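```sas
/* First pass: bin the inputs and save the bin definitions.            */
/* work.mydata, income, and balance are placeholders for your own data. */
proc hpbin data=work.mydata numbin=5;
   input age / numbin=4;      /* age gets 4 bins; the other inputs use NUMBIN=5 */
   input income balance;      /* list your other numeric inputs here */
   ods output Mapping=Mapping;
run;

/* Second pass: compute WOE and IV for the binned inputs against target Y. */
proc hpbin data=work.mydata WOE BINS_META=Mapping;
   target Y / level=nominal order=desc;
run;
```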

The documentation for hpbin can be found here.

And a video on proc hpbin is available here.

There are several papers that also talk about this subject.

- SAS® Macros for Binning Predictors with a Binary Target

- Weight of Evidence Coding for the Cumulative Logit Model

- Expanding the Use of Weight of Evidence and Information Value to Continuous Dependent Variables for ...
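For readers curious about the first HPBIN step, here is a Python sketch of equal-width (“bucket”) binning, which we understand to be HPBIN’s default binning method. Check the HPBIN documentation for its exact handling of bin boundaries, which this sketch does not claim to reproduce. The function name is hypothetical. Each value is assigned a 0-based bin index, and the maximum value is placed in the last bin.

```python
def bucket_bins(values, numbin):
    """Equal-width binning: split [min, max] into `numbin` intervals of equal
    width and return the 0-based bin index of each value."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / numbin
    if width == 0:
        return [0] * len(values)  # constant variable: everything in one bin
    # The maximum would otherwise land in bin `numbin`, so clamp it to the last bin.
    return [min(int((v - lo) / width), numbin - 1) for v in values]
```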

thanks
