Scoring data and fit statistics in SAS E Miner

Hi folks,

I am building a predictive model in SAS E Miner, and through model comparison I chose the Neural Network model as the best. I have also imported scoring data and scored the new data with the Neural Network model. I got predicted values and predicted probabilities in a new table, but the requirement is also to get the fit statistics for the newly scored data.

Please help!!

Accepted Solutions

Please note that to compute assessment statistics, you will need to have the outcome/target information for each observation. You cannot assess the fit if the outcome is unknown. In most cases, you are scoring new data where the outcome is unknown. If you later want to assess how the model performed, you can use a Model Import node to import a scored data set containing predicted values (or predicted probabilities) and compute the assessment statistics.

To do so, follow these steps:

1 - Add the scored data as a new Input data source and set the role to Training (even though it is a 'scored' data set)

Note: You will need a separate Model Import node flow for each scored data set you wish to import.

2 - Make sure the target variable has the same name as the one used in the SAS Enterprise Miner modeling flow

3 - Make sure at least one variable (even a dummy variable) is listed as an Input variable

4 - Make sure any binary/nominal/ordinal target variable has the same levels, sorted in the same order, as the one used in the SAS Enterprise Miner modeling flow

5 - In the Model Import node, map the probability of the target event to the correct level of the target variable (if using binary/nominal/ordinal target variable).

You can then view the Assessment information in the Model Comparison node.

As Wendy mentioned, if you have the outcome/target information and the input data, you can also specify the data source as Test (assuming you don't already have a Test data set) and pass it to the first node beyond the Data Partition node. If no Data Partition node is present, connect it to the same node the training and/or validation data set is connected to. SAS Enterprise Miner will then create assessment information for the 'Test' data (which is actually your scored data) as part of the flow.
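
As a cross-check outside the Enterprise Miner flow, the same kind of assessment can be sketched in a few lines of Python once the scored table holds both the observed target and the predicted event probability. This is only an illustration: the function name `fit_statistics`, the 0/1 coding of the target, the 0.5 cutoff, and the sample rows are all assumptions, and the statistics reported by the Model Comparison node may be defined differently.

```python
from typing import Sequence


def fit_statistics(actual: Sequence[int], p_event: Sequence[float], cutoff: float = 0.5) -> dict:
    """Compute simple fit statistics for a binary target.

    actual  : observed outcomes coded 1 (event) or 0 (non-event)
    p_event : predicted probability of the event for each observation
    """
    if len(actual) != len(p_event) or not actual:
        raise ValueError("actual and p_event must be non-empty and the same length")

    n = len(actual)
    # Average squared error: mean squared gap between outcome and predicted probability.
    ase = sum((y - p) ** 2 for y, p in zip(actual, p_event)) / n
    # Misclassification rate: share of rows whose predicted class (at the cutoff) is wrong.
    wrong = sum(1 for y, p in zip(actual, p_event) if (p >= cutoff) != (y == 1))
    return {"n": n, "ase": ase, "misclassification_rate": wrong / n}


# Hypothetical scored rows: (observed target, predicted event probability).
scored = [(1, 0.9), (0, 0.2), (1, 0.4), (0, 0.6), (0, 0.1)]
stats = fit_statistics([y for y, _ in scored], [p for _, p in scored])
print(stats)
```

Note that this only works because the observed outcome is present in every row; without it, neither statistic can be computed, which is the point made at the start of this answer.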

Can you just clarify for me what your exact steps are?

You have a flow with a Model Comparison node that chooses the Neural Network model.

Do you then connect the Model Comparison node, along with another Input Data node holding the new data to score (with the observed target included), to a Score node? If so, what role is assigned to your Input Data?

Then you want assessment for that, correct?

Please let me know if I'm understanding correctly!

Hi,

Thanks for the reply!

Yes, a Model Comparison node is used in the flow, and it chooses the Neural Network as the best model.

Yes, I attached the new input data along with the Model Comparison node and used a Score node to predict the new cases.

The Input role is assigned to the Input Data node.

Yes, I need an assessment of the input data.

There might be a better way to do this, but the only way I can figure is to set the role of this Input Data node to Test (this assumes you don't already have a Test partition), and connect that directly to the Neural Network node instead of the Score node, and you will get the assessment statistics there. The Score node is typically used for scoring new data where the target is unknown.

Dear Wendy, thank you. Can this also be done with the Scorecard node, instead of the Neural Network node, to assess the fit statistics?

I receive an error when trying to do it.

Thanks

Kind regards,

Mari

Hi Doug,

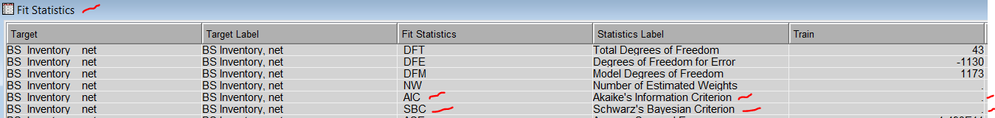

My neural networks do not have AIC in their fit statistics (SAS EM 14.3, interval target). What is the reason for that?

Please help me with that. Thank you very much.

Regards,

Stewart

"My neural networks do not have AIC in their fit statistics (SAS EM 14.3, interval target). What is the reason for that?"

The AIC statistic is a "penalized" criterion: it penalizes a model based on its number of parameters. The AIC is only computed for training data (not validation or test data), and only when the data can estimate the model parameters while retaining degrees of freedom for error. Because neural network models use the same predictors in multiple ways, they can require more parameters than the data can fit, in which case the node results show negative degrees of freedom for error and no AIC is reported.
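
To make the degrees-of-freedom argument concrete, here is a rough Python sketch. It rests on assumptions not stated in this thread: a single fully connected hidden layer, the common least-squares form AIC = n·ln(SSE/n) + 2k for an interval target, and a rule that no AIC is returned when n − k is not positive. The exact formula and rule used by SAS Enterprise Miner may differ, and the function names are hypothetical.

```python
import math
from typing import Optional


def mlp_parameter_count(n_inputs: int, n_hidden: int, n_outputs: int = 1) -> int:
    """Weights and biases in a network with one fully connected hidden layer."""
    hidden = (n_inputs + 1) * n_hidden      # input-to-hidden weights plus hidden biases
    output = (n_hidden + 1) * n_outputs     # hidden-to-output weights plus output biases
    return hidden + output


def aic_if_estimable(sse: float, n_obs: int, n_params: int) -> Optional[float]:
    """Return n*ln(SSE/n) + 2k, or None when it cannot be computed.

    None is returned when no error degrees of freedom remain (n - k <= 0)
    or when SSE is not positive (the logarithm would be undefined).
    """
    dfe = n_obs - n_params
    if dfe <= 0 or sse <= 0:
        return None
    return n_obs * math.log(sse / n_obs) + 2 * n_params


k = mlp_parameter_count(n_inputs=20, n_hidden=10)   # (20+1)*10 + (10+1)*1 = 221
print(k, aic_if_estimable(sse=50.0, n_obs=200, n_params=k))     # 200 - 221 < 0, so no AIC
print(aic_if_estimable(sse=50.0, n_obs=1000, n_params=k))       # enough rows, AIC is available
```

With 20 inputs and 10 hidden units the network already has 221 parameters, so a training set of 200 rows leaves negative degrees of freedom for error, while 1,000 rows leaves plenty.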

Hope this helps!

Cordially,

Doug

Hi,

I have built a model and also found the champion model. I would like to test how the model is performing. I have four months of data with the outcome. My question is: how should I proceed with validating the model by calculating a confusion matrix (PPV, sensitivity, etc.)?

Will you please walk me through the process?

Thanks

"I have built a model and also found the champion model. I would like to test how the model is performing. I have four months of data with the outcome. My question is: how should I proceed with validating the model by calculating a confusion matrix (PPV, sensitivity, etc.)? Will you please walk me through the process?"

Validation of a model is typically done during the training process by using a holdout sample of data (called the "validation" data) to compare candidate models which were fit to the training data. It is not uncommon to choose the candidate model which performed best on the validation data and then put that model into production for regular scoring purposes.

Unfortunately, models have a "shelf life" and tend to perform somewhat more poorly over time. You can evaluate ongoing performance over time in a variety of ways but they all involve comparing recent model performance to how the model performed when it was first implemented to assess how that performance has changed over time. As needed, you can build new "challenger" models to compete against previously implemented "champion" models to determine whether the "champion" model should be replaced by the "challenger" model for regular scoring purposes.

The approach to monitoring model performance depends on what tools you have available. SAS offers products such as SAS Model Manager that assist with managing your models, or you can use a more manual method, but the basic outline is similar:

1 - Build the best possible model (the "champion" model) in light of your business objectives

2 - Implement the "champion" model into your production scoring.

3 - Monitor the performance of the "champion" model at regular intervals.

4 - Build new "challenger" models if the "champion" model's performance becomes too poor, if the business objectives or climate change, or if substantial time has passed

5 - Compare performance of the "challenger" and "champion" models to determine whether to retain or replace the previous champion model.

6 - Repeat steps 3-5 as needed
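
For the manual route, step 3 can be sketched in Python as a per-month confusion matrix with sensitivity, specificity, and PPV. This is a minimal illustration under stated assumptions: the record layout (month, actual outcome coded 0/1, predicted event probability), the 0.5 cutoff, the sample values, and the function name `confusion_metrics` are all hypothetical and would need to match your own scored data.

```python
from collections import defaultdict


def confusion_metrics(rows, cutoff=0.5):
    """rows: iterable of (actual, p_event) with actual coded 1 = event, 0 = non-event."""
    tp = fp = tn = fn = 0
    for actual, p_event in rows:
        predicted = 1 if p_event >= cutoff else 0
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 1 and actual == 0:
            fp += 1
        elif predicted == 0 and actual == 0:
            tn += 1
        else:
            fn += 1

    def ratio(num, den):
        return num / den if den else None  # undefined when the denominator is zero

    return {
        "tp": tp, "fp": fp, "tn": tn, "fn": fn,
        "sensitivity": ratio(tp, tp + fn),   # share of actual events that were caught
        "specificity": ratio(tn, tn + fp),   # share of actual non-events correctly rejected
        "ppv": ratio(tp, tp + fp),           # share of predicted events that were real
    }


# Hypothetical scored records: (month, actual outcome, predicted event probability).
records = [
    ("2024-01", 1, 0.8), ("2024-01", 0, 0.3), ("2024-01", 1, 0.6), ("2024-01", 0, 0.7),
    ("2024-02", 1, 0.4), ("2024-02", 0, 0.2), ("2024-02", 1, 0.9), ("2024-02", 0, 0.6),
]

by_month = defaultdict(list)
for month, actual, p_event in records:
    by_month[month].append((actual, p_event))

monthly = {month: confusion_metrics(rows) for month, rows in sorted(by_month.items())}
for month, m in monthly.items():
    print(month, m)
```

Comparing these monthly figures against the values seen when the model was first implemented gives a simple signal for when a challenger model is worth building.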

Hope this helps!

Cordially,

Doug
