The Illustrated Guide to Parallel LASR Loading

The Distributed SAS LASR Analytic Server can crank through huge volumes of data at the speed of electric light. However, its ability to crush complex statistical analytics so quickly is only part of the challenge of working in a production environment. Before LASR can act on the data, that data first needs to be loaded into RAM where LASR can work with it. And that data often comes from the more conventional world of spinning disk drives, relational databases, flat files, and SAS data sets. With LASR setting a very high bar for perceived performance (“visualize a billion rows in an instant!”), how can we get data loading to approach anything like that level of speed? By enabling multiple concurrent I/O streams that transfer the data across parallel pathways between the source system and LASR, eliminating serial bottlenecks along the way.

Serial Loading

Before jumping into parallel loads, let's look at what we're trying to avoid when possible: serial loads. SAS supports loading LASR with data utilizing a serial transfer technique. Data is sent to the LASR Root Node which then distributes the data evenly across all of the LASR Workers.

Figure 1. Serial loading a SAS LASR Analytic Server.
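The distribution step in Figure 1 can be pictured with a toy model. The sketch below is plain Python, not SAS code. It assumes the Root Node deals rows out round-robin, which is only one way to get an even spread, and the function name `distribute_serial` is hypothetical.

```python
from typing import List, Sequence


def distribute_serial(rows: Sequence[int], n_workers: int) -> List[List[int]]:
    """Toy model of a serial load: one Root Node sees every row.

    Assumption: rows are dealt round-robin so each Worker ends up
    with roughly the same number of rows.
    """
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    workers: List[List[int]] = [[] for _ in range(n_workers)]
    # Every row passes through this single loop: the serial bottleneck.
    for i, row in enumerate(rows):
        workers[i % n_workers].append(row)
    return workers
```

Calling `distribute_serial(range(10), 3)` spreads ten rows so that no Worker holds more than one row above any other. The point of the model is the single loop: however many Workers there are, all of the data funnels through one path.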

In some cases, serial loading is the only option available to us, such as for the SAS Visual Analytics Autoload facility and LASR's Reload-on-Start feature. Furthermore, it's used as a fallback technique in some situations if a parallel load cannot occur. So, it's a good thing we have the ability to load data to LASR serially - but when working with large volumes of data, we prefer faster loading wherever possible. That's where the parallel loading options come in.

Of course, if you're using the Non-Distributed SAS LASR Analytic Server - that is, LASR running on just one host machine - then by definition it is only capable of serial loading.

LASR to LASR

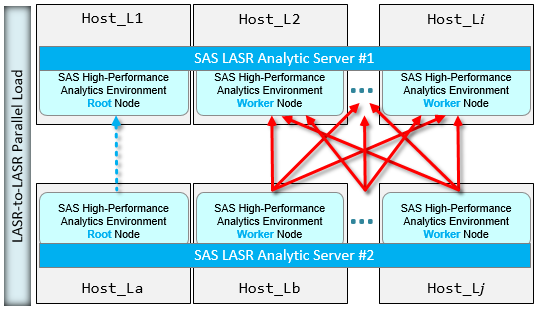

In an environment where you have more than one LASR Server running, it is possible to copy in-memory tables from one LASR to another using the IMXFER procedure.

Figure 2. Parallel load between two SAS LASR Analytic Servers.

The LASR servers can reside in the same collection of physical hosts alongside each other or they can be physically separate running on different machines. Other than having enough physical RAM available to complete the transfer, there's no requirement for both LASR servers to span the same number of hosts. Furthermore, if there are network limitations such that the Workers in one LASR server cannot "see" the other LASR server's Workers, then use the HOSTONLY argument to force a serial transfer of the data between the Root Nodes of the two LASR Servers.
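To make the difference between the parallel path and the HOSTONLY fallback concrete, here is a small Python model. It is a sketch, not the IMXFER procedure itself: the names `deal` and `transfer`, the `host_only` flag, and the round-robin split are all assumptions made for illustration. It also shows that the source and target servers need not span the same number of hosts.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def deal(rows: Sequence[int], n_targets: int) -> List[List[int]]:
    """Split rows into n_targets slices, round-robin (an assumed policy)."""
    return [list(rows[t::n_targets]) for t in range(n_targets)]


def transfer(source_partitions: Sequence[Sequence[int]], n_targets: int,
             host_only: bool = False) -> List[List[int]]:
    """Toy model of copying a table from one set of Workers to another."""
    if n_targets < 1:
        raise ValueError("n_targets must be at least 1")
    if host_only or not source_partitions:
        # Serial path: everything funnels through one Root-to-Root stream.
        all_rows = [row for part in source_partitions for row in part]
        return deal(all_rows, n_targets)
    # Parallel path: one concurrent stream per source Worker.
    with ThreadPoolExecutor(max_workers=len(source_partitions)) as pool:
        pieces = list(pool.map(lambda part: deal(part, n_targets),
                               source_partitions))
    # Merge what each stream produced into the target Workers.
    targets: List[List[int]] = [[] for _ in range(n_targets)]
    for per_source in pieces:
        for t, chunk in enumerate(per_source):
            targets[t].extend(chunk)
    return targets
```

In this model, three source Workers can feed two or four target Workers equally well, and setting `host_only=True` delivers the same rows through a single stream instead of several.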

SAS Embedded Process to LASR

The SAS Embedded Process is part of our In-Database technology offerings. The EP is supported on numerous data providers, including DB2, Greenplum, Netezza, Oracle, SAP HANA, Teradata, and more. The EP is also supported on Hadoop distributions from Cloudera, Hortonworks, IBM BigInsights, Pivotal HD, and MapR. The EP on most of those platforms can perform parallel transfer of data to the SAS LASR Analytic Server - refer to the SAS 9.4 In-Database Products: Administrator's Guide for details.

Figure 3. Parallel load from the SAS In-Database Embedded Process to the SAS LASR Analytic Server.

When the EP has been deployed to a supported Hadoop cluster and is working with SAS/ACCESS Interface to Hadoop, it can transfer data in parallel from several kinds of data stored in HDFS, including:

- Delimited text files (CSV)

- Hive (and Impala) tables

- SPD Engine tables

- SPD Server tables

There is, however, one SAS proprietary file format stored in HDFS which the EP cannot work with: SASHDAT. That's because LASR itself is responsible for all aspects of interaction with SASHDAT files.

SASHDAT on HDFS to LASR

Loading from the SASHDAT file format is the fastest and most efficient technique we can use to (re-)load data into LASR. For most Hadoop distributions, this requires that the LASR services are symmetrically co-located with their HDFS counterparts.

Figure 4. SASHDAT supports parallel load from HDFS to the SAS LASR Analytic Server.

As shown in Figure 4, the contents of a SASHDAT file are read from physical disk directly into the table stored in memory (or written to disk directly from it).

SASHDAT on MapR-FS to LASR

With the M3 release of SAS 9.4, we added support for the MapR Distribution of Hadoop with our SAS High-Performance Analytics Environment software (which provides LASR) as well as with the SAS In-Database Embedded Process. One of the interesting aspects of MapR's implementation is that they do not have HDFS. Instead, they've created an entirely new and improved distributed filesystem named MapR-FS. LASR interacts with MapR-FS to read and write SASHDAT files using tried-and-true NFS (Network File System).

Figure 5. SASHDAT supports parallel load from MapR-FS using NFS to the SAS LASR Analytic Server.

This means that LASR treats MapR-FS as a standard network attached storage solution and doesn't require any special client utility to work with it - we just need the NFS mount point to MapR-FS defined identically on all LASR host machines. Notice, then, that on the MapR platform we no longer require symmetric co-location of LASR and HDFS services because there are no HDFS services there. There's no Hadoop NameNode nor are there any Hadoop DataNodes. MapR-FS has its own architecture - but for compatibility reasons, you'll find that MapR does support the HDFS APIs and command-line utilities. This means software offerings (such as other Hadoop projects) can talk to MapR-FS as if it were HDFS when necessary.
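The requirement that the NFS mount point be defined identically on every LASR host lends itself to a quick sanity check. The Python sketch below is only an illustration: the function name, the host names, and the paths are hypothetical, and it compares configured path strings rather than inspecting what is actually mounted on each machine.

```python
from typing import Dict, List


def hosts_with_mismatched_mount(mounts: Dict[str, str], expected: str) -> List[str]:
    """Return the hosts whose configured mount point differs from `expected`.

    Trailing slashes are ignored, so "/mapr" and "/mapr/" count as the same path.
    """
    want = expected.rstrip("/") or "/"
    return sorted(
        host for host, path in mounts.items()
        if (path.rstrip("/") or "/") != want
    )


# Hypothetical inventory of LASR hosts and their MapR-FS mount points.
inventory = {"lasr01": "/mapr", "lasr02": "/mapr/", "lasr03": "/mnt/mapr"}
mismatched = hosts_with_mismatched_mount(inventory, "/mapr")
```

With this made-up inventory, only `lasr03` is flagged, since its path differs from the one the other hosts share.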

CAS and SASHDAT in the Future

With the release of SAS Viya and SAS Cloud Analytic Services (CAS), the lessons learned from years of previous iterations of in-memory analytics are providing new benefits. Thanks to the trailblazing work that lets us use SASHDAT tables on MapR over NFS in SAS 9.4 today, with CAS under SAS Viya we'll be able to save a new generation of SASHDAT files to any NFS-supported network attached storage solution.

This seemingly minor detail has a big architectural impact - we can fully decouple Hadoop and LASR architecture roles! This helps contribute to the overall excitement around CAS and SAS Viya being elastic and robust as our future analytics platform.

--

Rob Collum is a Principal Technical Architect with the Global Architecture & Technology Enablement team in SAS Consulting. When he’s not trying to suss out if his data is transferring in parallel or not, he enjoys sampling coffee and m&m’s from around the SAS campus.
